If you're a developer using a PostgreSQL database for your resource-intensive AI and machine learning projects, you can now take advantage of Intel hardware acceleration using Intel® Advanced Vector Extensions 512 (Intel® AVX-512) to get a significant boost in processing efficiency without having to do any extensive code rewrites.

In this article, you'll learn how to take advantage of Intel's two recent upstream contributions to the open source PostgreSQL database. These contributions include a framework to include Intel AVX-512 support implicitly within PostgreSQL without the need to rewrite substantial portions of the code and a specific scenario of population count (i.e., popcount) optimized using Intel AVX-512 vectorization that resulted in 36% performance improvements to Postgres. bit_count().

Popcount Optimization and Performance Gains

The motivation behind these improvements is clear: as data volumes grow exponentially, the need for rapid query response times becomes increasingly critical. Relational databases play a key role here, as they store and provide access to critical data that businesses and enterprises use to draw insights and generate trends. PostgreSQL's reduced latency in SQL functions, thanks to Intel AVX-512, helps meet the stringent performance SLAs demanded by enterprise customers, especially when migrating databases to the cloud.

One specific optimization is the population count (popcount) function, which has seen substantial performance gains. Tested on the latest Intel® Xeon® processors in both on-premises and AWS environments, benchmarks have shown a 36% improvement in the bit_count() function, with further gains in vector database scenarios. These enhancements are crucial for developers working with AI/ML applications that require efficient vector database operations.

Seamless Integration for Enhanced Vector Operations

The integration of Intel AVX-512 into PostgreSQL allows developers to automatically utilize vectorization capabilities if the hardware supports it. This seamless enablement ensures that PostgreSQL can leverage the full potential of Intel AVX-512 for compute-bound workloads, particularly in vector operations that benefit from single instruction multiple data (SIMD) processing such as large-scale matrix calculations for machine learning algorithms and complex geospatial queries in mapping applications.

Intel’s contribution introduces Intel AVX-512 to the PostgreSQL ecosystem implicitly. This means that if a given machine running PostgreSQL supports Intel AVX-512 in the hardware, then PostgreSQL will automatically start using vectorization capabilities without needing any special compile or runtime flags.

Implicit enablement of Intel AVX-512 requires making a few specific checks to ensure all the hardware capabilities are available. These checks are implemented in PostgreSQL and must be performed on any system to leverage the correct instructions for a given hardware platform. Skipping these steps may result in an application crash with an illegal instruction on an unsupported system. The checks are runtime checks to ensure the instructions and 512-bit registers are available for the Intel AVX-512 algorithm. If these criteria are not met, then the older Intel® Streaming SIMD Extensions (SSE 4.1) algorithm is chosen as a replacement. This fallback avoids a potential security risk at runtime exposed by application crashes.

Why is this important? This change ensures that PostgreSQL performance improves in specific areas due to built-in Intel AVX-512 accelerator vectorization capabilities while ensuring that the other aspects of the database remain intact.

Technical Insights: Intel AVX-512 and Population Count

Under the hood, Intel AVX-512 enables PostgreSQL to process data in parallel using wider 512-bit registers, accelerating computations that would otherwise be executed serially. To achieve this, vector operations in CPUs support single instruction multiple data (SIMD). The benefit to developers using these instructions is the ability to speed up their databases by parallelizing a portion of computation. By using wider 512-bit registers and rewriting some portion of the code using SIMD intrinsics, databases can use these abstractions to efficiently use “data parallel” processing, which speeds up computation that would have been done serially.

Population Count’s Three-Stage Process

For the population count, the Intel AVX-512 optimization involves a three-stage process that aligns data to 512-bit boundaries ensuring efficient bit counting without frequent memory storage.

The population count of a specific memory block is defined as the number of set bits in the block. The efficient calculation of population count is a well-researched topic, with multiple implementations that exist in hardware and software. Before our optimization work, a software and hardware implementation using SSE 4.2 existed in PostgreSQL. These leverage some of the built-in intrinsic functions provided by compilers such as GCC and Visual Studio. Our work further pushes hardware optimization boundaries by introducing an Intel AVX-512 optimized version of population count implementation.

To calculate the population count, however, the buffer must be aligned to 512-bit (64-byte) boundaries. Since this is not guaranteed, we proposed an implementation that is executed in three stages:

1) Count the leading unaligned bytes

2) Loop over subsequent 64-byte buffer sizes counting bits

3) Count the remaining bits in the tail less than 64-bytes

The head and tail are accomplished by using masked loads from memory where the bits not in the buffer are copied as zeros into the 512-bit registers. This allows the algorithm to use and accumulate the population count in a 512-bit register without having to store results in memory until the end. Then, at the end, it reduces that 512-bit register down to a single 64-bit integer in memory containing the count of bits in the buffer.

Scenarios and Results

The section below shows the scenarios tested and the results observed on various hardware environments using popcount within PostgreSQL. This will give developers an idea of the level of speed-up they can expect when leveraging Intel AVX-512 hardware acceleration.

Benchmarking Environment – AWS and On-Prem

All the benchmarks below have been run on a host system (Ubuntu Linux OS (Operating System)) and two AWS EC2 virtual machines running the same Linux version. All are running modern Intel CPUs. This work demonstrates the performance gains due to Intel AVX-512 in on-prem and AWS environments.

Please note that the results observed on other enterprise or cloud environments may differ due to different SKUs by the provider.

Host System (no virtual machine):

- CPU(s): Two CPUs (224 vCPUs; Intel® Xeon® Platinum 8480+ codename Sapphire Rapids)

- Memory: 512GB DDR4

- Microcode: 0x2b0004d0

- Multi-Core Turbo: 3.0GHz

- OS: Ubuntu 22.04.4

- Kernel: 6.2.0-37-generic

- Storage: 1-500GB NVME

- Compiler: gcc 12.3

- Testing Date: January 2024

Cloud Systems (EC2 virtual machines):

- AWS m6i-2xlarge

- vCPUs: 8 (Intel® Xeon® Platinum 8375C; codename Ice Lake)

- Memory: 32GB

- Microcode: 0xd0003d1

- Multi-Core Turbo: 3.5GHz

- OS: Ubuntu 22.04.4

- Kernel: 6.5.0-1014-aws

- Storage: 128GB (Amazon Elastic Block Storage)

- Compiler: gcc 12.3

- Testing Date: January 2024

- AWS m7i-2xlarge

- vCPUs: 8 (Intel® Xeon® Platinum 8488C; codename Sapphire Rapids)

- Memory: 32GB

- Microcode: 0x2b0005d1

- Multi-Core Turbo: 3.2GHz

- OS: Ubuntu 22.04.4

- Kernel: 6.5.0-1017-aws

- Storage: 128GB (Amazon Elastic Block Storage)

- Compiler: gcc 12.3

- Testing Date: January 2024

We ran a special benchmark using the PostgreSQL bit_count() function (population count of a string or byte array).

SQL bit_count() Benchmark

This benchmark validates any benefits that would translate to users of PostgreSQL, who would leverage popcount functionality. This involved two steps:

-

Using the bit_count() SQL function, a performance test was created on a database with large column widths up to 16K bytes containing 1 million rows.

-

The code used in the popcount microbenchmark using Intel AVX-512 was implemented in PostgreSQL. Note that this required several other changes in the PostgreSQL build system, but we will focus on the algorithm performance within PostgreSQL for this discussion.

The performance of Intel AVX-512 and SSE algorithms were compared to measure improvements in this benchmark.

The results produced a noticeable improvement using a large-scale factor database, with an average of 37% increase in performance. The benefits were measured across various Intel systems, which support Intel AVX-512. The latest generation at the time of testing was 4th Gen Intel® Xeon®, which is available in AWS EC2 as m7i/c7i/r7i instance types. These 4th Gen platforms showed the best performance.

Another key observation is that this change did not regress performance on older systems (earlier than 3rd Gen Intel® Xeon®), which was a requirement to introduce this change in the PostgreSQL community. The PostgreSQL community has welcomed these advancements, leading to the acceptance of an Intel-contributed patch that incorporates the Intel AVX-512 improvements. This development is set to benefit customers running large-scale PostgreSQL deployments on AWS and other cloud platforms powered by Intel instances.

Performance Results

The following results were derived using the SQL bit_count() benchmark only on PostgreSQL 16 with and without the Intel AVX-512 improvements.

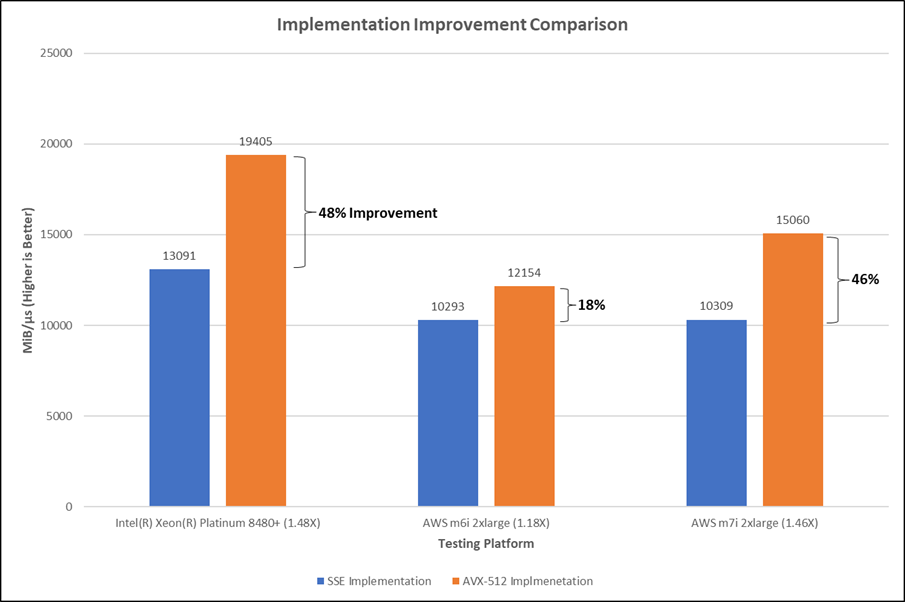

This is the average of measurements for sizes 64MiB-1GiB with random data and multiple iterations per configuration. The chart below represents complete averaging across:

-

EC2 m6i (3rd Gen Intel Xeon Scalable processor family – codenamed Ice Lake)

-

EC2 m7i (4th Gen Intel Xeon Scalable processor family)

-

4th Gen Intel® Xeon® Platinum 8480+

The numbers on the left side of the chart are in Mebibytes per microsecond (base 2^20, not 10^6). Higher is better.

Figure 1. PostgreSQL 16 bit_count() population count benchmark results

The chart highlights that 48% improvements were seen on on-prem and 18-46% on AWS cloud instances by using the Intel AVX-512 implementation of popcount. The baseline for these gains is the implementation using SSE. We omitted the generic software implementation from the comparison as it underperforms significantly and is not comparable. As a developer, these improvements to the algorithm can greatly enhance the performance of PostgreSQL on Intel platforms.

Next Steps

The introduction of Intel AVX-512 hardware acceleration into PostgreSQL represents a significant step forward for developers. It offers enhanced performance for specific functions like population count, enabling more efficient handling of large datasets and AI/ML workloads without compromising the stability and reliability of the database system.

We plan to benchmark more use cases that rely on popcount within PostgreSQL to highlight the benefits of popcount optimization within vector search. A recent example is the introduction of bit vector support within Pgvector (an extension that supports storing and searching vectors from natural language processing), starting with the 0.7.0 release, which uses Hamming and Jaccard distances. These methods rely on popcount in PostgreSQL. On a machine supporting Intel AVX-512, such workloads will run faster.

Further, we would like to extend the support of Intel AVX-512 to other scenarios within PostgreSQL, such as optimizing the performance of crc32c during writes, as an ongoing effort to benefit customers running large-scale PostgreSQL deployments on AWS and other clouds powered by Intel instances.

Learn more about how Intel can boost the performance of compute-intensive workloads.

About the Authors

Akash Shankaran, Lead Software Architect, Intel

Akash is a software architect and tech lead at Intel’s DCAI software team. His interests are in distributed systems, databases, and data management systems. He works on finding new opportunities to optimize PostgreSQL and enabling them in various ecosystems. You can find him on LinkedIn.

Paul Amonson, Senior Software Engineer, Intel

Paul is a senior software engineer currently working on adding optimized features leveraging Intel hardware into the OSS PostgreSQL database. You can find him on LinkedIn.

Diego Bailón Humpert, Global AWS Compute and Automotive GTM Lead, Intel

Diego leads the global AWS database go-to-market for Intel. He joined Intel in 2020 and has focused his work on optimizing database practices on AWS with Intel and facilitating database migrations to the AWS cloud. You can find him on LinkedIn.

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more on the Performance Index site.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software, or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.