Assessing security threats in open source projects can be overwhelming, but there's a tool that helps build trust quickly by measuring how projects adhere to certain best practices.

An Open Source Security Foundation project called OpenSSF Scorecard helps put some order and automation into the risk assessment process. In this episode, we chat with one of OpenSSF Scorecard’s contributors, Brian Russell of Google, and Ryan Ware, Director of Open Source Security at Intel, about the problems Scorecard addresses, and how it might help improve the experience of developers and consumers of open source software. We'll dive into the automated security checks, how to use the data, and how to include Scorecard in a workflow.

Katherine Druckman: I'm back with Ryan Ware, Intel's Director of Open Source Security, joined by Brian Russell from Google's Open Source Security Team. We're discussing the OpenSSF Scorecard project, a security assessment for open source projects. I'm particularly excited after watching Brian's presentation at the Southern California Linux Expo (SCaLE 20x), which is available on YouTube.

What’s OpenSSF Scorecard and why do we need it?

Brian Russell:

Before defining what it is, it's important to understand why we need it. The problem with a lot of open source software centers around trust. How do you trust the software that you're using? How do you know it's secure? What do you know about the governance and the maintainers who are making this project? Ideally, you could meet everyone who makes the open source projects you're using, but it's not something you can do when you're using hundreds to thousands to tens of thousands of different open source projects. So you're really trying to build up knowledge and understand where your software comes from – that's what spawned the OpenSSF Scorecard into a project.

OpenSSF Scorecard wants to help build trust quickly by measuring how different projects are adhering to certain best practices. So, there's no mysterious scoring or anything that's not well defined. Instead, we're looking for generally accepted best practices in open source software. Are there people who review code? If there are multiple contributors, does it require code review? Does this project have active maintainers? Is anybody still actually looking at it?

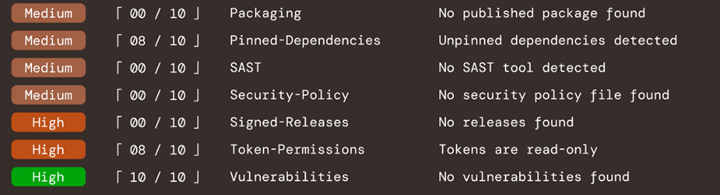

It looks at the last three months of activity. Are issues getting resolved? Are contributions being made? All of those things. There are around 15 parameters in the Scorecard today. We consolidate that information and make it available so if you're looking at a Scorecard, you can get a sense pretty quickly of what a project is doing and what best practices they're really adhering to.

Ryan Ware:

I always love it when some team comes and brings an open source project that they get from SourceForge that's been there and hasn't been updated since 2010.

Scorecard: Via OpenSSF

Katherine Druckman:

Brian, how did you get involved in the Scorecard project?

Brian Russell:

I've had different roles in software, but before I got involved in OpenSSF I was working in Google Cloud* as a product manager for vulnerability management systems that are part of cloud. I started working with the folks who were starting OpenSSF from the Google side, around three-and-a-half years ago. I thought the problems they were tackling were important from a variety of different roles, from open source maintainers to software users...

I was drawn in by the importance of the problem and the community aiming to solve these issues with trusting and securing open source software. It’s an enthusiastic, passionate community of people who aren’t just doing this from a pure compliance perspective. They genuinely care about doing what's best for the open source community, and that’s great for someone like me who loves open source. There are clearly a lot of problems to be solved in security, and if you're going to solve them, coming at it by keeping open source in a healthy state, to me, is really important.

Katherine Druckman:

What security concerns can be addressed? What are some specific problems that can be mitigated by using Scorecard?

Brian Russell:

Scorecard is unique in that it's not necessarily trying to solve a problem like sanitizing forms on a website where you're directly trying to close something that’s clearly dangerous. Instead, Scorecard centers around looking for best practices that would prevent bad things from going into a project.

Checking for the evidence of good things is a lot of what Scorecard is about. Are you going out and addressing vulnerabilities when they apply to your project? Have you published a security policy? We want secure projects, but we know there are a lot of unknowns that can come up in an open source attack scenario. Scorecard is looking at whether a project is being managed by a group who cares about security and doing things to make sure the project is being managed well.

There are a lot of scenarios where a second set of eyes caught an issue in a code review or someone quickly patched something when it was insecure. A lot of that happens if the best practices Scorecard checks for are employed. You're not going to be able to make the statement, at least anytime soon, of Scorecard definitively stopping attack X from happening. Instead, it's looking for how a project is being managed overall. Our hope and goal is to make that translate to more secure projects in the long term.

Ryan Ware:

So many of the security bugs out there are just bugs that somebody happens to be able to do something with. One of the ways to make a software project more secure is to just do all the things that help you eliminate bugs, and that's a lot of what Scorecard looks at: things that are best practices for removing bugs. That's one of the things that I find very interesting about Scorecard, and it's something that we looked at doing internally when trying to come up with a system to help make sure teams are doing the right things. I'm happy to see that there's an open source solution that we can participate in and help expand.

Katherine Druckman:

Can you pop the hood on Scorecard and explain how it works? It's a checklist of best practices, but how are they measured?

Brian Russell:

A checklist is only as good as the information coming into it. In Scorecard’s case, we try to make sure every check is something that you can do programmatically. None of the checks done by Scorecard involve people going in and self-certifying. Nobody's coming in and saying, “Yeah, we do X” where that answer may or may not be true...

If your project is on GitHub*, there’s a GitHub API call looking for specific settings on the project. It depends on what the check is, but if, for example, it’s code reviews, you can see if a project requires a review before changes get merged. GitHub's API can verify that. Or, if it’s checking for a published security policy, we check for a security.md file.
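As a rough illustration of what a programmatic check can look like, the sketch below inspects a local checkout for a security policy file. It is not Scorecard's actual implementation: the function name `has_security_policy` and the list of candidate directories are assumptions made for this example.

```python
import os
import tempfile

# Assumed candidate locations for a security policy file (illustrative only).
CANDIDATE_DIRS = ("", ".github", "docs")


def has_security_policy(repo_root: str) -> bool:
    """Return True if a security.md-style file exists in a common location."""
    for sub in CANDIDATE_DIRS:
        directory = os.path.join(repo_root, sub)
        if not os.path.isdir(directory):
            continue
        for name in os.listdir(directory):
            path = os.path.join(directory, name)
            # Compare case-insensitively so security.md and SECURITY.md both match.
            if name.lower() == "security.md" and os.path.isfile(path):
                return True
    return False


def demo() -> tuple:
    """Build two throwaway checkouts, one with a policy file and one without."""
    with tempfile.TemporaryDirectory() as with_policy, \
            tempfile.TemporaryDirectory() as without_policy:
        os.makedirs(os.path.join(with_policy, ".github"))
        policy_path = os.path.join(with_policy, ".github", "SECURITY.md")
        with open(policy_path, "w") as handle:
            handle.write("Please report vulnerabilities privately.\n")
        return has_security_policy(with_policy), has_security_policy(without_policy)


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is that the answer comes from inspecting the repository itself, so nobody has to self-certify.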

Behind each check is a combination of things, but it's really a set of API calls centered around GitHub. The project will eventually expand to everywhere that code lives, and we do have GitLab* support waiting in the wings. There are some folks actively working on that integration for the project.

You can also run checks if you're using a Git-based system; certain things don't necessarily need to rely on a GitHub API or a GitLab API to check... You can run it locally on a command line, and we've packaged it into a GitHub Action that you can run if you add it to your project.
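For readers who want to script the command-line route, here is a minimal sketch of a Python wrapper. It assumes the `scorecard` binary is installed and on the PATH, and that it accepts `--repo`, `--format` and `--checks` flags; confirm these against `scorecard --help` for your version. The helper names `build_scorecard_command` and `run_scorecard` are hypothetical, and checks that call the GitHub API will most likely also need a token configured in the environment.

```python
import json
import shutil
import subprocess


def build_scorecard_command(repo: str, checks=None) -> list:
    """Assemble the argument list for a scorecard run with JSON output."""
    command = ["scorecard", f"--repo={repo}", "--format=json"]
    if checks:
        # Assumed flag: restrict the run to a comma-separated list of checks.
        command.append("--checks=" + ",".join(checks))
    return command


def run_scorecard(repo: str, checks=None) -> dict:
    """Run the CLI and parse its JSON output; raises if the CLI is missing."""
    if shutil.which("scorecard") is None:
        raise RuntimeError("scorecard CLI not found on PATH")
    completed = subprocess.run(
        build_scorecard_command(repo, checks),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
    )
    return json.loads(completed.stdout)


if __name__ == "__main__":
    # Only prints the command; call run_scorecard() once the CLI is installed.
    print(build_scorecard_command("github.com/ossf/scorecard", ["Code-Review"]))
```

Keeping the command construction separate from the subprocess call makes the wrapper easy to test without the tool installed.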

As project maintainers, we also look at some of the top critical open source projects. We scan about a million and a half projects a week now, just looking at projects that have this information publicly available... We're scanning these different projects into one data source that you can then consume through an API or by querying the database, where it's stored as a BigQuery table.
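Consuming the published data through the API might look roughly like the sketch below. The base URL, the `/projects/{platform}/{org}/{repo}` path and the response fields (`checks`, `name`, `score`) reflect my understanding of the public Scorecard REST API and should be treated as assumptions to verify against the project's documentation. The helper names are hypothetical, and treating a negative score as "inconclusive" is also an assumption.

```python
import json
import urllib.request

# Assumed public endpoint for published Scorecard results.
API_BASE = "https://api.securityscorecards.dev"


def project_url(platform: str, org: str, repo: str) -> str:
    """Build the (assumed) results URL for one repository."""
    return f"{API_BASE}/projects/{platform}/{org}/{repo}"


def fetch_result(platform: str, org: str, repo: str, timeout: float = 10.0) -> dict:
    """Download and decode the JSON result for one repository (needs network)."""
    with urllib.request.urlopen(project_url(platform, org, repo), timeout=timeout) as resp:
        return json.load(resp)


def lowest_checks(result: dict, limit: int = 3) -> list:
    """Return up to `limit` (name, score) pairs with the lowest scores.

    Negative scores are skipped on the assumption that they mean the
    check could not reach a conclusion.
    """
    scored = [c for c in result.get("checks", []) if c.get("score", -1) >= 0]
    scored.sort(key=lambda check: check["score"])
    return [(check["name"], check["score"]) for check in scored[:limit]]


if __name__ == "__main__":
    result = fetch_result("github.com", "ossf", "scorecard")
    for name, score in lowest_checks(result):
        print(f"{name}: {score}")
```

Sorting by the weakest checks first is one way to turn the raw data into a short list of things to ask a project about before adopting it.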

It runs like a command line tool, but it's wrapped in a few different forms, and the data generated is publicly available. If you're thinking about the limits of the tool, what it can and can't do: if you can't check for something repeatedly in a programmatic way right now, that would fall outside the scope of Scorecard. But if there's something that we as a project would agree on that would make a great additional check, we could definitely keep expanding to additional best practices.

For more of this conversation and others, subscribe to the Open at Intel podcast:

- Openatintel.podbean.com

- Google Podcasts

- Apple Podcasts

- Spotify

- Amazon Music

- Or your favorite podcast player (RSS).

About the Author

Katherine Druckman, an Intel Open Source Evangelist, is a host of podcasts Open at Intel, Reality 2.0 and FLOSS Weekly. A security and privacy advocate, software engineer, and former digital director of Linux Journal, she's a long-time champion of open source and open standards.