What is it?

This article describes how to run the American Sign Language Recognition demo found in the Intel® Distribution of OpenVINO™ toolkit.

Requirements

Skills

Familiarity with GNU/Linux* commands

Hardware

UP* Xtreme* board with an Intel® Core™ i7-8665UE processor (1.70 GHz, 4 cores)

System, software and more

| Component | Details |
|---|---|
| Distro | Ubuntu 18.04.3 LTS (Bionic Beaver) |
| Kernel | Linux* kernel 5.0.0-1 |
| Toolkit | Intel® Distribution of OpenVINO™ toolkit |
| Model | asl-recognition-0003, person-detection-asl-0001 |
| Depends | scipy, opencv-python |

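Install the Python 3 versions of the supporting packages, since the demo runs under python3:

sudo apt-get install python3-numpy python3-scipy python3-matplotlib ipython3 python3-pandas python3-sympy python3-nose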
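The demo runs under python3, so it helps to confirm that this interpreter can actually see the required packages. The helper below is a hypothetical check and is not part of the demo. It asks importlib whether each module can be found. cv2 is the import name of the opencv-python package, and the module list is an assumption you can adjust.

```python
import importlib.util
from typing import Dict, Iterable

# Import names to check. "cv2" is the module installed by the opencv-python package.
# This list is an assumption based on the Depends row; adjust it as needed.
REQUIRED_MODULES = ("numpy", "scipy", "cv2")


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Return {module_name: True if this interpreter can import it}."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules(REQUIRED_MODULES).items():
        print(f"{name:8} {'OK' if found else 'MISSING'}")
```

Run it with python3 so that it checks the same interpreter the demo uses.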

Original Demo

The American Sign Language Recognition demo ships with the Intel® Distribution of OpenVINO™ toolkit as a Python demo.

Demo location

The demo is located in the following directory:

/opt/intel/openvino/inference_engine/demos/python_demos/asl_recognition_demo

This demo uses two models: a person detector (person-detection-asl-0001) and an ASL gesture recognizer (asl-recognition-0003).

Input

The input video is typically the webcam input or a video file.

- -i, --input: path to a video file, or a camera device index such as 0
- -c, --class_map: path to the JSON file with the class names (classes.json)
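The options above can be mirrored with argparse. This is a hypothetical sketch built only from the flags used in this article (-m_a, -m_d, -i/--input, -c/--class_map). The demo defines its own parser, which may accept more options or use different long names. The sketch also shows one common convention: an all-digit input such as 0 is treated as a camera index, and anything else is treated as a file path.

```python
import argparse
from typing import List, Optional, Union


def build_parser() -> argparse.ArgumentParser:
    # Only the flags used in this article; the real demo defines its own parser.
    parser = argparse.ArgumentParser(description="ASL recognition demo options (sketch)")
    parser.add_argument("-m_a", required=True, help="ASL recognition model (.xml)")
    parser.add_argument("-m_d", required=True, help="person detection model (.xml)")
    parser.add_argument("-i", "--input", required=True, help="video file path or camera index, e.g. 0")
    parser.add_argument("-c", "--class_map", required=True, help="JSON file with the class names")
    return parser


def resolve_source(value: str) -> Union[int, str]:
    """Treat an all-digit value as a camera index, anything else as a file path."""
    return int(value) if value.isdigit() else value


def parse(argv: Optional[List[str]] = None) -> argparse.Namespace:
    args = build_parser().parse_args(argv)
    args.input = resolve_source(args.input)
    return args
```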

Workflow

The demo reads video frames, runs the person detector, and extracts the region of interest (ROI) around the detected person. The frames and the extracted ROI are passed to the ASL recognition network, which predicts the ASL gesture.
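The workflow can be sketched in plain Python. This sketch rests on several assumptions:

- The detector and recognizer are passed in as hypothetical callables that stand in for the two OpenVINO models.
- Frames are nested lists rather than images.
- The 16-frame clip length is a placeholder. Check the input shape of asl-recognition-0003 for the real value.

The sketch also simplifies the demo in two ways. It feeds only the cropped ROIs to the recognizer, and it reuses the last ROI whenever the detector misses a frame.

```python
from collections import deque
from typing import Callable, Deque, List, Optional, Sequence, Tuple

Frame = List[List[int]]          # stand-in for an image: rows of pixel values
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def crop(frame: Frame, box: Box) -> Frame:
    """Cut the ROI out of a frame."""
    x_min, y_min, x_max, y_max = box
    return [row[x_min:x_max] for row in frame[y_min:y_max]]


def run_pipeline(
    frames: Sequence[Frame],
    detect_person: Callable[[Frame], List[Box]],  # hypothetical detector stand-in
    recognize: Callable[[List[Frame]], str],      # hypothetical recognizer stand-in
    clip_len: int = 16,  # assumed clip length; check the model's input shape
) -> List[str]:
    """Detect a person, crop the ROI, buffer crops, and classify each full clip."""
    clip: Deque[Frame] = deque(maxlen=clip_len)  # keeps only the latest clip_len crops
    roi: Optional[Box] = None
    predictions: List[str] = []
    for frame in frames:
        boxes = detect_person(frame)
        if boxes:                  # keep the previous ROI if detection misses a frame
            roi = boxes[0]
        if roi is None:
            continue               # nobody detected yet
        clip.append(crop(frame, roi))
        if len(clip) == clip_len:  # classify once the sliding window is full
            predictions.append(recognize(list(clip)))
    return predictions
```

A sliding window means that, once the buffer is full, every new frame produces a prediction over the most recent clip_len crops.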

The visual output shows the input frame with the detected ROI and the last recognized ASL gesture.

Step 1. Download the models

The model downloader is located in the deployment_tools/tools/model_downloader directory. Download the person-detection-asl and asl-recognition models.

Change directory

cd /opt/intel/openvino/deployment_tools/tools/model_downloader

As root, run the downloader script to download the asl-rec* models.

python3 downloader.py --name 'asl-rec*'

Download the person-detection-asl models.

python3 downloader.py --name 'person-detection-asl*'

The downloaded models are typically saved to a path similar to the following:

/opt/intel/openvinoVersion/deployment_tools/open_model_zoo/tools/downloader/intel/nameofmodel/FP*/

The downloader prints the location of each model during the download.

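For the purposes of this demo, the models were moved to the /opt/intel/openvino/inference_engine/demos/python_demos/asl_recognition_demo directory. Move both the .xml file and the .bin file of each model, because the IR format needs both.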
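An OpenVINO IR model is a pair of files: an .xml topology file and a .bin weights file. Both must be moved together. The helper below is a hypothetical sketch that copies one model's pair from the download location into the demo directory. It assumes the intel/nameofmodel/FP*/ layout shown above. You choose the precision folder name (for example, FP16) from the folders the downloader created.

```python
import shutil
from pathlib import Path
from typing import List


def copy_ir(download_root: Path, model_name: str, precision: str, dest: Path) -> List[Path]:
    """Copy <model_name>.xml and <model_name>.bin for one precision into dest (hypothetical helper)."""
    # Assumed layout, from the download path above: <root>/intel/<model>/<precision>/<model>.xml
    src_dir = download_root / "intel" / model_name / precision
    copied: List[Path] = []
    for suffix in (".xml", ".bin"):  # an IR model needs both files
        src = src_dir / f"{model_name}{suffix}"
        if not src.is_file():
            raise FileNotFoundError(src)
        copied.append(Path(shutil.copy2(src, dest / src.name)))
    return copied
```

For example, call copy_ir(Path(root), "asl-recognition-0003", "FP16", Path(demo_dir)). Here root and demo_dir are the actual paths on your system, and dest must already exist.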

Step 2. Video input

No open-source sample video is currently available for this demo. The input can be the webcam or a video file. Use the word list under Next Steps: Customize to see which signs the model can recognize.

The sample used for this demo is the sign for "friend", captured with the camera input (-i 0).

Step 3. Initialize the environment

. /opt/intel/openvino/bin/setupvars.sh
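Sourcing setupvars.sh affects only the current shell, so the demo must be started from that same shell. A hypothetical check like the one below can confirm this. It assumes that the script exports INTEL_OPENVINO_DIR and puts the openvino Python package on PYTHONPATH, as recent releases do. If your release uses different names, adjust them.

```python
import importlib.util
import os
from typing import Mapping, Optional


def openvino_root(environ: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the install dir exported by setupvars.sh, or None if it was not sourced."""
    # Assumed variable name; older releases may use a different one.
    return environ.get("INTEL_OPENVINO_DIR") or None


def openvino_importable() -> bool:
    """True if the openvino Python package is on this interpreter's path."""
    return importlib.util.find_spec("openvino") is not None


if __name__ == "__main__":
    print("INTEL_OPENVINO_DIR:", openvino_root() or "not set (source setupvars.sh first)")
    print("openvino importable:", openvino_importable())
```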

Step 4. Change Directory

cd /opt/intel/openvino/inference_engine/demos/python_demos/asl_recognition_demo

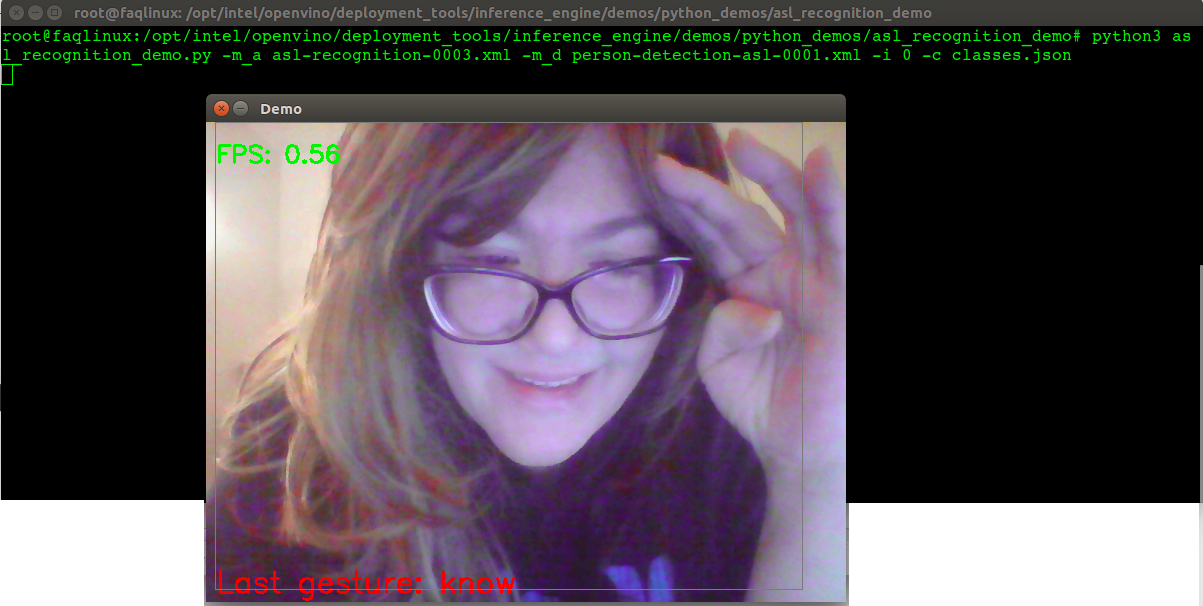

Step 5. Run Demo

After moving the models to the demo directory, the command looks similar to the following:

python3 asl_recognition_demo.py -m_a asl-recognition-0003.xml -m_d person-detection-asl-0001.xml -i asl4.mp4 -c classes.json
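To launch the same command from a script, for example to switch quickly between a video file and the camera, you can build the argument list in Python and pass it to subprocess. This is a hypothetical wrapper. The default file names are the ones used in this article. It assumes it runs from the demo directory, in a shell where setupvars.sh has been sourced, so the child process inherits that environment.

```python
import subprocess
import sys
from typing import List, Union


def build_command(source: Union[int, str],
                  action_model: str = "asl-recognition-0003.xml",
                  detection_model: str = "person-detection-asl-0001.xml",
                  class_map: str = "classes.json") -> List[str]:
    """Assemble the demo command line used in this article."""
    return [sys.executable, "asl_recognition_demo.py",
            "-m_a", action_model,
            "-m_d", detection_model,
            "-i", str(source),  # a file path, or a camera index such as 0
            "-c", class_map]


def run_demo(source: Union[int, str]) -> subprocess.CompletedProcess:
    """Run the demo and raise CalledProcessError if it exits with an error."""
    return subprocess.run(build_command(source), check=True)
```

For example, run_demo("asl4.mp4") processes the video file, and run_demo(0) uses the camera.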

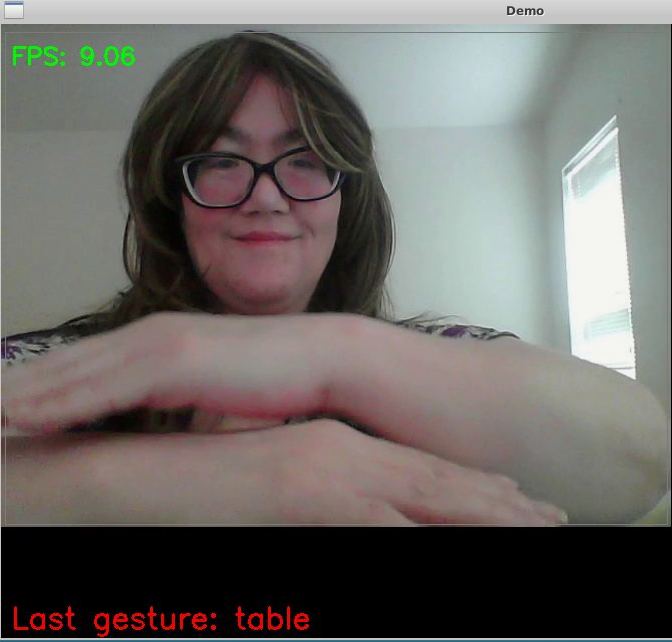

Results

Frames per second will vary depending on hardware and input.
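To compare runs across hardware or inputs, FPS is often measured over a sliding window of recent frames rather than from the first frame. The class below is a hypothetical sketch of that idea. It is not the counter the demo displays. Call tick() once per processed frame.

```python
import time
from collections import deque
from typing import Deque, Optional


class FpsMeter:
    """Frames per second over a sliding window of recent frame timestamps."""

    def __init__(self, window: int = 30) -> None:
        self._stamps: Deque[float] = deque(maxlen=window)  # oldest stamps drop off automatically

    def tick(self, now: Optional[float] = None) -> None:
        """Record one processed frame; pass `now` only to supply your own clock."""
        self._stamps.append(time.perf_counter() if now is None else now)

    def fps(self) -> float:
        """Frames per second across the window, or 0.0 until two frames are recorded."""
        if len(self._stamps) < 2:
            return 0.0
        elapsed = self._stamps[-1] - self._stamps[0]
        return (len(self._stamps) - 1) / elapsed if elapsed > 0 else 0.0
```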

Next Steps: Customize

The words listed below are included in the JSON file classes.json. Try some of the following signs with the American Sign Language (ASL) demo.

| Word | Word | Word |
|---|---|---|
| hello | nice | teacher |
| eat | no | happy |
| like | orange | want |
| deaf | school | sister |
| finish | white | bird |
| what | tired | friend |
| sit | mother | yes |
| student | learn | spring |
| good | fish | again |
| sad | table | need |
| where | father | milk |
| cousin | brother | paper |
| forget | nothing | book |
| girl | fine | black |
| boy | lost | family |
| hearing | bored | please |
| water | computer | help |
| doctor | yellow | write |
| hungry | but | drink |
| bathroom | man | how |
| understand | red | beautiful |
| sick | blue | green |
| english | name | you |
| who | same | nurse |
| day | now | brown |
| thanks | hurt | here |
| grandmother | pencil | walk |
| bad | read | when |
| dance | play | sign |
| go | big | sorry |
| work | draw | grandfather |
| woman | right | france |
| pink | know | live |
| night | | |
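To check whether a sign is in the list, you can load classes.json directly. The loader below is a hypothetical sketch. It assumes the file holds either a JSON list of words ordered by class id or a JSON object keyed by class id, so inspect your copy of the file first.

```python
import json
from pathlib import Path
from typing import Dict, Union


def load_class_map(path: Union[str, Path]) -> Dict[int, str]:
    """Return {class_id: word} from a class map JSON file (layout is assumed)."""
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    if isinstance(data, list):  # assumed layout: ["hello", "nice", ...], position = class id
        return {i: str(word) for i, word in enumerate(data)}
    if isinstance(data, dict):  # alternative layout: {"0": "hello", "1": "nice", ...}
        return {int(key): str(word) for key, word in data.items()}
    raise ValueError(f"unexpected class map format in {path}")


def label_for(class_id: int, class_map: Dict[int, str]) -> str:
    """Word for a predicted class id, or "unknown" if the id is not in the map."""
    return class_map.get(class_id, "unknown")
```

For example, "friend" in load_class_map("classes.json").values() tells you whether the model was trained on that sign.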

Command Roll

```shell
# Location of the demo:
# /opt/intel/openvino/inference_engine/demos/python_demos/asl_recognition_demo
#
# Input: the webcam or a video file.
#   -i, --input      path to a video file, or a camera device index
#   -c, --class_map  JSON file with the class names

# Change to the model downloader directory
cd /opt/intel/openvino/deployment_tools/tools/model_downloader

# As root, download the asl-rec* models (quote the pattern so the shell does not expand it)
python3 downloader.py --name 'asl-rec*'

# Download the person-detection-asl models
python3 downloader.py --name 'person-detection-asl*'

# The models are typically saved to a path similar to:
# /opt/intel/openvinoVersion/deployment_tools/open_model_zoo/tools/downloader/intel/nameofmodel/FP*/

# Initialize the environment
. /opt/intel/openvino/bin/setupvars.sh

# Change to the demo directory
cd /opt/intel/openvino/inference_engine/demos/python_demos/asl_recognition_demo

# Run the demo on an mp4 file (after moving the models to the demo directory)
python3 asl_recognition_demo.py -m_a asl-recognition-0003.xml -m_d person-detection-asl-0001.xml -i asl4.mp4 -c classes.json

# The sample used for this demo is "friend" using the camera input (-i 0)
python3 asl_recognition_demo.py -m_a asl-recognition-0003.xml -m_d person-detection-asl-0001.xml -i 0 -c classes.json
```