Purpose

This recipe describes how to get, build, and run the GROMACS* code on Intel® Xeon® Gold and Intel® Xeon Phi™ processors for better performance on a single node.

Introduction

GROMACS is a versatile package for performing molecular dynamics, using Newtonian equations of motion, for systems with hundreds to millions of particles. GROMACS is primarily designed for biochemical molecules like proteins, lipids, and nucleic acids that have a multitude of complicated bonded interactions. However, because GROMACS is extremely fast at calculating the non-bonded interactions that typically dominate simulations, many researchers also use it for non-biological systems, such as polymers.

GROMACS supports all the usual algorithms expected from a modern molecular dynamics implementation.

The GROMACS code is maintained by developers around the world. The code is available under the GNU General Public License from www.gromacs.org.

Code Access

Download GROMACS:

Get the GROMACS-2016.4 release or later. This code version includes optimization for better performance on Intel® Xeon® and Intel® Xeon Phi™ processors: http://manual.gromacs.org/documentation/2016.4/download.html

Build Directions

Build the GROMACS binary using a CMake configuration for the Intel compiler, Intel® Math Kernel Library (Intel® MKL), and Intel® MPI Library:

Set the Intel Xeon Phi processor BIOS options to be:

- Quadrant Cluster mode

- MCDRAM Flat mode

- Turbo Enabled

- Set environment for compilation:

mkdir "${GromacsPath}/build" # Create the installation directory

installDir="${GromacsPath}/build"

source /opt/intel/<version>/bin/compilervars.sh intel64 # Source the Intel compiler, Intel MKL and Intel MPI Library

source /opt/intel/impi/<version>/mpivars.sh

source /opt/intel/mkl/<version>/mklvars.sh intel64

cd "${GromacsPath}/src"

- Modify the configuration for each platform:

# Xeon AVX2 CONFIGURATION: FLAGS="-xCORE-AVX2 " and -DGMX_SIMD=AVX2_256

# Xeon AVX512 CONFIGURATION: FLAGS="-xCORE-AVX512" and -DGMX_SIMD=AVX_512

# Xeon Phi AVX512 CONFIGURATION: FLAGS="-xMIC-AVX512 -g -static-intel" and -DGMX_SIMD=AVX_512_KNL

FLAGS="-xMIC-AVX512 -g -static-intel"; CFLAGS=$FLAGS CXXFLAGS=$FLAGS CC=mpiicc CXX=mpiicpc cmake .. -DBUILD_SHARED_LIBS=OFF -DGMX_FFT_LIBRARY=mkl -DCMAKE_INSTALL_PREFIX=$installDir -DGMX_MPI=ON -DGMX_OPENMP=ON -DGMX_CYCLE_SUBCOUNTERS=ON -DGMX_GPU=OFF -DGMX_BUILD_HELP=OFF -DGMX_HWLOC=OFF -DGMX_SIMD=AVX_512_KNL -DGMX_OPENMP_MAX_THREADS=256

- Compile GROMACS:

make -j 4

sleep 5

make check
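The three per-platform configurations listed above differ only in the compiler flags and the GMX_SIMD value. As a minimal sketch, the selection could be wrapped in a small shell helper. The platform labels (xeon-avx2, xeon-avx512, xeon-phi) and the function name select_platform are hypothetical and not part of GROMACS or the Intel tools. The AVX-512 value is written AVX_512, the spelling the GROMACS 2016 build system accepts.

```shell
#!/bin/sh
# Hypothetical helper: map a platform label to the FLAGS and GMX_SIMD values
# listed in the recipe. The labels and the function name are made up here.
select_platform() {
    case "$1" in
        xeon-avx2)   FLAGS="-xCORE-AVX2";                   GMX_SIMD="AVX2_256" ;;
        xeon-avx512) FLAGS="-xCORE-AVX512";                 GMX_SIMD="AVX_512" ;;
        xeon-phi)    FLAGS="-xMIC-AVX512 -g -static-intel"; GMX_SIMD="AVX_512_KNL" ;;
        *)           echo "unknown platform: $1" >&2; return 1 ;;
    esac
}

# Show what would be passed to the compiler and to cmake for one platform.
select_platform xeon-phi
echo "FLAGS=$FLAGS"
echo "cmake option: -DGMX_SIMD=$GMX_SIMD"
```

After a call such as `select_platform xeon-avx512`, the two variables can be substituted into the cmake line of the recipe. The recipe lists no other per-platform differences, so the remaining options are assumed to stay the same.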

Generate Water Workloads Input Files:

To generate the .tpr input file:

tar xf water_GMX50_bare.tar.gz

cd water-cut1.0_GMX50_bare/1536

${installDir}/bin/gmx_mpi grompp -f pme.mdp -c conf.gro -p topol.top -o topol_pme.tpr

${installDir}/bin/gmx_mpi grompp -f rf.mdp -c conf.gro -p topol.top -o topol_rf.tpr
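The two grompp invocations differ only in the electrostatics variant (pme or rf) that appears in the .mdp and .tpr file names. The sketch below builds both command lines in a loop and prints them rather than running them, so they can be checked first. The GMX variable and the grompp_command function are hypothetical names introduced for this example; GMX is assumed to point at the gmx_mpi binary built earlier.

```shell
#!/bin/sh
# Assumption: GMX names the gmx_mpi binary; override it with the full path
# to the binary from the build step if it is not on PATH.
GMX="${GMX:-gmx_mpi}"

# Hypothetical helper: print the grompp command for one variant.
# $1 is the variant used in the file names: pme or rf.
grompp_command() {
    echo "$GMX grompp -f $1.mdp -c conf.gro -p topol.top -o topol_$1.tpr"
}

# Print (not run) the command for each water workload variant.
for variant in pme rf; do
    grompp_command "$variant"
done
```

To execute the commands instead of printing them, the output of grompp_command could be passed to `sh -c` from inside the water-cut1.0_GMX50_bare/1536 directory.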

Run Directions

Run workloads on the Intel® Xeon Phi™ processor with the following environment settings and command lines. The machine file nodes.txt contains the single line localhost:272.

export I_MPI_DEBUG=5

export I_MPI_FABRICS=shm

export I_MPI_PIN_MODE=lib

export KMP_AFFINITY=verbose,compact,1

gmxBin="${installDir}/bin/gmx_mpi"

mpiexec.hydra -genvall -machinefile ./nodes.txt -np 66 numactl -m 1 $gmxBin mdrun -npme 0 -notunepme -ntomp 4 -dlb yes -v -nsteps 4000 -resethway -noconfout -pin on -s ${WorkloadPath}water-cut1.0_GMX50_bare/1536/topol_pme.tpr

export KMP_BLOCKTIME=0

mpiexec.hydra -genvall -machinefile ./nodes.txt -np 66 numactl -m 1 $gmxBin mdrun -ntomp 4 -dlb yes -v -nsteps 1000 -resethway -noconfout -pin on -s ${WorkloadPath}lignocellulose-rf.BGQ.tpr

mpiexec.hydra -genvall -machinefile ./nodes.txt -np 64 numactl -m 1 $gmxBin mdrun -ntomp 4 -dlb yes -v -nsteps 5000 -resethway -noconfout -pin on -s ${WorkloadPath}water-cut1.0_GMX50_bare/1536/topol_rf.tpr
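Each command above starts -np MPI ranks with -ntomp 4 OpenMP threads per rank, so the number of hardware threads in use is the product of the two. The sketch below checks that product against the 272 hardware threads of the Intel Xeon Phi processor 7250 used for testing (68 cores with 4 threads each). The function name threads_used is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: hardware threads occupied by a rank/thread layout.
# $1 = MPI ranks (-np), $2 = OpenMP threads per rank (-ntomp)
threads_used() {
    echo $(( $1 * $2 ))
}

LOGICAL_CPUS=272   # 68 cores x 4 hardware threads, as in nodes.txt

# The recipe uses 66 ranks for two workloads and 64 ranks for the third.
for ranks in 66 64; do
    used=$(threads_used "$ranks" 4)
    echo "np=$ranks ntomp=4 -> $used of $LOGICAL_CPUS hardware threads"
done
```

With these values the layouts occupy 264 and 256 of the 272 hardware threads, so neither run oversubscribes the node.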

Run workloads on the Intel Xeon processor E5-2697 v4 and the Intel Xeon Gold 6148 processor with the following environment settings and command lines:

export I_MPI_DEBUG=5

export I_MPI_FABRICS=shm

export I_MPI_PIN_MODE=lib

export KMP_AFFINITY=verbose,compact,1

gmxBin="${installDir}/bin/gmx_mpi"

mpiexec.hydra -genvall -machinefile ./nodes.txt -np $NPE $gmxBin mdrun -notunepme -ntomp 1 -dlb yes -v -nsteps 4000 -resethway -noconfout -s ${WorkloadPath}water-cut1.0_GMX50_bare/1536/topol_pme.tpr

export KMP_BLOCKTIME=0

mpiexec.hydra -genvall -machinefile ./nodes.txt -np $NPE $gmxBin mdrun -ntomp 1 -dlb yes -v -nsteps 1000 -resethway -noconfout -s ${WorkloadPath}lignocellulose-rf.BGQ.tpr

mpiexec.hydra -genvall -machinefile ./nodes.txt -np $NPE $gmxBin mdrun -ntomp 1 -dlb yes -v -nsteps 5000 -resethway -noconfout -s ${WorkloadPath}water-cut1.0_GMX50_bare/1536/topol_rf.tpr

Here NPE is the number of logical cores: NPE=72 for the Intel Xeon processor E5-2697 v4 and NPE=80 for the Intel Xeon Gold 6148 processor.
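Rather than hard-coding NPE, it could be taken from the operating system. The sketch below keeps an NPE value that is already set and otherwise falls back to the number of online logical CPUs. The function name detect_npe is hypothetical. The result matches the values above only if Hyper-Threading is enabled and all logical CPUs are online, which is an assumption about the machine rather than something this recipe guarantees.

```shell
#!/bin/sh
# Hypothetical helper: use NPE if already set, else count online logical CPUs.
detect_npe() {
    if [ -n "${NPE:-}" ]; then
        echo "$NPE"
    else
        # getconf reports online logical CPUs; nproc is a fallback.
        getconf _NPROCESSORS_ONLN 2>/dev/null || nproc
    fi
}

NPE=$(detect_npe)
echo "NPE=$NPE"
```

The resulting value can then be passed to mpiexec.hydra as -np $NPE, as in the command lines above.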

Performance Testing

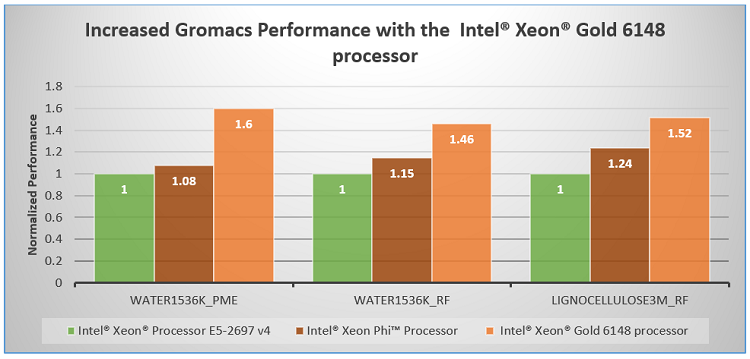

The figure in this section compares GROMACS performance on an Intel Xeon processor E5-2697 v4, an Intel® Xeon Phi™ processor, and an Intel Xeon Gold 6148 processor for three standard workloads: water1536k_pme, water1536k_rf, and lignocellulose3M_rf. In all cases, turbo mode is turned on.

Testing Platform Configurations

The following hardware was used for the above recipe and performance testing.

| Processor | Intel® Xeon® Processor E5-2697 v4 | Intel® Xeon Phi™ Processor 7250 | Intel® Xeon® Gold 6148 Processor |
|---|---|---|---|
| Stepping | 1 (B0) | 1 (B0) Bin1 | 4 (B0) Bin1 |
| Sockets / TDP | 2S / 290W | 1S / 215W | 2S / 150W |
| Frequency / Cores / Threads | 2.3 GHz / 36 / 72 | 1.4 GHz / 68 / 272 | 2.4 GHz / 40 / 80 |
| DDR4 | 8x16 GB 2400 MHz (128 GB) | 6x16 GB 2400 MHz | 2x16 GB 2666 MHz (192 GB) |
| MCDRAM | N/A | 16 GB Flat | N/A |
| Cluster/Snoop Mode/Mem Mode | Home | Quadrant/flat | Home |
| Turbo | On | On | On |
| BIOS | GRRFSDP1.86B.0271.R00.1510301446 | GVPRCRB1.86B.0011.R04.1610130403 | 86B.01.00.0412 |
| Compiler | ICC-2017.1.132 | ICC-2017.1.132 | ICC-2018.1.163 |
| Operating System | Red Hat Enterprise Linux* 7.2 3.10.0-327.el7.x86_64 | Red Hat Enterprise Linux 7.2 3.10.0-327.13.1.el7.xppsl_1.3.3.151.x86_64 | Red Hat Enterprise Linux 7.3 3.10.0-514.el7.x86_64 |

GROMACS Build Configurations

| GROMACS Version | GROMACS-2016.4 |
|---|---|
| Intel Compiler Version | 2018.1.163 |
| Intel® MPI Library Version | 2018.1.163 |
| Workloads used | water1536k_pme, water1536k_rf, and lignocellulose3M_rf |