Fine-tuning and deploying large language models (LLMs) with billions of parameters requires significant memory and computational resources. To reduce these demands, we created a two-part solution. The first part of this solution was performed using the Llama 2 7B LLM developed by Meta* on an Intel® Gaudi® 2 AI accelerator.

This article shows how to further optimize the inference component of the Llama 2 7B model using the OpenVINO™ toolkit to accelerate text generation on high-throughput Intel® Arc™ GPUs, which are built into Intel® Core™ Ultra processors. Because the GPU is integrated directly into the system-on-a-chip (SoC), it delivers high-performance processing at low power. The integrated GPU in the AI PC features eight Xe-cores and 128 vector engines, and it supports Microsoft DirectX* 12 and Intel® Xe Super Sampling (XeSS) AI-based upscaling.

To streamline and enhance the deployment of the Llama 2 7B LLM on the AI PC, we use the OpenVINO™ toolkit optimizations that are available as part of the Hugging Face* Optimum ecosystem.

Prerequisites

Before running this application, do the following:

- Ensure your AI PC meets the OpenVINO toolkit system requirements.

- Install the dependencies in the requirements.txt file in the source repository.

Once you have installed the required packages, you are ready to optimize the 7-billion-parameter Llama 2 LLM for deployment on your AI PC.

This solution uses the model that was fine-tuned and uploaded to Hugging Face on the FunDialogues community page.

The model, with approximately 7 billion parameters, is just under 27 GB. To reduce its memory footprint, we apply a compression technique using the OpenVINO toolkit to quantize the model weights to int8 precision.
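The size figure above follows from simple arithmetic on the parameter count. The sketch below assumes roughly 6.74 billion parameters for Llama 2 7B and 4 bytes per weight at 32-bit floating point; both values are assumptions for illustration, and the helper name `model_size_gb` is hypothetical.

```python
def model_size_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption: Llama 2 7B has roughly 6.74 billion parameters.
NUM_PARAMS = 6.74e9

# Compare storage at common weight precisions.
for name, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
    print(f"{name}: {model_size_gb(NUM_PARAMS, nbytes):.1f} GB")
```

This estimate counts weights only. A real int8 model also stores quantization scales, so its file size will be somewhat larger than the int8 figure printed here.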

The optimized libraries from the OpenVINO toolkit can be imported from the Optimum for Intel library as shown in the following code snippet. This library serves as the interface between the Hugging Face Transformers and Diffusers libraries and the OpenVINO toolkit optimizations.

from optimum.intel import OVModelForCausalLM

from transformers import AutoTokenizer, pipeline

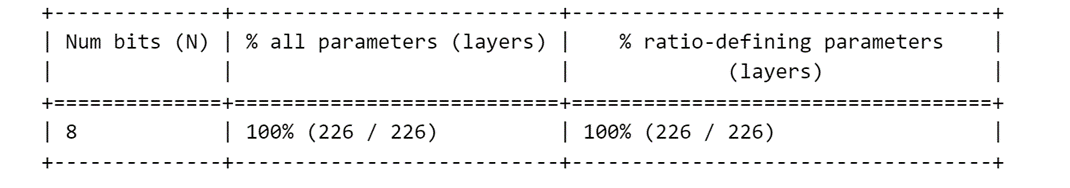

Next, the model is loaded from Hugging Face and exported to the OpenVINO toolkit intermediate representation (IR) format with optimizations for performing inference on Intel Arc GPUs. When exporting the model, the model weights are quantized to int8 precision, which is an 8-bit weight-only quantization provided by the Neural Network Compression Framework (NNCF). This method compresses weights to an 8-bit integer datatype, which balances model size reduction and accuracy, making it a versatile option for a broad range of applications.

model_id = "FunDialogues/llamav2-LoRaco-7b-merged"

model = OVModelForCausalLM.from_pretrained(model_id, export=True, load_in_8bit=True)
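To make the idea of 8-bit weight-only quantization concrete, here is a minimal pure-Python sketch of symmetric per-row int8 quantization. It is a simplified illustration, not the algorithm NNCF actually applies, and the function names `quantize_row` and `dequantize_row` are hypothetical.

```python
def quantize_row(weights):
    """Map one row of float weights to int8 values plus a single float scale."""
    max_abs = max(abs(w) for w in weights)
    # One scale per row; fall back to 1.0 for an all-zero row.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize_row(quantized, scale):
    """Recover approximate float weights at inference time."""
    return [q * scale for q in quantized]


row = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_row(row)
restored = dequantize_row(q, scale)
max_error = max(abs(a - b) for a, b in zip(row, restored))
print(q, scale, max_error)
```

Only the weights are stored as integers; activations stay in floating point. The rounding error per weight is bounded by half the scale, which is why this kind of compression usually costs little accuracy.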

Once the model has been exported and quantized, the following output appears:

After the model is exported to the OpenVINO toolkit IR format, the tokenizer is created and the GPU is selected as the target device for model compilation.

tokenizer = AutoTokenizer.from_pretrained(model_id)

model.to("gpu")
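If the same script may run on machines without a supported GPU, the target device can be chosen at run time. The sketch below is one possible approach under stated assumptions: `pick_device` and `list_openvino_devices` are hypothetical helpers, and the device query relies on the `available_devices` property of `openvino.Core`. When the `openvino` package is not installed, the sketch falls back to `"CPU"`.

```python
def pick_device(available, preferred=("GPU", "CPU")):
    """Return the first device in `available` that matches a preferred type."""
    for want in preferred:
        for name in available:
            # OpenVINO reports names such as "GPU", "GPU.0", or "GPU.1".
            if name == want or name.startswith(want + "."):
                return name
    raise RuntimeError(f"None of {preferred} found in {available}")


def list_openvino_devices():
    """List devices visible to OpenVINO, or an empty list if it is missing."""
    try:
        import openvino as ov
    except ImportError:
        return []
    return list(ov.Core().available_devices)


if __name__ == "__main__":
    device = pick_device(list_openvino_devices() or ["CPU"])
    print(f"Selected device: {device}")
```

The selected name could then be passed to `model.to(device)` in place of the hard-coded string.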

Next, we use the Hugging Face text generation pipeline to run inference.

query = "How far away is the moon"

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer, max_new_tokens=50)

response = pipe(query)

print(response[0]['generated_text'])

Your output will be similar to:

Compiling the model to GPU ...

How far away is the moon from the earth?

The moon is about 238,900 miles away from the Earth.

The first time the pipeline runs, it compiles the model for the GPU, which takes one to two minutes. After the model has been compiled, new questions take just a few seconds to answer.
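To see the difference between the first call and later calls on your own system, you can time each one. The sketch below uses a hypothetical `FakePipeline` class that simulates a one-time compilation delay so that it runs without a model; in practice, you would pass the real `pipe` object to `timed` instead.

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


class FakePipeline:
    """Hypothetical stand-in for `pipe`: slow on the first call only."""

    def __init__(self, compile_seconds=0.2):
        self.compile_seconds = compile_seconds
        self.compiled = False

    def __call__(self, query):
        if not self.compiled:
            time.sleep(self.compile_seconds)  # simulates one-time compilation
            self.compiled = True
        return [{"generated_text": query + " ..."}]


fake_pipe = FakePipeline()
_, first = timed(fake_pipe, "How far away is the moon")
_, second = timed(fake_pipe, "How far away is the sun")
print(f"first call: {first:.2f} s, second call: {second:.2f} s")
```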

Next Steps

- On GitHub*, access the full source code.

- For help or to talk to OpenVINO toolkit developers, visit the Intel® DevHub channel on Discord*.

Notices and Disclaimers

Use of the pretrained Llama 2 model is subject to compliance with third-party licenses, including the Llama 2 Community License Agreement (LLAMAV2). For guidance on the model's intended use and intended users, on what is considered misuse or out of scope, and on additional terms, read the license instructions.