| File(s): | Download |
| License: | BSD 3-clause License |

| Optimized for... | |
|---|---|
| OS: | Linux* kernel version 4.3 or higher |
| Hardware: | 2nd Generation Intel® Xeon® Scalable processor and Intel® Optane™ DC persistent memory. Emulated: See How to Emulate Persistent Memory Using Dynamic Random-access Memory (DRAM) |
| Software: (Programming Language, tool, IDE, Framework) | C++ Compiler, Persistent Memory Development Kit (PMDK) libraries |
| Prerequisites: | Familiarity with C++ |

Introduction

In this article, we describe how we created a new engine for pmemkv, which is a local embedded key-value data store created by Intel and optimized for use with persistent memory. Our caching engine leverages pmemkv APIs to implement a hybrid local and remote cache for applications in persistent and volatile memory. Pmemkv is part of the Persistent Memory Development Kit (PMDK).

We outline our design and development process, which can be used as a guide for the creation of additional engines for pmemkv.

Today’s cloud computing architecture supports an unpredictable demand for data where consumers expect web services to provide data as fast as possible. The requested data might not all be stored in a single node, and retrieving data from multiple network nodes can lead to lower system utilization, long spin-up time, and significant overhead spent marshaling data across the network. One way to address these issues is to create a hybrid cache that locally maintains frequently used data. Content caching is a necessity for any business that delivers content online or supports applications that interact with other cloud services. It helps optimize and enhance cloud services, providing users with a better overall experience.

Persistent memory is byte-addressable and offers dynamic random-access memory (DRAM)-like access to data, with nearly the same speed and latency as DRAM along with the non-volatility of NAND flash. Data stored in persistent memory remains in memory even after power is lost. This feature set is very attractive for the key-value data stores used in large-scale cloud applications. With pmemkv, cloud applications can easily manage key-value data on persistent memory platforms. Pmemkv supports adding new engines that interact with the data store through the pmemkv APIs. Caching is an important part of any cloud application, so a caching engine adds considerable value to a key-value data store. This article outlines the design and development of a pmemkv engine that caches data on behalf of a remote key-value service.

Our goal was to design and implement a caching engine layer using only existing persistent and volatile engines, and without requiring any core API changes.

This article assumes that you have a basic understanding of persistent memory concepts and are familiar with some elementary features of the PMDK. If not, please refer to the Intel® Developer Zone Persistent Memory Programming site for more information before you get started.

Solution Summary

Our caching engine proxies requests to the remote service and maintains a local persistent read cache. It allows compute nodes to be more quickly reused or restarted. With this local cache, the overall system is faster and needs fewer resources, as the frequently used data is now cached near the user application. The next time a cached key is queried, the value is returned from the local persistent cache.

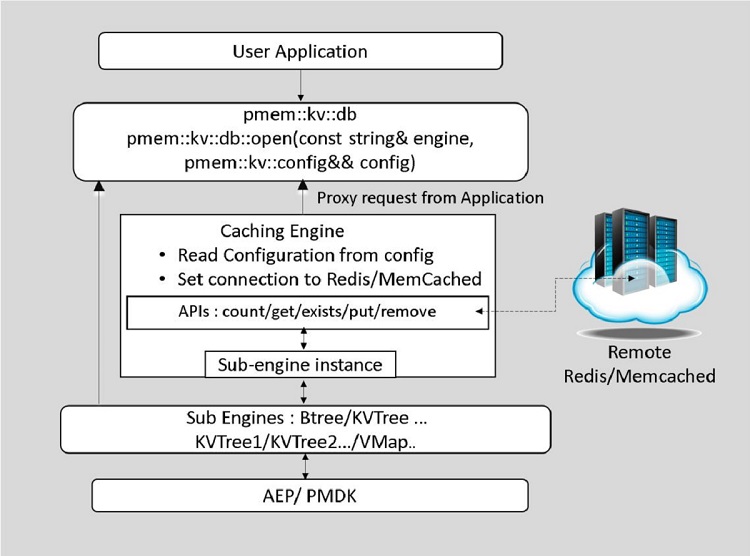

Figure 1. High-level architecture diagram for a caching engine

When the caching engine is started, it parses configuration parameters from a JavaScript* Object Notation (JSON) document passed into the open method of pmem::kv::db. This configuration includes the name of the sub-engine to use for storage, plus any other parameters needed by that specific sub-engine. The caching engine implements get, put, remove, count_all, exists, and get_all methods that interact consistently with other engines and with remote key-value stores.

We created our development environment in an Ubuntu* 18.04 virtual machine (VM) with emulation of persistent memory. All the basic functionality is implemented as a part of the caching engine using the guidelines provided in the Creating New Engines section of the file contributing.md in the pmemkv GitHub* archive.

Environment Details

For the development and test environment, we used a VM with the following hardware/software configuration running on VMware* workstation:

- RAM: 8 GB

- HDD: 100 GB

- Processor Cores: 4

- Operating System: Ubuntu 18.04

- Kernel Version: 4.17

- PMDK Version: 1.4.1

- Pmemkv (latest)

- Redis*: 5.0.3

- Memcached*: 0.21

Caching Engine Functionality

The new caching engine is implemented in the src/engines-experimental/caching.h header file and the src/engines-experimental/caching.cc source file in the pmemkv GitHub archive. The engine name is caching, its namespace is pmem::kv, and the implementation class is also named caching. The header of the caching engine is shown below. The function definitions for the API implementation are explained in detail later in the document.

    #include "../engine.h"

    namespace pmem
    {
    namespace kv
    {

    class db;

    static int64_t ttl; // todo move into private field

    class caching : public engine_base {
    public:
        caching(std::unique_ptr<internal::config> cfg);
        ~caching();

        std::string name() final;

        status count_all(std::size_t &cnt) final;
        status get_all(get_kv_callback *callback, void *arg) final;
        status exists(string_view key) final;
        status get(string_view key, get_v_callback *callback, void *arg) final;
        status put(string_view key, string_view value) final;
        status remove(string_view key) final;

    private:
        bool getString(internal::config &cfg, const char *key, std::string &str);
        bool readConfig(internal::config &cfg);
        bool getFromRemoteRedis(const std::string &key, std::string &value);
        bool getFromRemoteMemcached(const std::string &key, std::string &value);
        bool getKey(const std::string &key, std::string &valueField, bool api_flag);

        int64_t attempts;
        db *basePtr;
        std::string host;
        int64_t port;
        std::string remoteType;
        std::string remoteUser;
        std::string remotePasswd;
        std::string remoteUrl;
        std::string subEngine;
        pmemkv_config *subEngineConfig;
    };

    time_t convertTimeToEpoch(const char *theTime, const char *format = "%Y%m%d%H%M%S");
    std::string getTimeStamp(time_t epochTime, const char *format = "%Y%m%d%H%M%S");
    bool valueFieldConversion(std::string dateValue);

    } /* namespace kv */
    } /* namespace pmem */

The core caching engine stores each key-value pair together with a timestamp using the existing storage engine APIs. The timestamp is prefixed to the value field before the value is stored. When the user application requests the data, the caching engine strips the timestamp from the value field and returns the original key-value pair. The engine exposes APIs that let the user application cache data locally, and it queries the remote Redis or Memcached server if a key is not found in the local cache during a get operation.
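
The prefixing scheme described above can be sketched in isolation. The following is a minimal illustration, not the engine's actual code: packValue, unpackValue, and kTimestampLen are hypothetical names, and the 14-character width is taken from the "%Y%m%d%H%M%S" format declared in the header.

```cpp
#include <cstddef>
#include <string>

// Width of a "%Y%m%d%H%M%S" timestamp such as "20190315120000".
constexpr std::size_t kTimestampLen = 14;

// Hypothetical helper: prepend the timestamp to the value before storing it.
std::string packValue(const std::string &timestamp, const std::string &value)
{
    return timestamp + value;
}

// Hypothetical helper: split a stored field back into timestamp and value.
// Returns false if the field is too short to carry a timestamp prefix.
bool unpackValue(const std::string &stored, std::string &timestamp, std::string &value)
{
    if (stored.size() < kTimestampLen)
        return false;
    timestamp = stored.substr(0, kTimestampLen);
    value = stored.substr(kTimestampLen);
    return true;
}
```

Because the prefix has a fixed width, the value can be recovered without a delimiter, and the value itself may contain any bytes.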

Configuration

Once the pmem::kv::db is allocated, it collects the complete configuration from the user application. The configuration is in a JSON document that is passed to the config structure and then to the open method of pmem::kv::db. The JSON configuration document has all the relevant information for setting the caching engine and its sub-engine details such as engine name and configuration.

The JSON configuration also identifies the type of backend being proxied (Redis or Memcached), the connection settings (IP address and port) for the remote service, and the Time to Live (TTL). The TTL is the number of seconds that data should be kept in the cache. It is a global value that applies to all keys in the engine. The default TTL is zero, which means that data is kept in the cache indefinitely.
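
The TTL rule can be expressed as a small predicate. This is a sketch under stated assumptions: isFresh is a hypothetical name, and treating an entry whose age exactly equals the TTL as expired is a choice made here, since the article does not specify the boundary case.

```cpp
#include <cstdint>
#include <ctime>

// Hypothetical helper: returns true while an entry stored at `storedAt`
// is still usable at time `now`. A TTL of zero means "never expires".
bool isFresh(std::time_t storedAt, std::int64_t ttlSeconds, std::time_t now)
{
    if (ttlSeconds == 0)
        return true;
    // Fresh only while storedAt + TTL is strictly greater than the current time.
    return static_cast<std::int64_t>(storedAt) + ttlSeconds >
           static_cast<std::int64_t>(now);
}
```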

Here is the prototype for the open method used by all the engines in pmemkv. The parameters of this method are engine_name and config.

pmem::kv::db::open(const std::string &engine_name, pmemkv_config *config);

Next, we show how the user application prepares the config structure from JSON, then calls the open method with the engine name and pmemkv_config structure.

    pmemkv_config *cfg = pmemkv_config_new();
    if (cfg == nullptr)
        throw std::runtime_error("Cannot create config");

    // Adjacent string literals are concatenated into a single JSON document.
    // remote_type must match the strings that getKey compares against
    // ("Redis" or "Memcached").
    auto ret = pmemkv_config_from_json(cfg,
        "{\"ttl\":5000,"
        "\"remote_type\":\"Memcached\","
        "\"remote_user\":\"xxx\",\"remote_pwd\":\"yyy\","
        "\"remote_url\":\"...\","
        "\"subengine\":\"kvtree3\","
        "\"subengine_config\":{\"path\":\"/dev/shm/pmemkv\"}}");
    if (ret != 0)
        throw std::runtime_error("Cannot parse json");

    kv = new db;
    if (kv->open("caching", cfg) != status::OK)
        throw std::runtime_error(db::errormsg());

Here, caching is the main engine and kvtree3 is the sub-engine. The caching engine defines the top-level JSON structure with all the parameters it requires, and the sub-engine config structure is defined by the sub-engine itself. The RapidJSON Library is used to parse the JSON values in the caching engine.
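
Escaping every quote in the JSON document is error-prone. As one possible alternative, a C++11 raw string literal keeps the document readable. The sketch below only builds the string; cachingConfigJson is a hypothetical helper name, the placeholder values ("xxx", "yyy", "...") are kept as they appear in the article, and remote_type is spelled "Memcached" to match the comparison in getKey.

```cpp
#include <string>

// Hypothetical helper: returns the caching engine configuration as JSON.
// The raw string literal R"( ... )" needs no backslash escapes; the extra
// whitespace between members is legal JSON.
std::string cachingConfigJson()
{
    return R"({"ttl":5000,
  "remote_type":"Memcached",
  "remote_user":"xxx","remote_pwd":"yyy",
  "remote_url":"...",
  "subengine":"kvtree3",
  "subengine_config":{"path":"/dev/shm/pmemkv"}})";
}
```

The resulting string could then be passed as `cachingConfigJson().c_str()` wherever a JSON document is expected.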

Once the caching engine is started by pmem::kv::db, it reads the subengine and subengine_config entries from the JSON document. The caching engine does not need to know the content of subengine_config; it hands the configuration to the sub-engine without interpreting its structure. For example, the path for a persistent engine (kvtree3/btree) is a file, but the path for a volatile engine (vmap/vcmap) is a directory. This allows engines with different configuration structures to be used as sub-engines. For a persistent engine, the path in the sub-engine configuration is where the engine creates the persistent pool.

The pmem::kv::db close() method is used to stop the caching engine and sub-engines.

APIs

To use the caching engine, an application utilizes the APIs provided by the caching engine. Internally, the caching engine uses other existing engines such as cmap, vmap, and vcmap for storing key-value data. The caching engine provides the following APIs to the end user to implement caching support in the application:

get

This API provides a method to read a key-value pair from the local cache. When the API is called, it first checks for the key in the local cache using an existing underlying engine. There are two cases to consider when fetching a valid key-value pair. The action taken depends on the sum of the timestamp in the key-value pair and the TTL value that was collected from the JSON configuration:

- If the sum is less than the current timestamp, or the key is not found in the local cache, the caching engine fetches the key-value pair from the remote Redis or Memcached service.

- If the sum is greater than the current timestamp, the caching engine strips the timestamp from the value field and uses the locally cached value.

In either case, the get method then writes the key-value pair into the local cache using the caching engine put method, which refreshes its timestamp, and returns the value for the key to the user application. As shown in the implementation below, getKey uses the api_flag variable to tell whether the request comes from the user application or from the exists API. If the call comes from the exists API, the remote service is not contacted.

    status caching::get(string_view key, get_v_callback *callback, void *arg)
    {
        LOG("get key=" << std::string(key.data(), key.size()));
        std::string value;
        if (getKey(std::string(key.data(), key.size()), value, false)) {
            callback(value.c_str(), value.size(), arg);
            return status::OK;
        } else
            return status::NOT_FOUND;
    }

    bool caching::getKey(const std::string &key, std::string &valueField, bool api_flag)
    {
        auto cb = [](const char *v, size_t vb, void *arg) {
            const auto c = ((std::string *)arg);
            c->append(v, vb);
        };
        std::string value;
        basePtr->get(key, cb, &value);
        bool timeValidFlag = false;
        if (!value.empty()) {
            // Stored values carry a 14-character timestamp prefix
            std::string timeStamp = value.substr(0, 14);
            valueField = value.substr(14);
            timeValidFlag = valueFieldConversion(timeStamp);
        }
        // The key is missing from the local cache, or TTL is non-zero and
        // the cached entry has expired
        if (value.empty() || (ttl && !timeValidFlag)) {
            // api_flag is true when the request comes from the exists API,
            // which must not connect to the remote service. It is false when
            // the request comes from the get API, which does connect.
            if (api_flag ||
                (remoteType == "Redis" && !getFromRemoteRedis(key, valueField)) ||
                (remoteType == "Memcached" &&
                 !getFromRemoteMemcached(key, valueField)) ||
                (remoteType != "Redis" && remoteType != "Memcached"))
                return false;
        }
        // Insert the pair, or refresh its timestamp, in the local cache
        put(key, valueField);
        return true;
    }
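
The control flow of getKey is a read-through cache with expiry. The following self-contained sketch reproduces that flow without pmemkv, under stated assumptions: ReadThroughCache and its members are hypothetical names, an in-memory map stands in for the sub-engine, a std::function stands in for the Redis or Memcached client, and the current time is passed in explicitly so the behavior is easy to test.

```cpp
#include <cstdint>
#include <ctime>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical stand-in for the caching engine.
class ReadThroughCache {
public:
    // Returns true and fills `value` if the remote service has the key.
    using Fetcher = std::function<bool(const std::string &, std::string &)>;

    ReadThroughCache(std::int64_t ttlSeconds, Fetcher fetchRemote)
        : ttl_(ttlSeconds), fetchRemote_(std::move(fetchRemote)) {}

    // localOnly mirrors api_flag: true for an exists-style lookup that
    // must not contact the remote service.
    bool get(const std::string &key, std::string &value, std::time_t now,
             bool localOnly = false)
    {
        auto it = local_.find(key);
        const bool fresh = it != local_.end() &&
            (ttl_ == 0 || it->second.storedAt + ttl_ > now);
        if (fresh) {
            value = it->second.value;
        } else if (localOnly || !fetchRemote_(key, value)) {
            return false; // missing or expired, and no remote value available
        }
        local_[key] = Entry{value, now}; // insert, or refresh the timestamp
        return true;
    }

private:
    struct Entry {
        std::string value;
        std::time_t storedAt;
    };

    std::int64_t ttl_;
    Fetcher fetchRemote_;
    std::unordered_map<std::string, Entry> local_;
};
```

As in getKey, a successful lookup always rewrites the entry, so a key that keeps being read keeps having its timestamp refreshed.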

put

This API provides a method to write a key-value pair to the local persistent or volatile cache memory. After the data is written, the response is sent back to the user application. It is not necessary to update the remote Redis or Memcached service.

As shown in the API implementation below, during the put operation the caching engine prefixes the current timestamp to the value field and stores the result in persistent or volatile memory as a key-value pair. The timestamp is refreshed whenever the key is updated in the caching engine.

    status caching::put(string_view key, string_view value)
    {
        LOG("put key=" << std::string(key.data(), key.size())
                       << ", value.size=" << std::to_string(value.size()));
        const time_t curSysTime = time(0);
        const std::string curTime = getTimeStamp(curSysTime);
        const std::string ValueWithCurTime =
            curTime + std::string(value.data(), value.size());
        return basePtr->put(std::string(key.data(), key.size()), ValueWithCurTime);
    }
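
The put method relies on getTimeStamp, whose body is not shown in this article. A minimal sketch of how such a pair of conversion helpers might look is given below. The names formatTimestamp and parseTimestamp are hypothetical, the use of UTC is an assumption of this sketch, and gmtime_r and timegm are POSIX/glibc functions rather than standard C++.

```cpp
#include <cstdio>
#include <ctime>
#include <string>

// Hypothetical counterpart of getTimeStamp: format epoch seconds as
// "YYYYMMDDHHMMSS" in UTC (the time zone is an assumption of this sketch).
std::string formatTimestamp(std::time_t epoch)
{
    std::tm tm{};
    gmtime_r(&epoch, &tm);
    char buf[15]; // 14 characters plus the terminating NUL
    std::strftime(buf, sizeof(buf), "%Y%m%d%H%M%S", &tm);
    return std::string(buf);
}

// Hypothetical counterpart of convertTimeToEpoch: parse the 14-character
// form back to epoch seconds. Returns -1 if the text is malformed.
std::time_t parseTimestamp(const std::string &text)
{
    std::tm tm{};
    if (text.size() != 14 ||
        std::sscanf(text.c_str(), "%4d%2d%2d%2d%2d%2d", &tm.tm_year, &tm.tm_mon,
                    &tm.tm_mday, &tm.tm_hour, &tm.tm_min, &tm.tm_sec) != 6)
        return static_cast<std::time_t>(-1);
    tm.tm_year -= 1900; // struct tm counts years from 1900
    tm.tm_mon -= 1;     // struct tm counts months from 0
    return timegm(&tm);
}
```

Working in UTC avoids ambiguous local times around daylight saving transitions, which matters when timestamps are compared against a TTL.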

remove

This API provides the developer with the ability to remove a key from the local cache. The method takes the key to be deleted and invokes the underlying storage engine for removal of the key. After removing the key from the local cache, the response is sent back to the user application. The method is implemented as shown below:

    status caching::remove(string_view key)
    {
        LOG("remove key=" << std::string(key.data(), key.size()));
        return basePtr->remove(std::string(key.data(), key.size()));
    }

get_all

This API provides the developer with all the key-value pairs in the local cache. The method iterates over the cache with the help of the underlying storage engine. For each entry, it unpacks the timestamp from the value and checks it against the TTL value collected from the JSON configuration.

If the sum of the timestamp and the TTL is less than the current timestamp, the key-value pair has expired and is deleted from the local cache once the iteration completes. Otherwise, the key-value pair is sent back to the user application with the timestamp removed.

    status caching::get_all(get_kv_callback *callback, void *arg)
    {
        LOG("get_all");
        std::list<std::string> removingKeys;
        GetAllCacheCallbackContext cxt = {arg, callback, &removingKeys};

        auto cb = [](const char *k, size_t kb, const char *v, size_t vb, void *arg) {
            const auto c = ((GetAllCacheCallbackContext *)arg);
            // Build strings from the explicit lengths rather than relying
            // on NUL termination
            std::string localValue(v, vb);
            std::string timeStamp = localValue.substr(0, 14);
            std::string value = localValue.substr(14);
            // TTL from config is zero, or the entry has not expired
            if (!ttl || valueFieldConversion(timeStamp)) {
                auto ret = c->cBack(k, kb, value.c_str(), value.length(), c->arg);
                if (ret != 0)
                    return ret;
            } else {
                // Expired: remember the key and remove it after the iteration
                c->expiredKeys->push_back(std::string(k, kb));
            }
            return 0;
        };

        if (basePtr) { // todo bail earlier if null
            auto s = basePtr->get_all(cb, &cxt);
            if (s != status::OK)
                return s;
            for (const auto &itr : removingKeys) {
                auto s = basePtr->remove(itr);
                if (s != status::OK)
                    return status::FAILED;
            }
        }
        return status::OK;
    }
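
The get_all implementation collects expired keys during iteration and removes them only afterwards, which avoids modifying the store while it is being traversed. The same two-phase pattern can be sketched with standard containers. Everything here is hypothetical and for illustration only: CachedEntry and visitAndSweep are invented names, and a std::map stands in for the sub-engine.

```cpp
#include <cstddef>
#include <cstdint>
#include <ctime>
#include <functional>
#include <map>
#include <string>
#include <vector>

struct CachedEntry {
    std::string value;
    std::time_t storedAt;
};

// Hypothetical sketch: visit every unexpired entry, then remove the expired
// ones. Returns the number of entries removed.
std::size_t visitAndSweep(
    std::map<std::string, CachedEntry> &cache, std::int64_t ttl, std::time_t now,
    const std::function<void(const std::string &, const std::string &)> &visit)
{
    std::vector<std::string> expired;
    for (const auto &kv : cache) {
        if (ttl == 0 || kv.second.storedAt + ttl > now)
            visit(kv.first, kv.second.value);
        else
            expired.push_back(kv.first); // defer removal until iteration ends
    }
    for (const auto &key : expired)
        cache.erase(key);
    return expired.size();
}
```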

count_all

This API iterates over the local cache and returns the total number of keys. As shown in the count_all API implementation below, the caching engine internally invokes its own get_all API, which removes all the expired key-value pairs from the cache.

This step ensures that only unexpired data is counted. The callback passed to get_all increments a counter for each remaining key-value pair, and that count is returned to the user application.

    status caching::count_all(std::size_t &cnt)
    {
        LOG("count_all");
        std::size_t result = 0;
        get_all(
            [](const char *k, size_t kb, const char *v, size_t vb, void *arg) {
                auto c = ((std::size_t *)arg);
                (*c)++;
                return 0;
            },
            &result);
        cnt = result;
        return status::OK;
    }
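
The count_all implementation passes state to a C-style callback through an opaque void * argument. A standalone sketch of that idiom follows. The names KeyCallback, forEachKey, and countKeys are hypothetical, and a vector of strings stands in for the cache contents.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical C-style iteration API in the spirit of get_all: the callback
// receives each key plus an opaque `arg`, and a non-zero return stops early.
using KeyCallback = int (*)(const char *key, std::size_t keyBytes, void *arg);

int forEachKey(const std::vector<std::string> &keys, KeyCallback callback, void *arg)
{
    for (const auto &k : keys) {
        const int ret = callback(k.data(), k.size(), arg);
        if (ret != 0)
            return ret;
    }
    return 0;
}

// Count keys by passing a counter through `arg`. The lambda captures nothing,
// so it converts implicitly to a plain function pointer.
std::size_t countKeys(const std::vector<std::string> &keys)
{
    std::size_t count = 0;
    forEachKey(
        keys,
        [](const char *, std::size_t, void *arg) {
            ++*static_cast<std::size_t *>(arg);
            return 0;
        },
        &count);
    return count;
}
```

A capturing lambda would not convert to a function pointer, which is why the counter travels through `arg` instead of being captured.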

exists

This API reports whether a key is available in the local cache. As shown in the API implementation below, the key is passed to getKey with api_flag set to true, so only the underlying sub-engine is queried and the remote service is never contacted.

If the sub-engine returns an unexpired value for the key, the OK status is returned to the user application; otherwise, NOT_FOUND is returned. When the key is found, its timestamp is also refreshed in the local cache.

    status caching::exists(string_view key)
    {
        LOG("exists for key=" << std::string(key.data(), key.size()));
        status s = status::NOT_FOUND;
        std::string value;
        if (getKey(std::string(key.data(), key.size()), value, true))
            s = status::OK;
        return s;
    }

Using the Storage Engine

The engine to enable caching for pmemkv is open source and available. To use the caching engine, follow these steps:

- Install the PMDK libraries from GitHub.

- Download/clone the pmemkv repo:

- Git clone

- Install the required dependencies as per the Readme.

- Install the redis-acl, RapidJSON, and libmemcached dependencies as per the Readme for caching engine.

- Navigate to the bin directory.

- Run the test application, pmemkv_test, from pmemkv test directory.

Conclusion

Persistent memory-enabled caching helps businesses deliver faster, better-optimized cloud services while adding the benefit of persistent storage. One benefit of caching is that overall application throughput can be increased without touching other cloud services or the network, because all the changes are made in the user application itself.

With the use of caching in an application, there is less latency and faster access to the data since the frequently used data now resides near the application where the requests are made.

Resources

Introduction to pmemkv – a short video

The Persistent Memory Development Kit (PMDK)

Introducing pmemkv - at pmem.io