Challenge

As AI technology gains wider adoption and machine learning applications are increasingly deployed to the network edge, the design tools for programming the enabling components, from field-programmable gate arrays (FPGAs) to XPUs, are evolving to meet the challenge. In the quest for more efficient, less expensive, low-latency approaches to accelerating machine learning algorithms, many researchers have turned their attention to alternative computing models. These include advances in neuromorphic technology to accelerate inference operations, as well as tools and technologies that unify programming efforts within a modern development environment that can span frameworks, platforms, and operating systems.

The costly nature of current machine learning technology and growing interest in the field of neuromorphic technology inspired graduate student Luke Kljucaric to embark on a project to identify better ways to achieve scalable machine learning.

"Current machine learning technology is limited in terms of latency for convolutional neural networks [CNNs]," Luke said. "New disruptive technology is required to reduce latency for object recognition tasks while also reducing the power requirements to make apps more scalable. Neuromorphic technology has the potential to meet these goals."

One way to approach this challenge, Luke feels, is by spurring adoption of FPGAs and supporting their use in data centers. His research is taking place at the National Science Foundation (NSF) Center for Space, High-Performance, and Resilient Computing (SHREC) at the University of Pittsburgh. "Through classes, tutorials, and workshops," he said, "we've exposed researchers and students to the advantages of FPGAs and ease of programming with modern development tools such as Intel oneAPI."

Solution

Luke is pursuing a PhD in computer and electrical engineering at the University of Pittsburgh. As the lead student in the high-performance computing (HPC) group at SHREC, his focus has been on FPGA and HPC research. He is working to understand more fully the capabilities of current FPGA design tools, with high-level design tools a key area of interest. Accelerated machine learning (including algorithms for implementing CNNs and neuromorphic classification algorithms) is being explored and benchmarked for relative performance on CPUs, GPUs, TPUs, VPUs, and FPGAs. Luke is currently using Intel oneAPI for heterogeneous programming and optimizations, comparing the benefits of XPUs and FPGAs. "Being able to rely on a single source for programming both FPGAs and GPUs has been a boon to my research," Luke said. "It’s possible to do this with the OpenCL™ standard, but there is more support with oneAPI."

"I've also been using high-level synthesis tools from both Xilinx* and Intel to understand the best practices in using high-level code to develop low-latency FPGA architectures," Luke said.

The results of this research are likely to further advances in many sectors. These include autonomous vehicle operations, Industry 4.0 robotics and automated control systems, operation of unmanned aerial vehicles (UAVs), and development of neuromorphic processing toolkits.

Disruptive Change for Improved Machine Learning

Luke believes that today's machine learning algorithms typically need excessive compute resources and memory to run. Commonly used CNNs, applied to tasks such as high-accuracy feature extraction and object classification, consume far too many resources.

"By contrast," Luke said, "neuromorphic object classification algorithms have lower memory and compute complexity than CNNs at comparable accuracies. This can improve the scalability of machine learning apps. CNNs can achieve high classification accuracies, but to do so they require large datasets for the backpropagation algorithm to train. Neuromorphic algorithms, such as hierarchy of event-based time-surfaces (HOTS), have proven to be as accurate as CNNs on similar object-recognition tasks, requiring less data and even being 'untrained' on certain classes."

Luke noted that the neuromorphic algorithms operate on neuromorphic sensor data, or event-based data, rather than traditional camera images. Neuromorphic sensors can capture events at microsecond resolution, which helps enable real-time processing on low-latency architectures. FPGAs can play a key role in this scenario.
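To make the idea of event-based data more concrete, the following is a minimal sketch of how a HOTS-style time surface could be built from a stream of events. It is not Luke's implementation: the function names, the `(x, y, t_us)` event layout, the neighborhood `radius`, and the decay constant `tau` are all assumptions chosen for illustration, and event polarity is ignored for brevity. Each event produces a small patch in which recently active neighboring pixels have values near 1 and older activity decays exponentially toward 0.

```python
import math


def time_surface(last_times, x, y, t, radius, tau):
    """Exponential-decay time surface around pixel (x, y) at time t.

    last_times[row][col] holds the timestamp (microseconds) of the most
    recent event at that pixel, or None if none has been seen yet.
    """
    height = len(last_times)
    width = len(last_times[0])
    surface = []
    for dy in range(-radius, radius + 1):
        row = []
        for dx in range(-radius, radius + 1):
            yy, xx = y + dy, x + dx
            inside = 0 <= yy < height and 0 <= xx < width
            t_last = last_times[yy][xx] if inside else None
            # Pixels with no past event (or outside the sensor) contribute 0.
            row.append(0.0 if t_last is None else math.exp(-(t - t_last) / tau))
        surface.append(row)
    return surface


def process_events(events, width, height, radius=1, tau=50_000.0):
    """Yield one time surface per event.

    events: iterable of (x, y, t_us) tuples sorted by timestamp
    (a hypothetical layout; real sensors also report polarity).
    """
    last_times = [[None] * width for _ in range(height)]
    for x, y, t in events:
        last_times[y][x] = t  # record the event before building its surface
        yield time_surface(last_times, x, y, t, radius, tau)


# Two events on a 4x3 sensor, 50 ms apart.
demo_surfaces = list(process_events([(1, 1, 0), (2, 1, 50_000)], width=4, height=3))
```

Because each event touches only a small neighborhood and one timestamp per pixel, the per-event work is small and regular, which is one reason this kind of computation is a plausible fit for a low-latency data path.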

"FPGAs are reconfigurable logic devices that can realize low-latency data paths. The research I've been doing showcases the performance of the HOTS algorithm on FPGAs using state-of-the-art high-level synthesis tools on object recognition tasks using neuromorphic sensor data."

In evaluating the different acceleration options available, Luke determined that HOTS—a feature extraction algorithm—could be used effectively on FPGAs for boosting performance, but a classifier is also needed for the extracted features. For this, he employed a multilayer perceptron that can be more effectively accelerated on a GPU.
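The split between feature extraction and classification can be sketched roughly as follows. This is an illustrative toy under stated assumptions, not the project's code: the prototypes, weights, and function names are hypothetical, and the tiny two-layer network merely stands in for the multilayer perceptron mentioned above. Flattened time surfaces are matched to their nearest prototype, the matches are summarized as a normalized histogram, and that histogram is passed to the classifier.

```python
def nearest_feature(surface, prototypes):
    """Index of the prototype closest (squared Euclidean) to a flattened surface."""
    def dist(p):
        return sum((a - b) ** 2 for a, b in zip(surface, p))
    return min(range(len(prototypes)), key=lambda i: dist(prototypes[i]))


def feature_histogram(surfaces, prototypes):
    """Normalized count of how often each prototype was the best match."""
    counts = [0] * len(prototypes)
    for s in surfaces:
        counts[nearest_feature(s, prototypes)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(prototypes)


def dense(inputs, weights, biases):
    """One fully connected layer: weights is a list of rows, one per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def mlp_predict(features, w1, b1, w2, b2):
    """Forward pass of a one-hidden-layer perceptron with ReLU; returns a class index."""
    hidden = [max(0.0, h) for h in dense(features, w1, b1)]
    scores = dense(hidden, w2, b2)
    return max(range(len(scores)), key=lambda i: scores[i])


# Toy values (all hypothetical): two prototypes, three flattened surfaces.
prototypes = [[1.0, 0.0], [0.0, 1.0]]
surfaces = [[0.9, 0.1], [0.8, 0.0], [0.2, 0.7]]
hist = feature_histogram(surfaces, prototypes)

w1, b1 = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]
w2, b2 = [[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]
predicted_class = mlp_predict(hist, w1, b1, w2, b2)
```

In this sketch the extraction stage is a stream of small, independent distance computations, while the classifier is a pair of dense matrix-vector products, which loosely mirrors why the two stages might suit different accelerators.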

Development Notes and Enabling Technologies

After three years on this project, Luke has reached a stage where he is performing optimizations of the code he has created so far. Through Intel® DevCloud, Luke gained access to the Intel® oneAPI toolkits, Intel® CPUs, Intel® GPUs, and Intel® FPGAs, as well as AI frameworks and flexible environments needed to perform his research. "Intel DevCloud and oneAPI make programming different accelerators easier through one source base," he said.

Luke has found that the growing ecosystem of tools and libraries available from Intel is a strong asset for rapid development. The support community and developer support forums have also proved useful.

Luke credits his PhD advisors with providing invaluable project assistance, including Dr. Alan George, who is department chair, R&H Mickle Endowed Chair, and professor of electrical and computer engineering (ECE) at the University of Pittsburgh and director of SHREC. "Dr. George’s expertise in high-performance and reconfigurable computing," Luke said, "has helped guide my research on the FPGA, GPU, and programming side. Also, Dr. Ryad Benosman, one of the original authors of HOTS, is a professor of ophthalmology and ECE at the University of Pittsburgh. Dr. Benosman’s expertise in neuromorphic systems has helped guide my research on event-based data analysis, neuromorphic algorithms, and neuromorphic technology in general."

Conclusion: Ongoing Research

Luke’s research continues to explore ways to scale machine learning efficiently. This work is being done at the Intel Neuromorphic Research Community (Intel NRC), which hosts a research system (code-named Pohoiki Springs) that delivers a computational capacity of 100 million neurons.

Figure 1 shows an abstract interpretation of the neuromorphic computing model. "[The research system code-named] Pohoiki Springs scales up our neuromorphic research chip by more than 750 times, while operating at a power level under 500 watts. The system enables our research partners to explore ways to accelerate workloads that run slowly on conventional architectures," said Mike Davies, director of Intel NRC.

Figure 1. Abstract of a neuromorphic computing model

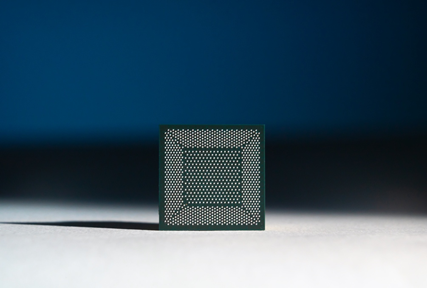

Fresh thinking and different approaches to computing are needed to elevate AI in this way. "Neuromorphic computing is a complete rethinking of computer architecture from the bottom up. The goal is to apply the latest insights from neuroscience to create chips that function less like traditional computers and more like the human brain. Neuromorphic systems replicate the way neurons are organized, communicate, and learn at the hardware level. Intel sees its [neuromorphic] research chip (Figure 2) and future neuromorphic processors defining a new model of programmable computing to serve the world’s rising demand for pervasive, intelligent devices," according to an Intel press release.

Figure 2. The Intel Labs research chip is expanding the boundaries of compute architecture.

Investigations being conducted at Intel NRC are at a blue-sky level, while the path Luke is following is closer at hand and likely to achieve near-term results and practical implementations. Both paths go beyond conventional computing techniques and set the foundation for innovative ways to imbue computers with human skills and perceptions, advancing AI to confront next-generation challenges. View Luke's presentation on Intel's high-level synthesis design tools, given at the 2021 oneAPI Developer Summit at IWOCL.

Figure 3. Luke Kljucaric analyzed design tools in a recent presentation.

Resources and Recommendations

For insights into current FPGA design tools, view Luke's video presented at the oneAPI Developer Summit, Comparative Analysis of High-Level Synthesis Design Tools.

Intel DevCloud offers free access to code from home with cutting-edge Intel CPUs, Intel GPUs, Intel FPGAs, and preinstalled Intel oneAPI toolkits that include tools, frameworks, and libraries.

For more information about HOTS, visit the Intel® DevMesh project page.