![]()

You are in a hurry or you just want to see a cool video showcasing a real-time telepresence robotics system which gives you an out-of-body experience without being interested in too many details of how it works? Have a look!

Synopsis

"Avatar" is an American science fiction film by James Cameron premiered in the year 2009. The film is about humans from earth colonizing the planet Pandora which is populated by the local tribe Na'vi. Na'vis are a humanoid species indigenous in Pandora. To create trust the humans developed a system which makes it possible to incarnate as a real Na'vi. In the film Captain Jake Sully (Sam Worthington) slipped into the role of such a native on Pandora to accomplish his mission. With this very special human technology he was able to look and feel like a real Na'vi.

Fìswiräti, nga pelun molunge fìtseng?

This creature, why did you bring him here?

The goal of "Project Avatar" was to build a project where you can have such an out-of-body experience using off-the-shelf hardware. The idea was to control a robot at another location using full body gesture control. The teleoperator on the the remote site is a Nao robot which is equipped with sensors to gather environmental data. Using feedback channels this data can be transferred back to the human controller.

The human operator should actually be able to see the world through the eyes of the Nao robot including the field of view in 3D. This is realizable by using RGB stereo cameras. Using tactile sensors the robot is able to send touch events over the feedback communication channel to the human controller. So the controller can actually feel sensor based events occurring in the environment at the robot's site comparable to some kind of force-feedback.

The hardware hacking team of TNG Technology Consulting GmbH wanted to implement a prototypical version of an immersive telepresence robotics system within one day only.

Telepresence robotics

In the context of telepresence robotics there is a operator site and a remote site. The remote site will be controlled by a human operator who is located on the operator site. The main goal of telepresence robotics is to overcome the four barriers between the operator and the remote environment. The four barriers to overcome are...

- Danger

- A teleoperator should make actions at a place which is hostile to life of human beings (e.g. robots clearing a disaster beyond all expectations).

- Distance

- A teleoperator should be able to get controlled from a remote place using a communication channel (e.g. communication via satellites).

- Matter

- A teleoperator should be able to access biospheres which are hostile to life for human beings (e.g. deep-sea robotics).

- Scale

- A teleoperator should be able to work with robotos with much more precision and accuracy (e.g. for remote surgery) or with machines which are much larger and stronger than an average human beeing.

Multimodal telepresence robotics

To implement a telepresence robotics system which achieves the goal to experience an out-of-body experience there is the strong need to implement feedback channels. Using the communication channel in reverse, gathered sensor information can be transferred from the remote site back to the operator site. Such an essential feedback information would be a 3D visual display in terms of multimodal telepresence robotics. This can be realized with at least two RGB cameras attached to the teleoperator itself. The video signal needs to get streamed through the communication feedback channel without any delays to the human operator.

![]()

Requirements

To implement our telepresence robotics solution, we need to use special hardware. To point out which hardware to use the hardware hacking team of the TNG Technology Consulting GmbH needed to define some functional requirements. Our requirements for this project were:

- The human operator controls the movements of the teleoperator at a remote site using gestures based on full body skeleton tracking

- The human operator has visual display 3D feedback using a head mounted display

- The human operators head movements will result in corresponding head movement of a teleoperated humanoid robot

- The human operator will receive tactile feedback when teleoperator is touched on head

- The data between the human controller and the teleoperator needs to get transferred without any cables

- Signal delays should be minimal for a pure out-of-body experience with your own Avatar.

Hardware

We’ve identified different 3D camera sensors capable of doing skeleton tracking. Among others there are e.g. the Leap Motion, Microsoft Kinect and Intel® RealSense™. LeapMotion is perfectly suited for hand tracking. As we need full body skeleton tracking Intel® RealSense™ or Microsoft Kinect were in the shortlist.

At the moment, the Intel RealSense SDK is capable of tracking the upper body. As we needed full body tracking to interpret a walking gesture metaphor we decided for the Microsoft Kinect 2. The implementation was done in way that we can easily implement our solution with Intel RealSense later on when full body skeleton tracking will be available in the future.

A typical head mounted display capable to show 3D vision is the Oculus Rift DK2. As we already had gathered some knowledge with these virtual reality headsets due to our "Augmented Rift Terminator Vision project" we just decided to use this device.

One of the well-known humanoid robots which are programmable in an easy way is the Nao robot of Softbanks robotics (formerly known as Aldebaran). You can easily implement movements using e.g. Python, C++ or Java. In 2015 over 5,000 units of Nao were sold into 50 countries worldwide. Thus an extremely active community is behind that product.

![]()

Demonstration video

Architecture Overview

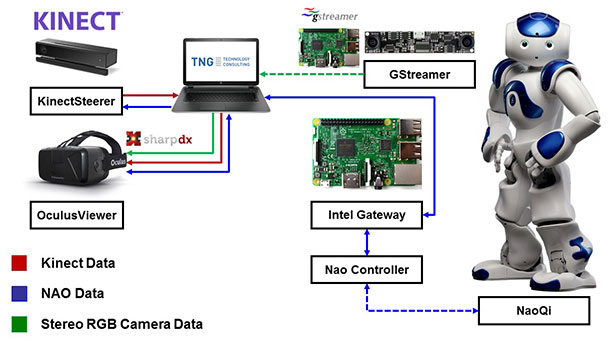

On the operator site we are using a normal laptop computer. At the remote site the Nao robot manipulates the environment. The Kinect camera and the Oculus Rift are directly attached to the laptop. Using the WAMP communication protocol the data of these controlleres are sent to the Nao robot. A Kinect sensor tracks the whole body except the head movement of the human operator. To control the head movements of the Nao robot we are using the built-in accelerometer of the Oculus Rift since this has much better accuracy than tracking the human operators head with the Kinect sensor.

The data based on the WAMP protocol is first sent to a WAMP router named "Crossbar" which is running on a Raspberry Pi Rev. 3. The controller software is also located on the Raspberry. There the incoming data is transformed into appropriate control commands for the robot. These commands are then sent to the Nao using Wi-Fi. Nao is also sending data back to laptop, e.g. the current posture. The reverse communication channel is used for such information.

A stereo RGB camera connected to second single board computer (like Intel Edison or Intel Joule) is attached to the robot's head using custom 3D printed glasses inspired by Futuramas "Bender". The stereo camera captures the video stream using the open source software GStreamer. A UDP stream is used to transfer the stream back to the laptop. It is streamed into the Oculus Rift using some kind of DirectX wrapper named SharpDX.

Implementation details

Skeleton Tracking

Kinect

Gathering full body skeleton data from the Microsoft Kinect camera is very easy and needs only a few lines of code.

public void Init(KinectSensor kinectSensor) {

BodyFrameReader bodyReader = kinectSensor.BodyFrameSource.OpenReader();

bodies = new Body[kinectSensor.BodyFrameSource.BodyCount];

bodyReader.FrameArrived += BodyFrameArrived;

}

private void BodyFrameArrived(object sender,

BodyFrameArrivedEventArgs bodyFrameEvent) {

BodyFrameReference frameReference = bodyFrameEvent.FrameReference;

BodyFrame frame = frameReference.AcquireFrame();

using (frame) {

frame.GetAndRefreshBodyData(bodies);

foreach (var body in bodies) {

if (body.IsTracked) {

// example how to get Head joint position

Joint head = body.Joints[JointType.Head];

float x = head.Position.X;

float y = head.Position.Y;

float z = head.Position.Z;

// do some awesome stuff

}

}

}

}

At first you have to initialize your BodyFrameReader. As the Kinect sensor can track up to six bodies you should always be sure to send the skeleton data of only one body to the Nao robot. If not doing so the Nao will receive movement data of all recognized bodies which would look like a robot going insane!

When the Kinect receives a BodyFrame you are able to acquire the current frame to access skeleton data for the different recognized bodies. Using the frame you can call the method GetAndRefreshBodyData() which will refresh the skeleton data for all recognized bodies. Now you can access the so called Joints and their 3D coordinates.

Intel RealSense

When using the the Intel RealSense SDK the implementation is just as easy as with the Kinect 2.

// ptd is a PXCMPersonTrackingData instance

Int32 npersons = ptd.QueryNumberOfPeople();

for (Int32 i = 0; i < npersons; i++) {

// Retrieve the PersonTracking instance

PXCMPersonTrackingData.Person ptp = ptd.QueryPersonData(

PXCMFaceTrackingData.AccessOrder.ACCESS_ORDER_BY_ID, i);

PXCMPersonTrackingData.PersonJoint ptj = ptp.QuerySkeletonJoints();

// work on the tracking data

int njoints = ptj.QueryNumJoints();

PXCMPersonTrackingData.JointPoint[] joints = new PXCMPersonTrackingData[njoints];

ptj.QueryJoints(joints);

// do some awesome stuff

}

Nao movements

Setup

The Nao robot can easily be programmed using JavaScript, C++, Python and Java. In our solution we used Python bindings. At first we need to setup the Nao robot. To be able to control the Nao with Python we need to import the naoqi library which is available on the Nao website.

import sys

import almath

import time

from naoqi import ALProxy

PORT = 9559

IP = "ip.address.of.your.nao.robot"

#Load Modules for different tasks

speechProxy = ALProxy("ALTextToSpeech", IP, PORT) # Allows NAO to speak.

audioProxy = ALProxy("ALAudioPlayer", IP, PORT) # Allows NAO to play audio.

postureProxy = ALProxy("ALRobotPosture", IP, PORT) # Allows use of predefined postures.

motionProxy = ALProxy("ALMotion", IP, PORT) # Provides methods to make NAO move.

To make use of specific Nao features you need to load different modules. In the following code samples you will understand how to make use of those different modules.

Nao learns to speak

To let Nao speak you just have to load the ALTextToSpeechModule. Using the method say() the robot will start to speak the text loudly. There are different parameters available which can be used to modify the pitch or the speed of the text to be spoken. For many languages download packages are available at the Nao marketplace.

speechProxy.say("Hello Intel Developer Zone!")

Nao is a poser

Nao is capable to do a lot of different postures. Postures are predefined routines inside the Nao kernel and will result in a chain of Nao movements to reach a posture state. Nao can go to a posture from every possible current position. Switching between postures like "LyingBelly" and "StandInit" is not a problem as well.

![]()

With the following code snippet Nao will change his posture to "StandInit" followed by a "LyingBelly" posture. You can switch between all postures shown in the picture above easily.

postureProxy.goToPosture("StandInit", 1)

time.sleep(1.0)

postureProxy.goToPosture("LyingBelly", 1)

Nao is moving

As only using predefined postures will is pretty boring we now want to move the robot's joints without using predefined macros. Nao has 25 degrees of freedom based on the joints of the human skeleton.

![]()

Let's say we want to move the left and the right arm to a fixed position as shown in the picture above. For this we need to make use of the ALMotion module. Using the motionProxy we can move the different Nao joints to its defined angle i.e. position.

leftJoints = ["LShoulderRoll", "LShoulderPitch"]

rightJoints = ["RShoulderRoll", "RShoulderPitch"]

leftAngles = [2.0, 0.6]

rightAngles = [-2.0, 0.6]

motionProxy.setAngles(leftJoints, leftAngles, 0.5)

motionProxy.setAngles(rightJoints, rightAngles, 0.5)

The method setAngles() expects an array of joints to move, angle data for those joints and the speed of the movement which should be between 0 (slow) and 1 (fast).

Conclusion

Using off-the-shelf hardware only the software consultant team of TNG Technology Consulting GmbH was able to realize a multimodal telepresence robotics system. Controlling the Nao with gestures was easy to implement within one day only. One of the major challenges are to process the massive amount of video data transferring it over a wireless connection. Using an Intel IoT Gateway and a special RouterBoard we were finally able to transfer the video signal and all other controller data with a delay which was adequate to a have a good out-of-body experience.

Authors

If you are interested in this showcase or a conference talk about this topic don't hesitate to contact us!

Ma sempul, oel ngati kameie.

Father, I see you.