From virtual assistants to language translation, natural language processing (NLP) has revolutionized the way we interact with technology. However, improving the performance of NLP tasks remains a critical challenge. Integrating Intel® oneAPI tools with IBM Watson’s NLP Library can accelerate tasks such as sentiment analysis, topic modeling, named entity recognition, keyword extraction, text classification, entity categorization, and word embeddings, which has significant implications across industries.

We’ll break down what kinds of NLP tasks can be accelerated using oneAPI (the programming model and tools), how it enhances the performance of NLP tasks, and its future applications in various industries.

Introduction to IBM Watson’s NLP Library and oneAPI

IBM Watson’s NLP Library is an advanced machine learning tool that facilitates the analysis of text data. It is widely used in various industries for tasks such as sentiment analysis, entity extraction, and language detection. oneAPI is an integrated, cross-architecture programming model that enables developers to create high-performance heterogeneous applications for a range of devices. It provides a unified programming environment that supports various hardware architectures such as CPUs, GPUs, FPGAs, and other accelerators. The integration of Intel oneAPI tools and the Watson Library can significantly improve the performance of NLP tasks.

In October 2022, IBM expanded its embeddable AI portfolio with the release of IBM Watson Natural Language Processing Library for Embed, which is designed to help developers add the ability to process human language and derive meaning and context through intent and sentiment. IBM Research partnered with Intel to improve Watson NLP performance using the Intel® oneAPI Deep Neural Network Library (oneDNN) and Intel-optimized TensorFlow, and demonstrated up to a 35% improvement in function throughput for key NLP tasks when comparing the same workload on 3rd Gen Intel® Xeon® Scalable processors.

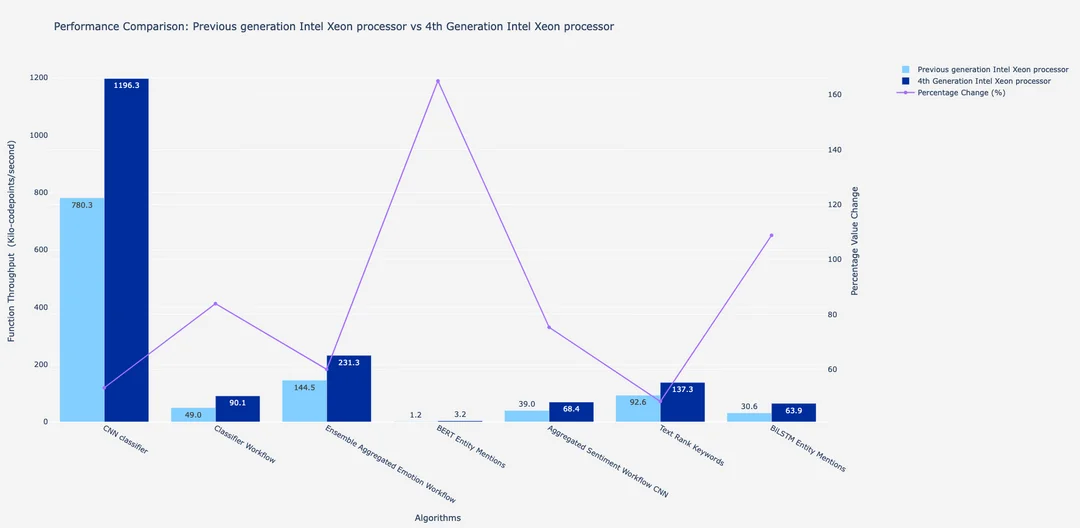

With the latest advancements in 4th Gen Intel® Xeon® Scalable processors, function throughput for NLP tasks has improved by upwards of 165% compared with the 2nd Gen Xeon Scalable processors that IBM currently deploys for inference on CPUs.
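The percentages quoted in this article describe throughput increases, so they translate into speedup factors rather than reductions. The short Python sketch below converts them, assuming the baseline is the unoptimized (or older-generation) throughput and that calls are processed one at a time, so per-call duration is simply the inverse of throughput. The function names are illustrative and are not part of any Intel or IBM tool.

```python
def throughput_speedup(improvement_pct: float) -> float:
    """Convert a percentage throughput improvement into a speedup factor."""
    return 1.0 + improvement_pct / 100.0


def relative_duration(improvement_pct: float) -> float:
    """Per-call duration relative to the baseline.

    Assumption: calls run one at a time, so duration = 1 / throughput.
    """
    return 1.0 / throughput_speedup(improvement_pct)


# The two figures reported in the article.
for label, pct in [("up to 35%", 35.0), ("upwards of 165%", 165.0)]:
    speedup = throughput_speedup(pct)
    print(f"{label}: {speedup:.2f}x throughput, "
          f"{relative_duration(pct):.1%} of baseline duration")
```

Under these assumptions, a 165% throughput gain means 2.65 times as many calls per second, or roughly 37.7% of the original per-call duration; the 35% gain corresponds to about 74.1%. With concurrent or batched requests, duration and throughput are not strictly inverse, so treat these as rough estimates.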

IBM Watson NLP Library for Embed

This library provides common NLP features, including:

- Sentiment analysis – classifies text as positive, negative, or neutral.

- Entity extraction – detects and extracts named entities such as personally identifiable information (PII).

- Text classification – labels a given text and assigns it to a specific category or group.

These features provide value in a wide variety of use cases, such as voice of the customer, brand intelligence, and contract processing. Because the Watson NLP Library for Embed is containerized, it can be deployed wherever containers run and is easy to embed in other offerings. This allows IBM ecosystem partners to infuse NLP AI into their solutions using IBM’s expertly trained models; for enterprise use cases, the solution can be curated to remove hate, bias, and profanity.

Understanding oneAPI’s Performance Advantages

oneAPI is a significant breakthrough in the AI landscape. It lets developers build high-performance applications that run across different hardware architectures from a single codebase.

One of its primary advantages is the ability to optimize performance through parallelization. An application can leverage the computing power of various hardware architectures in parallel, thus improving its overall performance. Additionally, oneAPI provides a unified programming model that simplifies the development process and reduces development time, enabling developers to focus on optimizing their application’s performance.

Intel Optimizations Powered by oneAPI

Intel optimizes deep learning (DL) frameworks such as TensorFlow and PyTorch with oneDNN, an open source, cross-platform performance library of basic building blocks for DL applications. These optimizations let developers use new hardware features and accelerators on Intel Xeon-based infrastructure and target key performance-intensive operations such as convolution, matrix multiplication, batch normalization, recurrent neural network (RNN) cells, and long short-term memory (LSTM) cells. In graph mode, oneDNN can also fuse compute-bound operations (such as matrix multiplication) with adjacent memory-bound operations (such as bias addition or activation functions) to further speed up computation. oneDNN optimizations are enabled by default in official TensorFlow releases for Linux x86 starting with version 2.9, and they can be toggled with the TF_ENABLE_ONEDNN_OPTS environment variable (0 disables them, 1 enables them).
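To make the idea of operator fusion concrete, here is a conceptual Python sketch of a matrix multiplication followed by a bias add and a ReLU activation, computed once as three separate passes and once as a single fused loop. It only illustrates why fusion saves memory traffic; it is not how oneDNN implements its kernels (those are optimized native code), and pure Python will not show the real speedup.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Compute-bound step: naive matrix multiplication."""
    return [[sum(x[i][k] * w[k][j] for k in range(len(w)))
             for j in range(len(w[0]))]
            for i in range(len(x))]


def add_bias(y: Matrix, b: Vector) -> Matrix:
    """Memory-bound step: one full pass over the intermediate result."""
    return [[v + b[j] for j, v in enumerate(row)] for row in y]


def relu(y: Matrix) -> Matrix:
    """Memory-bound step: another full pass over the intermediate result."""
    return [[max(0.0, v) for v in row] for row in y]


def unfused(x: Matrix, w: Matrix, b: Vector) -> Matrix:
    # Three separate ops: two intermediate matrices are written out and read back.
    return relu(add_bias(matmul(x, w), b))


def fused_matmul_bias_relu(x: Matrix, w: Matrix, b: Vector) -> Matrix:
    # One pass: bias and activation are applied to each output element
    # right after it is computed, so no intermediate matrices are stored.
    out = []
    for i in range(len(x)):
        row = []
        for j in range(len(w[0])):
            acc = sum(x[i][k] * w[k][j] for k in range(len(w)))
            row.append(max(0.0, acc + b[j]))
        out.append(row)
    return out


x = [[1.0, -2.0], [0.5, 3.0]]
w = [[2.0, 0.0], [1.0, -1.0]]
b = [0.5, -0.5]
assert fused_matmul_bias_relu(x, w, b) == unfused(x, w, b)
```

Both versions produce the same result; the difference is that the unfused version materializes two intermediate matrices, while the fused version keeps each value in a local variable until the final output is written.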

Testing the Watson NLP Library for Embed

In testing¹ with oneDNN optimizations, this library’s text and sentiment classification tasks showed the greatest improvements in both duration and function throughput.

On 3rd Gen Xeon processors, the same workload showed a 35% improvement in function throughput for text and sentiment classification. On the latest 4th Gen Xeon processors, the gains were even larger:

“Integrating TensorFlow optimizations powered by Intel oneAPI Deep Neural Network Library into the IBM Watson NLP Library for Embed led to an upwards of 165% improvement in function throughput on text and sentiment classification tasks on 4th Gen Intel Xeon Scalable Processors. This improvement results in shorter duration of inference from the model, leading to quicker response time when embedding Watson NLP Library into our clients’ offerings.”

And according to Wei Li, Intel VP and GM for AI & Analytics, “The IBM Watson NLP Library for Embed is a great product for ecosystem partners looking to infuse Natural Language Processing into their enterprise solutions. Intel is excited to collaborate with IBM on Watson NLP’s adoption of the oneAPI Deep Neural Network Library and Intel’s optimizations for TensorFlow. We look forward to the continued partnership with IBM to explore the next frontiers of NLP and beyond through our software and hardware AI acceleration.”
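The results above are reported as function throughput, but the source does not describe how it was measured. As a hedged sketch, one common approach is to time repeated calls to an inference function, report calls per second, and compare a baseline run against an optimized run. Here `classify_sentiment` is a hypothetical keyword-based stand-in, not the Watson NLP API; in practice you would replace it with the real model call. The warm-up call and best-of-N timing are design choices of this sketch.

```python
import time
from typing import Callable, Sequence


def measure_throughput(fn: Callable[[str], object], inputs: Sequence[str],
                       repeats: int = 3) -> float:
    """Return the best observed calls-per-second rate of fn over inputs."""
    if not inputs:
        raise ValueError("inputs must be non-empty")
    fn(inputs[0])  # warm-up call so one-time setup cost is not timed
    best = 0.0
    for _ in range(repeats):
        start = time.perf_counter()
        for text in inputs:
            fn(text)
        # Guard against a zero reading on coarse clocks.
        elapsed = max(time.perf_counter() - start, 1e-9)
        best = max(best, len(inputs) / elapsed)
    return best


def improvement_pct(baseline: float, optimized: float) -> float:
    """Percentage improvement of optimized throughput over baseline throughput."""
    return (optimized / baseline - 1.0) * 100.0


def classify_sentiment(text: str) -> str:
    """Hypothetical keyword-based stand-in for a real sentiment model."""
    positive = {"great", "good", "excellent"}
    negative = {"bad", "poor", "terrible"}
    words = set(text.lower().split())
    has_pos, has_neg = bool(words & positive), bool(words & negative)
    if has_pos and not has_neg:
        return "positive"
    if has_neg and not has_pos:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    docs = ["The service was great", "Terrible latency", "It arrived on Tuesday"] * 1000
    rate = measure_throughput(classify_sentiment, docs)
    print(f"{rate:,.0f} calls per second")
```

For an A/B comparison with TensorFlow, you could run the same harness in two separate processes, one with TF_ENABLE_ONEDNN_OPTS=0 and one with TF_ENABLE_ONEDNN_OPTS=1 (the variable should be set before TensorFlow is imported), then pass the two rates to `improvement_pct`.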

Continuous AI Advancements

The IBM Watson Natural Language Processing Library helps to develop enterprise-ready solutions through robust AI models, extensive language coverage, and scalable container orchestration. It provides the flexibility to deploy natural language AI in any environment, and clients can take advantage of the improved performance on 4th Gen Intel Xeon Scalable Processors.

The integration of Intel oneAPI tools and IBM Watson’s NLP Library represents a significant breakthrough in AI. Together, oneAPI’s performance optimizations and the Watson NLP Library’s advanced capabilities accelerate NLP tasks and text analysis while delivering state-of-the-art model accuracy.

Learn More: