Yoga Classes Online with AI Pose Estimation

In 2020, MixPose* launched a remote yoga coaching platform powered by AI pose estimation. A previously published article described the benefits and innovations of the product at a high level, so this article takes a deeper dive into the underlying technology powering MixPose.

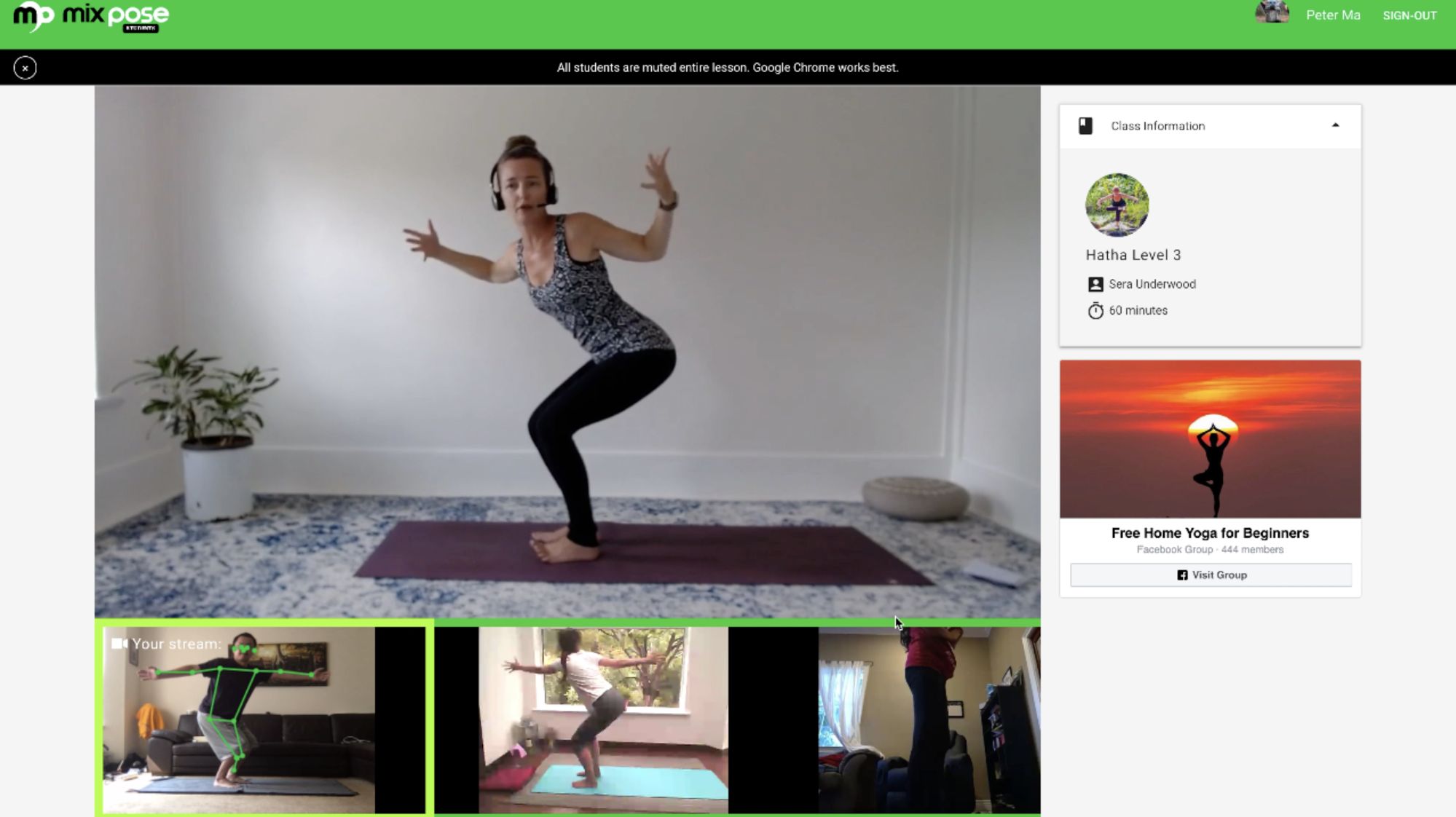

When we conceived MixPose, the primary goal was to combine fully immersive HD content with the real-time interaction capabilities of video conferencing. A platform that marries the best of both worlds would be the foundation on which we could add AI pose estimation to help instructors engage more closely and frequently with their students. Further down the roadmap, AI pose estimation would also help students engage with each other.

Achieving that goal required a number of technologies. The sections below look more closely at each of these enablers.

What is Content Creation?

Live streaming has taken the world by storm, with video game streaming on Twitch* as well as podcasts on YouTube Live*. Driven by the popular open-source streaming software OBS*, a content creator revolution is now firmly under way.

When we think of what comprises ‘content’, yoga and fitness might not seem like natural fits for the term. However, if we drill down to the core of what ‘content’ is, it’s ultimately just an experience. As yoga and fitness classes sprout up online, the similarities between a YouTube video and a fitness class start to materialize, and instructors become content creators.

Since deep technology enablement is not typically a core competency for fitness instructors, MixPose was developed to bridge that gap and give instructors a tool for delivering an AI-powered fitness experience.

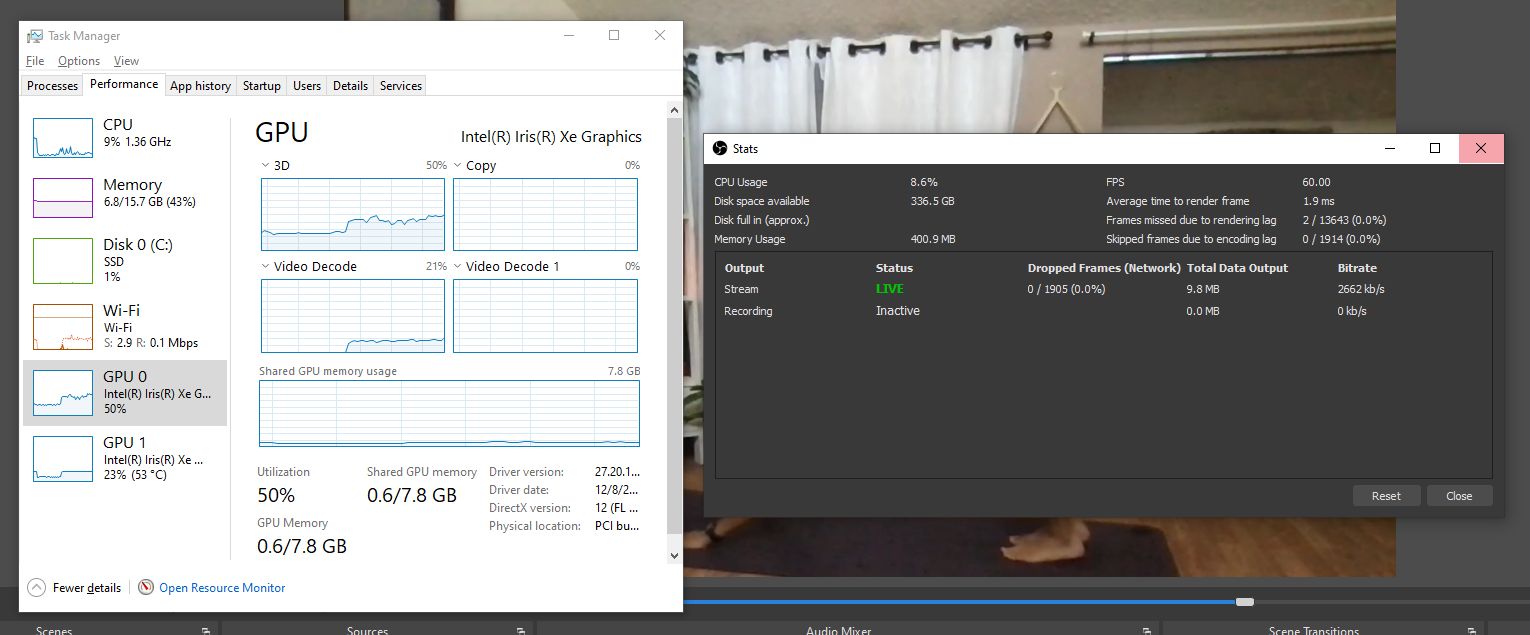

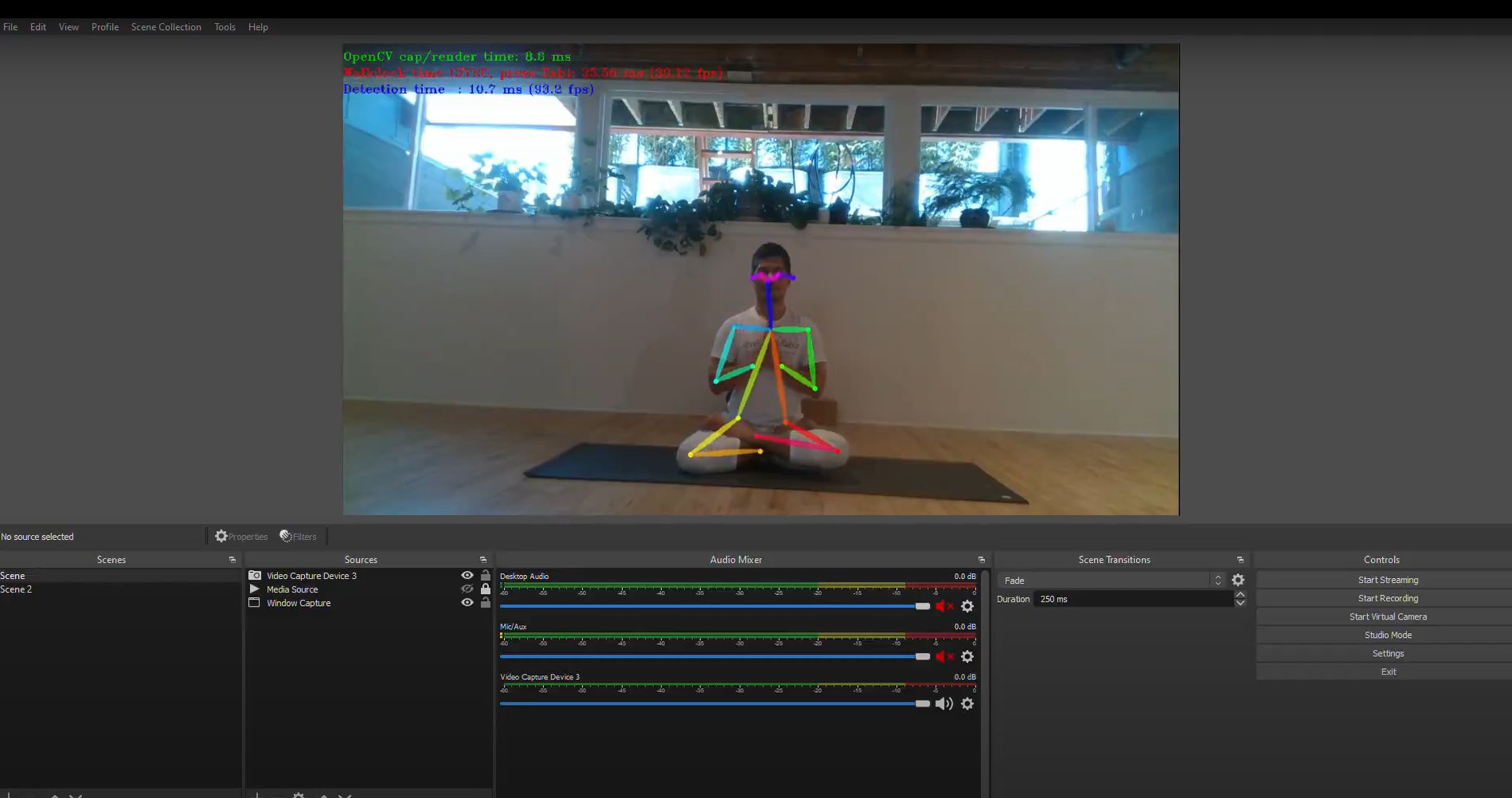

x264 with CPU and Live Encoding

According to VideoLAN*, x264 is “a free software library and application for encoding video streams into the H.264/MPEG-4 AVC compression format, and is released under the terms of the GNU GPL.” It is the most popular encoder that content creators use for live streaming, but it is extremely resource intensive because it encodes HD-quality content in real time. Running any additional programs alongside it may lower the quality of the live stream, causing both frames missed due to rendering lag and frames skipped due to encoding lag.

Another factor that affects the user experience is bitrate. Bitrate is, more formally, the number of bits processed in a specified unit of time, and the bitrate a stream can sustain depends heavily on the upload capacity of the internet connection at the streaming location.
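As a rough illustration of how bitrate relates to upload speed, the sketch below estimates a video bitrate that fits a measured upload speed and the amount of data a class would upload at that rate. The function names, the 75% headroom factor, and the 160 kbps audio allowance are assumptions chosen for illustration; they are not MixPose or OBS settings.

```python
def max_video_bitrate_kbps(upload_mbps: float,
                           audio_kbps: int = 160,
                           headroom: float = 0.75) -> int:
    """Estimate a video bitrate (kbps) that fits a measured upload speed.

    `headroom` and `audio_kbps` are illustrative assumptions, not OBS defaults.
    """
    if upload_mbps <= 0 or not 0 < headroom <= 1:
        raise ValueError("upload_mbps must be positive and headroom in (0, 1]")
    # Use only part of the link so normal fluctuations do not stall the stream.
    usable_kbps = upload_mbps * 1000 * headroom
    # Reserve room for the audio track; never return a negative bitrate.
    return max(0, int(usable_kbps - audio_kbps))


def stream_size_mb(bitrate_kbps: float, minutes: float) -> float:
    """Data uploaded by a stream: bits per second times seconds, in megabytes."""
    total_bits = bitrate_kbps * 1000 * minutes * 60
    return total_bits / 8 / 1_000_000


if __name__ == "__main__":
    video_kbps = max_video_bitrate_kbps(upload_mbps=10)
    print(f"video bitrate: {video_kbps} kbps")
    print(f"60-minute class: {stream_size_mb(video_kbps, 60):.0f} MB uploaded")
```

Under these assumptions, an instructor with a slower connection would get a proportionally lower bitrate, which is why the same encoder settings do not suit every location.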

Intel® Iris® Xe Graphics and Intel® Quick Sync Video

Because live streaming consumes an immense amount of CPU power, our research led us to recommend that instructors use a dedicated GPU as a hardware encoder. Intel® Quick Sync Video was released in 2010, and in the decade since its release, Intel has greatly improved the efficiency of the product. These efficiencies were realized by optimizing the use of hardware encoding as well as software encoding via the integrated GPU. This is an area where Intel® Iris® Xe graphics stands out as a key enabler in achieving maximum performance.

“Designed to leverage Intel’s earlier integrated GPUs, an Intel® Xeon® combined with the Intel® Visual Compute Accelerator Card (Intel® VCA Card), was aimed at the video encoding market, using Intel’s QuickSync media blocks to accelerate the process. Now that Intel has discrete GPUs, they no longer need to gang together CPUs for this market, and instead can sell accelerators with just the GPU,” wrote AnandTech.

With Intel Iris Xe graphics, Intel Quick Sync Video can handle encoding for the entire live stream, freeing the CPU to run other applications. This is very helpful when using additional features during live-streamed classes, such as multiple cameras and scene switching in OBS, without the risk of skipped frames.

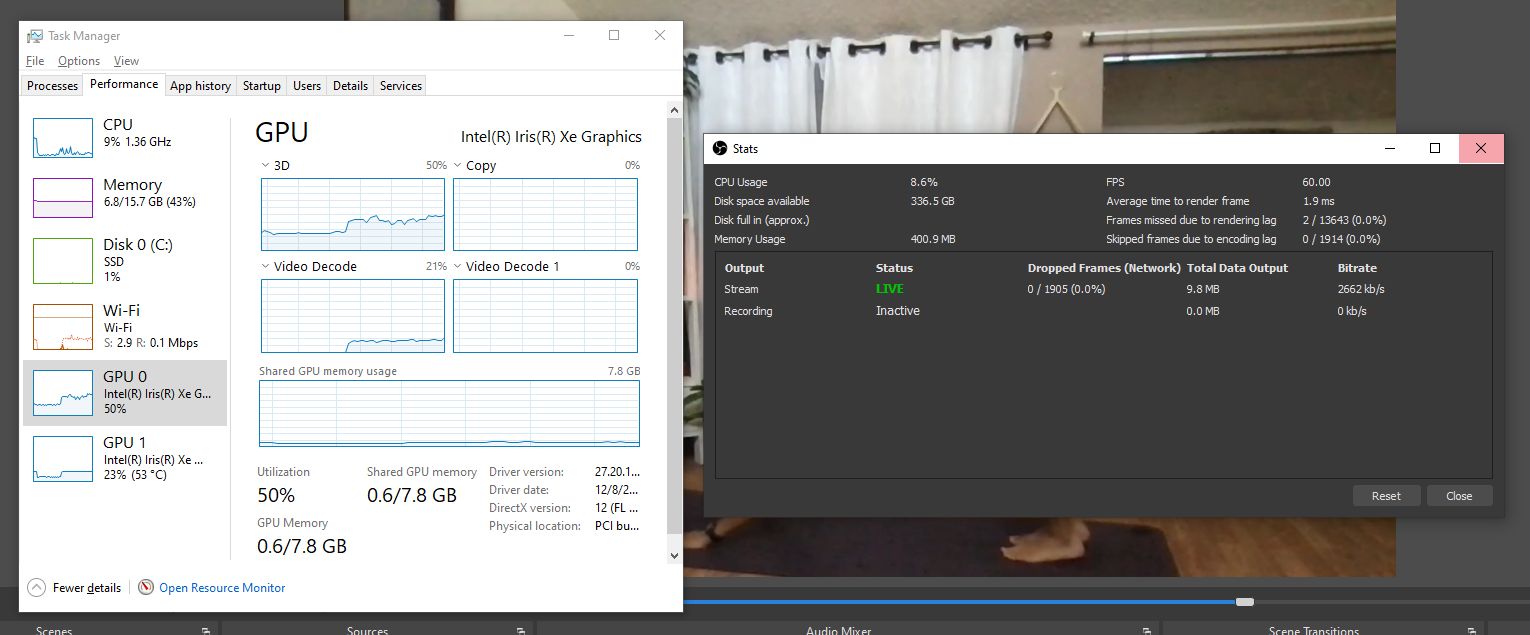

AI Pose Estimation and the Intel® Distribution of OpenVINO™ Toolkit

MixPose uses AI at the edge, running pose estimation on client-side devices. With the power of Intel Iris Xe graphics, we can also experiment with AI pose estimation on the instructor’s side, enabling us to compare and contrast the two.

The Intel® Distribution of OpenVINO™ toolkit provides 3D Human Pose Estimation as part of the dev kit, giving us a starting point for discerning different instructor poses. If the CPU alone runs OBS streaming, the intensive encoding tasks already push it to its limits. This is where the targeted inferencing of OpenVINO™ really shines: we can direct the pose estimation inference to the discrete GPU while the CPU takes care of encoding. This technology, also referred to as Intel® Deep Link, allows Intel Iris Xe graphics to serve as a crucial component in the parallel processing stream used by MixPose.
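The device targeting described above can be sketched in a few lines. This is a minimal illustration, not MixPose's implementation: it assumes a recent OpenVINO Python package (`import openvino as ov`, which differs from the Inference Engine API available when MixPose launched), a hypothetical model path supplied by the caller, and the common but not guaranteed convention that `GPU.1` is the discrete GPU on a machine that also has an integrated one. The helper `pick_inference_device` is our own illustrative function, not part of OpenVINO.

```python
from typing import Sequence

# Assumed preference order: discrete GPU first, then any GPU, then CPU.
# Treating "GPU.1" as the discrete GPU is an assumption; check your own machine.
DEFAULT_PREFERENCE = ("GPU.1", "GPU.0", "GPU", "CPU")


def pick_inference_device(available: Sequence[str],
                          preference: Sequence[str] = DEFAULT_PREFERENCE) -> str:
    """Return the first preferred device name that is actually available."""
    for name in preference:
        if name in available:
            return name
    raise RuntimeError(f"no usable inference device among {list(available)}")


def compile_pose_model(model_xml: str):
    """Compile a pose estimation model on the preferred device.

    `model_xml` is a hypothetical path to an OpenVINO IR file.
    """
    # Imported lazily so the helper above can be used without OpenVINO installed.
    import openvino as ov

    core = ov.Core()
    device = pick_inference_device(core.available_devices)
    model = core.read_model(model_xml)
    # Inference runs on `device`, leaving the CPU free for encoding work.
    return core.compile_model(model, device), device


if __name__ == "__main__":
    # Device list as it might appear on a laptop with integrated and discrete GPUs.
    print(pick_inference_device(["CPU", "GPU.0", "GPU.1"]))
```

Keeping the device choice in a small, separate function makes it easy to fall back to the CPU on machines without a suitable GPU.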

Future MixPose AI Advances on the Horizon

There are many AI features we are aiming to add in the future. As with any video conferencing software, we are likely to run into inappropriate behavior from a handful of bad actors. This is a problem that can be addressed with more AI computing power. Since we can access users’ streams via WebRTC, we will be able to run near-real-time inferencing on the stream content itself and automatically block users who violate the service agreement.

MixPose in Action

The full setup for MixPose uses:

- Intel Iris Xe Max-powered ASUS VivoBook Flip 14 TP470,

- 1 GoPro* camera,

- 1 Intel® RealSense™ camera,

- Jabra Evolve* 65 for the boom mic.

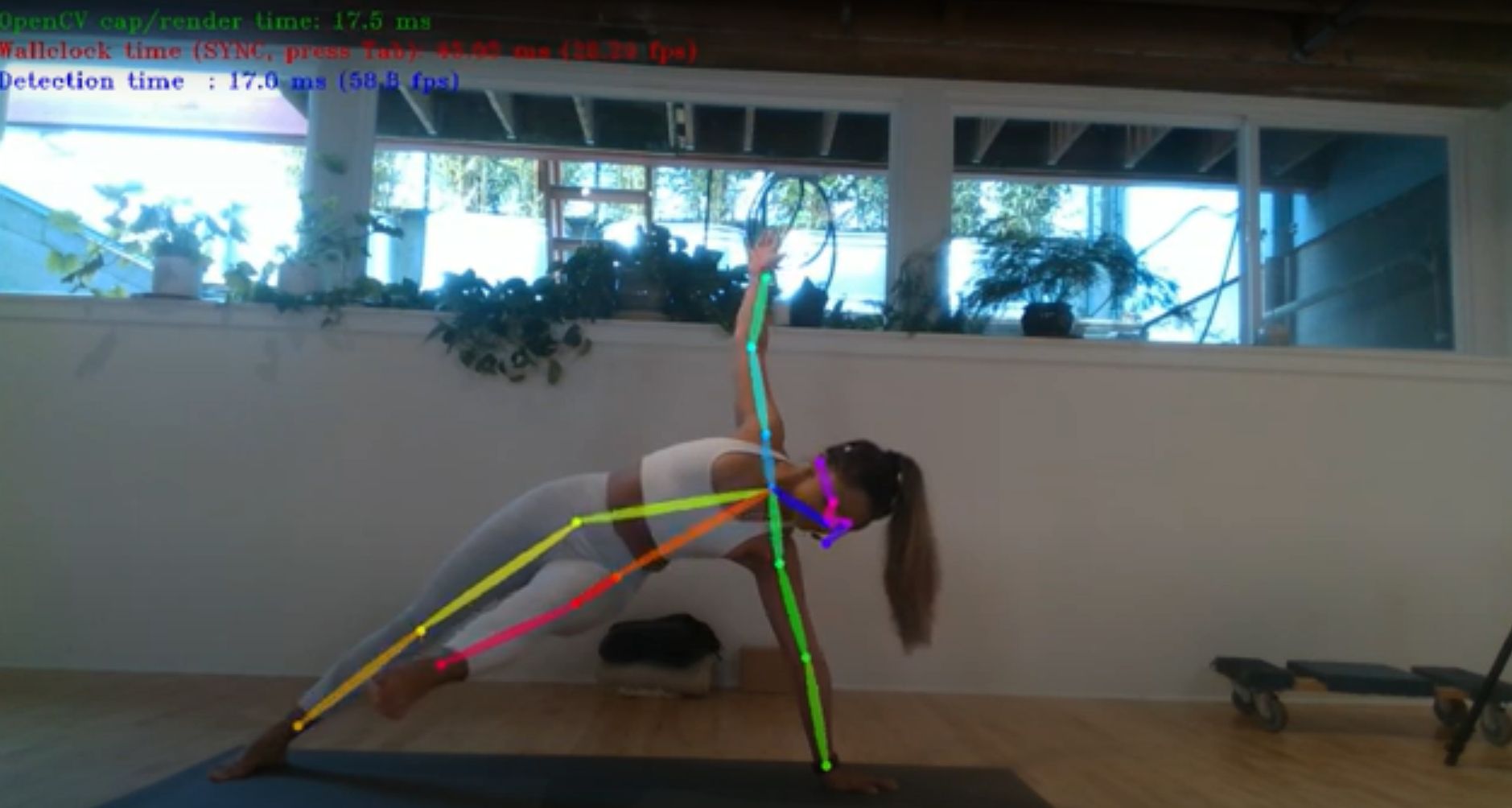

On the software side, we are using an OBS setup with:

- Audio Input Capture,

- Audio Output Capture,

- Video Capture device with the GoPro or

- Video Capture with RealSense Camera,

- Window capture for a second GoPro camera hooked up with OpenVINO pose estimation.

This setup gives us the ability to seamlessly switch between different camera angles.

Under OBS’s output configuration, content creators can select the Intel Quick Sync H.264 encoder option. This allows MixPose to take full advantage of the media encoding power of Intel Iris Xe graphics.

Hope to See You on the Mat

MixPose hosts 3-5 yoga classes daily. To see Intel® Iris® Xe graphics in action, visit MixPose and join a free yoga class.

Basic access to all classes is free: students can take live classes and engage with the instructor over chat. With our premium service, students interact with instructors over video, receive feedback during the class, and get other benefits such as workshops, events, and video-on-demand.

References:

MixPose Site

Anandtech Intel® Iris® Xe Max Architecture Overview

Intel® Iris® Xe Max Overview

Intel® Quick Sync Video Overview

VideoLAN x264 Overview

OpenVINO™ Human Pose Estimation Overview