Edge Computing and Edge Inference Concepts

Edge computing means processing real-time data near the data source, which is considered the edge of the network. Applications run as physically close as possible to the site where the data is generated, instead of in a centralized cloud or data center. For example, if a vehicle automatically calculates fuel consumption from data received directly from its sensors, the computer performing that calculation is called an edge computing device, or simply an "edge device".

| Aspect | Edge Computing | Cloud Computing |
|---|---|---|
| Data processing | Data is processed close to the source. | Data is stored and processed in a central location (usually a data center). |
| Latency | Latency is significantly reduced, which lowers the incidence of failures caused by network lag. Inference at the edge can potentially cut the time to produce a result from a few seconds to a fraction of a second. | Responding to events takes longer because data must travel from the edge to the cloud and back. |
| Security and privacy | Keeping the majority of collected data local reduces system vulnerability. | Cloud computing has a greater attack surface. |
| Power and cost | Using accelerators has the potential to significantly reduce both the cost and the power consumption per inference channel. | Connectivity, data migration, bandwidth, and latency features are expensive. |

Integrating Artificial Intelligence (AI) algorithms into edge computing enables an edge device to continuously infer or predict from the data it receives. This is known as inference at the edge. It enables data-gathering devices, such as sensors, cameras, and microphones, to provide actionable intelligence using AI techniques. It also improves security because the data does not have to be transferred to the cloud. Inference requires a pre-trained deep neural network model. Typical frameworks used to train neural network models include TensorFlow, MXNet, PyTorch, and Caffe. The model is trained by feeding as many data points as possible into a framework to increase its prediction accuracy.

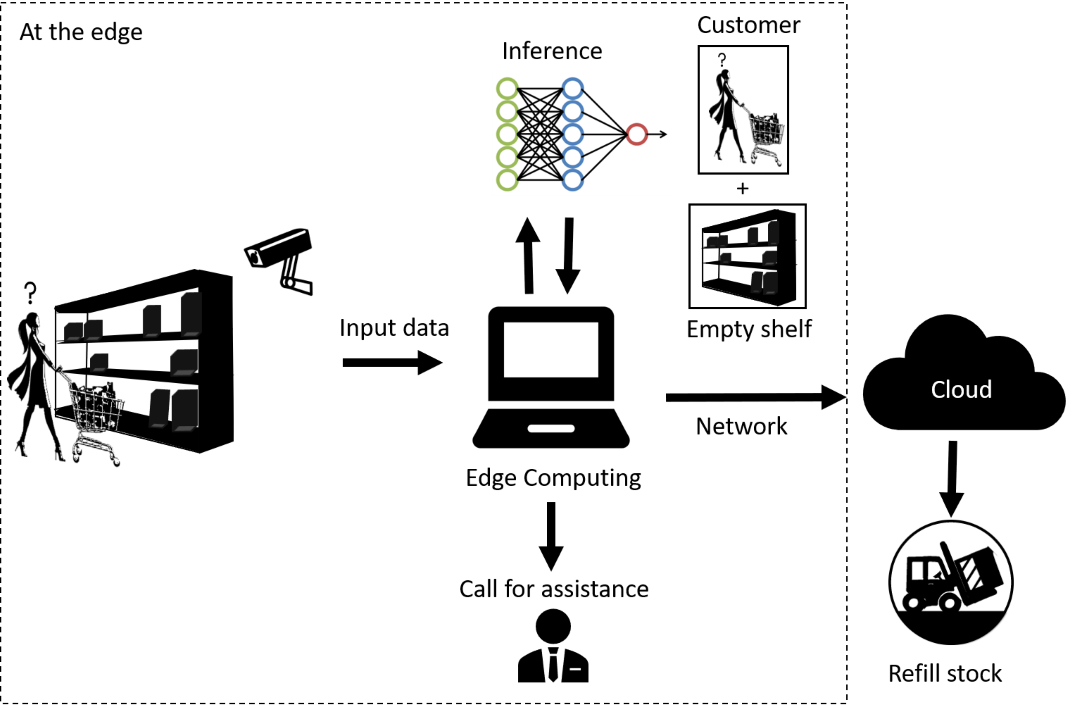

The image above shows an example of how an edge inference system works. An IP camera captures the image of a customer who cannot find the product she wants on the shelf. The input data from the camera is transferred to an edge computing device, which runs inference using a neural network. The output of the inference shows a customer and an empty shelf, which triggers an alert to call for assistance. At the same time, the edge computing device triggers another course of action in the cloud to refill stock.
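The decision step in this flow can be sketched in a few lines of Python. This is a minimal illustration, not the implementation of any particular product: the neural network is assumed to have already produced labeled detections, and the `Detection` class, the `decide_actions` function, the label names, and the action strings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One object reported by the (hypothetical) neural network."""
    label: str
    confidence: float


def decide_actions(detections, threshold=0.5):
    """Map inference output to local and cloud actions.

    The labels "customer" and "empty_shelf" are illustrative assumptions.
    """
    labels = {d.label for d in detections if d.confidence >= threshold}
    actions = []
    if "empty_shelf" in labels:
        # Restocking is handled centrally, so only a small message goes to the cloud.
        actions.append("cloud:request_restock")
        if "customer" in labels:
            # A customer is waiting at the empty shelf: alert staff locally first.
            actions.insert(0, "local:alert_staff")
    return actions


# Example output of one inference pass on a camera frame (made-up values).
frame_result = [Detection("customer", 0.91), Detection("empty_shelf", 0.84)]
print(decide_actions(frame_result))
# prints ['local:alert_staff', 'cloud:request_restock']
```

Only the short action messages leave the device in this sketch. The camera frames stay at the edge, which matches the privacy and bandwidth arguments made earlier.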

How Edge Inference Benefits the Market Segments

Smart Cities

Capturing and analyzing data in real time at the edge significantly reduces the time and the amount of raw data transferred to the cloud, and enables necessary actions to be taken instantly. Edge inference systems can automatically contact the authorities about crimes and emergencies. Public transportation users can be informed instantly on their mobile devices of any delays or breakdowns. Drivers can look for parking in crowded areas and pay through a mobile app without interrupting their current tasks. Electricity and water consumption can be tracked and monitored so that maintenance can be carried out before breakdowns happen.

Read more

- iOmniscient Delivers AI-based, Multisensory, Smart Surveillance Analytics Powered by Intel® Architecture

- Videonetics Turns Video Data Into Smart City Decision-making with Intel® Architecture

Retail

Edge inference can be used for many kinds of data analytics, such as consumer personality, inventory, customer behavior, loss prevention, and demand forecasting. These analytics work by collecting and analyzing consumers' shopping patterns, so that specific product information can be shown at a specific time to improve the shopping experience. Retailers can keep data storage secure locally, with access restricted to specific personnel, and share only specific information in the cloud. Self-checkout POS and online payment, combined with visual analytics and RFID detection, can prevent losses from shoplifting and reduce queues in the store.

Read more

- Hudson News: Simplify, Optimize, Personalize Sales

- Prevent Store Losses and Keep an Eye on Business: AI at the Edge

Industrial/Manufacturing

Real-time data processing through inference at the edge allows defect detection on parts that are hard for human eyes to inspect and in places that humans cannot access. When a defect in a manufacturing line is detected early, the root cause can be identified and failures fixed before the production line breaks down. This can significantly reduce costs and increase process quality. Manufacturing companies can run their production lines 24/7 with minimal downtime and relieve humans of dangerous and repetitive tasks that may lead to health issues or resignations.

Read more

- ThyssenKrupp: An End-to-End IIoT Platform

- DeepSight and Intel Help Tire Manufacturer Speed Up Flaw Detection

Healthcare

Medical imaging with edge inference allows medical specialists to uncover diseases faster, because inference can accurately identify abnormalities in living cells at an early stage of disease. Specialists can then perform tests and make a diagnosis earlier, reducing the chance of fatality. With data stored at the edge, patients' information can be accessed immediately at the point of care.


Architecture Considerations

Throughput

For image workloads, throughput measured in inferences per second or samples per second is a good metric because it indicates peak performance and efficiency. These figures are commonly reported by benchmarks such as MLPerf, often using reference models such as ResNet-50. Knowing the throughput required by the use cases in your market segments helps determine the processors and applications in your design.
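A throughput figure of this kind can be obtained with a simple timing loop. The sketch below is a minimal illustration rather than a benchmark harness: `measure_throughput` and `fake_infer` are hypothetical names, and the stand-in model only sleeps for about 2 ms instead of running a real network.

```python
import time


def measure_throughput(infer, samples, warmup=2):
    """Return inferences per second for `infer` over `samples`."""
    for sample in samples[:warmup]:
        infer(sample)  # warm-up runs are excluded from the timing
    start = time.perf_counter()
    for sample in samples:
        infer(sample)
    elapsed = time.perf_counter() - start
    return len(samples) / elapsed


def fake_infer(sample):
    """Stand-in for a real model call; sleeps about 2 ms per sample."""
    time.sleep(0.002)
    return 0


rate = measure_throughput(fake_infer, list(range(50)))
print(f"{rate:.0f} inferences/second")
```

On real hardware, `infer` would wrap the actual model call, and the sample list would contain representative input images so that the result reflects the target use case.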

Latency

Latency is a critical parameter in edge inference applications, especially in manufacturing and autonomous driving, where real-time response is necessary. Images or events need to be processed and responded to within milliseconds. Both the hardware architecture and the software framework architecture influence system latency, so understanding the system architecture and choosing the right software framework is important.
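Because a real-time system has to meet its deadline on nearly every request, latency is usually checked at the tail rather than at the average. The following sketch times each call separately and compares the 99th percentile with a latency budget. The function names, the 1 ms stand-in model, and the 50 ms budget are assumptions chosen for illustration.

```python
import math
import time


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_report(infer, samples, budget_ms):
    """Time each call separately and compare the tail latency to a budget."""
    latencies_ms = []
    for sample in samples:
        start = time.perf_counter()
        infer(sample)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    p50 = percentile(latencies_ms, 50)
    p99 = percentile(latencies_ms, 99)
    return {"p50_ms": p50, "p99_ms": p99, "within_budget": p99 <= budget_ms}


def fake_infer(sample):
    """Stand-in for a real model call; sleeps about 1 ms."""
    time.sleep(0.001)


report = latency_report(fake_infer, list(range(100)), budget_ms=50.0)
print(report)
```

A large gap between the median and the 99th percentile often points to contention elsewhere in the system, which is one reason the hardware and the software framework need to be evaluated together.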

Precision

Training a neural network often uses high precision, such as 32-bit or 64-bit floating point, to reach a given level of accuracy faster when processing large data sets. This complex operation usually requires dedicated resources because of its high processing power and large memory utilization. Inference, in contrast, can achieve similar accuracy with lower-precision multiplications because the process is simpler, the model has been optimized, and the data has been compressed. Inference does not need as much processing power or memory as training, so resources can be shared with other operations to reduce cost and power consumption. Using cores optimized for the different precision levels of matrix multiplication also helps increase throughput, reduce power, and improve the overall efficiency of the platform.
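The effect of lower precision can be illustrated with a small dot product, the basic operation inside matrix multiplication. The sketch below assumes symmetric 8-bit integer quantization, which is one common low-precision scheme; the text above does not name a specific format. The weights and inputs are made-up values, and `quantize_int8` is a hypothetical helper.

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to the int8 range [-127, 127]."""
    scale = max(abs(v) for v in values) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero input: any scale works
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dot(a, b):
    """Plain multiply-accumulate over two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


# Illustrative values only, not taken from a real model.
weights = [0.42, -1.30, 0.07, 0.88]
inputs = [1.5, 0.25, -2.0, 0.6]

q_w, s_w = quantize_int8(weights)
q_x, s_x = quantize_int8(inputs)

exact = dot(weights, inputs)
# Integer multiply-accumulate, then a single floating-point rescale at the end.
approx = dot(q_w, q_x) * s_w * s_x

print(f"floating-point result: {exact:.4f}")
print(f"int8-based result:     {approx:.4f}")
print(f"absolute error:        {abs(exact - approx):.4f}")
```

The multiply-accumulate loop works entirely on small integers, which is the part that precision-optimized cores accelerate. Only the final rescale needs floating point.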

Power consumption

Power is a critical factor when choosing processors, power management, memory, and other hardware for your solution. Some edge inference solutions, such as safety surveillance systems or portable medical devices, rely on batteries. Power consumption also determines the thermal design of the system. A design that can operate without additional cooling components, such as a fan or heat sink, can lower the product cost.
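For a battery-powered design, two quick estimates are often useful: the energy spent per inference and the runtime on a given battery. The sketch below shows the arithmetic with hypothetical numbers (5 W average power, 100 inferences per second, a 20 Wh battery). It assumes constant power draw and ignores conversion losses and battery aging.

```python
def energy_per_inference_mj(avg_power_w, throughput_ips):
    """Energy per inference in millijoules: power (W) / rate (1/s) * 1000."""
    return avg_power_w / throughput_ips * 1000.0


def battery_life_hours(battery_wh, avg_power_w):
    """Idealized runtime in hours at a constant average power draw."""
    return battery_wh / avg_power_w


# Hypothetical figures chosen only to show the calculation.
print(f"{energy_per_inference_mj(5.0, 100.0):.1f} mJ per inference")  # 50.0 mJ per inference
print(f"{battery_life_hours(20.0, 5.0):.1f} hours")                   # 4.0 hours
```

Comparing candidate processors by energy per inference, rather than by peak power alone, accounts for the fact that a faster device may finish the same work with less total energy.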

Design scalability

Design scalability is the ability of a system to be expanded for future market needs without having to be redesigned or reconfigured. It also covers the effort of deploying the solution in multiple places. Most edge inference solution providers use heterogeneous systems whose components can be written in different languages and run on different operating systems and processors. Packaging your code and all its dependencies into container images also helps you deploy your application quickly and reliably on any platform, regardless of location.

Use case requirements

Understanding how your customers use the solution determines the features that your solution should support. The following are some examples of use case requirements for different market segments.

| Market segment | Use case requirements |
|---|---|
| Industrial/Manufacturing | |
| Retail | |
| Medical/Healthcare | |
| Smart city | |