Overview

The Automated Self-Checkout application aims to improve shoppers' experiences by expediting checkout. This tutorial shows how to create an automated self-checkout application by following the Jupyter* Notebook in the GitHub* repository, using the following stack of technologies: OpenVINO™ toolkit, Ultralytics YOLOv8*, and Roboflow* Supervision.

This application offers a short and easily modifiable implementation to detect and track objects within zones and provide real-time analytics data regarding whether objects were added or removed. With the flexibility of zones, retailers can define custom zones depending on the type of self-checkout they would like to employ:

- Self-checkout counters with designated areas for placing, removing, and bagging items.

- Zones on shelves to identify how many objects are removed from shelves for loss prevention or inventory management.

- Zones in shopping carts to identify how many items users add or remove from their carts.

OpenVINO helps developers overcome common restrictions in AI development and deployment by minimizing inference time, the time it takes to process input data and produce a prediction. You can quantify these time savings with the benchmarking step later in this tutorial and by running real-world benchmarks on your own setup.

This application uses the same YOLOv8 model and optimization process as another application you can try, Intelligent Queue Management, and approaches zone definition in a similar way.

Prerequisites

To complete this tutorial, you need the following:

- Python* 3.8 or later installed on your system

- Git installed on your system

- A webcam or IP camera connected to your system

- Supported hardware that aligns with the OpenVINO toolkit system requirements

Step 1: Clone the Repository

To install the required libraries and tools and clone the automated self-checkout repository to your system, use the following commands:

sudo apt install git git-lfs gcc python3-venv python3-dev

git clone -b recipes https://github.com/openvinotoolkit/openvino_notebooks.git openvino_notebooks

This clones the repository into the openvino_notebooks folder. Next, navigate to the application folder:

cd openvino_notebooks/recipes/automated_self_checkout

Pull the video sample:

git lfs pull

This application runs in a Python virtual environment. If you do not have the virtual environment package installed, run the following:

sudo apt-get update

sudo apt-get upgrade

sudo apt-get install python3-venv

If your Intel® CPU has integrated graphics, install the Intel® Graphics Compute Runtime for oneAPI Level Zero and OpenCL™ Driver to enable inference on the integrated GPU. On Ubuntu* 20.04, run:

sudo apt-get install intel-opencl-icd

Next, prepare your virtual environment.

Step 2: Create and Activate a Virtual Environment

To avoid affecting your system-wide Python installation, it is a best practice to isolate a Python project in its own environment. To set up a virtual environment, open a terminal or command prompt and navigate to the directory where you want to create the environment. Then run the following command to create a new virtual environment:

For UNIX*-based operating systems like Linux* or macOS*, use:

python3 -m venv venv

For Windows*, use:

python -m venv venv

This creates a new virtual environment, venv, in the current folder. Next, activate the environment you just created. The command used depends on your operating system.

For Unix-based operating systems like Linux or macOS, use:

source venv/bin/activate

For Windows, use:

venv\Scripts\activate

This activates the virtual environment and changes your shell prompt to show the name of the active environment.
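To confirm from Python, for example inside a notebook kernel, that the virtual environment is active, you can compare sys.prefix with sys.base_prefix. The following is a minimal sketch; the function name in_virtualenv is hypothetical and not part of the repository.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the running interpreter belongs to a virtual environment.

    Inside an environment created with `python -m venv`, sys.prefix points at
    the venv folder, while sys.base_prefix still points at the base Python
    installation the venv was created from.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
```

If this prints False inside the notebook, JupyterLab was most likely launched from outside the activated environment.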

Step 3: Install the Requirements

The Automated Self-Checkout application depends on several packages that must be installed before it can run. They are listed in the included requirements.txt file and can be installed with pip, the Python package installer:

python -m pip install --upgrade pip

pip install -r requirements.txt

All dependencies are now installed. Next, convert and optimize the model before running the application.
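As an optional sanity check, a short script can confirm that the main libraries used in this tutorial are importable before you open the notebooks. This sketch assumes the import names openvino, ultralytics, supervision, and cv2 (OpenCV); it does not read requirements.txt, so adjust the list if your requirements differ. The helper name missing_modules is hypothetical.

```python
import importlib.util

# Assumed import names for the main libraries used in this tutorial;
# adjust the tuple to match the contents of requirements.txt.
REQUIRED_MODULES = ("openvino", "ultralytics", "supervision", "cv2")


def missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be found, without importing them."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are installed.")
```

Using importlib.util.find_spec keeps the check fast, because it only locates each top-level module instead of importing heavy libraries such as OpenVINO.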

Step 4: Launch Jupyter* Notebook

In addition to the Jupyter Notebooks, the repository includes scripts that ease production deployment. These scripts contain the same code as the notebooks and let you convert models and run the Automated Self-Checkout application directly from the command line, which is particularly helpful for automating and scaling your AI solutions in a production environment.

This tutorial uses a Jupyter Notebook.

Before converting and optimizing the YOLOv8 model, launch JupyterLab by running the following command inside the automated_self_checkout folder:

jupyter lab docs

JupyterLab opens the docs directory that contains the notebooks.

Step 5: Convert and Optimize the Model

Open the convert-and-optimize-the-model notebook in the docs folder. The notebook contains detailed instructions to download and convert the YOLOv8 model. This prepares the model for use with the OpenVINO toolkit.

The following notebook snippet demonstrates how to convert the model using the export method:

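from ultralytics import YOLO

det_model = YOLO("models/yolov8n.pt")  # assumed location of the YOLOv8n PyTorch weights

# export model to OpenVINO format

out_dir = det_model.export(format="openvino", dynamic=False, half=True)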

- det_model represents the YOLOv8 object detection model.

- format is set to "openvino" to export the model in OpenVINO format.

- dynamic parameter is set to False, indicating that you're exporting the model with fixed input sizes (that is, a static model). A static model does not allow changes in the dimensions of the input tensors between different inference runs.

- half is set to True to use half-precision floating-point format (FP16) for the model weights.

This converts the YOLOv8 model to OpenVINO Intermediate Representation (IR) format, which stores a model as an .xml file describing the network topology and a .bin file containing the weights. IR is the common format OpenVINO uses to represent deep learning models, allowing the toolkit to optimize the model and run efficient inference across a variety of hardware platforms.
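Because an IR model is stored as a matching .xml and .bin pair, a small helper can locate the model file that the export step wrote to out_dir. This is a sketch: find_ir_model is a hypothetical name, and it assumes the export folder contains a single IR model.

```python
from pathlib import Path


def find_ir_model(export_dir):
    """Return the .xml path of the OpenVINO IR model in export_dir.

    An IR model is a pair of files that share a stem: an .xml file with the
    network topology and a .bin file with the weights. Other files in the
    folder (such as metadata) are ignored. Raises FileNotFoundError if no
    complete .xml/.bin pair is found.
    """
    for xml_path in sorted(Path(export_dir).glob("*.xml")):
        if xml_path.with_suffix(".bin").exists():
            return xml_path
    raise FileNotFoundError(f"no .xml/.bin IR pair found in {export_dir}")
```

For example, model_xml = find_ir_model(out_dir) returns a path you could pass as str(model_xml) to openvino.Core().read_model() if you want to load the IR with the OpenVINO runtime API directly instead of through Ultralytics.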

Step 6: Run the Automated Self-Checkout Application

Open the run-the-application notebook in the docs folder. This notebook contains detailed instructions to run the Automated Self-Checkout application, analyze the video stream, and detect and count items in each designated zone.

Load Your YOLOv8 Model

For this application, load your OpenVINO YOLOv8 model with FP16 precision directly via the Ultralytics API to use the tracking algorithms Ultralytics provides. The following code snippet from the notebook demonstrates this:

from ultralytics import YOLO

model = YOLO('models/yolov8n_openvino_model/')

Define a Zone

A zone represents a specific area in the video and tells the application which areas it should focus on and which it can ignore. Zones are defined in a file that contains an array of JSON objects, each representing a zone with a unique ID, a set of points defining the polygonal region, and an optional label to describe the area. This configuration file is essential for the application to accurately monitor zones in the video stream.
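The exact schema of the repository's zones.json is not reproduced here, so the following sketch assumes the key names id, points, and label, matching the description above. It parses such a file and rejects duplicate IDs and polygons with fewer than three points. The key names, the example coordinates, and the load_zones function are all hypothetical.

```python
import json

# Hypothetical zones.json content: an array of zone objects, each with a
# unique "id", a "points" list of [x, y] pixel vertices, and an optional
# "label". The real key names used by the notebook may differ.
EXAMPLE_ZONES = """
[
  {"id": 0, "points": [[100, 200], [400, 200], [400, 500], [100, 500]], "label": "scan area"},
  {"id": 1, "points": [[500, 200], [800, 200], [800, 500], [500, 500]]}
]
"""


def load_zones(text):
    """Parse zone definitions into {zone_id: {"points": [(x, y), ...], "label": str}}."""
    zones = {}
    for zone in json.loads(text):
        zone_id = zone["id"]
        if zone_id in zones:
            raise ValueError(f"duplicate zone id: {zone_id}")
        points = [tuple(p) for p in zone["points"]]
        if len(points) < 3:
            raise ValueError(f"zone {zone_id} needs at least 3 points")
        # The label is optional, so fall back to an empty string.
        zones[zone_id] = {"points": points, "label": zone.get("label", "")}
    return zones
```

To read the file from disk, pass its contents, for example load_zones(Path("zones.json").read_text()) after importing Path from pathlib.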

When using video input, first extract a frame using the following block of code:

import cv2

import supervision as sv

VID_PATH = "video.mov"

# read the first frame of the video and save it for drawing zones

generator = sv.get_video_frames_generator(VID_PATH)

frame = next(generator)

cv2.imwrite("frame.jpg", frame)

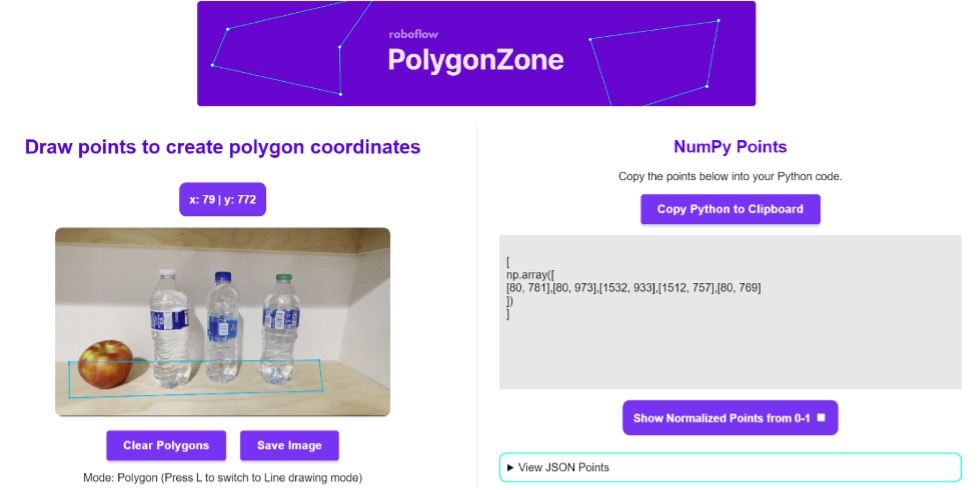

Then, use the Roboflow PolygonZone tool to draw points on the frame and obtain the polygon coordinates, and add these coordinates to the zones.json file.
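Once a zone's polygon is defined, the application needs to decide whether each detected object lies inside it. Supervision provides zone utilities for this, so you do not need to write your own; the sketch below only illustrates the underlying geometry with a standard ray-casting point-in-polygon test. Using the bottom-center of a bounding box as the object's position is an assumption of this sketch, and both function names are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: return True if point (x, y) lies inside polygon.

    polygon is a list of (x, y) vertices in order; the last vertex is
    implicitly connected back to the first. Points exactly on an edge may
    be classified either way.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does a horizontal ray from (x, y) toward +x cross this edge?
        # The check also guarantees y1 != y2, so the division is safe.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def box_anchor(box):
    """Bottom-center point of an (x1, y1, x2, y2) box, used as its position."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, y2)
```

An odd number of edge crossings means the point is inside, which is why the test also works for concave zones such as an L-shaped counter area.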

Define Helper Functions

There are three helper functions that support the self-checkout pipeline.

- The draw_text() function uses OpenCV to overlay text on the frames; you can easily change the text to suit your needs.

- The get_iou() function calculates the Intersection over Union (IoU) score between the bounding boxes of detected objects, using each box's xyxy coordinates (top-left x and y, bottom-right x and y).

- The interest_bboxes() function helps you understand the interactions between bounding boxes and identify what is causing objects to be added or removed.
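The IoU score is the area where two boxes overlap divided by the area they cover together, so it is 1.0 for identical boxes and 0.0 for boxes that do not touch. The following sketch computes it for boxes in xyxy format. The repository's interest_bboxes() signature is not shown here, so overlapping_boxes is a hypothetical stand-in that returns the boxes overlapping a target box above an assumed IoU threshold.

```python
def get_iou(box_a, box_b):
    """Intersection over Union of two boxes in xyxy format (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Width or height is clamped to 0 when the boxes do not overlap
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def overlapping_boxes(target, boxes, threshold=0.1):
    """Hypothetical stand-in for interest_bboxes(): indices of boxes whose IoU
    with target is at least threshold, highest IoU first."""
    scored = [(get_iou(target, b), i) for i, b in enumerate(boxes)]
    return [i for iou, i in sorted(scored, reverse=True) if iou >= threshold]
```

For example, two 10×10 boxes offset by half their width overlap in 50 square pixels out of a combined 150, giving an IoU of about 0.33.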

Run the Application with the Main Processing Loop

Now you can run the Automated Self-Checkout application with the main processing loop, which processes the video stream, detects objects, and counts the objects in each zone. The loop uses the loaded YOLOv8 model and OpenVINO to perform object detection.

To run the application, first set the video path and zones configuration.

The application analyzes the video stream, detects objects in each zone, and assigns a tracking ID to each detected object. It then post-processes the results by filtering the detected objects based on the confidence threshold and the object class.
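With Ultralytics, each result's boxes attribute exposes the values needed for this step as xyxy, conf, cls, and id. To keep the example independent of the model, the sketch below uses a hypothetical Detection dataclass and filter_detections function; the 0.5 default threshold is an assumption, not the notebook's value.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Minimal stand-in for one tracked detection; field names are illustrative."""
    box: tuple          # (x1, y1, x2, y2) in pixels
    confidence: float   # detector score in [0, 1]
    class_id: int       # index into the model's class list
    track_id: int       # ID assigned by the tracker


def filter_detections(detections, conf_threshold=0.5, allowed_classes=None):
    """Keep detections that meet the confidence threshold and, if
    allowed_classes is given, belong to one of those class IDs."""
    return [
        d for d in detections
        if d.confidence >= conf_threshold
        and (allowed_classes is None or d.class_id in allowed_classes)
    ]
```

For example, passing an allowed_classes set that omits class 0 (person in the COCO dataset that the pretrained YOLOv8 models are trained on) keeps shoppers themselves from being counted as items.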

Next, run the self_checkout loop. This loop uses the OpenVINO toolkit to detect objects in the video file, associates each detected object with a tracking ID, and counts the objects in each zone.

The loop takes the path to the video file, the path to the model file, and the zone definitions as inputs, and calls model.track() to detect and track objects. The output video shows the detected objects with zone annotations overlaid, along with a notification when objects are interacted with.
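Reporting additions and removals comes down to comparing the set of tracking IDs inside each zone from one frame to the next: IDs that appear are additions and IDs that disappear are removals. The following is a minimal sketch of that idea with a hypothetical ZoneTracker class; it is not the notebook's implementation.

```python
from collections import defaultdict


class ZoneTracker:
    """Remember which tracking IDs were in each zone on the previous frame
    and report what was added or removed on the current frame."""

    def __init__(self):
        # zone_id -> set of tracking IDs seen in that zone on the previous frame
        self.previous = defaultdict(set)

    def update(self, zone_id, track_ids):
        """Record the current frame's IDs for a zone.

        Returns (count, added, removed), with added and removed as sorted lists.
        """
        current = set(track_ids)
        added = current - self.previous[zone_id]
        removed = self.previous[zone_id] - current
        self.previous[zone_id] = current
        return len(current), sorted(added), sorted(removed)
```

Trackers can briefly lose an ID when an object is occluded, so a more robust version would likely wait until an ID has been absent for several consecutive frames before reporting a removal.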

You now have a functional Automated Self-Checkout system that can be used in retail checkout scenarios to improve customer satisfaction and increase sales.

Step 7: Extend the Kit for Improved Accuracy in Complex Environments

There is increasing diversity in the types of self-checkout use cases. Several possible extensions can help improve the accuracy of your model in complex environments:

- Fine-tune your model to improve accuracy for object detection and classification of specific objects.

- Augment your vision pipeline with additional models, such as a text classification model to obtain more specific classes. Many vision checkout developers also include a barcode recognition model in their pipeline, alongside the object detection model, to keep inventory of objects.

- Adjust the angle and view of your camera setup to ensure objects are fully visible in the frame.

- Adopt a multicamera setup to capture additional camera angles and track objects across the frames captured by each camera. For details on how to accomplish this with the Ultralytics APIs, see the Ultralytics API documentation.

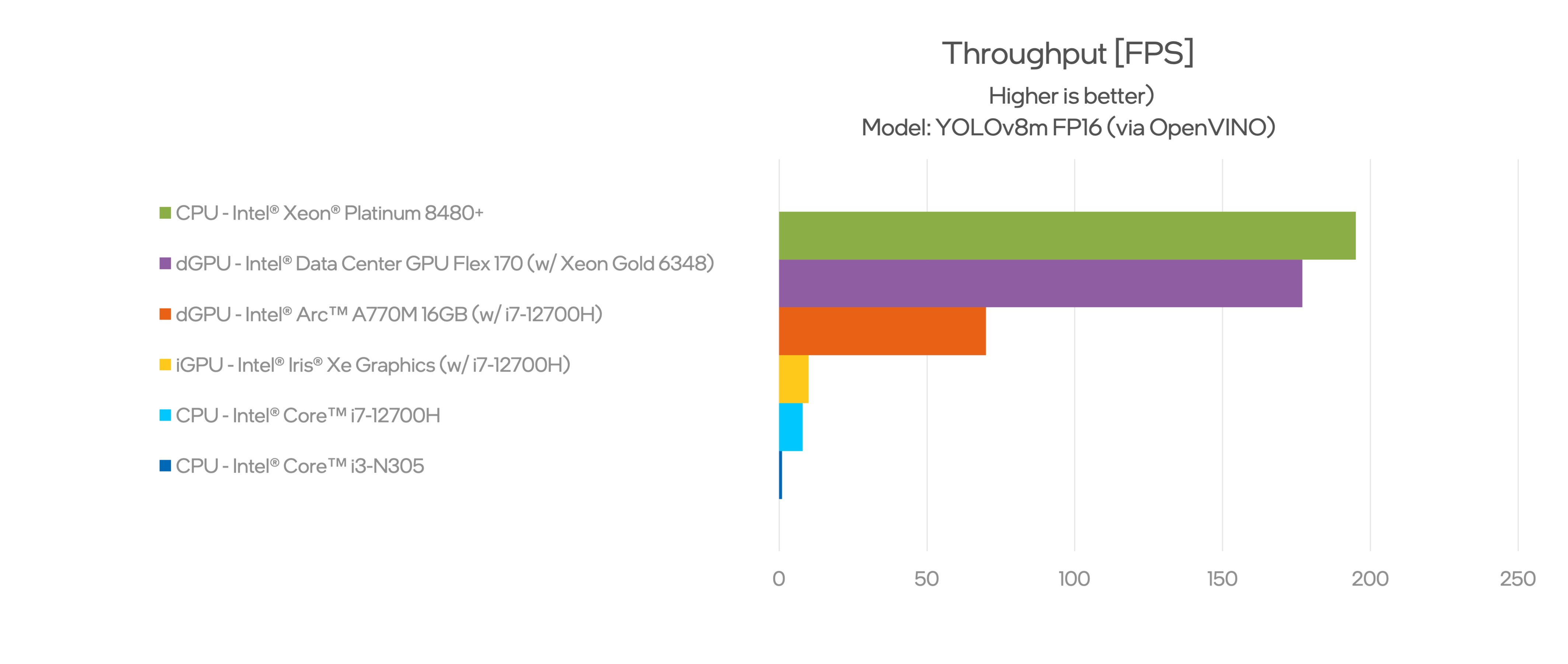

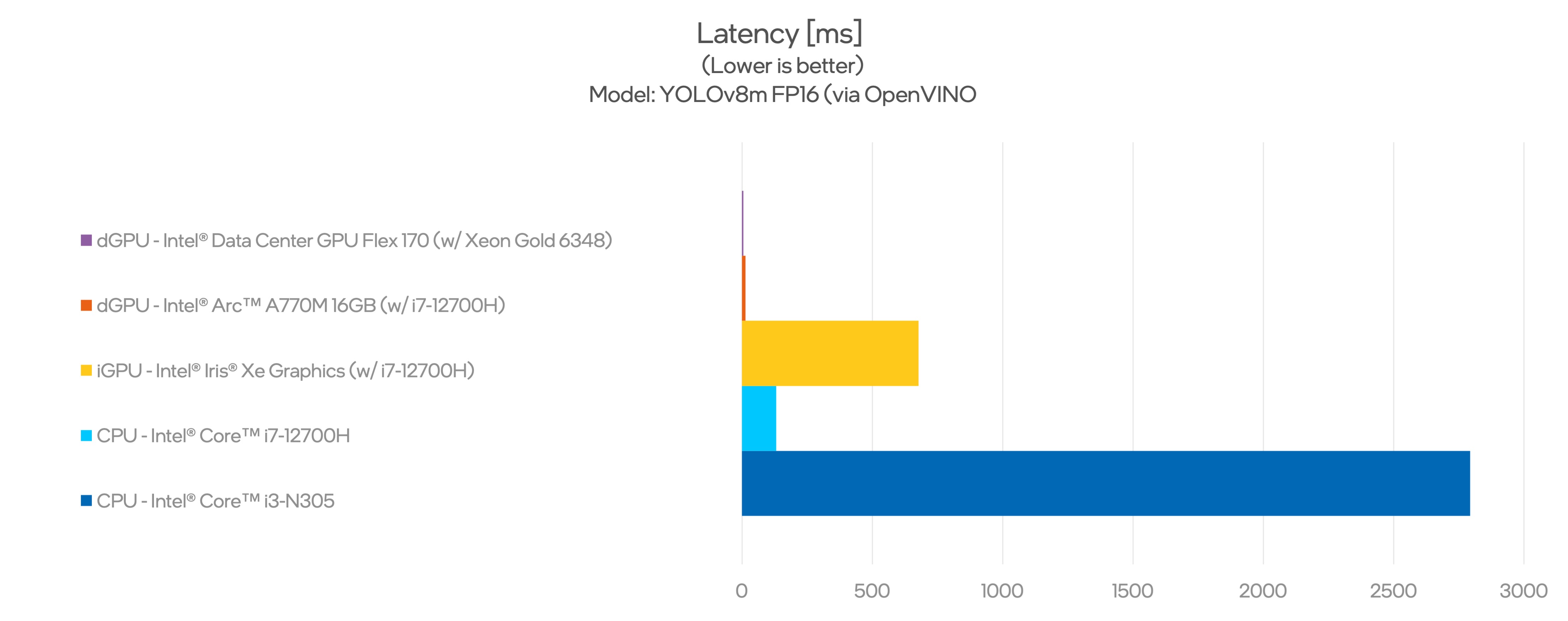

Step 8: Performance Benchmark with Benchmark_App

To evaluate the effectiveness of your YOLOv8 model optimization, use the OpenVINO toolkit benchmark_app, which provides insight into the model's real-world performance and allows you to plan for deployment.

Note: Benchmark results represent the model's theoretical maximum performance. Actual performance may vary depending on factors such as video decoding, pre- and post-processing, and system load, so test the model in your deployment environment to understand its real-world performance.

To run the benchmarking, use the following command:

!benchmark_app -m $model_det_path -d $device -hint latency -t 30

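In a notebook, the leading ! runs the line as a shell command, and $model_det_path and $device are replaced with the values of the Python variables of the same names. Set model_det_path to the path of your FP16 model's .xml file and device to the device you're using (such as CPU or GPU). This command runs inference on the model for 30 seconds and reports latency and throughput statistics.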

Note: This command does not explicitly set the input data shape; the -hint latency option tells OpenVINO to configure inference for low latency.

For a comprehensive overview of available options and to explore additional command-line options, run benchmark_app --help.
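Because the repository also ships scripts for command-line deployment, you may want to run the same benchmark from plain Python rather than with a notebook's ! syntax. The following minimal sketch assumes benchmark_app is on your PATH inside the virtual environment, as the notebook command implies, and only uses the -m, -d, -hint, and -t options shown above. The function names benchmark_command and run_benchmark are hypothetical.

```python
import shlex
import subprocess


def benchmark_command(model_path, device="CPU", hint="latency", seconds=30):
    """Build the benchmark_app argument list used in this step."""
    return [
        "benchmark_app",
        "-m", str(model_path),   # path to the IR .xml file
        "-d", device,            # target device, such as CPU or GPU
        "-hint", hint,           # performance hint
        "-t", str(seconds),      # benchmark duration in seconds
    ]


def run_benchmark(model_path, device="CPU"):
    """Run benchmark_app and return its console output as a string."""
    cmd = benchmark_command(model_path, device)
    print("Running:", shlex.join(cmd))
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, print(run_benchmark("models/yolov8n_openvino_model/yolov8n.xml", "GPU")) would benchmark the exported model on the integrated GPU, assuming the export wrote the IR to that path. Passing an argument list instead of a single string avoids shell quoting problems with paths that contain spaces.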

Conclusion

Automated self-checkout is one of the technologies transforming industries such as retail. This Edge AI Reference Kit provides fundamental building blocks for object detection and tracking, which you can use to build and deploy a self-checkout application with Intel® software and hardware acceleration. The solution is easily extendable to other problems that require object detection, object counting, and decision-making, such as counting the number of medical devices in a zone at a hospital or the number of cars in a parking lot.

If you have questions, things you want to share, or want to start developing on your own with OpenVINO, use the following resources:

Intel Community Support Channel

OpenVINO Documentation

For more detailed information on performance benchmarks, visit GitHub.