Overview

Intel® MPI Library provides a high-performance implementation of the Message Passing Interface (MPI) standard. Microsoft Azure* is a cloud service provider where you can build virtual machines to run jobs without requiring on-premise HPC infrastructure. This article describes how to run MPI-based programs on Azure virtual machines (VMs) using Intel® MPI Library. These instructions currently cover only Linux* virtual machines; Windows* virtual machine instructions will follow in the future.

Cluster Configuration

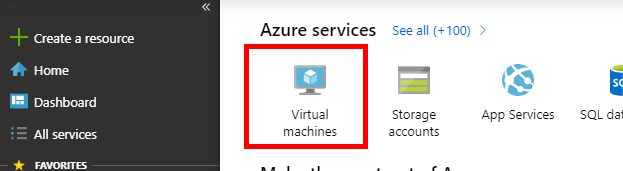

Please see Microsoft’s documentation for deploying an HPC cluster on Azure, which provides guidance on how to configure and deploy a virtual cluster. Within this guidance, configure your system based on your application and workload requirements. As an example, to create a CentOS 7.5 H8m virtual machine, start by selecting Virtual Machines from the Azure portal.

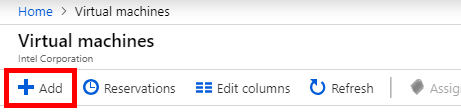

On the Virtual Machines page, click Add to begin configuring a new virtual machine.

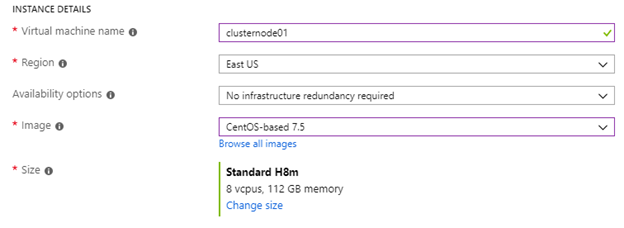

Select the following options for the virtual machine to get a CentOS 7.5 H8m virtual machine:

Ensure that your subnet is defined consistently for every node in your cluster. In this example, the subnet is the 10.0.0.0/24 network.
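As a quick sanity check on each node, the sketch below reports whether the addresses assigned to the machine fall inside the example 10.0.0.0/24 subnet. The function name `in_example_subnet` is hypothetical, and the prefix match only works because the example uses a /24 network; a different subnet would need a different pattern.

```shell
# Return success if the given IPv4 address lies in 10.0.0.0/24 (the example subnet).
# A simple prefix match is enough for a /24 network.
in_example_subnet() {
    case "$1" in
        10.0.0.*) return 0 ;;
        *)        return 1 ;;
    esac
}

# hostname -I prints the addresses assigned to this machine (Linux only).
for ip in $(hostname -I 2>/dev/null); do
    if in_example_subnet "$ip"; then
        echo "$ip is in 10.0.0.0/24"
    else
        echo "$ip is outside 10.0.0.0/24"
    fi
done
```

Running this on every virtual machine should show one address inside the subnet per node if the cluster is configured as in this example.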

Intel® MPI Library Installation

Once you have deployed your virtual cluster, installing Intel® MPI Library is similar to installing it on a typical on-premise system. Ensure that the installer and any necessary license files are available on the system. You will also need to decide whether to install to a single location with shared access from the virtual machines or locally on each virtual machine.

If you are going to be developing software on the virtual machine, you will need to install the full software development kit, either as a standalone installer or as part of Intel® Parallel Studio XE. The installation and license files can be obtained via the Intel® Registration Center. Compiling applications on a cloud system should work identically to compilation on a local system.

If you are only going to be running pre-compiled applications on the virtual system, you can use the runtime-only version. This is available either as a standalone tool (check the Runtime Environment Kit section of the Intel® MPI Library Features) or as part of the Intel® Parallel Studio XE Runtimes.

Environment and Job Setup

Before running, ensure that you have met all of the prerequisite steps for Intel® MPI Library. In addition, it is frequently easier to work with hostnames rather than IP addresses. To do so, edit the /etc/hosts file on each node so that every host is listed along with its private IP address. The private IP address of each virtual machine can be obtained from the Azure portal. Microsoft provides documentation on IP address types on Azure and on configuring private IP addresses for Azure virtual machines, which can help you understand how IP addresses are used in Azure.
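The following sketch shows what the /etc/hosts entries might look like for a two-node cluster. The hostnames `node0` and `node1` and the addresses 10.0.0.4 and 10.0.0.5 are assumptions chosen to match the example 10.0.0.0/24 subnet; use the names and private IP addresses shown for your own virtual machines in the Azure portal. The entries are written to a temporary file first so they can be reviewed before anything touches /etc/hosts.

```shell
# Hypothetical node names and private IPs on the example 10.0.0.0/24 subnet.
HOSTS_SNIPPET="$(mktemp)"
cat > "$HOSTS_SNIPPET" <<'EOF'
10.0.0.4 node0
10.0.0.5 node1
EOF

# Review the entries, then append them on every node (requires root):
#   sudo sh -c "cat $HOSTS_SNIPPET >> /etc/hosts"
cat "$HOSTS_SNIPPET"
```

After the entries are in place on every node, a command such as `ping -c 1 node1` from `node0` should resolve the name to the private address.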

Once connectivity is established, you can run your MPI job just as you would on a traditional cluster. Set up your environment variables using either psxevars.sh or mpivars.sh.
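As a minimal sketch, the environment script can be sourced as shown below. The path is an assumption based on a common default installation prefix; it depends on the product version and on where you chose to install, so point `MPIVARS` at the actual location of mpivars.sh (or psxevars.sh) on your system.

```shell
# Assumed default install location; override MPIVARS if your installation differs.
MPIVARS="${MPIVARS:-/opt/intel/compilers_and_libraries/linux/mpi/intel64/bin/mpivars.sh}"

if [ -f "$MPIVARS" ]; then
    # Source (not execute) the script so the variables persist in this shell.
    . "$MPIVARS"
    echo "Intel MPI environment loaded from $MPIVARS"
else
    echo "Environment script not found at $MPIVARS; set MPIVARS to the correct path" >&2
fi
```

The script has to be sourced in every shell that launches MPI jobs, so it is often convenient to add the line to a login script on each node.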

Ensure your hosts file contains all of the virtual machines to be used.
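For illustration, assuming this refers to the host list passed to the MPI launcher, the file could be created as follows. The file name `hosts` and the hostnames `node0` and `node1` are hypothetical; list one line per virtual machine, using names that resolve on every node.

```shell
# Hypothetical hostnames; replace with the names of your virtual machines.
cat > hosts <<'EOF'
node0
node1
EOF

# Show the file so the node list can be checked before launching a job.
cat hosts
```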

As a test, run the Intel® MPI Benchmarks, an MPI benchmark suite included with the Intel® MPI Library.

A simple ping-pong test, such as the PingPong benchmark in this suite, verifies basic functionality and performance.
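One way to launch such a test is sketched below. It assumes the Intel MPI environment has already been set up in the current shell and that a host list named `hosts` exists in the working directory; both the file name and the choice of two ranks are assumptions for this example, not values taken from a specific cluster.

```shell
# Host list to use; "hosts" is an assumed file name.
HOSTFILE="${HOSTFILE:-hosts}"

if command -v mpirun >/dev/null 2>&1 && [ -f "$HOSTFILE" ]; then
    # 2 ranks in total, 1 rank per node, so the messages cross the network
    # between two virtual machines instead of staying in shared memory.
    mpirun -n 2 -ppn 1 -hostfile "$HOSTFILE" IMB-MPI1 PingPong
else
    echo "mpirun or $HOSTFILE not available; set up the environment and host list first" >&2
fi
```

Placing one rank on each node is a deliberate choice here: with both ranks on the same virtual machine, the benchmark would measure intra-node communication rather than the Azure network.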