Overview

This article describes how to configure containers to take advantage of Open vSwitch* with the Data Plane Development Kit (OvS-DPDK). With the rise of cloud computing, it’s increasingly important to get the most out of your server’s resources, and these technologies help us achieve that goal. To begin, we will install Docker* (v1.13.1), DPDK (v17.05.2) and OvS (v2.8.0) on Ubuntu* 17.10. We will also configure OvS to use the DPDK. Finally, we will use iPerf3 to compare the network throughput of Docker's default networking with that of OvS-DPDK.

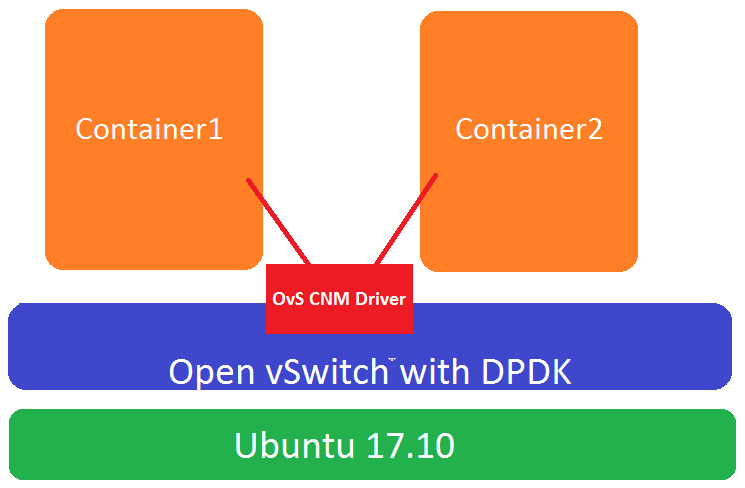

We configure Docker to create a logical switch using OvS-DPDK, and then connect two Docker containers to the switch. We then run a simple iPerf3 OvS-DPDK test case. The following diagram captures the setup.

Test Configuration

Installing Docker and OvS-DPDK

Run the following commands to install Docker and OvS-DPDK.

sudo apt install docker.io

sudo apt install openvswitch-switch-dpdk

After installing OvS-DPDK, we will update Ubuntu to use OvS-DPDK and restart the OvS service.

sudo update-alternatives --set ovs-vswitchd /usr/lib/openvswitch-switch-dpdk/ovs-vswitchd-dpdk

sudo systemctl restart openvswitch-switch.service

Configure Ubuntu* 17.10 for OvS-DPDK

The system used in this demo is a two-socket, 22 core-per-socket server enabled with Intel® Hyper-Threading Technology (Intel® HT Technology), giving us a total of 44 physical cores. The CPU model used is an Intel® Xeon® processor E5-2699 v4 @ 2.20 GHz. To configure Ubuntu for optimal use of OvS-DPDK, we will change the GRUB* command-line options that are passed to Ubuntu at boot time for our system. To do this we edit the following config file:

/etc/default/grub

Change the setting GRUB_CMDLINE_LINUX_DEFAULT to the following:

GRUB_CMDLINE_LINUX_DEFAULT="default_hugepagesz=1G hugepagesz=1G hugepages=16 hugepagesz=2M hugepages=2048 iommu=pt intel_iommu=on isolcpus=1-21,23-43,45-65,67-87"

This makes GRUB aware of the new options to pass to Ubuntu during boot time. We set isolcpus so that the Linux* scheduler will run on only two physical cores. Later, we will allocate the remaining cores to the DPDK. Also, we set the number of pages and page size for hugepages. For details on why hugepages are required and how they can help to improve performance, refer to the section called Use of Hugepages in the Linux Environment in the System Requirements chapter of the Getting Started Guide for Linux at dpdk.org.

Note: The isolcpus setting varies depending on how many cores are available per CPU.
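To illustrate the note above, here is a minimal sketch that builds the isolcpus value for a given core count. It assumes logical CPUs are numbered in consecutive, equal-sized blocks (socket 0, socket 1, then their hyper-thread siblings), as on the demo system, and that the first CPU of each block is left to the Linux scheduler. The helper name isolcpus_list is hypothetical; check your own layout with lscpu -e before relying on it.

```shell
#!/bin/sh
# isolcpus_list BLOCK_SIZE BLOCKS
# Prints an isolcpus= value that isolates every logical CPU except the
# first CPU of each block. Hypothetical helper, not part of any package.
# Assumption: CPUs are numbered in consecutive blocks of BLOCK_SIZE.
isolcpus_list() {
    block_size=$1
    blocks=$2
    out=""
    b=0
    while [ "$b" -lt "$blocks" ]; do
        first=$(( b * block_size + 1 ))        # skip the block's first CPU
        last=$(( (b + 1) * block_size - 1 ))   # last CPU in the block
        out="${out}${out:+,}${first}-${last}"
        b=$(( b + 1 ))
    done
    printf '%s\n' "$out"
}

# Demo system: 22 cores per socket, 2 sockets x 2 threads = 4 blocks.
isolcpus_list 22 4   # prints 1-21,23-43,45-65,67-87
```

On a system with a different core count or CPU numbering scheme, the arguments (and possibly the block assumption itself) need to change.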

Also, we edit /etc/dpdk/dpdk.conf to specify the number of hugepages to reserve on system boot. Uncomment and change the setting NR_1G_PAGES to the following:

NR_1G_PAGES=8

Depending on the system memory size and the problem size of your DPDK application, you may increase or decrease the number of 1G pages. Use of hugepages increases performance, because fewer pages are needed and less time is spent on Translation Lookaside Buffer (TLB) lookups.
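When sizing the hugepage pools, it helps to know how much memory they take away from the rest of the system. The following sketch adds up the two pools requested on the GRUB command line shown earlier; the variable names are illustrative only.

```shell
#!/bin/sh
# Page counts taken from the GRUB_CMDLINE_LINUX_DEFAULT line in this article.
pages_1g=16     # hugepagesz=1G hugepages=16
pages_2m=2048   # hugepagesz=2M hugepages=2048

# Convert both pools to MiB and add them up.
total_mib=$(( pages_1g * 1024 + pages_2m * 2 ))
echo "Hugepages reserved at boot: ${total_mib} MiB"
```

With these values, the two pools together reserve 20480 MiB (20 GiB) that ordinary processes can no longer use, so adjust the counts to fit your installed memory.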

After both files have been updated, run the following commands:

sudo update-grub

sudo reboot

Reboot to apply the new settings. If needed, during the boot enter the BIOS and enable:

- Intel® Virtualization Technology (Intel® VT) for IA-32, Intel® 64 and Intel® architecture

- Intel VT for Directed I/O

After logging back into your Ubuntu session, create a mount path for your hugepages:

sudo mkdir -p /mnt/huge

sudo mkdir -p /mnt/huge_2mb

sudo mount -t hugetlbfs none /mnt/huge

sudo mount -t hugetlbfs none /mnt/huge_2mb -o pagesize=2MB

sudo mount -t hugetlbfs none /dev/hugepages

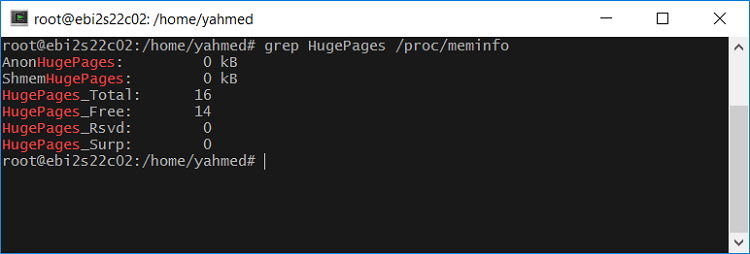

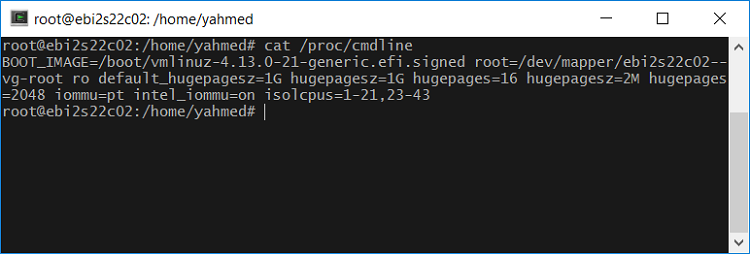

To ensure that the changes are in effect, run the commands below:

grep HugePages_ /proc/meminfo

cat /proc/cmdline

If the changes took place, your output from the above commands should look similar to the image below:

grep: hugepages output

cat: /proc/cmdline output
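The same check can be scripted. The sketch below uses two hypothetical helpers, hugepages_total and cmdline_has, that read /proc/meminfo and /proc/cmdline by default. Note that the HugePages_ lines in /proc/meminfo describe only the default hugepage size (1G with the settings above).

```shell
#!/bin/sh
# hugepages_total [FILE]: print the HugePages_Total value from a
# meminfo-style file (default: /proc/meminfo). Hypothetical helper.
hugepages_total() {
    awk '/^HugePages_Total:/ { print $2 }' "${1:-/proc/meminfo}"
}

# cmdline_has OPTION [FILE]: succeed if the kernel command line
# (default: /proc/cmdline) contains OPTION as a whole word.
cmdline_has() {
    grep -qw -- "$1" "${2:-/proc/cmdline}"
}

# Report on the running system, if the proc files are readable.
if [ -r /proc/meminfo ] && [ -r /proc/cmdline ]; then
    echo "HugePages_Total: $(hugepages_total)"
    for opt in iommu=pt intel_iommu=on isolcpus; do
        if cmdline_has "$opt"; then
            echo "found:   $opt"
        else
            echo "missing: $opt"
        fi
    done
fi
```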

Configuring OvS-DPDK Settings

To initialize the OvS database, a one-time step, we will run the command sudo ovs-vsctl --no-wait init. The OvS database will contain user-set options for OvS and the DPDK. To pass arguments to the DPDK, we will use the command-line utility as follows:

sudo ovs-vsctl set Open_vSwitch . <argument>

Additionally, the OvS-DPDK package relies on the following config files:

- /etc/dpdk/dpdk.conf – Configures hugepages

- /etc/dpdk/interfaces – Configures/assigns network interface cards (NICs) for DPDK use

Next, we configure OvS to use DPDK with the following command:

sudo ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true

After the OvS is set up to use DPDK, we will change one OvS setting, two important DPDK configuration settings, and bind our NIC devices to the DPDK.

DPDK settings

- dpdk-lcore-mask: Specifies the CPU cores on which dpdk lcore threads should be spawned. A hex string is expected.

- dpdk-socket-mem: Comma-separated list of memory to preallocate from hugepages on specific sockets.

OvS settings

- pmd-cpu-mask: PMD (poll-mode driver) threads can be created and pinned to CPU cores by explicitly specifying pmd-cpu-mask. These threads poll the DPDK devices for new packets instead of having the NIC driver send an interrupt when a new packet arrives.

Use the following commands to configure these settings:

sudo ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-lcore-mask=0xfffffbffffe

sudo ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-socket-mem="1024,1024"

sudo ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=0x800002

For dpdk-lcore-mask we used a mask of 0xfffffbffffe to specify the CPU cores on which the dpdk-lcore threads should spawn. For more information about masking, read Mask (computing). In this mask, bits 1-21 and 23-43 are set, so on our system the dpdk-lcore threads spawn on cores 1-21 and 23-43; cores 0 and 22 are left out and remain reserved for the Linux scheduler. Similarly, for the pmd-cpu-mask, we used the mask 0x800002, which sets bits 1 and 23 and therefore pins one PMD thread to core 1 on non-uniform memory access (NUMA) Node 0 and one PMD thread to core 23 on NUMA Node 1. Finally, since we have a two-socket system, we allocate 1 GB of memory per NUMA Node; that is, "1024,1024". For a single-socket system, the string would be "1024".
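Because hex masks are easy to get wrong by hand, a small sketch for converting between core lists and masks may help. The helper names cores_to_mask and mask_to_cores are hypothetical, and the sketch assumes core numbers below 63 so that the mask fits in the shell's signed 64-bit arithmetic.

```shell
#!/bin/sh
# cores_to_mask CORE...: print the hex mask with one bit set per core.
# Hypothetical helper; assumes every core number is below 63.
cores_to_mask() {
    mask=0
    for core in "$@"; do
        mask=$(( mask | (1 << core) ))
    done
    printf '0x%x\n' "$mask"
}

# mask_to_cores MASK: print the core numbers whose bits are set in MASK.
mask_to_cores() {
    mask=$(( $1 ))
    core=0
    out=""
    while [ "$mask" -gt 0 ]; do
        if [ $(( mask & 1 )) -eq 1 ]; then
            out="${out}${out:+ }${core}"
        fi
        mask=$(( mask >> 1 ))
        core=$(( core + 1 ))
    done
    printf '%s\n' "$out"
}

mask_to_cores 0x800002                    # prints: 1 23
cores_to_mask $(seq 1 21) $(seq 23 43)    # prints: 0xfffffbffffe
```

Decoding a mask before passing it to ovs-vsctl is a quick way to confirm that it selects the cores you intended.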

Binding Devices to DPDK

To bind your NIC device to the DPDK, run the dpdk-devbind command. For example, to unbind eth1 from its current driver and bind it to the vfio-pci driver, run dpdk-devbind --bind=vfio-pci eth1. Before using the vfio-pci driver, run modprobe to load it and its dependencies.

This is what it looked like on my system, with 2 x 10 Gb interfaces available:

sudo modprobe vfio-pci

sudo dpdk-devbind --bind=vfio-pci enp3s0f0

sudo dpdk-devbind --bind=vfio-pci enp3s0f1

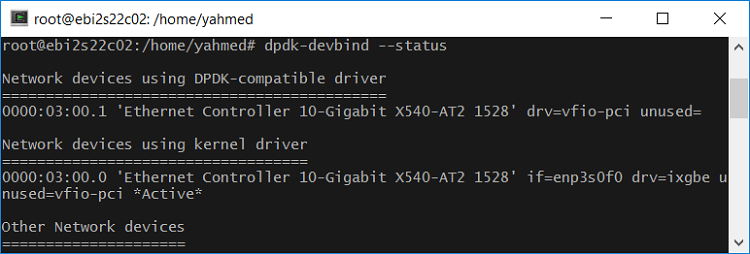

To check whether the NIC cards you specified are bound to the DPDK, run the command:

sudo dpdk-devbind --status

If all is correct, you should have an output similar to the image below:

Binding of NIC

Configuring Open vSwitch with Docker

To have Docker use OvS as a logical networking switch, we must complete a few more steps. First we must install Open Virtual Network (OVN) and Consul*, a distributed key-value store. To do this, run the commands below. With OVN and Consul we will run in overlay mode, which makes a multi-tenant, multi-host network possible.

sudo apt install ovn-common

sudo apt install ovn-central

sudo apt install ovn-host

sudo apt install ovn-docker

sudo apt install python-openvswitch

wget https://releases.hashicorp.com/consul/1.0.6/consul_1.0.6_linux_amd64.zip

unzip consul_1.0.6_linux_amd64.zip

sudo mv consul /usr/local/bin

The Open Virtual Network* (OVN) is a system that supports virtual network abstraction. This system complements the existing capabilities of OVS to add native support for virtual network abstractions, such as virtual L2 and L3 overlays and security groups. Services such as DHCP are also desirable features. Just like OVS, OVN’s design goal is to have a production-quality implementation that can operate at significant scale.

Once we have OVN and Consul installed we will:

- Stop the docker daemon.

- Set up the consul agent.

- Restart the docker daemon.

- Configure OVN in overlay mode.

Consul key-value store

To start a Consul key-value store, open another terminal and run the following command:

mkdir /tmp/consul

sudo consul agent -ui -server -data-dir /tmp/consul -advertise 127.0.0.1 -bootstrap-expect 1

This creates a consul agent that will only advertise locally, expect only one server in the consul cluster, and set the directory for the agent to save its state. A Consul key-store is needed for service discovery for multi-host, multi-tenant configurations.

An agent is the core process of Consul. The agent maintains membership information, registers services, runs checks, responds to queries, and more. The agent must run on every node that is part of a Consul cluster.

Restart docker daemon

Once the consul agent has started, open another terminal and restart the Docker daemon with the following arguments and with $HOST_IP set to the local IPv4 address:

sudo dockerd --cluster-store=consul://127.0.0.1:8500 --cluster-advertise=$HOST_IP:0

The --cluster-store option tells the Engine the location of the key-value store for the overlay network. More information can be found on Docker Docs.

Configure ovn in overlay mode

To configure OVN in overlay mode, follow the instructions at:

http://docs.openvswitch.org/en/latest/howto/docker/#the-overlay-mode

After OVN has been set up in overlay mode, install Python* Flask and start the ovn-docker-overlay-driver.

The ovn-docker-overlay-driver uses the Python Flask module to listen to Docker’s networking API calls.

sudo pip install flask

sudo ovn-docker-overlay-driver --detach

To create the logical switch using OvS-DPDK that Docker will use, run the following command:

sudo docker network create -d openvswitch --subnet=192.168.22.0/24 ovs

To list the logical network switch run:

sudo docker network ls

Pull iperf3 Docker Image

To download the iperf3 docker container, run the following command:

sudo docker pull networkstatic/iperf3

Later we will create two iperf3 containers for a multi-tenant, single-host benchmark.

Default versus OvS-DPDK Networking Benchmark

First we will generate an artificial workload on our server, to simulate servers in production. To do this we install the following:

sudo apt install stress

After installation, run stress with the following arguments, or a set of arguments, to put a meaningful load on your server:

sudo stress -c 6 -i 6 -m 6 -d 6

-c, --cpu N spawn N workers spinning on sqrt()

-i, --io N spawn N workers spinning on sync()

-m, --vm N spawn N workers spinning on malloc()/free()

-d, --hdd N spawn N workers spinning on write()/unlink()

--hdd-bytes B write B bytes per hdd worker (default is 1GB)

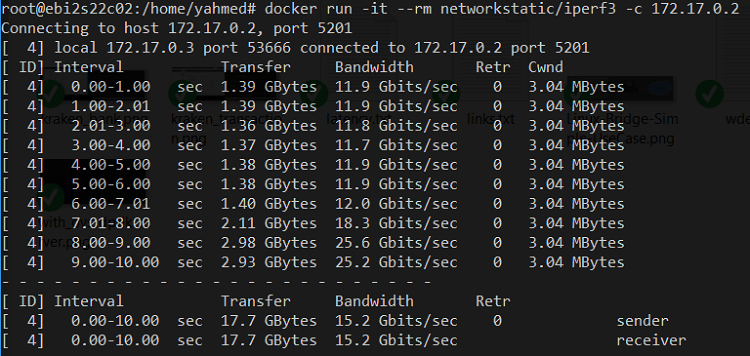

Default docker networking test

With our system under load, our first test will be to run the iperf3 container using Docker’s default network configuration, which uses Linux bridges. To benchmark throughput using Docker's default networking, open two terminals. In the first terminal, run the iperf3 container in server mode.

sudo docker run -it --rm --name=iperf3-server -p 5201:5201 networkstatic/iperf3 -s

Open another terminal, and then run iperf3 in client mode.

docker inspect --format "{{ .NetworkSettings.IPAddress }}" iperf3-server

sudo docker run -it --rm networkstatic/iperf3 -c ip_address

We obtain the Docker-assigned IP address of the container named "iperf3-server" and then create another container in client mode, passing that IP address as the argument.
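The two client-side steps can also be combined so that the address does not have to be copied by hand. This is a minimal sketch: the function name run_iperf3_client is hypothetical, and it assumes your user can run docker without sudo (otherwise run the two docker commands inside it with sudo).

```shell
#!/bin/sh
# run_iperf3_client [SERVER_NAME]
# Looks up the server container's IP address and starts an iperf3 client
# against it. Hypothetical helper; assumes docker runs without sudo and
# that the server container is attached to Docker's default network.
run_iperf3_client() {
    name=${1:-iperf3-server}
    ip=$(docker inspect --format '{{ .NetworkSettings.IPAddress }}' "$name") || return 1
    if [ -z "$ip" ]; then
        echo "no IP address found for container $name" >&2
        return 1
    fi
    docker run --rm networkstatic/iperf3 -c "$ip"
}

# Usage, with the server container from the first terminal running:
#   run_iperf3_client iperf3-server
```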

Using Docker's default networking, we were able to achieve the network throughput shown below:

iperf3 benchmark default networking.

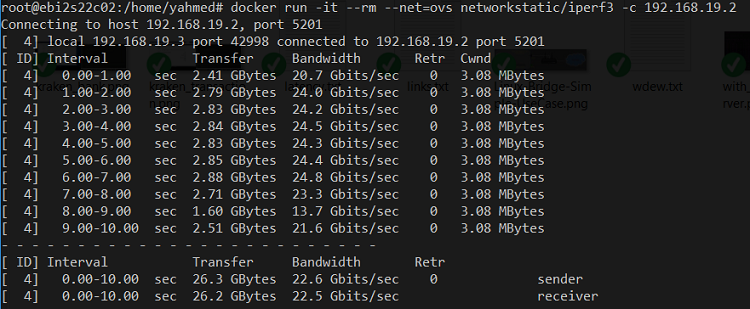

OvS-DPDK docker networking test

With our system still under load, we will repeat the same test as above, but will have our containers connect to the OvS-DPDK network.

sudo docker run -it --net=ovs --rm --name=iperf3-server -p 5201:5201 networkstatic/iperf3 -s

Open another terminal and run iperf3 in client mode.

sudo docker inspect --format "{{ .NetworkSettings.Networks.ovs.IPAddress }}" iperf3-server

sudo docker run -it --net=ovs --rm networkstatic/iperf3 -c ip_address

Using Docker with OvS-DPDK networking, we were able to achieve the network throughput shown below:

iperf3 benchmark OvS-DPDK networking

Summary

By setting up Docker to use OvS-DPDK on our server, we achieved an approximately 1.5x increase in network throughput compared to Docker’s default networking. It is also possible to use OvS-DPDK with OVN in overlay mode to create a multi-tenant, multi-host swarm/cluster in your server environment.

About the Author

Yaser Ahmed is a software engineer at Intel Corporation and has an MS degree in Applied Statistics from DePaul University and a BS degree in Electrical Engineering from the University of Minnesota.