Introduction

- Inference Engine Python API support.

- Samples to demonstrate Python API usage.

- Samples to demonstrate Python* API interoperability between AWS Greengrass* and the Inference Engine.

Prerequisites

Install the Intel® Distribution of OpenVINO™ toolkit

If you already have Intel® Distribution of OpenVINO™ toolkit 2018 R1.2 installed on your computer, you can skip this section. Otherwise, you can get the free download here.

Next, install the Intel® Distribution of OpenVINO™ toolkit as described here.

Optional Installation Steps

After completing the toolkit installation steps, rebooting, and adding symbolic links as directed in the installation procedure, you can optionally add a command to your .bashrc file to permanently set the environment variables required to compile and run Intel® Distribution of OpenVINO™ toolkit applications.

- Open .bashrc:

cd ~
sudo nano .bashrc

- Add the following command at the bottom of .bashrc:

source /opt/intel/computer_vision_sdk_2018.1.265/bin/setupvars.sh

- Save and close the file by typing CTRL+X, Y, and then ENTER.
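You can also make the .bashrc edit without an interactive editor. The Python sketch below appends the setupvars.sh line only when it is not already present, so running it twice does not create a duplicate entry. The helper name add_line_once is hypothetical and is not part of the toolkit. The setupvars.sh path is the one used in this paper, so adjust it to match your installed version.

```python
from pathlib import Path

# setupvars.sh path used in this paper; adjust it to match your installed version.
SETUPVARS_LINE = "source /opt/intel/computer_vision_sdk_2018.1.265/bin/setupvars.sh"


def add_line_once(rc_file: Path, line: str) -> bool:
    """Append `line` to `rc_file` unless an identical line is already present.

    Returns True if the file was changed, False if the line was already there.
    A missing file is created.
    """
    text = rc_file.read_text() if rc_file.exists() else ""
    # Compare stripped lines so leading/trailing whitespace does not cause duplicates.
    if line.strip() in (existing.strip() for existing in text.splitlines()):
        return False
    with rc_file.open("a") as f:
        # Make sure the new command starts on its own line.
        if text and not text.endswith("\n"):
            f.write("\n")
        f.write(line + "\n")
    return True
```

For example, calling add_line_once(Path.home() / ".bashrc", SETUPVARS_LINE) updates your own ~/.bashrc. New terminals pick up the change; already-open ones do not.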

The installation procedure also recommends adding libOpenCL.so.1 to the library search path. LD_LIBRARY_PATH lists directories, not individual files. One way to do this is therefore to add an export command for the directory that contains libOpenCL.so.1 to the setupvars.sh script:

- Open setupvars.sh:

cd /opt/intel/computer_vision_sdk_2018.1.265/bin/
sudo nano setupvars.sh

- Add the following command at the bottom of setupvars.sh:

export LD_LIBRARY_PATH=/usr/lib/x86_64-linux-gnu:$LD_LIBRARY_PATH

- Save and close the file by typing CTRL+X, Y, and then ENTER.

- Reboot the system:

reboot
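LD_LIBRARY_PATH is a colon-separated list of directories, and the dynamic linker looks for libraries inside those directories. After rebooting, you could confirm that one of the listed directories actually contains libOpenCL.so.1 with a small Python check like the one below. Run it from a shell in which setupvars.sh has been sourced. find_in_library_path is a hypothetical helper, not a toolkit function, and it only inspects LD_LIBRARY_PATH. It does not look at /etc/ld.so.cache or the default system directories.

```python
import os
from typing import Optional


def find_in_library_path(lib_name: str, ld_library_path: Optional[str] = None) -> Optional[str]:
    """Return the full path of lib_name in the first LD_LIBRARY_PATH directory
    that contains it, or None if no listed directory does.

    Only LD_LIBRARY_PATH is inspected; the dynamic linker's other sources
    (/etc/ld.so.cache and the default directories) are ignored here.
    """
    if ld_library_path is None:
        ld_library_path = os.environ.get("LD_LIBRARY_PATH", "")
    for directory in ld_library_path.split(":"):
        if not directory:
            continue  # this sketch skips empty entries (e.g. from a trailing ':')
        candidate = os.path.join(directory, lib_name)
        # An entry that names a file rather than a directory never matches here.
        if os.path.isfile(candidate):
            return candidate
    return None


if __name__ == "__main__":
    print(find_in_library_path("libOpenCL.so.1"))
```

A result of None only means that LD_LIBRARY_PATH does not list the library's directory. The library may still be loadable through the system's default search locations.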

Run the Python* Classification Sample

Before proceeding with the Python classification sample, run the demo_squeezenet_download_convert_run.sh sample script from the demo folder as shown below:

cd /opt/intel/computer_vision_sdk/deployment_tools/demo
sudo ./demo_squeezenet_download_convert_run.sh

(Important: You must run the demo_squeezenet_download_convert_run.sh script at least once in order to complete the remaining steps in this paper, as it downloads and prepares the deep learning model used later in this section.)

The demo_squeezenet_download_convert_run.sh script accomplishes several tasks:

- Downloads a public SqueezeNet model, which is used later for the Python classification sample.

- Installs the prerequisites to run Model Optimizer.

- Builds the classification demo app.

- Runs the classification demo app using the

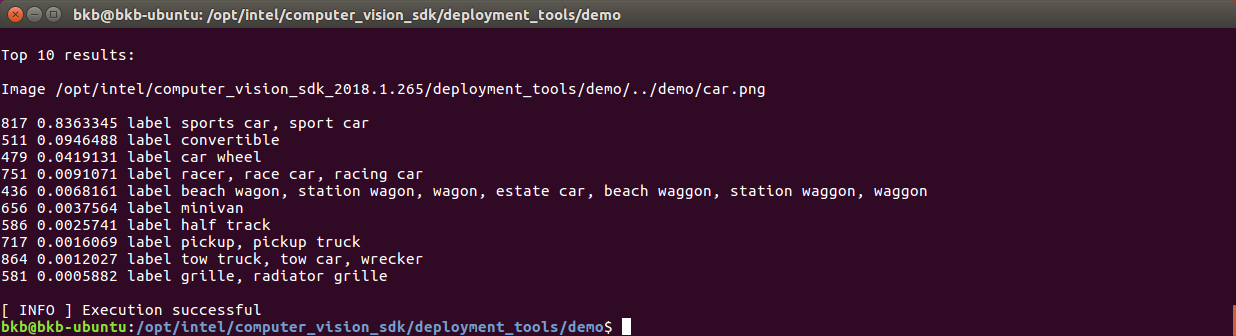

car.pngpicture from the demo folder. The classification demo app output should be similar to that shown in Figure 1.

Figure 1. Classification demo app output

If you did not modify your .bashrc file to permanently set the required environment variables (as described in the Optional Installation Steps section above), you may encounter problems running the demo. In that case, source setupvars.sh in the same terminal before running demo_squeezenet_download_convert_run.sh:

source /opt/intel/computer_vision_sdk_2018.1.265/bin/setupvars.sh

The setupvars.sh script also detects the latest installed Python version and configures the required environment. To check this, type the following command:

echo $PYTHONPATH

The system used to prepare this paper had Python 3.5.2 installed, so the path to the preview version of the Inference Engine Python API is:

/opt/intel/computer_vision_sdk/deployment_tools/inference_engine/python_api/ubuntu_1604/python3
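The same check can be done from Python. The sketch below splits PYTHONPATH into entries and keeps those that point into an inference_engine/python_api directory. It also prints the running interpreter's version so you can compare it with the version-specific directory at the end of the path (python3 in the example above). The helper name python_api_entries is hypothetical. Matching on the substring "inference_engine/python_api" is an assumption based on the path shown above. The code avoids f-strings so it also runs on Python 3.5.

```python
import os
import sys
from typing import List, Optional


def python_api_entries(pythonpath: Optional[str] = None) -> List[str]:
    """Return the PYTHONPATH entries that point into an Inference Engine python_api directory."""
    if pythonpath is None:
        pythonpath = os.environ.get("PYTHONPATH", "")
    # Entries are separated by os.pathsep (':' on Linux); ignore empty ones.
    entries = [entry for entry in pythonpath.split(os.pathsep) if entry]
    return [entry for entry in entries if "inference_engine/python_api" in entry]


if __name__ == "__main__":
    # Compare this version with the version-specific directory at the end of each entry.
    print("Running Python {}.{}.{}".format(*sys.version_info[:3]))
    for entry in python_api_entries():
        print(entry)
```

Run it with python3 in the same terminal where setupvars.sh was sourced. An empty list of entries suggests the environment has not been set up in that shell.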

Go to the Python samples directory and run the classification sample:

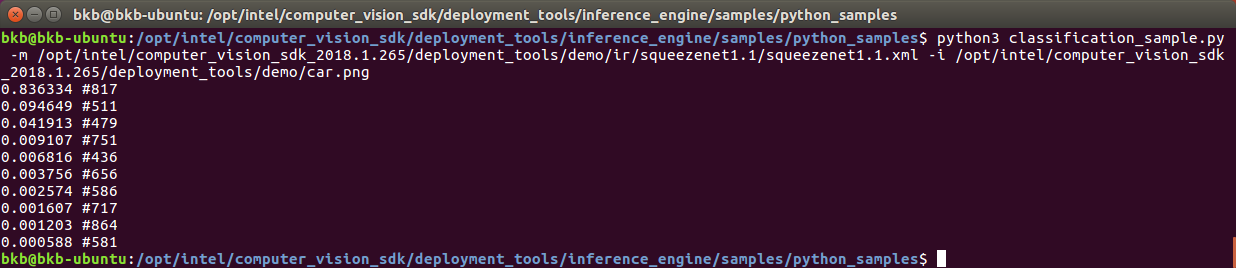

cd /opt/intel/computer_vision_sdk/deployment_tools/inference_engine/samples/python_samples python3 classification_sample.py -m /opt/intel/computer_vision_sdk_2018.1.265/deployment_tools/demo/ir/squeezenet1.1/squeezenet1.1.xml -i /opt/intel/computer_vision_sdk_2018.1.265/deployment_tools/demo/car.png

In the second command we are launching Python3 to run classification_sample.py, specifying the same model (-m) and image (-i) parameters that were used in the earlier demo (i.e., demo_squeezenet_download_convert_run.sh). (Troubleshooting: if you encounter the error "ImportError: No module named 'cv2'", run the command sudo pip3 install opencv-python to install the missing library.) The Python classification sample output should be similar to that shown in Figure 2.

Figure 2. Python classification sample output
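If you want to catch the missing OpenCV module before launching the sample, a sketch like the following could help. It uses importlib.util.find_spec from the Python standard library to test whether each top-level module can be found, without actually importing it. Only the cv2/opencv-python pairing comes from the troubleshooting note above. The missing_packages helper and the REQUIRED_MODULES table are hypothetical, and you can extend the table if the sample reports other ImportErrors.

```python
import importlib.util

# Top-level module name -> pip package that provides it. The cv2 entry comes from
# the troubleshooting note; add more entries if the sample reports other ImportErrors.
REQUIRED_MODULES = {"cv2": "opencv-python"}


def missing_packages(required):
    """Return pip package names for top-level modules that Python cannot locate."""
    return [package for module, package in sorted(required.items())
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_MODULES)
    if missing:
        print("Missing packages; try: sudo pip3 install " + " ".join(missing))
    else:
        print("All required modules were found.")
```

Run this check with the same python3 interpreter you use for classification_sample.py. A module installed for a different Python version will not be found.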

Note that the numbers shown in the second column (#817, #511…) refer to index numbers in the labels file, which lists all of the objects that the deep learning model can recognize. The labels file is located in:

/opt/intel/computer_vision_sdk_2018.1.265/deployment_tools/demo/ir/squeezenet1.1/squeezenet1.1.labels
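To look up an index from the sample output by hand, you could read the labels file with a few lines of Python. This sketch assumes one label per line, with the first line corresponding to index 0. Check that assumption against your copy of squeezenet1.1.labels before relying on it. load_labels and label_for are hypothetical helper names.

```python
from typing import List


def load_labels(path: str) -> List[str]:
    """Read a labels file, one label per line.

    Assumption: line i (counting from 0) holds the label for class index i,
    so blank lines are kept to preserve the numbering.
    """
    with open(path) as f:
        return [line.strip() for line in f]


def label_for(labels: List[str], index: int) -> str:
    """Return the label for a class index such as 817, or a placeholder if it is out of range."""
    if 0 <= index < len(labels):
        return labels[index]
    return "<unknown index {}>".format(index)
```

For example, pass the path above to load_labels and then call label_for(labels, 817) and label_for(labels, 511) to see the names behind the first two results in Figure 2.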

Conclusion

This paper presented an overview of the Inference Engine Python API, which was introduced as a preview in the Intel® Distribution of OpenVINO™ toolkit 2018 R1.2 release. Remember that this preview version of the Inference Engine Python API is intended for evaluation purposes only.

A hands-on demonstration of Python-based image classification was also presented in this paper, using the classification_sample.py example. This is only one of several Python samples included in the Intel® Distribution of OpenVINO™ toolkit, so be sure to explore the other Python samples and features in this release of the toolkit.