This article was originally published on roboflow.com.

The Contrastive Language Image Pretraining (CLIP) architecture is a foundation of modern computer vision. Use the CLIP architecture to train embedding models that can be used for image and video classification, retrieval augmented generation (RAG), image similarity computations, and more.

The CLIP architecture, introduced by OpenAI*, has several public checkpoints trained on large datasets. Since OpenAI released the first CLIP model, many companies have trained their own, including Apple*, Meta* AI, and more. However, these models are trained for general purposes and are rarely tuned to a specific use case.

The Intel® Gaudi® 2 accelerator, combined with Hugging Face* Transformers and the Optimum Habana library (which optimizes Transformers for Intel® Gaudi® accelerators), can be used to train a projection layer for a custom CLIP model. When trained on an appropriate dataset, this CLIP model can learn concepts related to a specific domain.

This guide demonstrates how to train a CLIP projection layer using an Intel® Gaudi® 2 accelerator and how to prepare the model for deployment. It also reports training and inference times for the model built in this article, so users can estimate the performance they can expect during training and deployment.

Without further ado, let’s get started!

What is CLIP?

Contrastive Language Image Pretraining (CLIP) is a multimodal vision model architecture developed by OpenAI. CLIP can be used to calculate image and text embeddings. CLIP models are trained on pairs of images and text captions, from which the embedding model learns associations between the contents of an image and the caption that describes it.

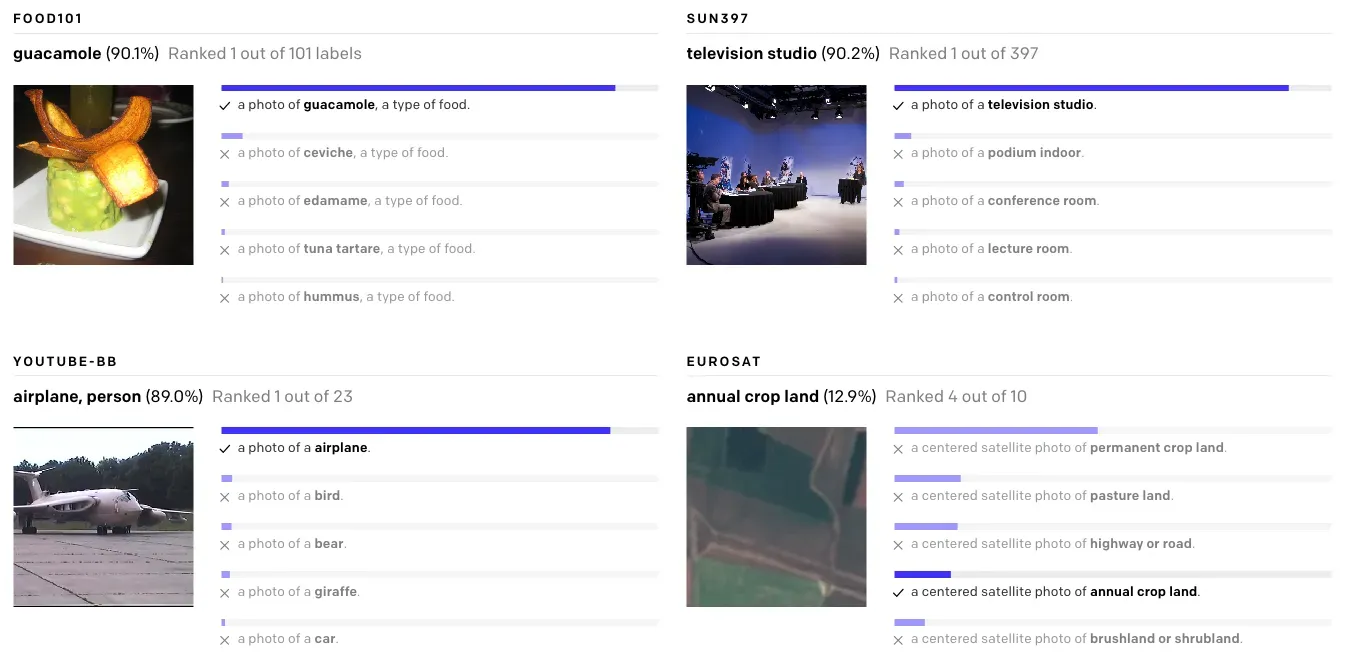

Figure 1. Using CLIP for image classification

Image Source: OpenAI
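To make the training objective more concrete, here is a minimal NumPy sketch of a CLIP-style symmetric contrastive loss, in which each image in a batch should score highest against its own caption and lower against every other caption. It is an illustration of the technique, not the Transformers implementation: the function names are hypothetical, and the temperature is fixed at 0.07, whereas CLIP learns it during training (starting from that value).

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    # Scale each row to unit length so dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    # Numerically stable log-softmax along the given axis.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_contrastive_loss(image_emb: np.ndarray, text_emb: np.ndarray,
                          temperature: float = 0.07) -> float:
    """Symmetric cross-entropy over a batch's image-text similarity matrix.

    Row i of image_emb and row i of text_emb are a matching pair; every other
    row in the batch acts as a negative example.
    """
    logits = l2_normalize(image_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(len(logits))  # matching pairs sit on the diagonal
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((loss_image_to_text + loss_text_to_image) / 2)


rng = np.random.default_rng(0)
images = rng.normal(size=(4, 8))
aligned = clip_contrastive_loss(images, images + 0.01 * rng.normal(size=(4, 8)))
shuffled = clip_contrastive_loss(images, images[::-1])
print(f"aligned: {aligned:.3f}, shuffled: {shuffled:.3f}")  # aligned should be lower
```

Minimizing this loss pulls each image embedding toward its caption's embedding and pushes it away from the other captions in the batch, which is why larger batches supply more negative examples per step.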

CLIP models can assist with many enterprise applications. For example, CLIP can be used to assist with:

- Classifying images of parts on assembly lines

- Classifying videos in a media archive

- Moderating image content at scale, and in real time

- Deduplicating images before training large models

- And more

How fast a CLIP model runs depends on the hardware used. Hardware tailored for AI workloads, such as the Intel® Gaudi® 2 accelerator, is well suited to running models like CLIP. For example, a single Intel® Gaudi® 2 accelerator computed 66,211 CLIP vectors in about 20 minutes, or roughly 55 vectors per second. This speed is sufficient for many real-time applications, and additional accelerators can be added for higher throughput.

While out-of-the-box CLIP models, such as the checkpoint released by OpenAI, give good results in most instances, they may fall short on specialized use cases and on proprietary use cases that depend on confidential enterprise data. In these cases, training a custom CLIP model can be useful.

How to Train a CLIP Model on Intel® Gaudi® 2 Accelerator

A custom CLIP model can be trained on a dataset tailored to a specific use case. For example, a model can be trained to distinguish between manufactured parts in an inventory, to identify whether defects are present in a set of products, or to recognize different landmarks, assuming an appropriate dataset is available in each case.

Training a CLIP model requires:

- A dataset of images, and

- Detailed captions that describe the contents of each image.

The captions should be a few sentences long, and accurately describe what is visible in each image.

One way to train a CLIP model is with Hugging Face Transformers, which supports training vision-language models such as CLIP. Hugging Face has partnered with Intel to accelerate training and inference on Intel® Gaudi® 2 accelerators through Optimum Habana, an extension of Transformers. The example in this guide uses the Transformers library and Optimum Habana to train a model on the COCO dataset.

Step 1: Download Optimum Habana Training Scripts and Install Requirements

To get started with CLIP on Intel® Gaudi® accelerators, download the required scripts from the Optimum Habana GitHub repository.

First, clone the Optimum Habana GitHub repository and install the Optimum Habana libraries:

```bash
git clone https://github.com/huggingface/optimum-habana
cd optimum-habana && python setup.py install
```

Then, navigate to the examples/contrastive-image-text folder:

```bash
cd examples/contrastive-image-text
```

This folder contains all of the training scripts used in this guide and should be the working directory for the rest of the guide.

Next, install the project requirements:

```bash
pip install -r requirements.txt
```

Also install the Hugging Face Transformers library, which is used in model training:

```bash
pip install transformers
```

Step 2: Download and Configure a Dataset

To train a CLIP-like model, an image dataset with a caption for each image is required. The captions should be dense enough in information for the model to learn about the contents of each image.

This guide uses the COCO dataset to train the CLIP model. This dataset comes with visual captions for over 100,000 images.

The Hugging Face team has prepared a script, run_clip.py, that trains a CLIP model on Intel® Gaudi® 2 accelerators. The script takes in a dataset in the COCO JSON format and trains a CLIP model on it. This guide uses the default COCO dataset, but any set of images described by a JSON file in the COCO JSON format can be used to train a CLIP model. To learn more about the COCO JSON format, refer to the Microsoft* COCO dataset web page.

Images in the dataset should have the following features in COCO JSON format:

["image_id", "caption_id", "caption", "height", "width", "file_name", "coco_url", "image_path", "id"]

The dataset must include train, validation, and test splits.
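In COCO, caption annotations (for example, annotations/captions_train2017.json inside annotations_trainval2017.zip) keep images and captions in two separate lists linked by image_id. The sketch below shows how those lists join into one record per caption, including the image_path and caption columns the training commands read through --image_column and --caption_column. The coco_caption_records helper and the sample data are hypothetical and are not part of run_clip.py, which handles loading for you.

```python
import os
from typing import Dict, List


def coco_caption_records(coco: Dict, image_dir: str) -> List[Dict]:
    """Flatten a COCO captions file into one record per caption (hypothetical helper)."""
    # Index image metadata by id so each caption can look up its image.
    images_by_id = {img["id"]: img for img in coco["images"]}
    records = []
    for ann in coco["annotations"]:
        img = images_by_id[ann["image_id"]]
        records.append({
            "image_id": ann["image_id"],
            "caption_id": ann["id"],
            "caption": ann["caption"].strip(),
            "height": img["height"],
            "width": img["width"],
            "file_name": img["file_name"],
            "coco_url": img.get("coco_url", ""),
            "image_path": os.path.join(image_dir, img["file_name"]),
        })
    return records


# Tiny in-memory example shaped like a COCO captions file.
sample = {
    "images": [{"id": 1, "file_name": "000000000001.jpg", "height": 480, "width": 640,
                "coco_url": "http://images.cocodataset.org/train2017/000000000001.jpg"}],
    "annotations": [
        {"image_id": 1, "id": 10, "caption": "A dog runs across a grassy field. "},
        {"image_id": 1, "id": 11, "caption": "A brown dog playing outside."},
    ],
}
records = coco_caption_records(sample, "data/train2017")
print(len(records), records[0]["image_path"])
```

Because COCO provides several captions per image, one image yields several training pairs, each pairing the same image_path with a different caption.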

Download the COCO dataset to the machine hosting the Intel® Gaudi® 2 accelerator, into a data subdirectory of the working directory (optimum-habana/examples/contrastive-image-text):

```bash
mkdir data
cd data
wget http://images.cocodataset.org/zips/train2017.zip
wget http://images.cocodataset.org/zips/val2017.zip
wget http://images.cocodataset.org/zips/test2017.zip
wget http://images.cocodataset.org/annotations/annotations_trainval2017.zip
wget http://images.cocodataset.org/annotations/image_info_test2017.zip
cd ..
```
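Because the image archives are large, it can help to confirm that all five files finished downloading before starting a training run. The sketch below is a hypothetical helper, not part of the Optimum Habana scripts; it only checks that each expected file exists and is non-empty, not that the archive is intact.

```python
from pathlib import Path
from typing import List

# The five archives fetched by the wget commands above.
EXPECTED_ARCHIVES = [
    "train2017.zip",
    "val2017.zip",
    "test2017.zip",
    "annotations_trainval2017.zip",
    "image_info_test2017.zip",
]


def missing_archives(data_dir: str) -> List[str]:
    """Return expected archive names that are absent or empty in data_dir."""
    root = Path(data_dir)
    return [
        name for name in EXPECTED_ARCHIVES
        if not (root / name).is_file() or (root / name).stat().st_size == 0
    ]


if __name__ == "__main__":
    missing = missing_archives("data")
    print("All archives present" if not missing else f"Missing or empty: {missing}")
```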

Once the dataset is downloaded, training the CLIP model can proceed.

Step 3: Create a Model Stub

The Hugging Face script uses a pre-trained text encoder and a pre-trained vision encoder to train the CLIP-like vision-text dual encoder. Before training the model's projection layer, first download the required weights and model configurations for the dual encoder. To do this, create a new Python* file called model_stub.py and add the following code:

```python
from transformers import (
    VisionTextDualEncoderModel,
    VisionTextDualEncoderProcessor,
    AutoTokenizer,
    AutoImageProcessor,
)

# Combine a pre-trained CLIP vision encoder with a pre-trained RoBERTa text encoder
model = VisionTextDualEncoderModel.from_vision_text_pretrained(
    "openai/clip-vit-base-patch32", "roberta-base"
)

tokenizer = AutoTokenizer.from_pretrained("roberta-base")
image_processor = AutoImageProcessor.from_pretrained("openai/clip-vit-base-patch32")
processor = VisionTextDualEncoderProcessor(image_processor, tokenizer)

# save the model and processor
model.save_pretrained("clip-roberta")
processor.save_pretrained("clip-roberta")
```

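This code builds a dual-encoder model from a pre-trained CLIP vision encoder (openai/clip-vit-base-patch32) and a pre-trained RoBERTa text encoder (roberta-base), then saves the model and its processor to the clip-roberta subdirectory. The projection layers that map both encoders into a shared embedding space are newly initialized; training then tunes them using the roberta-base tokenizer and the captions in the dataset. Run the model_stub.py code to download and save the pre-trained model: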

```bash
PT_HPU_LAZY_MODE=1 python ./model_stub.py
```
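Conceptually, each projection layer is a linear map from one encoder's output space into a shared space where image and text vectors can be compared directly. The NumPy sketch below illustrates that idea with random weights standing in for untrained projections. The sizes are assumptions chosen to mirror this setup (768-dimensional outputs from both the clip-vit-base-patch32 vision encoder and roberta-base, projected to 512 dimensions); check them against the config.json saved in clip-roberta.

```python
import numpy as np

rng = np.random.default_rng(0)

VISION_DIM, TEXT_DIM, PROJ_DIM = 768, 768, 512  # assumed sizes, see lead-in

# In this sketch, each projection is a bias-free linear map with random
# weights, standing in for the untrained layers in the saved stub.
visual_projection = rng.normal(scale=0.02, size=(VISION_DIM, PROJ_DIM))
text_projection = rng.normal(scale=0.02, size=(TEXT_DIM, PROJ_DIM))


def project(features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Map encoder outputs into the shared space and L2-normalize them."""
    z = features @ weights
    return z / np.linalg.norm(z, axis=-1, keepdims=True)


# Pretend pooled encoder outputs for a batch of 2 images and 3 captions.
image_features = rng.normal(size=(2, VISION_DIM))
text_features = rng.normal(size=(3, TEXT_DIM))

image_emb = project(image_features, visual_projection)
text_emb = project(text_features, text_projection)
similarity = image_emb @ text_emb.T  # cosine similarities, shape (2, 3)
print(similarity.shape)
```

With random weights these similarities are meaningless; training adjusts the projection weights so that matching image and caption vectors end up close together.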

Step 4: Train the Model

With the pre-trained model now available, train the model on a single HPU or on multiple HPUs (Habana Processing Units, the PyTorch device name for Intel® Gaudi® accelerators) with one of the following commands:

Train with a Single HPU

```bash
PT_HPU_LAZY_MODE=0 python run_clip.py \
    --output_dir ./clip-roberta-finetuned \
    --model_name_or_path ./clip-roberta \
    --data_dir $PWD/data \
    --dataset_name ydshieh/coco_dataset_script \
    --dataset_config_name=2017 \
    --image_column image_path \
    --caption_column caption \
    --remove_unused_columns=False \
    --do_train --do_eval \
    --per_device_train_batch_size="512" \
    --per_device_eval_batch_size="64" \
    --learning_rate="5e-5" --warmup_steps="0" --weight_decay 0.1 \
    --overwrite_output_dir \
    --save_strategy epoch \
    --use_habana \
    --gaudi_config_name Habana/clip \
    --throughput_warmup_steps 3 \
    --dataloader_num_workers 16 \
    --sdp_on_bf16 \
    --bf16 \
    --trust_remote_code \
    --torch_compile_backend=hpu_backend \
    --torch_compile
```

Train with Multiple HPUs

```bash
PT_HPU_LAZY_MODE=0 PT_ENABLE_INT64_SUPPORT=1 \
python ../gaudi_spawn.py --world_size 8 --use_mpi run_clip.py \
    --output_dir=/tmp/clip_roberta \
    --model_name_or_path=./clip-roberta \
    --data_dir $PWD/data \
    --dataset_name ydshieh/coco_dataset_script \
    --dataset_config_name 2017 \
    --image_column image_path \
    --caption_column caption \
    --remove_unused_columns=False \
    --do_train --do_eval \
    --mediapipe_dataloader \
    --per_device_train_batch_size="64" \
    --per_device_eval_batch_size="64" \
    --learning_rate="5e-5" --warmup_steps="0" --weight_decay 0.1 \
    --overwrite_output_dir \
    --use_habana \
    --use_lazy_mode=False \
    --gaudi_config_name="Habana/clip" \
    --throughput_warmup_steps=3 \
    --save_strategy="no" \
    --dataloader_num_workers=2 \
    --use_hpu_graphs \
    --max_steps=100 \
    --torch_compile_backend=hpu_backend \
    --torch_compile \
    --logging_nan_inf_filter \
    --trust_remote_code
```
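The two commands use different per-device batch sizes, so it is worth checking how much data each optimizer step consumes. The short calculation below assumes the global batch is the per-device batch size times the number of devices times the gradient accumulation steps, with accumulation left at its default of 1 because neither command sets it; global_batch_size is a hypothetical helper for illustration.

```python
def global_batch_size(per_device: int, world_size: int, grad_accum_steps: int = 1) -> int:
    """Image-caption pairs consumed per optimizer step across all devices."""
    return per_device * world_size * grad_accum_steps


single_hpu = global_batch_size(per_device=512, world_size=1)  # single-HPU command
multi_hpu = global_batch_size(per_device=64, world_size=8)    # --world_size 8 command

# The multi-HPU command stops after --max_steps=100 optimizer steps.
pairs_seen_multi = multi_hpu * 100

print(single_hpu, multi_hpu, pairs_seen_multi)
```

Under these assumptions both runs step on 512 pairs at a time, while the multi-HPU example is capped at 100 steps (51,200 pairs), so it reads as a short demonstration run rather than a full pass over COCO.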

CLIP Training Benchmark on Intel® Gaudi® 2 Accelerator

To evaluate the performance of CLIP training on Intel® Gaudi® 2 accelerator, a training job on a single HPU was run using the COCO dataset. The amount of time the training job took to complete, from start to finish, was recorded using the time command. This training time includes dataset initialization but does not include data download since the COCO dataset was downloaded prior to training.

Training took 15 minutes and 1 second on the entire COCO dataset and spanned three epochs. This is not training a CLIP model from scratch. Rather, a projection layer is being "tuned" on the dataset, helping the CLIP model "learn" new concepts from the new data.

How to Deploy CLIP to Intel® Gaudi® 2 Accelerator

This section discusses how to use the model trained in the previous section to deploy a CLIP-like model supporting inference on Intel® Gaudi® 2 accelerators.

Step 1: Write a CLIP Inference Script

To start deploying a tuned CLIP model for inference on an Intel® Gaudi® 2 accelerator, an inference script that calculates CLIP vectors using that model is required.

This script can then be adjusted for a range of use cases, including zero-shot image classification, video classification, dataset deduplication, image search, and more. Some of these use cases are briefly discussed in the Prepare Model for Production section of this guide, along with references to code Roboflow* has already written.

For now, however, create a script that allows CLIP to calculate vectors with the model:

```python
import torch
import habana_frameworks.torch.core as htcore  # registers the "hpu" device with PyTorch
from PIL import Image
from transformers import (
    VisionTextDualEncoderModel,
    VisionTextDualEncoderProcessor,
)

# Load the tuned weights written by the single-HPU run_clip.py command
# (use /tmp/clip_roberta if you trained with the multi-HPU command), and the
# processor that model_stub.py saved to ./clip-roberta.
model = VisionTextDualEncoderModel.from_pretrained("./clip-roberta-finetuned").to("hpu")
model.eval()
processor = VisionTextDualEncoderProcessor.from_pretrained("./clip-roberta")


def get_image_embedding(image):
    """Return the projected image embedding as a NumPy array of shape (1, dim)."""
    inputs = processor(images=[image], return_tensors="pt").to("hpu")
    with torch.no_grad():
        outputs = model.get_image_features(**inputs)
    return outputs.cpu().numpy()


def get_text_embedding(text):
    """Return projected text embeddings as a NumPy array of shape (n_texts, dim)."""
    inputs = processor(text=text, return_tensors="pt", padding=True).to("hpu")
    with torch.no_grad():
        outputs = model.get_text_features(**inputs)
    return outputs.cpu().numpy()


print(get_image_embedding(Image.open("image.jpeg").convert("RGB")))
```

This code defines functions that calculate text and image embeddings. It also calculates an image embedding for an image called image.jpeg and prints the results to the console.

Running the code shows that the model successfully calculates and returns a CLIP embedding.
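Many of the use cases below come down to comparing these vectors. The NumPy sketch that follows shows zero-shot classification by cosine similarity: given an image embedding of shape (1, dim) and one text embedding per candidate label, such as the arrays returned by get_image_embedding and get_text_embedding, it picks the label whose prompt is most similar. Random arrays stand in for real embeddings so the sketch runs without an HPU; classify_zero_shot and the label prompts are hypothetical.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


def classify_zero_shot(image_emb, label_embs, labels):
    """Return the label whose text embedding is closest to the image embedding."""
    scores = cosine_similarity(image_emb, label_embs)[0]  # shape (n_labels,)
    best = int(np.argmax(scores))
    return labels[best], scores


# Stand-in embeddings; in practice these would come from
# get_image_embedding(...) and get_text_embedding([...]).
rng = np.random.default_rng(0)
labels = ["a photo of a scratched part", "a photo of an undamaged part"]
label_embs = rng.normal(size=(2, 512))
image_emb = label_embs[1:2] + 0.1 * rng.normal(size=(1, 512))  # close to label 1

label, scores = classify_zero_shot(image_emb, label_embs, labels)
print(label, scores)
```

Image search follows the same pattern with the roles reversed: embed one text query and rank many image embeddings by similarity.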

Step 2: Prepare Model for Production

Now that a model is configured, write custom logic that uses that model to solve a business problem.

There are many applications for CLIP in the enterprise. For example, CLIP can be used for:

- Dataset Deduplication: Find and remove duplicate images in a dataset to ensure it is ready for training. Deduplication helps ensure that duplicate images do not end up in both the training and validation datasets when data is split, which can compromise training quality. It also ensures the model does not train on several copies of the same image, which is inefficient.

- Image Search: Create a semantic image search engine. This allows users to search a library of images using text and image queries. This is ideal for helping people find relevant images in a large corpus of image data. For example, a news organization could use a semantic image search to find images that would be relevant to an article.

- Image Classification: Assign one or more labels to an image. Image classification can be used for consumer-facing applications (e.g., wildlife applications where someone can take a photo to learn more about an animal), content moderation, and more.

- Video Classification: Identify if specific scenes are present in a video. This is ideal for media indexing use cases where you want to identify the timestamps at which different features are present on a screen. Video classification can also be used to identify if a video contains NSFW content.

- Retrieval Augmented Generation: CLIP can be used to retrieve images that are candidates in RAG-based systems, including RAG systems that integrate with large multimodal models (LMMs) like GPT-4* with Vision.
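As an illustration of the deduplication use case, the sketch below flags pairs of images whose CLIP embeddings are nearly identical. The find_near_duplicates helper and its 0.95 cosine-similarity threshold are hypothetical and should be tuned on real data. The brute-force comparison scales quadratically with the number of images, so for large collections a vector index library such as FAISS is the usual choice.

```python
from typing import List, Tuple

import numpy as np


def find_near_duplicates(embeddings: np.ndarray,
                         threshold: float = 0.95) -> List[Tuple[int, int, float]]:
    """Return (i, j, similarity) for every pair i < j at or above the threshold."""
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sims = normed @ normed.T
    i_idx, j_idx = np.triu_indices(len(sims), k=1)  # each unordered pair once
    mask = sims[i_idx, j_idx] >= threshold
    return [(int(i), int(j), float(sims[i, j])) for i, j in zip(i_idx[mask], j_idx[mask])]


# Stand-in embeddings; in practice, one get_image_embedding(...) row per image.
rng = np.random.default_rng(0)
emb = rng.normal(size=(5, 512))
emb[3] = emb[1] + 0.01 * rng.normal(size=512)  # image 3 is a near-copy of image 1
print(find_near_duplicates(emb))
```

From each flagged pair, one image can be kept and the other dropped before the dataset is split into training and validation sets.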

The Roboflow team has prepared several tutorials for reference when building enterprise applications with CLIP and Intel® Gaudi® 2 accelerator:

- Build an Image Search Engine with CLIP using Intel® Gaudi® 2 AI Accelerators

- Build Enterprise Datasets with CLIP for Multimodal Model Training Using Intel® Gaudi® 2 AI Accelerators

- Multimodal Video Analysis with CLIP using Intel® Gaudi® 2 AI Accelerators

CLIP Inference Benchmark on Intel® Gaudi® 2 Accelerator

To benchmark inference performance, the Roboflow team calculated CLIP vectors for 66,211 images using a single Intel® Gaudi® 2 accelerator. We used the out-of-the-box CLIP model rather than a fine-tuned one, so the result serves as a standard benchmark of how the model available in Transformers will perform on your system; the performance of fine-tuned models may vary.

In our benchmarking, a single Intel® Gaudi® 2 AI accelerator calculated CLIP vectors for 66,211 images in 20 minutes and 11 seconds using the default CLIP weights. That works out to roughly 3,280 CLIP vectors per minute, or about 54.7 CLIP vectors per second.

This throughput is sufficient for both batch processing and real-time applications that require CLIP vectors.
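The per-minute and per-second rates can be derived directly from the measured wall-clock time. The short calculation below divides by the full 20 minutes and 11 seconds (1,211 seconds); dividing by an even 20 minutes instead gives about 3,310 vectors per minute and 55.2 per second.

```python
images = 66_211
elapsed_s = 20 * 60 + 11  # 20 minutes 11 seconds of wall-clock time

per_second = images / elapsed_s
per_minute = per_second * 60

print(f"{per_minute:,.0f} vectors/min, {per_second:.1f} vectors/s")
```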

Conclusion

Contrastive Language Image Pretraining (CLIP) models are embedding models that can be used for zero-shot image and video classification, semantic search applications, dataset deduplication, retrieval augmented generation (RAG) that involves images, and more.

This guide walked through how to use CLIP with the Intel® Gaudi® 2 accelerator. It demonstrated how to train a CLIP-like model using the Hugging Face Transformers library. It loaded a dataset in the COCO JSON format, then trained a projection layer to encode information from a custom dataset.

It then demonstrated how to run inference on a CLIP model using the Intel® Gaudi® 2 accelerator, with reference to guides that delved into specific use cases like dataset deduplication and video classification.