Overview

In Part 1 of the tutorial, we first installed Unity*, OpenVINO™, and its prerequisite software. We then demonstrated how to use the Python* conversion script included with the OpenVINO™ Toolkit to convert a pretrained model from ONNX format to the OpenVINO™ Intermediate Representation format.

In this part, we will walk through the steps needed to create a dynamic-link library (DLL) in Visual Studio to perform inference with the pretrained deep learning model.

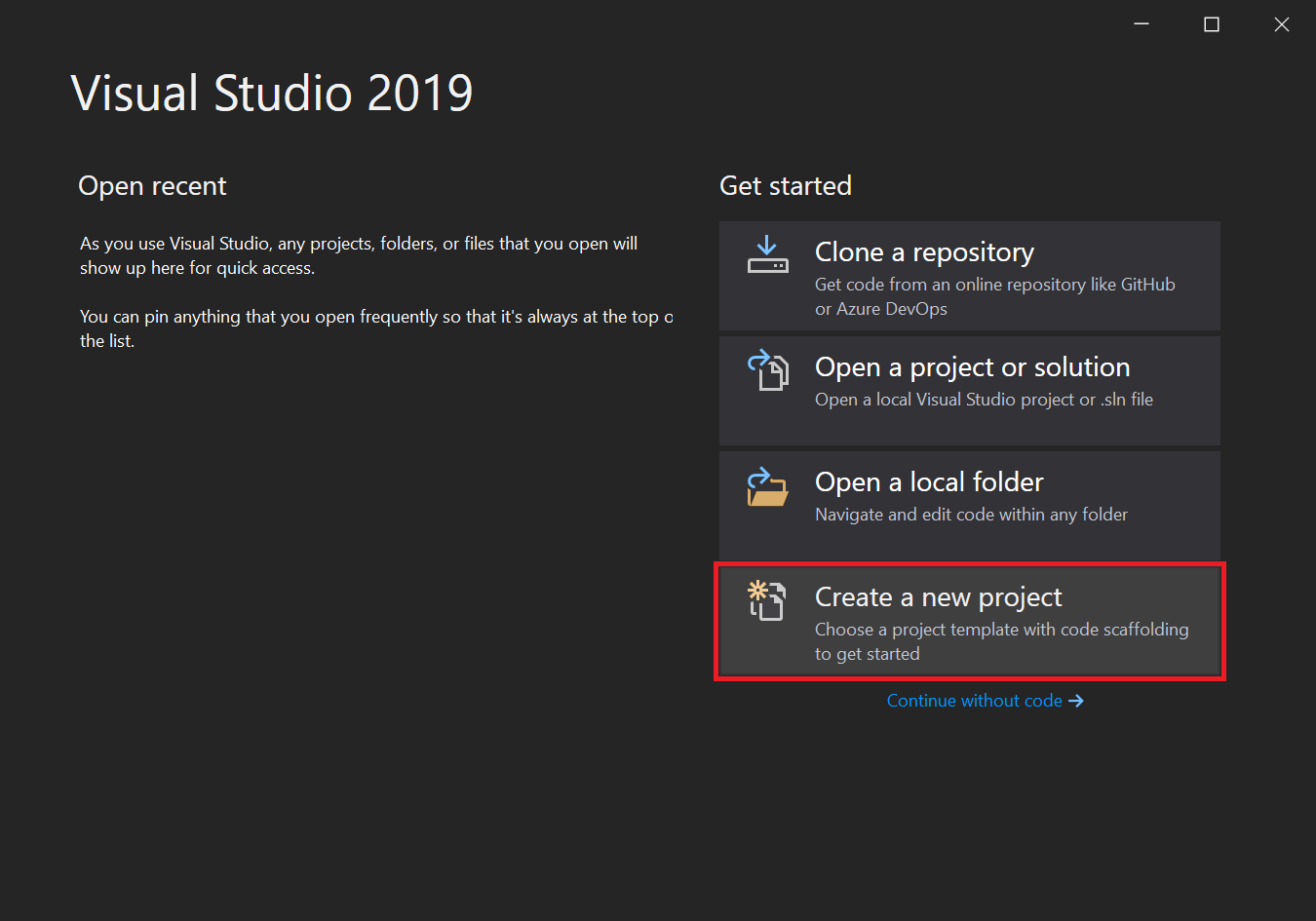

Create a New Visual Studio Project

Open Visual Studio and select Create a new project.

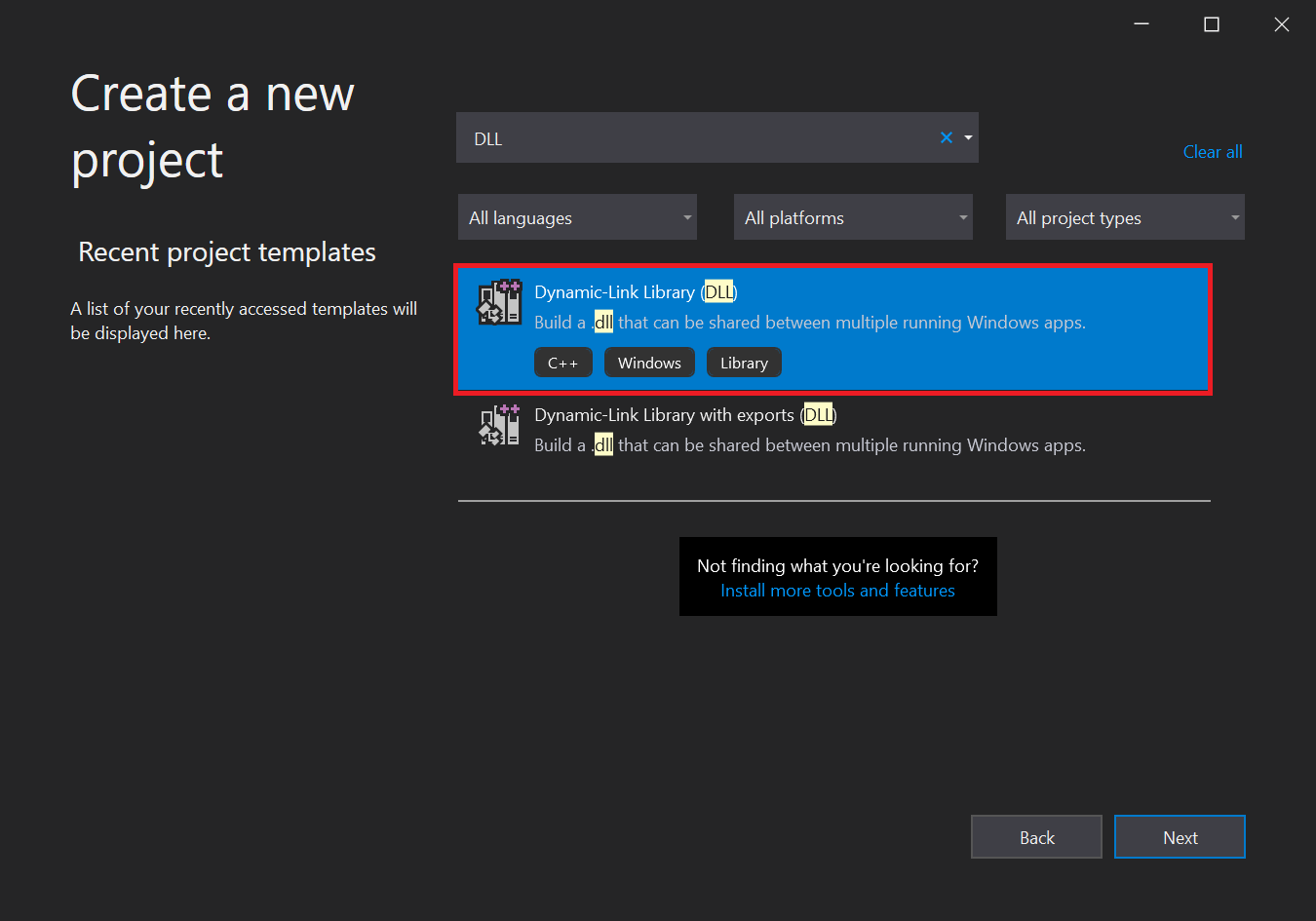

Type DLL into the search bar. Select the Dynamic-Link Library (DLL) option and press Next.

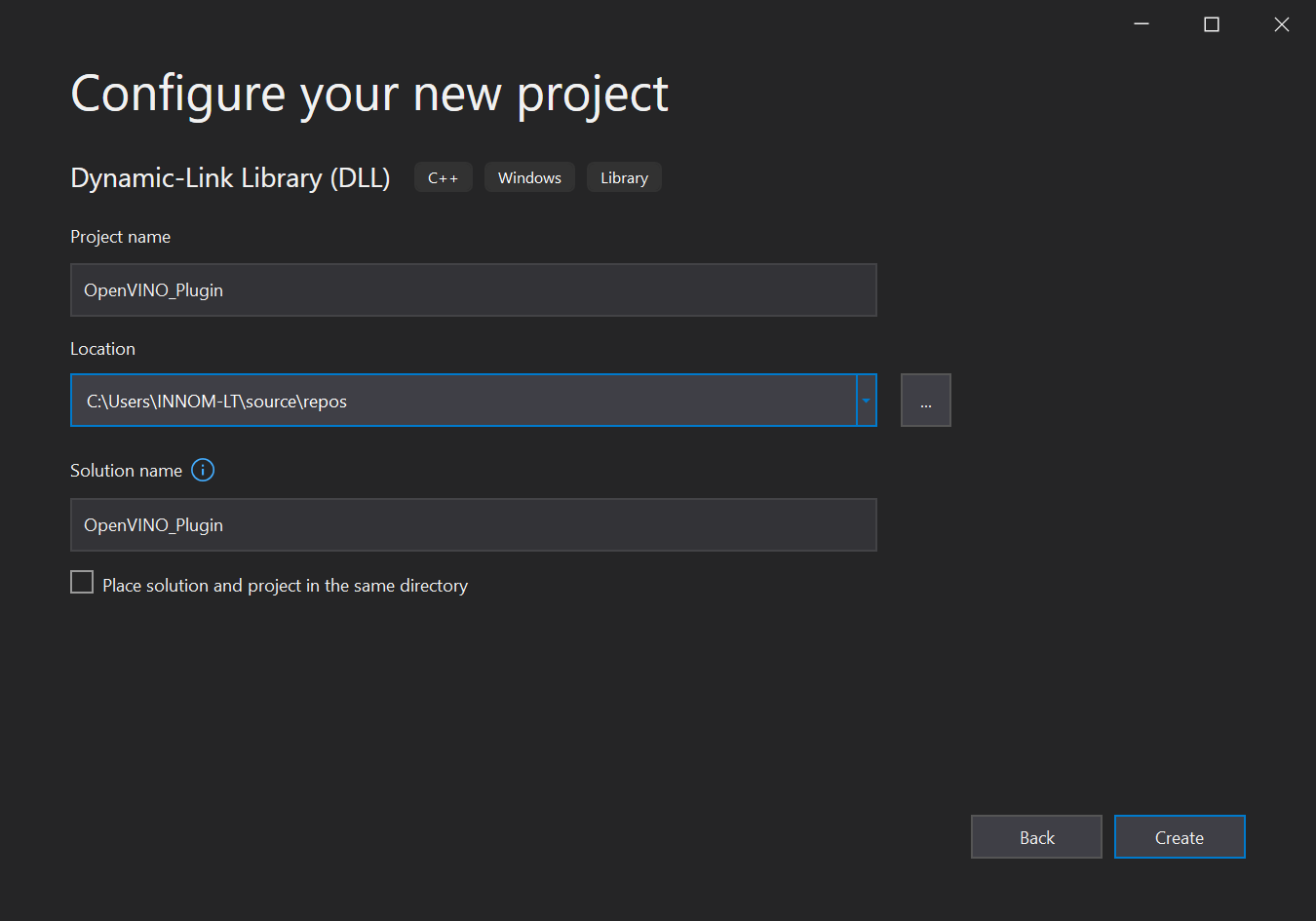

In the next window, name the new project OpenVINO_Plugin. Take note of the Location where the project will be saved. The default location can be changed, but we will need to access the project folder later to get the generated DLL file. Click Create.

Configure Project

Update the default project configuration to access the OpenVINO™ Toolkit and build the project with it.

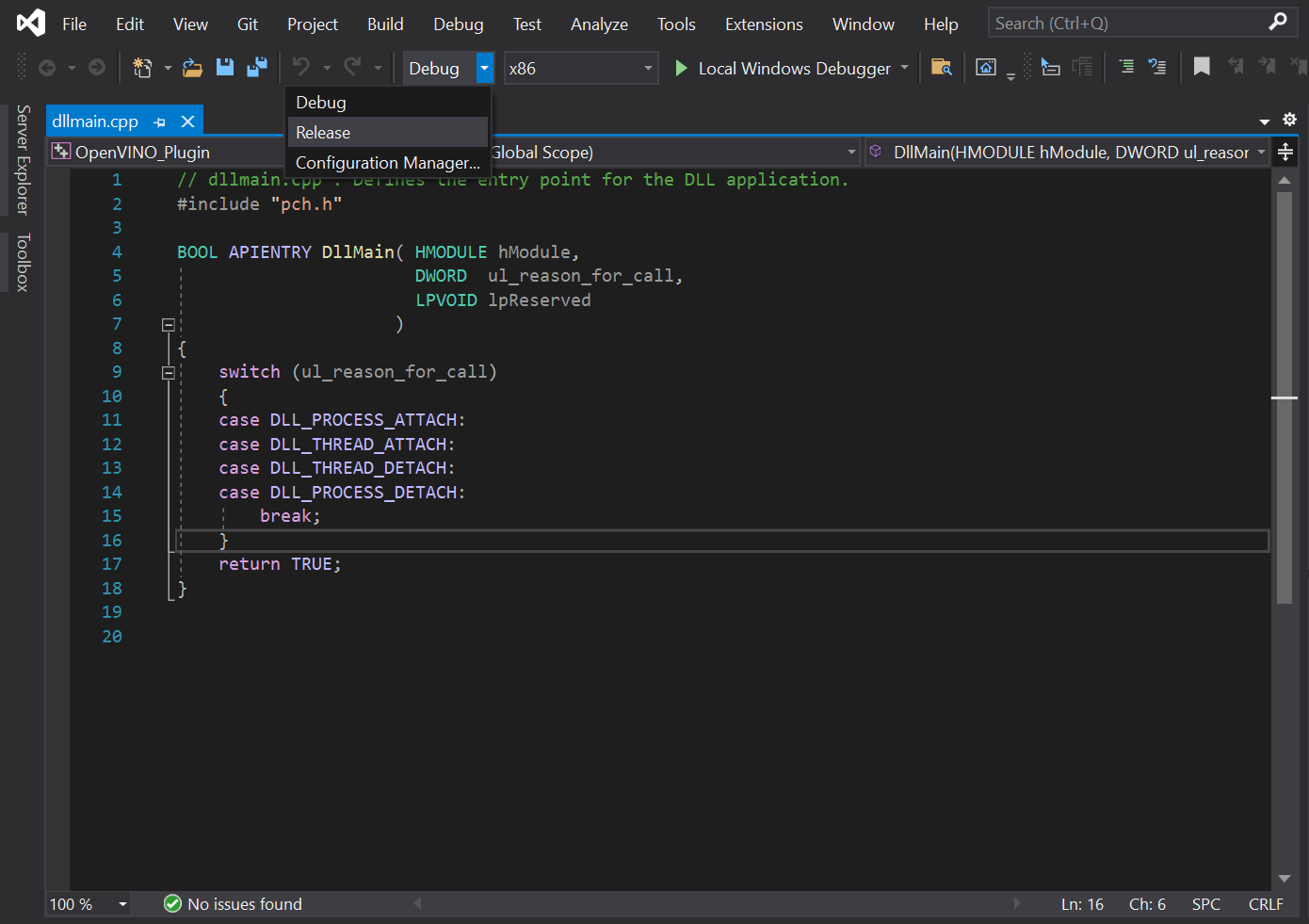

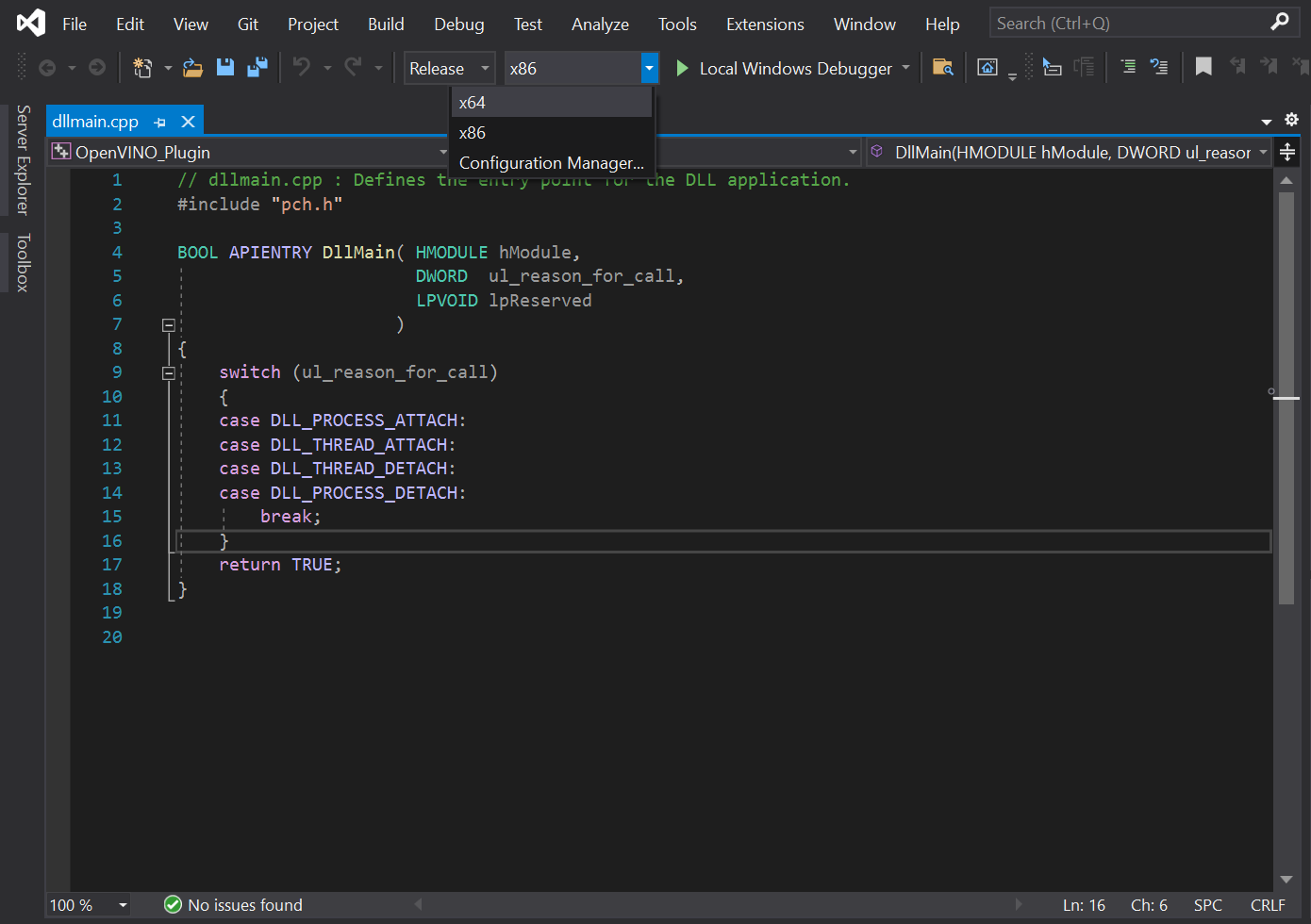

Set Build Configuration and Platform

The OpenVINO™ Toolkit does not support x86 builds. Set the project to build for x64. At the top of the window, open the Solution Configurations dropdown menu, and select Release.

Then, select the Solution Platform dropdown menu and select x64.

Add Include Directories

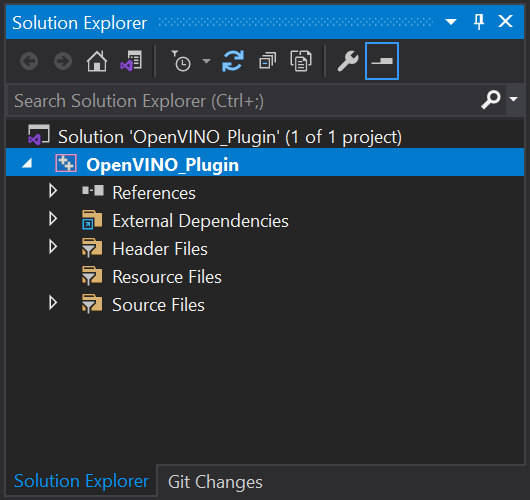

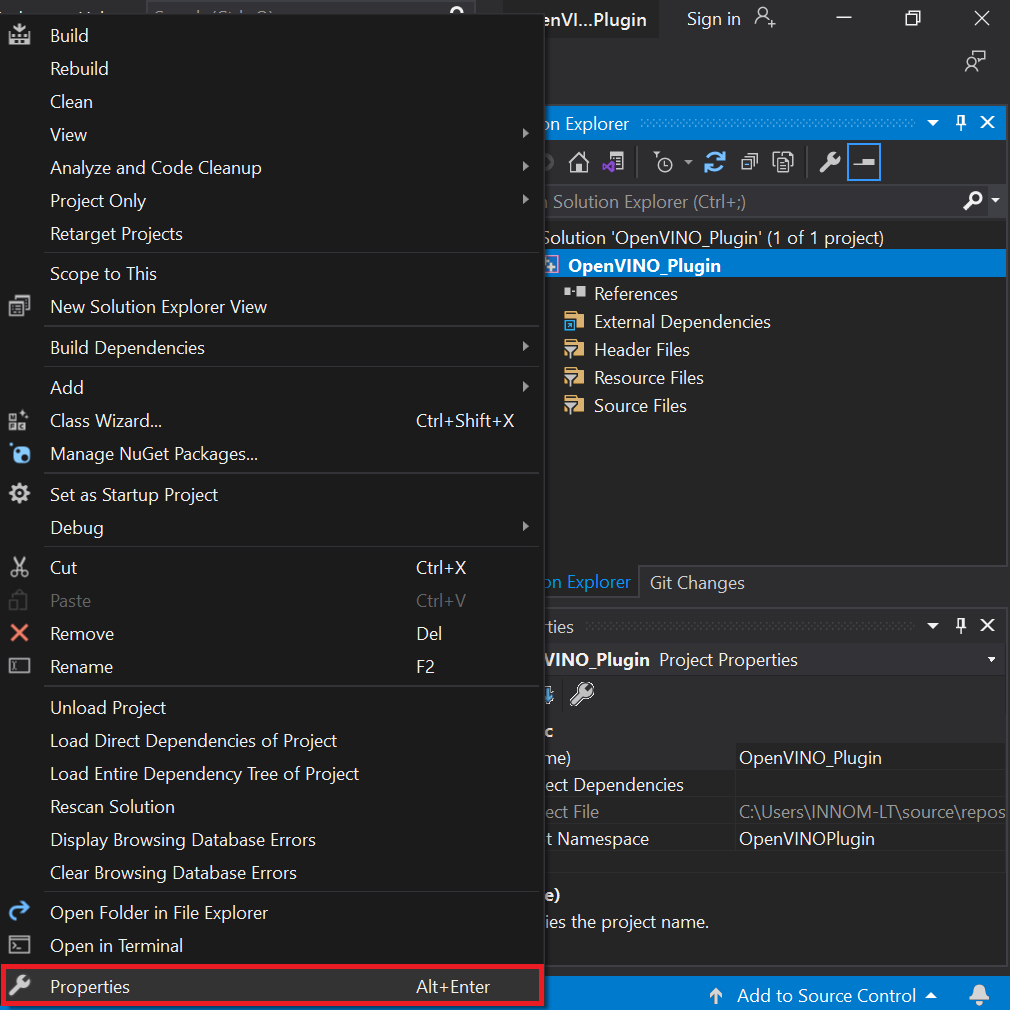

Visual Studio needs to be told where the OpenVINO™ Toolkit is located, so we can access its APIs. In the Solution Explorer panel, right-click the project name.

Select Properties in the popup menu.

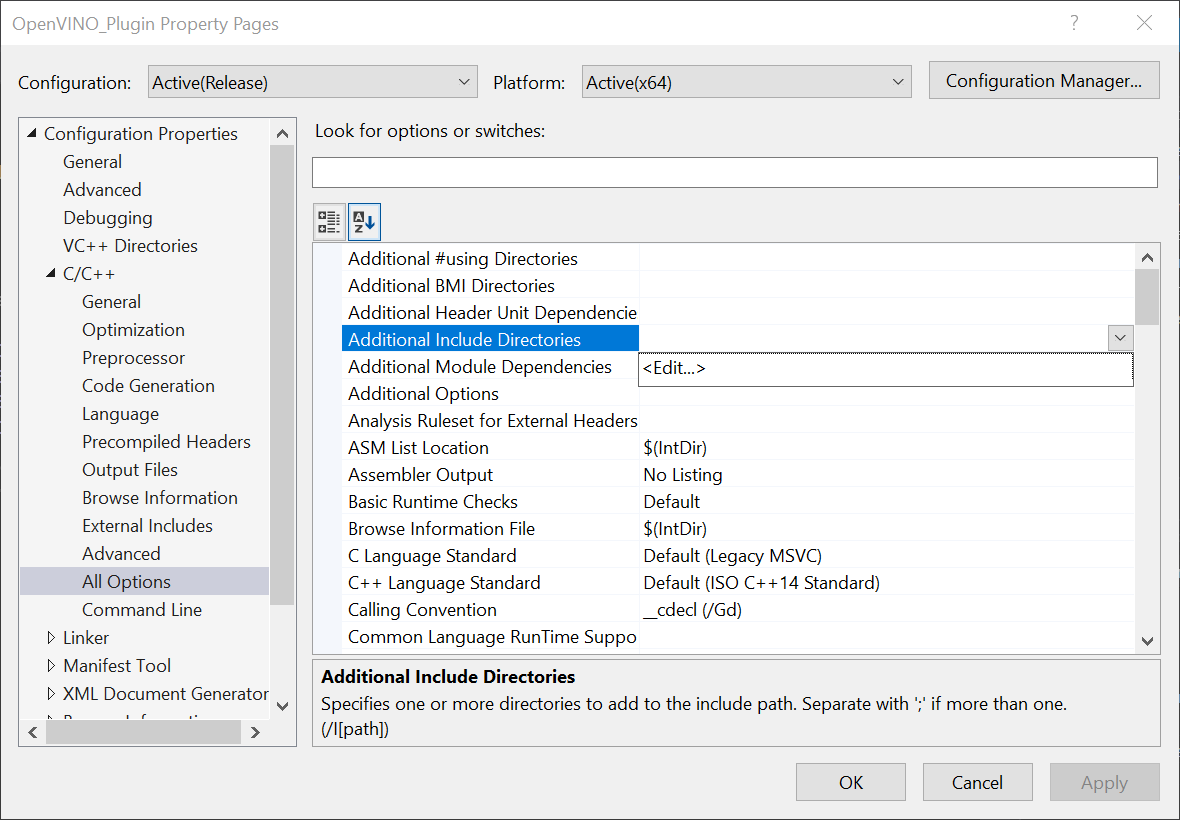

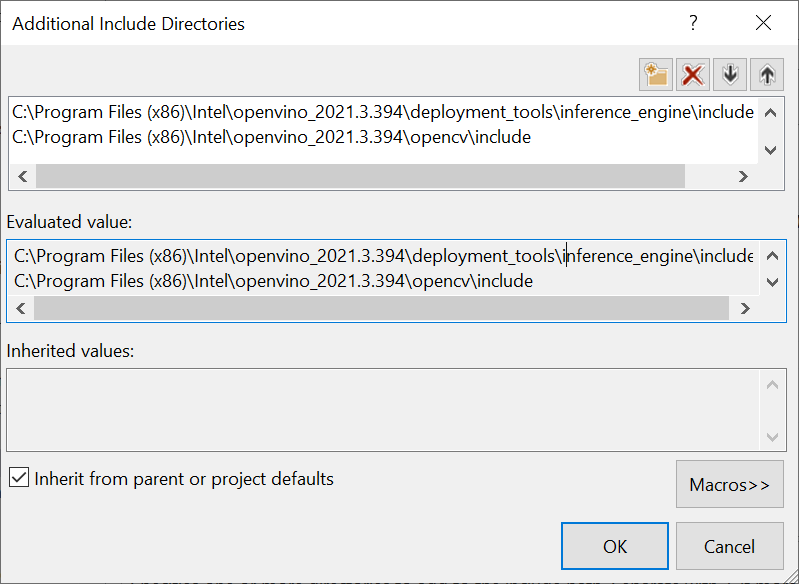

In the Properties window, open the C/C++ dropdown and select All Options. Select the Additional Include Directories section and click on <Edit...> in the dropdown.

Add include directories for the OpenVINO™ inference engine and the OpenCV libraries included with the OpenVINO™ Toolkit.

Add the following lines and click OK. Feel free to open these folders in the File Explorer and see what exactly they provide access to.

- C:\Program Files (x86)\Intel\openvino_2021.3.394\deployment_tools\inference_engine\include

- C:\Program Files (x86)\Intel\openvino_2021.3.394\opencv\include

Link Libraries

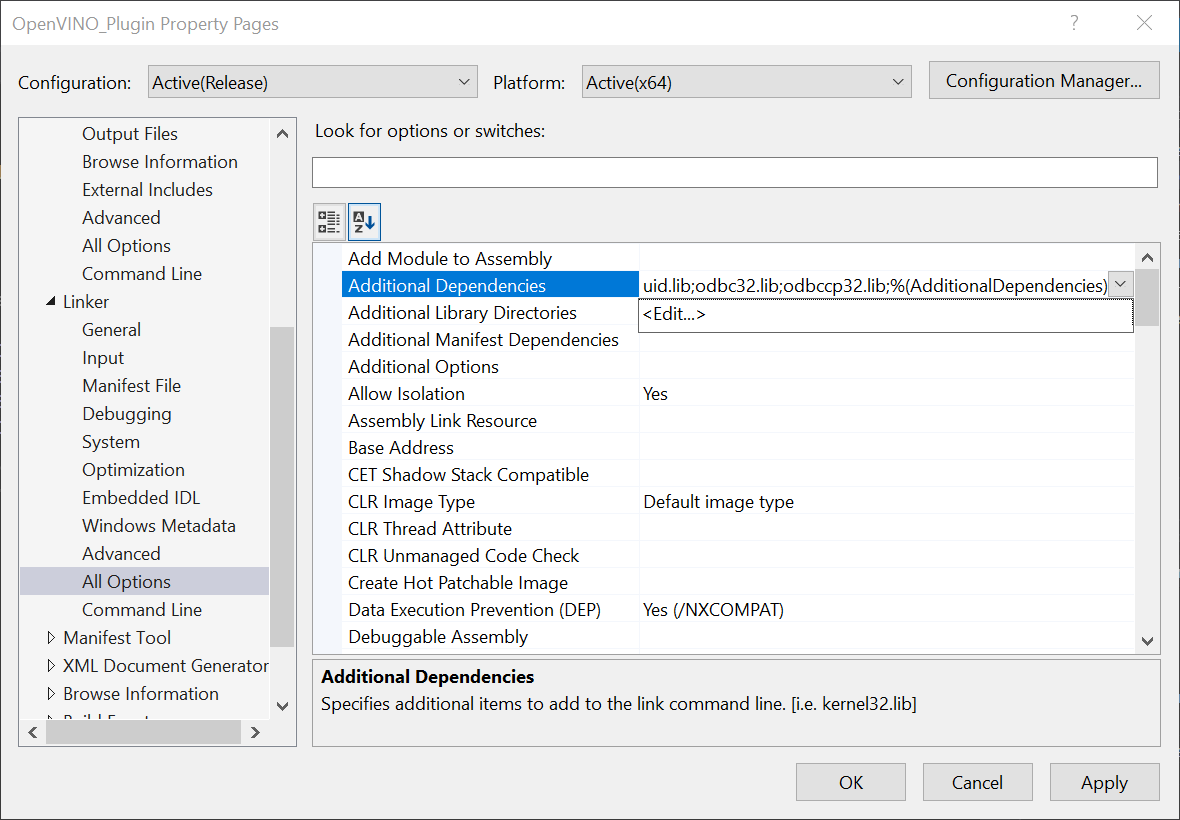

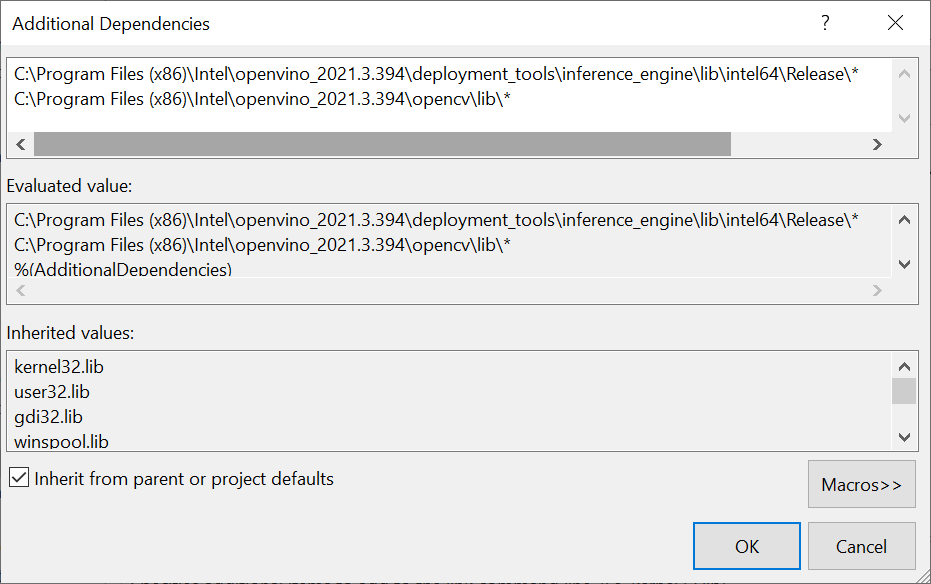

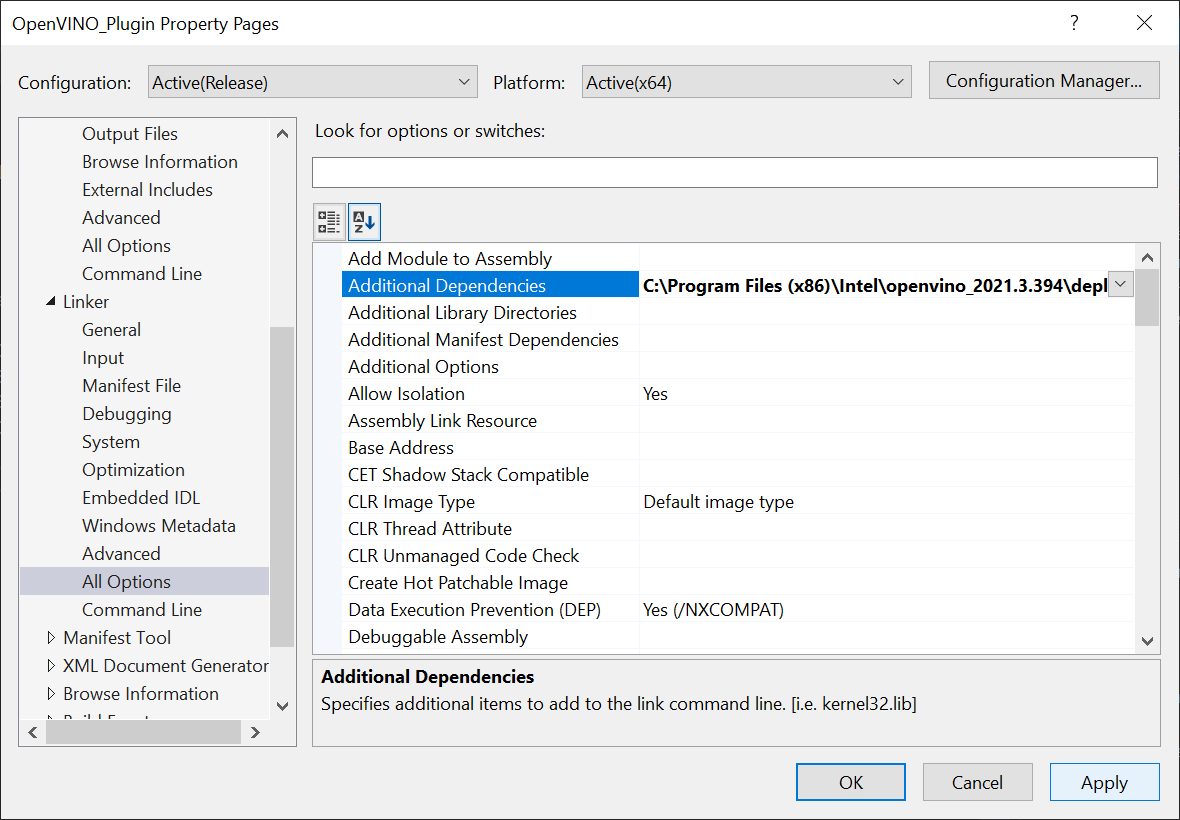

Next, open the Linker dropdown in the Properties window and select All Options. Scroll up to the top of the All Options section and select Additional Dependencies.

Add the following lines for the OpenVINO™ and OpenCV libraries, then click OK. The * at the end tells Visual Studio to add all the .lib files contained in those folders. We do not technically need every single one, but this is more convenient than manually typing the specific file names.

- C:\Program Files (x86)\Intel\openvino_2021.3.394\deployment_tools\inference_engine\lib\intel64\Release\*

- C:\Program Files (x86)\Intel\openvino_2021.3.394\opencv\lib\*

Lastly, click Apply and close the Properties window.

Clear Default Code

Now, we can finally start coding. The default code for the dllmain.cpp file is as follows.

    // dllmain.cpp : Defines the entry point for the DLL application.
    #include "pch.h"

    BOOL APIENTRY DllMain( HMODULE hModule,
                           DWORD  ul_reason_for_call,
                           LPVOID lpReserved
                         )
    {
        switch (ul_reason_for_call)
        {
        case DLL_PROCESS_ATTACH:
        case DLL_THREAD_ATTACH:
        case DLL_THREAD_DETACH:
        case DLL_PROCESS_DETACH:
            break;
        }
        return TRUE;
    }

We can delete everything below the #include "pch.h" line.

    // dllmain.cpp : Defines the entry point for the DLL application.
    #include "pch.h"

Update Precompiled Header File

The pch.h file is a precompiled header file that is generated by Visual Studio. We can place any header files that won't be updated here and they will only be compiled once. This can reduce build times for larger projects. We can open the pch.h file by selecting the #include "pch.h" line and pressing F12.

    // pch.h: This is a precompiled header file.
    // Files listed below are compiled only once, improving build performance for future builds.
    // This also affects IntelliSense performance, including code completion and many code browsing features.
    // However, files listed here are ALL re-compiled if any one of them is updated between builds.
    // Do not add files here that you will be updating frequently as this negates the performance advantage.

    #ifndef PCH_H
    #define PCH_H

    // add headers that you want to pre-compile here
    #include "framework.h"

    #endif //PCH_H

Add the required header files below #include "framework.h". Each one can be explored by selecting that line and pressing F12 as well.

    // add headers that you want to pre-compile here
    #include "framework.h"
    // A header file that provides the minimal required set of Inference Engine APIs.
    #include <inference_engine.hpp>
    // A header file that provides the API for the OpenCV modules.
    #include <opencv2/opencv.hpp>
    // Regular expressions standard header
    #include <regex>

Update dllmain

Back in the dllmain.cpp file, add the InferenceEngine namespace and create a macro to mark functions we want to make accessible in Unity*.

    // dllmain.cpp : Defines the entry point for the DLL application.
    #include "pch.h"

    using namespace InferenceEngine;

    // Create a macro to quickly mark a function for export
    #define DLLExport __declspec (dllexport)

We need to wrap the code in extern "C" to prevent name-mangling issues with the compiler.

    // Create a macro to quickly mark a function for export
    #define DLLExport __declspec (dllexport)

    // Wrap code to prevent name-mangling issues
    extern "C" {

    }
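To make the export pattern concrete, here is a minimal, self-contained sketch of one exported function. The function SumBytes is hypothetical and is not part of the plugin; it only illustrates how a C-linkage export can receive a raw buffer from a caller. The non-Windows branch of the macro is an assumption added so the sketch also compiles with GCC or Clang.

```cpp
#include <cassert>

#if defined(_WIN32)
// Same export macro as in the tutorial
#define DLLExport __declspec(dllexport)
#else
// Assumption: GCC/Clang equivalent, included only so the sketch builds off Windows
#define DLLExport __attribute__((visibility("default")))
#endif

// extern "C" keeps the symbol name unmangled, so a caller can look it up as "SumBytes"
extern "C" {
    // Hypothetical exported function: sums the bytes in a caller-owned buffer
    DLLExport int SumBytes(const unsigned char* data, int count) {
        assert(data != nullptr && count >= 0);
        int total = 0;
        for (int i = 0; i < count; ++i) {
            total += data[i];
        }
        return total;
    }
}
```

Because the function has C linkage, its exported name stays SumBytes rather than a compiler-specific mangled name, which is what allows another program to find it by name.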

Declare Variables

Inside the wrapper, declare the variables needed for the DLL.

Keep track of the available compute devices for OpenVINO™, so we can select them in Unity*. Create a std::vector<std::string> variable named availableDevices. This will store the names of supported devices found by OpenVINO™ on the system. Combine the list of available devices into a single std::string variable to send it to Unity*.

Next, create a cv::Mat to store the input image data from Unity*.

To use the OpenVINO™ inference engine, we first need to create a Core instance called ie. Use this variable to read the model file, get the available compute devices, change configuration settings, and load the model onto the target compute device.

Store the information from the .xml and .bin file in a CNNNetwork variable called network.

Create an executable version of the network before performing inference. Create an ExecutableNetwork variable called executable_network.

After that, create an InferRequest variable called infer_request. We will use this variable to initiate inference for the model.

Once the inference request is created, we need write access to the input tensor for the model and read access to the output tensor for the model. This is how we update the input and read the output when performing inference. Create a MemoryBlob::Ptr variable called minput and a MemoryBlob::CPtr variable called moutput.

Since the input and output dimensions are the same, we can use the same size variables when iterating through the input and output data. Create two size_t variables to store the number of color channels and number of pixels for the input image.

Lastly, create an std::vector<float> called data_img that will be used for processing the raw model output.

Code:

    // Wrap code to prevent name-mangling issues
    extern "C" {
        // List of available compute devices
        std::vector<std::string> availableDevices;
        // An unparsed list of available compute devices
        std::string allDevices = "";
        // The name of the input layer of Neural Network "input.1"
        std::string firstInputName;
        // The name of the output layer of Neural Network "140"
        std::string firstOutputName;

        // Stores the pixel data for model input image and output image
        cv::Mat texture;

        // Inference engine instance
        Core ie;
        // Contains all the information about the Neural Network topology and related constant values for the model
        CNNNetwork network;
        // Provides an interface for an executable network on the compute device
        ExecutableNetwork executable_network;
        // Provides an interface for an asynchronous inference request
        InferRequest infer_request;

        // A pointer to the input tensor for the model
        MemoryBlob::Ptr minput;
        // A pointer to the output tensor for the model
        MemoryBlob::CPtr moutput;

        // The number of color channels
        size_t num_channels;
        // The number of pixels in the input image
        size_t nPixels;

        // A vector for processing the raw model output
        std::vector<float> data_img;
    }

Create GetAvailableDevices() Function

Create a function that returns the available OpenVINO™ compute devices so that we can view and select them in Unity*. This function simply combines the list of available devices into a single, comma-separated string that will be parsed in Unity*. Add the DLLExport macro since we will be calling this function from Unity*.

Code:

    // Returns an unparsed list of available compute devices
    DLLExport const std::string* GetAvailableDevices() {
        // Start from an empty string so repeated calls do not append duplicates
        allDevices.clear();
        // Add all available compute devices to a single string
        for (auto&& device : availableDevices) {
            allDevices += device;
            allDevices += ((device == availableDevices[availableDevices.size() - 1]) ? "" : ",");
        }
        return &allDevices;
    }
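The joining step, and the parsing the caller has to do on the other side, can be sketched in isolation as follows. This is a standalone illustration, not the plugin code: JoinDevices and SplitDevices are hypothetical helper names, and the split is written in C++ only to show the round trip (in the tutorial the parsing happens in Unity*).

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Join device names with commas, without a trailing separator
std::string JoinDevices(const std::vector<std::string>& devices) {
    std::string joined;
    for (std::size_t i = 0; i < devices.size(); ++i) {
        // A name containing a comma could not be split apart again
        assert(devices[i].find(',') == std::string::npos);
        if (i > 0) {
            joined += ",";
        }
        joined += devices[i];
    }
    return joined;
}

// Split a comma-separated string back into individual device names
std::vector<std::string> SplitDevices(const std::string& joined) {
    std::vector<std::string> devices;
    std::stringstream stream(joined);
    std::string name;
    while (std::getline(stream, name, ',')) {
        devices.push_back(name);
    }
    return devices;
}
```

Placing the separator by index rather than by comparing names keeps the logic correct even if two entries happened to share a name.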

Create SetDeviceCache() Function

It can take over 20 seconds to upload the OpenVINO™ model to a GPU. This is because OpenCL kernels are being compiled for the specific model and GPU at runtime. There isn't much we can do about this the first time a model is loaded to the GPU. However, we can eliminate this load time in future uses by storing cache files for the model. The cache files are specific to each GPU. Additional cache files will also be created when a new input resolution is used for a model. We do not need to add the DLLExport macro as this function will only be called by other functions in the DLL.

We will use a regular expression to confirm a compute device is a GPU before attempting to set a cache directory for it.

We can specify the directory to store cache files for each available GPU using the ie.SetConfig() method. We will simply name the directory cache.

By default, the cache directory will be created in the same folder as the executable file that will be generated from the Unity* project.

Code:

    // Configure the cache directory for GPU compute devices
    void SetDeviceCache() {
        std::regex e("(GPU)(.*)");
        // Iterate through the available compute devices
        for (auto&& device : availableDevices) {
            // Only configure the cache directory for GPUs
            if (std::regex_match(device, e)) {
                ie.SetConfig({ {CONFIG_KEY(CACHE_DIR), "cache"} }, device);
            }
        }
    }
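The device-name check can be tried on its own, without OpenVINO™. The sketch below wraps the same regular expression in a hypothetical helper named IsGpuDevice. Note that std::regex_match only succeeds when the whole string matches, so the pattern accepts any name that begins with GPU.

```cpp
#include <cassert>
#include <regex>
#include <string>

// Returns true for device names such as "GPU", "GPU.0", or "GPU.1"
bool IsGpuDevice(const std::string& device) {
    // Assumption for this sketch: device names are never empty
    assert(!device.empty());
    // Same pattern as in SetDeviceCache(): "GPU" followed by anything
    static const std::regex gpuPattern("(GPU)(.*)");
    return std::regex_match(device, gpuPattern);
}
```

For example, IsGpuDevice("GPU.0") is true, while IsGpuDevice("CPU") is false, so only GPU entries would have a cache directory configured.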

Create PrepareBlobs() Function

The next function will get the names of the input and output layers for the model and set the precision for them. We can access information about the input and output layers with network.getInputsInfo() and network.getOutputsInfo() respectively.

The model only has one input and output, so we can access them directly with .begin() rather than using a for loop. There are two values stored for each layer. The first contains the name of the layer and the second provides access to get and set methods for the layer.

Code:

    // Get the names of the input and output layers and set the precision
    DLLExport void PrepareBlobs() {
        // Get information about the network input
        InputsDataMap inputInfo(network.getInputsInfo());
        // Get the name of the input layer
        firstInputName = inputInfo.begin()->first;
        // Set the input precision
        inputInfo.begin()->second->setPrecision(Precision::U8);

        // Get information about the network output
        OutputsDataMap outputInfo(network.getOutputsInfo());
        // Get the name of the output layer
        firstOutputName = outputInfo.begin()->first;
        // Set the output precision
        outputInfo.begin()->second->setPrecision(Precision::FP32);
    }

Create InitializeOpenVINO() Function

This function is where we make the preparations for performing inference, and it will be the first function called from the plugin in Unity*. The function will take in a path to an OpenVINO™ model and read in the network information. We will then set the batch size for the network using network.setBatchSize() and call the PrepareBlobs() function.

We can initialize our list of available devices by calling ie.GetAvailableDevices(). Any available GPUs will be stored last, so reverse the list. The first GPU found (typically integrated graphics) would be named GPU.0. The second would be named GPU.1 and so on.

Lastly, we will call the SetDeviceCache() function now that we know what devices are available.

Code:

    // Set up OpenVINO inference engine
    DLLExport void InitializeOpenVINO(char* modelPath) {
        // Read network file
        network = ie.ReadNetwork(modelPath);
        // Set batch size to one image
        network.setBatchSize(1);
        // Get the input and output names and set their precision
        PrepareBlobs();
        // Get a list of the available compute devices
        availableDevices = ie.GetAvailableDevices();
        // Reverse the order of the list
        std::reverse(availableDevices.begin(), availableDevices.end());
        // Specify the cache directory for GPU inference
        SetDeviceCache();
    }

Create SetInputDims() Function

Next, make a function to update the input resolution for the model from Unity*. The function will take in a width and height value. The output resolution (i.e. the number of values the model needs to predict) is determined by the input resolution. As a result, the input resolution has a significant impact on both inference speed and output quality.

OpenVINO™ provides the InferenceEngine::CNNNetwork::reshape method to update the input dimensions at runtime. This method also propagates the changes down to the outputs.

To use it, create an InferenceEngine::SizeVector variable and assign the new dimensions. We can then pass the SizeVector as input to network.reshape().

Initialize the dimensions of the texture variable with the provided width and height values.

Code:

    // Manually set the input resolution for the model
    DLLExport void SetInputDims(int width, int height) {
        // Collect the map of input names and shapes from IR
        auto input_shapes = network.getInputShapes();

        // Set new input shapes
        std::string input_name;
        InferenceEngine::SizeVector input_shape;
        // create a tuple for accessing the input dimensions
        std::tie(input_name, input_shape) = *input_shapes.begin();
        // set batch size to the first input dimension
        input_shape[0] = 1;
        // changes input height to the image one
        input_shape[2] = height;
        // changes input width to the image one
        input_shape[3] = width;
        input_shapes[input_name] = input_shape;

        // Call reshape
        // Perform shape inference with the new input dimensions
        network.reshape(input_shapes);
        // Initialize the texture variable with the new dimensions
        texture = cv::Mat(height, width, CV_8UC4);
    }
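The shape bookkeeping in SetInputDims() can be illustrated without the inference engine. The sketch below assumes the input shape is laid out as {batch, channels, height, width}, which is what the indices 0, 2, and 3 in the code above imply. WithResolution and ElementCount are hypothetical helper names, and the starting shape used in the usage note is an arbitrary example rather than the model's actual default.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Return a copy of a {N, C, H, W} shape with batch size 1 and a new resolution
std::vector<std::size_t> WithResolution(std::vector<std::size_t> shape,
                                        std::size_t width, std::size_t height) {
    assert(shape.size() == 4);  // expects {N, C, H, W}
    shape[0] = 1;       // one image per inference request
    shape[2] = height;  // rows
    shape[3] = width;   // columns
    return shape;
}

// Number of values in a tensor with the given shape
std::size_t ElementCount(const std::vector<std::size_t>& shape) {
    std::size_t count = 1;
    for (std::size_t dim : shape) {
        count *= dim;
    }
    return count;
}
```

Calling WithResolution({1, 3, 224, 224}, 960, 540) gives {1, 3, 540, 960}, and ElementCount on that result is 1,555,200. This shows why a higher input resolution means more values for the model to process.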

Create UploadModelToDevice() Function

In this function, create an executable version of the network and create an inference request for it. This function will take as input an index for the availableDevices variable. This will allow us to specify and switch between compute devices in the Unity* project at runtime.

Once we have the inference request, we can get pointers to the input and output tensors using the .GetBlob() method. We need to cast each Blob as a MemoryBlob. The dimensions of the input tensor can be accessed using the minput->getTensorDesc().getDims() method.

We will return the name of the device the model will be executed on back to Unity*.

Code:

    // Create an executable network for the target compute device
    DLLExport std::string* UploadModelToDevice(int deviceNum) {
        // Create executable network
        executable_network = ie.LoadNetwork(network, availableDevices[deviceNum]);
        // Create an inference request object
        infer_request = executable_network.CreateInferRequest();

        // Get a pointer to the input tensor for the model
        minput = as<MemoryBlob>(infer_request.GetBlob(firstInputName));
        // Get a pointer to the output tensor for the model
        moutput = as<MemoryBlob>(infer_request.GetBlob(firstOutputName));

        // Get the number of color channels
        num_channels = minput->getTensorDesc().getDims()[1];
        // Get the number of pixels in the input image
        size_t H = minput->getTensorDesc().getDims()[2];
        size_t W = minput->getTensorDesc().getDims()[3];
        nPixels = W * H;

        // Allocate the vector used for processing the raw model output
        data_img = std::vector<float>(nPixels * num_channels);

        // Return the name of the current compute device
        return &availableDevices[deviceNum];
    }

Create PerformInference() Function

The last function in the DLL will take a pointer to raw pixel data from a Unity* Texture2D as input. It will then prepare the input for the model, execute the model on the target device, process the raw output, and copy the processed output back to the memory location for the raw pixel data from Unity*.

We first need to assign the inputData to the texture.data. The inputData from Unity* will have an RGBA color format. However, the model is expecting an RGB color format. We can use the cv::cvtColor() method to convert the color format for the texture variable.

We can get write-only access to the input tensor for the model with minput->wmap().

The pixel values are stored in a different order in the OpenCV Mat compared to the input tensor for the model. The Mat stores the red, green, and blue color values for a given pixel next to each other. In contrast, the input tensor stores all the red values for the entire image next to each other, then the green values, then blue. We need to take this into account when writing values from texture to the input tensor and when reading values from the output tensor.
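The difference between the two layouts is easier to see with a small standalone example. The sketch below uses plain vectors instead of a cv::Mat and an input tensor; InterleavedToPlanar is a hypothetical helper name, but the index arithmetic is the same as the one used when copying from texture to the input tensor.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert interleaved pixels (RGBRGB...) to planar channels (RR...GG...BB...)
std::vector<std::uint8_t> InterleavedToPlanar(const std::vector<std::uint8_t>& interleaved,
                                              std::size_t nPixels, std::size_t numChannels) {
    assert(interleaved.size() == nPixels * numChannels);
    std::vector<std::uint8_t> planar(interleaved.size());
    for (std::size_t p = 0; p < nPixels; ++p) {
        for (std::size_t ch = 0; ch < numChannels; ++ch) {
            // Interleaved: channels of one pixel are adjacent
            // Planar: all pixels of one channel are adjacent
            planar[ch * nPixels + p] = interleaved[p * numChannels + ch];
        }
    }
    return planar;
}
```

For two pixels (10, 20, 30) and (11, 21, 31), the interleaved buffer {10, 20, 30, 11, 21, 31} becomes the planar buffer {10, 11, 20, 21, 30, 31}.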

Once we have updated the input tensor with the current inputData, we can execute the model with infer_request.Infer(). This will execute the model in synchronous mode.

When inference is complete, we can get read-only access to the output tensor with moutput->rmap().

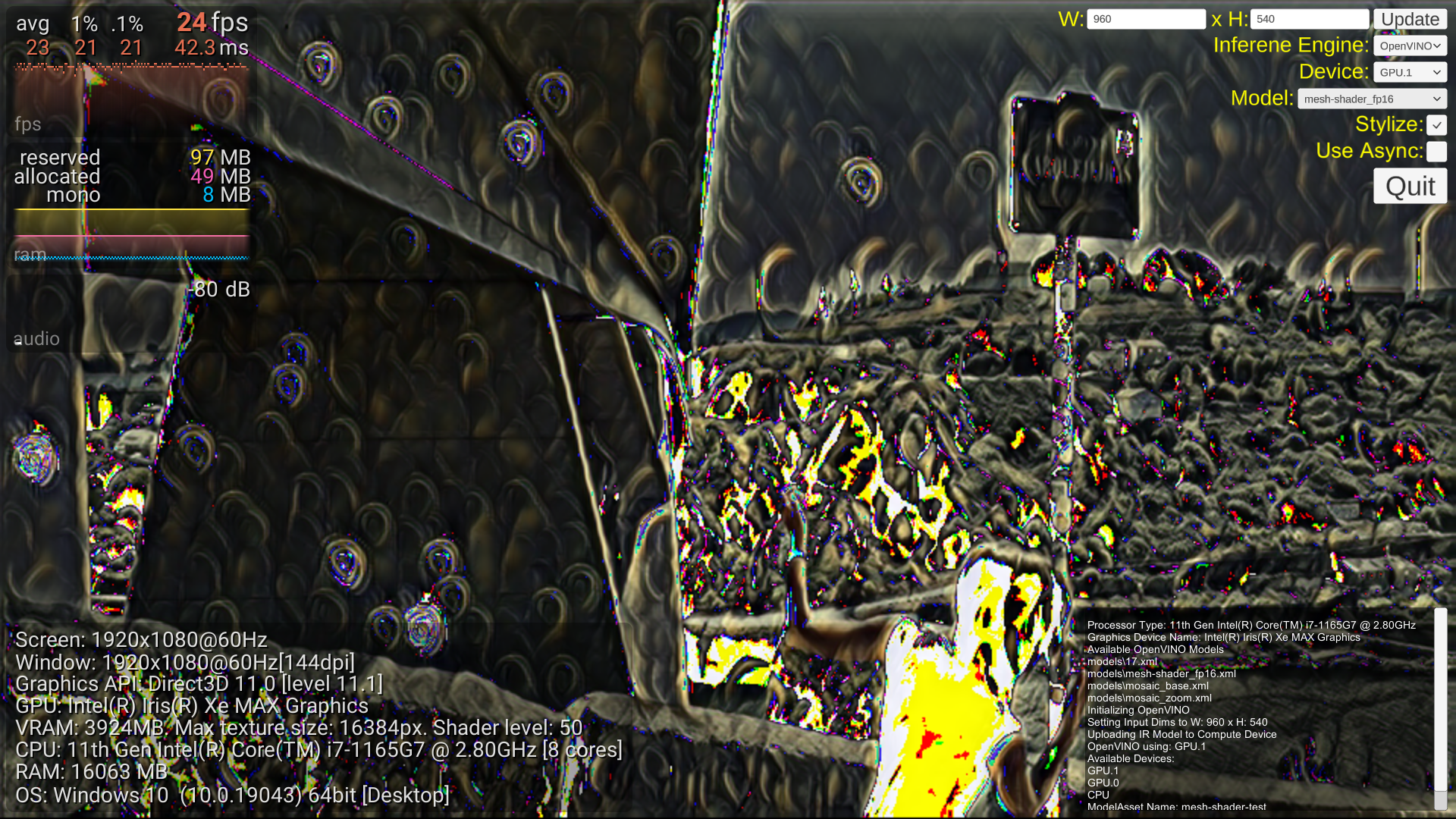

Valid color values are in the range [0, 255]. However, the model might output values slightly outside of that range. We need to clamp the output values to this range. If we don't, the output in Unity* will look like the image below where pixels near pure black or white are discolored.

We will perform this post processing step using the std::vector<float> data_img we declared earlier, before assigning the values back into texture.
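The clamping step can be sketched on its own as follows. This is a simplified illustration with plain vectors, and PlanarToClampedInterleaved is a hypothetical helper name. It combines the clamp with the planar-to-interleaved copy, and it clamps before converting to an 8-bit value because converting an out-of-range float to an integer type does not saturate in C++.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp planar float output to [0, 255] and write it as interleaved bytes
std::vector<std::uint8_t> PlanarToClampedInterleaved(const std::vector<float>& planar,
                                                     std::size_t nPixels,
                                                     std::size_t numChannels) {
    assert(planar.size() == nPixels * numChannels);
    std::vector<std::uint8_t> interleaved(planar.size());
    for (std::size_t p = 0; p < nPixels; ++p) {
        for (std::size_t ch = 0; ch < numChannels; ++ch) {
            float value = planar[ch * nPixels + p];
            // Keep the value inside the valid color range before converting
            value = std::min(std::max(value, 0.0f), 255.0f);
            interleaved[p * numChannels + ch] = static_cast<std::uint8_t>(value);
        }
    }
    return interleaved;
}
```

With the planar input {-3.5, 128, 300, 0, 255, 12} for two pixels and three channels, the result is {0, 255, 255, 128, 0, 12}: the values -3.5 and 300 are pulled back to 0 and 255.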

We need to use the cv::cvtColor() method again to add an alpha channel back to texture.

Finally, we can copy the pixel data from texture back to the Unity* texture data using the std::memcpy() method.

Code:

    // Perform inference with the provided texture data
    DLLExport void PerformInference(uchar* inputData) {
        // Assign the inputData to the OpenCV Mat
        texture.data = inputData;
        // Remove the alpha channel
        cv::cvtColor(texture, texture, cv::COLOR_RGBA2RGB);

        // The locked memory holder must stay alive for as long as its buffer is accessed
        LockedMemory<void> ilmHolder = minput->wmap();

        // Filling input tensor with image data
        auto input_data = ilmHolder.as<PrecisionTrait<Precision::U8>::value_type*>();

        // Iterate over each pixel in image
        for (size_t p = 0; p < nPixels; p++) {
            // Iterate over each color channel for each pixel in image
            for (size_t ch = 0; ch < num_channels; ++ch) {
                input_data[ch * nPixels + p] = texture.data[p * num_channels + ch];
            }
        }

        // Perform inference
        infer_request.Infer();

        // The locked memory holder must stay alive for as long as its buffer is accessed
        LockedMemory<const void> lmoHolder = moutput->rmap();
        const auto output_data = lmoHolder.as<const PrecisionTrait<Precision::FP32>::value_type*>();

        // Iterate through each pixel in the model output
        for (size_t p = 0; p < nPixels; p++) {
            // Iterate through each color channel for each pixel in image
            for (size_t ch = 0; ch < num_channels; ++ch) {
                // Get values from the model output
                data_img[p * num_channels + ch] = static_cast<float>(output_data[ch * nPixels + p]);

                // Clamp color values to the range [0, 255]
                if (data_img[p * num_channels + ch] < 0) data_img[p * num_channels + ch] = 0;
                if (data_img[p * num_channels + ch] > 255) data_img[p * num_channels + ch] = 255;

                // Copy the processed output to the OpenCV Mat
                texture.data[p * num_channels + ch] = data_img[p * num_channels + ch];
            }
        }

        // Add alpha channel
        cv::cvtColor(texture, texture, cv::COLOR_RGB2RGBA);
        // Copy values from the OpenCV Mat back to inputData
        std::memcpy(inputData, texture.data, texture.total() * texture.channels());
    }

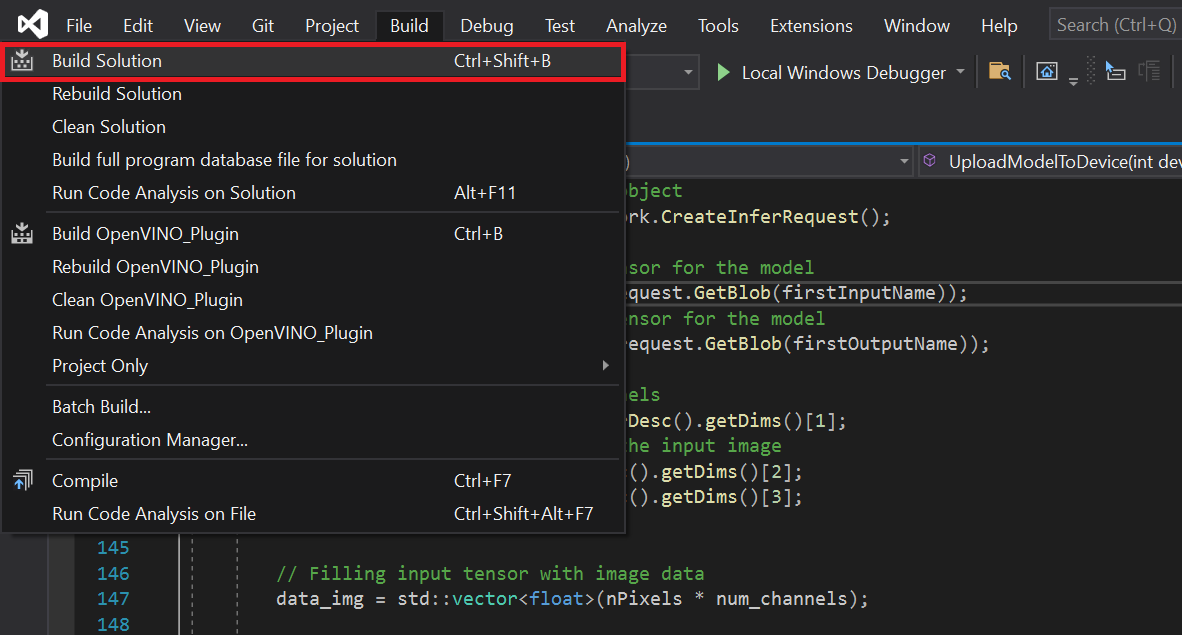

Build Solution

Now that the code is complete, we just need to build the solution to generate the .dll file.

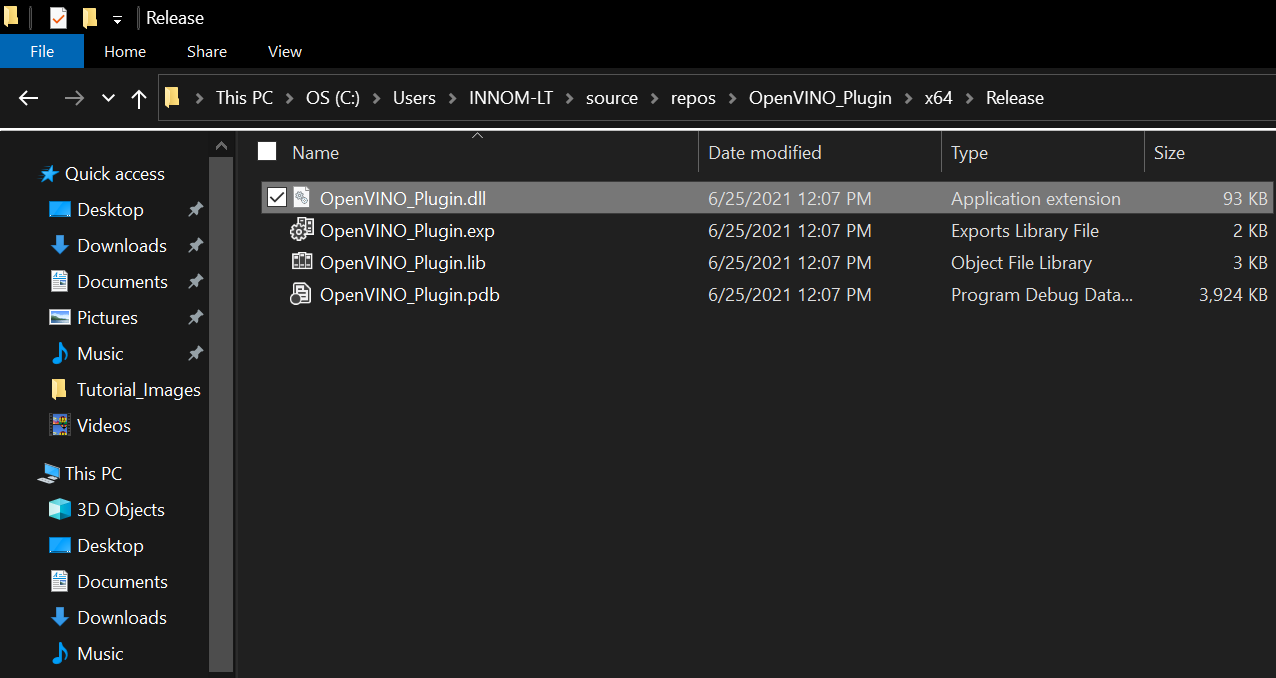

Open the Build menu at the top of the Visual Studio window and click Build Solution. This will generate a new x64 folder in the project's directory.

Navigate to that folder in the File Explorer and open the Release child folder. Inside, you will find the .dll file along with a few other files that will not be needed.

Gather Dependencies

The .dll file generated by our project is still dependent on other .dll files from both OpenVINO™ and OpenCV. Those .dll files have dependencies of their own as well. Copy these dependencies along with the OpenVINO_Plugin.dll file into a new folder called x86_64 for the Unity* project.

Here are the dependencies needed to use the OpenVINO_Plugin.dll file.

- clDNNPlugin.dll

- inference_engine.dll

- inference_engine_ir_reader.dll

- inference_engine_legacy.dll

- inference_engine_lp_transformations.dll

- inference_engine_preproc.dll

- inference_engine_transformations.dll

- libhwloc-5.dll

- MKLDNNPlugin.dll

- ngraph.dll

- opencv_core_parallel_tbb452_64.dll

- opencv_core452.dll

- opencv_imgcodecs452.dll

- opencv_imgproc452.dll

- plugins.xml

- tbb.dll

The required dependencies can be found in the following directories.

- OpenVINO: C:\Program Files (x86)\Intel\openvino_2021.3.394\inference_engine\bin\intel64\Release

- nGraph: C:\Program Files (x86)\Intel\openvino_2021.3.394\deployment_tools\ngraph\lib

- TBB: C:\Program Files (x86)\Intel\openvino_2021.3.394\deployment_tools\inference_engine\external\tbb\bin

- OpenCV: C:\Program Files (x86)\Intel\openvino_2021.3.394\opencv\bin

You can download a folder containing the OpenVINO_Plugin.dll file and its dependencies from the link below.

Conclusion

That is everything we need for the OpenVINO™ functionality. In the next part, we will demonstrate how to access this functionality as a plugin inside a Unity* project.

Corresponding Tutorial Sections:

- Developing OpenVINO™ Inferencing Plugin for Unity* Tutorial: Part 1 – Setup

- Developing OpenVINO™ Inferencing Plugin for Unity* Tutorial: Part 3 – Building the Unity* Plugin

Related Links:

Project Resources: