Major fields of modern computing rely heavily on matrices and tensors to efficiently represent and manipulate the properties of computational objects.

This is especially true for simulations, advanced numerical differential equations, visual computing, CGI VFX, financial risk analysis, gaming, and, of course, AI. In short, hardly any large software application remains untouched. GPUs and other similar accelerators play an important role these days, mainly because they were designed as matrix hardware from the ground up, which has obviously benefited computer graphics from the beginning.

What if we had a unified interface for matrix and tensor operations compatible with SPIR-V* cooperative matrix extensions, made it part of SYCL, and thus made it available to a wide variety of hardware and heterogeneous compute environments?

Combine this with a rich set of targeted and tuned performance kernels for frequent operations that can be easily adjusted and optimized for the full SYCL compute ecosystem, and we are close to a common unified abstraction for matrix hardware programming. This unified API for matrices could be used across various libraries and utilities.

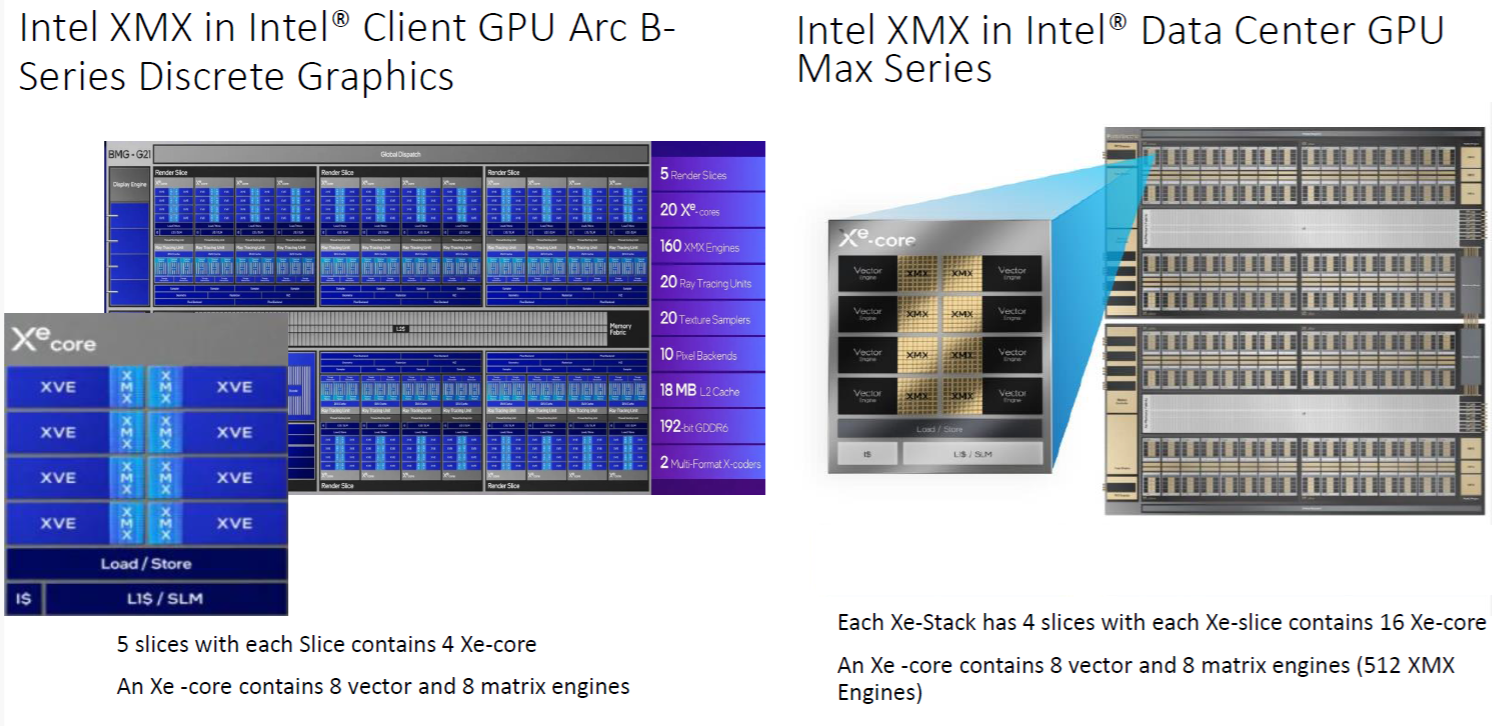

Figure 1: Tile and Slice organization in GPUs

Let us briefly discuss some of the highlights of Dounia Khaldi’s insightful presentation (linked in the callout box above)

Although frameworks like PyTorch* and Tensorflow* and libraries like the Intel® oneAPI Deep Neural Network Library (oneDNN) are designed to use matrix hardware acceleration very effectively, sometimes they may not have incorporated the latest accelerations yet, or we may want to build our own neural network applications, where these off-the shelf approaches seem too generalized or heavyweight.

This is where lower-level API abstractions can assist in writing custom workload-specific optimizations. Joint matrix provides this lower level of abstraction for custom solutions, enabling it to provide performance, productivity, and fusion capabilities, combined with the portability of using one codebase to target different matrix hardware.

“Joint Matrix: A Unified SYCL Extension for Matrix Hardware Programming”

⇒ Replay the full presentation at IXPUG 2025.

⇒ Check out the full slide deck.

Presenter: Dounia Khald, Intel Corporation

Matrix Hardware Examples

As an example of matrix hardware, we can look at Intel® Xe Matrix Extensions (Intel® XMX), a systolic array added to both Intel® GPUs for client and data center applications (Figure 1). It consists of a parallel processing architecture with identical tightly coupled processing elements (PEs) interconnected in a grid-like fashion. Each PE performs a specific calculation and then passes the results to its neighbors, creating a rhythmic data flow through the matrix.

Intel® Arc B-Series Discrete Graphics has 160 Intel XMX engines, and an Intel® Data Center GPU Max Series has 512 Intel XMX engines to work with. They are designed for fast processing of matrix multiply and add kernels using data types such as bfloat16, half, tf32, int8, or int4.

Similarly, current Intel® Xeon® scalable processors and Intel® Xeon® 6 processors include Intel® Advanced Matrix Extensions (Intel® AMX), with 2-dimensional registers for matrix compute kernel acceleration.

SYCL Joint Matrix Extension API

The experimental SYCL namespace sycl::ext::oneAPI::experimental::matrix defines a new data type used for joint matrix operations:

using namespace sycl::ext::intel::experimental::matrix;

template <typename Group, typename T, use Use, size_t Rows,

size_t Cols, layout Layout = layout::dynamic>

struct joint_matrix;

enum class use { a, b, accumulator};

enum class layout {row_major, col_major, dynamic};

Memory Operations

The typical memory load, store, and pointer operations can be applied for entire SYCL work groups, and data can be prefetched for streamlined execution. Pointers can be overloaded.

void joint_matrix_mad(Group g, joint_matrix<>&D,

joint_matrix<>&A, joint_matrix<>&B, joint_matrix<>&C);

void joint_matrix_apply(Group g, joint_matrix<>&jm, F&& func);

void joint_matrix_apply(Group g,

joint_matrix<>& jm0, joint_matrix<>& jm1, F&& func);

void joint_matrix_copy(Group g, joint_matrix<Group, T1, Use1,

Rows, Cols, Layout1> &dest,

joint_matrix<Group, T2, Use2, Rows, Cols, Layout2> &src);

Compute Operations

Matrix multiply and add (D=A*B+C) supports all the essentials, like per-element operations, activation functions, (de-)quantization, and matrix copy with data type conversion.

void joint_matrix_fill(Group g, joint_matrix<>&dst, T v);

void joint_matrix_load(Group g, joint_matrix<>&dst,

multi_ptr<> src, size_t stride, Layout layout);

void joint_matrix_load(Group g, joint_matrix<>&dst,

multi_ptr<> src, size_t stride);

void joint_matrix_store(Group g, joint_matrix<>src,

multi_ptr<> dst, unsigned stride, Layout layout);

void joint_matrix_prefetch(Group g, T* ptr, size_t

stride, layout Layout, Properties properties);

Code Sample

The resulting joint matrix multiply and add code sequence, a basic GEMM implementation, can be executed on any of the many hardware platforms supported by SYCL, whether from Intel, Nvidia*, AMD*, or other vendors. The main performance consideration for different architectures is hardware, work group, and subgroup tile sizing.

using namespace sycl::ext::oneapi::experimental::matrix;

queue q;

range<2> G = {M / MSG /*32*/, (N / NSG /*64*/) * SG_SIZE};

range<2> L = {MWG / MSG /*32*/, NWG / NSG /*64*/ * SG_SIZE};

…

q.submit([&](sycl::handler& cgh) {

auto pA = address_space_cast<…>(memA);

auto pB = address_space_cast<…>(memB);

auto pC = address_space_cast<…>(memC);

cgh.parallel_for(nd_range<2>(G, L), [=](nd_item<2> item) {

joint_matrix<…32,16…> A;

joint_matrix<…16,64,…> B;

joint_matrix<…32,64,…> C;

joint_matrix_fill(sg, subC, 0);

for (unsigned int kwg = 0; kwg < K; kwg += KWG /*32*/) {

for (unsigned int ksg = 0; ksg < KWG /*16*/;

ksg+=KSG/*16*/) {

joint_matrix_load(sg, A, pA + offsetA, K);

joint_matrix_load(sg, B, pB + offsetB, N);

joint_matrix_mad(sg, C, A, B, C);

}

}

joint_matrix_store(sg, C, pC + offsetC, N, layout);

});

});q.wait;

Figure 2: Data workgroup (kwg) and subgroup (sg) sizing.

Optimization Considerations

Block Sizing

Optimization of matrix operations begins with getting the datasets to work on, arranged into the best tensor format for our use case and the hardware we intend to use.

We can think of this in terms of access speed hierarchy. How much data should be in local cache, registers, or inside a given matrix accelerator kernel, and then additionally in terms of logical workgroup size and subgroup size for each execution kernel.

The sizing of the code snippet in Figure 2 assumes the following layout:

|

Kernel tile |

MxNxK |

4096x4096x4096 |

|

Work group tile |

MWGxNWGxK WG |

256x256x32 |

|

Subgroup tile |

MSGxNSGxKSG |

32x64x16 |

|

Joint matrix |

MJMxNJMxKJM |

8x16x16 or 32x64x16 |

Prefetch and Cache Control

For additional performance optimization, we can look at prefetching matrices A and B we intend to multiply:

// Before k loop

constexpr size_t prefDistance = 3;

for (int p = 0; p < prefDistance; p++)

joint_matrix_prefetch<8, 32>(sg, A + offset, K,

layout::row_major,

syclex::properties{syclex::prefetch_hint_L1});

// After joint_matrix_mad (before next load)

joint_matrix_prefetch<8, 32>(

sg, A + prefetch_offsetA, K, layout::row_major,

syclex::properties{syclex::prefetch_hint_L1});

We can also additionally use annotated pointers to bypass the cache completely to load matrix A out of turn if that would be beneficial to the execution flow and timing:

auto pA = syclex::annotated_ptr{A, syclex::properties{

syclintelex::read_hint<syclintelex::cache_control<

syclintelex::cache_mode::uncached,

syclex::cache_level::L1,syclex::cache_level::L3>>}};

joint_matrix_load(sg, A, pA + offsetA, K);

There are many opportunities and options for fine-tuning the performance of a joint_matrix operation.

Streamline Multiarchitecture Matrix Multiply

Support for SYCL Joint Matrix Extensions is available in the latest Intel® oneAPI DPC++/C++ Compiler and Intel® DPC++ Compatibility Tool 2025.x

You can check out the source code for the latest experimental joint matrix kernels on Dounia Khaldi’s GitHub.

Give it a go and use it for your next CUDA* to SYCL* code migration, custom AI, or edge AI project.

Find out More

- Programming Intel® XMX Using SYCL Joint Matrix Extension

- SYCL oneAPI Joint Matrix Extension GitHub

- IXPUG: Joint Matrix: A Unified SYCL Extension for Matrix Hardware Programming (Slides)

- IXPUG Annual Conference 2025