A growing number of workloads can benefit from AI to improve quality, increase efficiency, accelerate tasks, and reduce errors. As a result, GPUs, LLMs, inference and related terms are quickly becoming part of the vocabulary of businesses and developers looking to stay competitive. However, many challenges still make it hard to apply AI to tasks such as content creation and programming. Our customers have been vocal about the growing need for secure inference infrastructure that is simple to set up, scalable to their needs, and accessibly priced. We call these systems inference workstations.

Codename: Project Battlematrix

Designed to meet the needs of modern AI inference, Project Battlematrix is the codename for an all-in-one inference platform combining full-stack validated hardware and software. This platform enables artists, engineers and experienced professionals to increase their productivity by leveraging their own data or by creating their own customized, fine-tuned AI workflows.

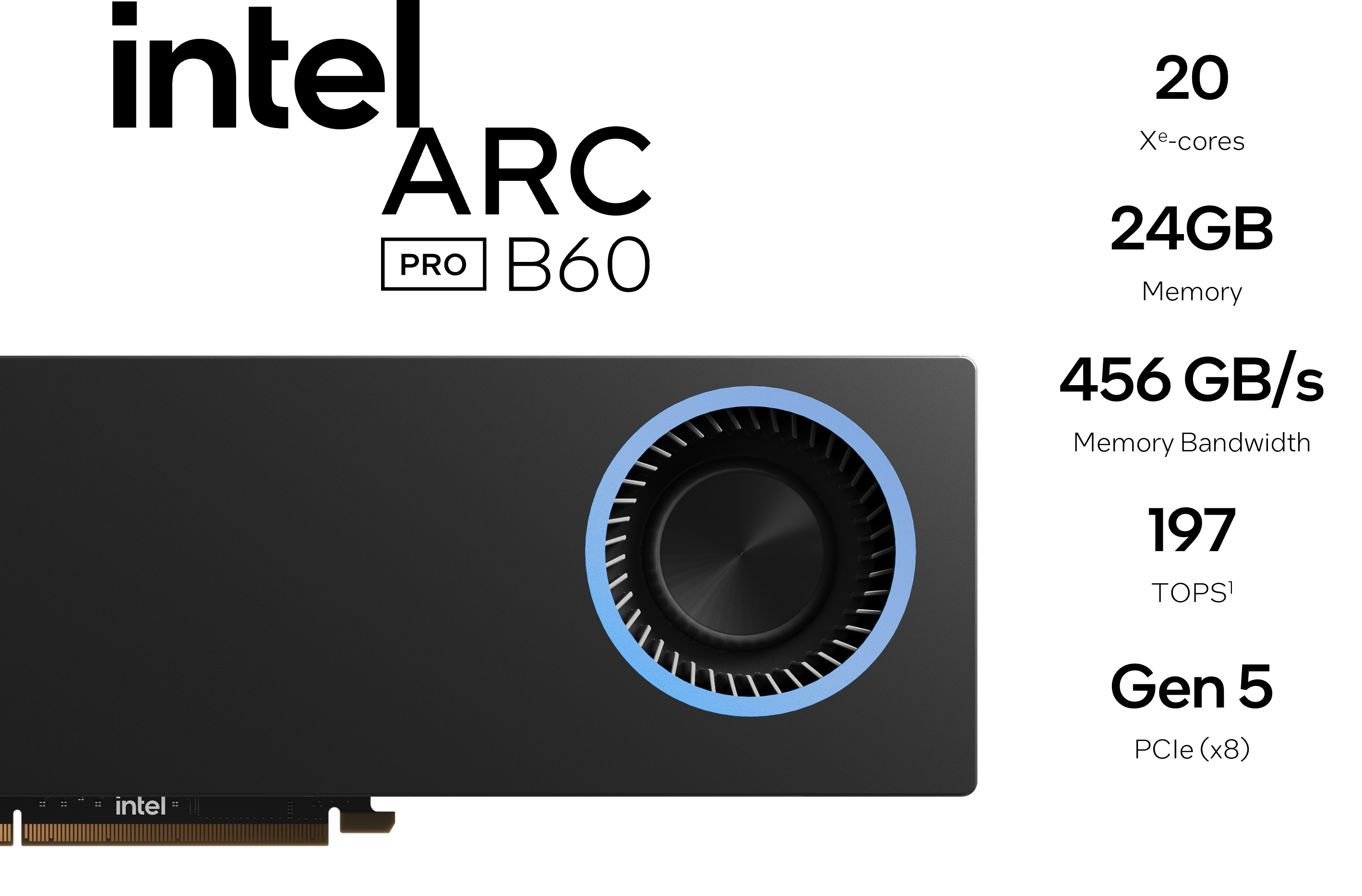

Project Battlematrix inference workstations are designed for professionals who prioritize data privacy and want to avoid the high subscription costs associated with proprietary AI models, but who still need the capability to deploy large language models (LLMs). The combined hardware and software workstation solution leverages the power of multiple Intel® Arc™ Pro B60 GPUs paired with a workstation-class Intel® Xeon® platform, ensuring high performance and efficiency.

The new Intel Arc Pro B60 GPU features 24GB of dedicated memory

The Intel Arc Pro B60 GPUs have been architected with the appropriate mix of compute, bandwidth and memory capacity to run demanding inference workloads efficiently. Multi-GPU configurations open the door to much more: higher performance, since each Intel Arc Pro B60 packs a lot of dedicated compute TOPs, and support for larger models that offer the higher accuracy and lower perplexity required to make a business impact.

But when it comes to AI inference, the capability to support not only larger models but also larger contexts is critical. Larger contexts are needed to work on more complex problems and maintain coherency through successive requests to the model. This requires a lot of GPU memory, and even more is required when considering higher concurrency, or the ability to serve multiple users with a single system.
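To make the memory pressure concrete, here is a rough sketch of how the key/value cache of a transformer grows with context length and concurrency. It uses the common estimate of two tensors (keys and values) per layer, per KV head, per token. The model shape below (64 layers, 8 KV heads, head dimension 128, 16-bit values) is a hypothetical example chosen for illustration, not a figure from this article, and real serving stacks add their own overheads.

```python
GIB = 1024 ** 3


def kv_cache_bytes(num_layers, num_kv_heads, head_dim, context_tokens,
                   concurrent_requests=1, bytes_per_value=2):
    """Estimate KV-cache size: keys and values for every layer, head and token."""
    # Factor 2 accounts for storing both a key and a value vector.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return per_token * context_tokens * concurrent_requests


# Hypothetical model shape, used only to illustrate the scaling.
shape = dict(num_layers=64, num_kv_heads=8, head_dim=128)

single = kv_cache_bytes(context_tokens=32_768, **shape)
shared = kv_cache_bytes(context_tokens=32_768, concurrent_requests=8, **shape)

print(f"1 request:  {single / GIB:.1f} GiB")   # 1 request:  8.0 GiB
print(f"8 requests: {shared / GIB:.1f} GiB")   # 8 requests: 64.0 GiB
```

Under these assumptions the cache alone grows linearly with both context length and the number of simultaneous requests, on top of the memory needed for the model weights.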

Our vision for Project Battlematrix includes:

- Up to 8 Intel Arc Pro B60 GPUs

- Up to 192 GB of combined VRAM and up to 1576 dense INT8 TOPs

- Workstation-class PCIe Gen 5 Intel Xeon platform

- Optimized Linux Software Experience
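The headline figures above follow from simple arithmetic on the per-GPU numbers. The sketch below reproduces them and adds a rough, weights-only estimate of how many 24 GB GPUs a model would need at different precisions. The 32-billion-parameter model size and the helper names are illustrative assumptions; the estimate ignores KV cache, activations and runtime overhead, so real deployments need extra headroom.

```python
import math

GPU_MEMORY_GB = 24        # per Intel Arc Pro B60, from the text
MAX_GPUS = 8              # upper bound quoted in the text
TOTAL_INT8_TOPS = 1576    # dense INT8 TOPs quoted for 8 GPUs


def combined_vram_gb(gpus: int) -> int:
    """Total VRAM across a multi-GPU configuration."""
    return gpus * GPU_MEMORY_GB


def min_gpus_for_weights(params_billions: float, bytes_per_param: float) -> int:
    """Weights-only lower bound on GPU count (hypothetical helper)."""
    weights_gb = params_billions * bytes_per_param  # 1e9 params x bytes = GB
    return math.ceil(weights_gb / GPU_MEMORY_GB)


print(combined_vram_gb(MAX_GPUS))      # 192
print(TOTAL_INT8_TOPS / MAX_GPUS)      # 197.0 TOPs implied per GPU

# Hypothetical 32-billion-parameter model at three weight precisions.
for label, width in [("FP16", 2), ("INT8", 1), ("4-bit", 0.5)]:
    print(label, min_gpus_for_weights(32, width))
```

The loop prints 3, 2 and 1 GPUs respectively, which shows why both quantization and multi-GPU configurations matter when sizing a system for larger models.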

AI-Assisted Productivity

Project Battlematrix is built with concrete applications of AI inference in mind. A good example is software development. Reproducing bugs large and small, identifying root causes and implementing fixes are part of the daily life of every software engineering team. The traditional process can take multiple days: analyze and debug test logs, locate issues, identify root causes, fix code (often while looking over thousands of lines) and verify the fixes.

Today, AI can help.

By inserting AI agents, this whole process can be completed in hours or less, with many benefits:

- Improved Efficiency: Triage and debugging effort reduced from hours to just minutes

- Reduced Misleading Error Codes: AI can filter out many test failures that are noise or are caused by external dependencies

- Lower Barrier to Entry: Users can quickly understand large and complex problems

Our goal with Project Battlematrix is to make these new AI capabilities and benefits more accessible, particularly to small and medium-sized businesses.

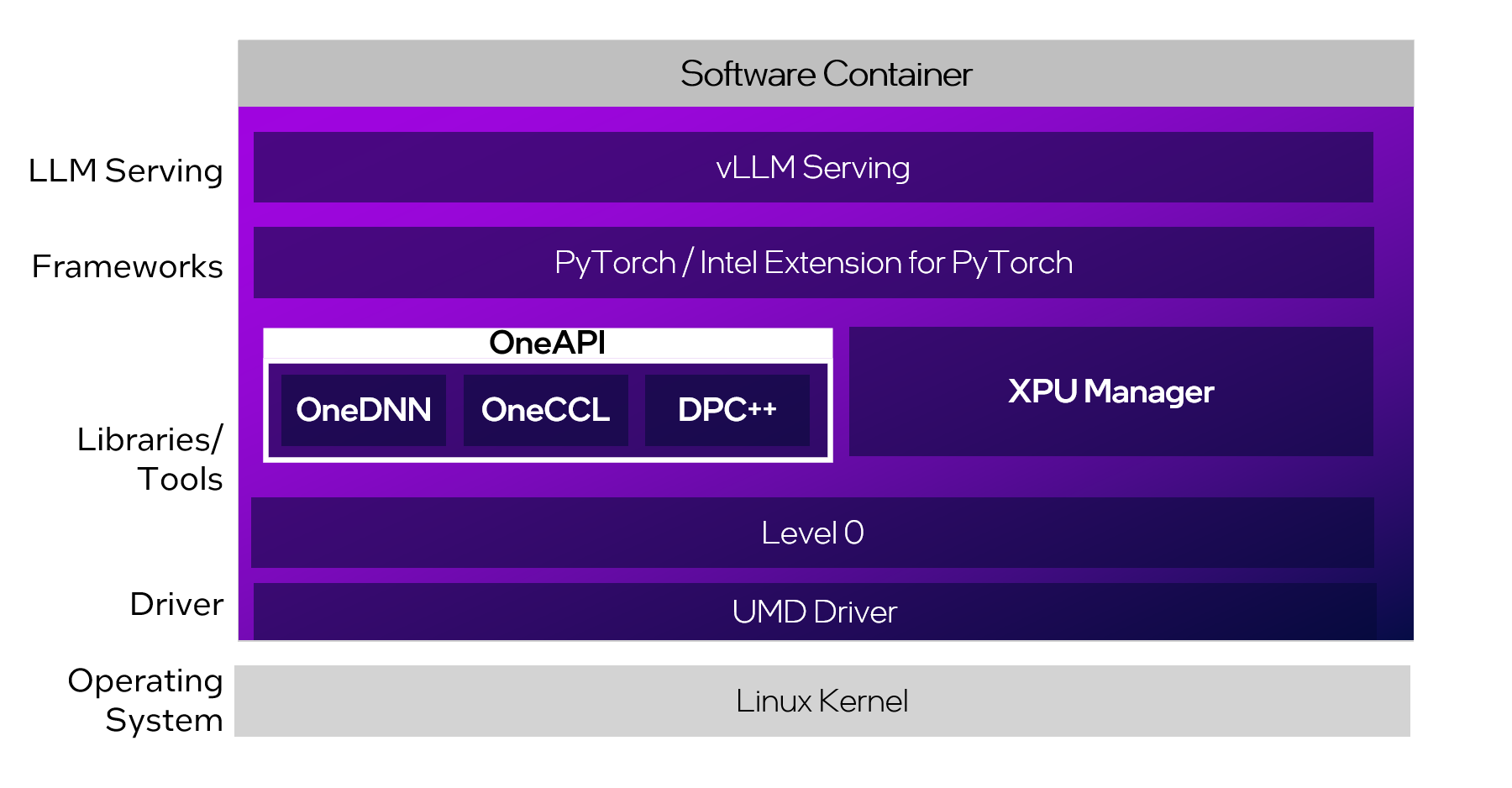

Optimized Linux Software Stack

Project Battlematrix aims to simplify adoption and ease of use with a new containerized solution built for Linux environments. It is optimized to deliver incredible inference performance with multi-GPU scaling and PCIe P2P data transfers, and designed to include enterprise-class reliability and manageability features such as ECC, SRIOV, telemetry and remote firmware updates.

We will deploy these capabilities in a fast-evolving software stack, with the intention of delivering a fully enabled containerized solution by the end of the year:

1. Basic enabling – Q2 2025

- Baseline Windows & Linux Driver

2. AI acceleration updates – Q3 2025

- Improved parallelism

- vLLM Serving Support

- Baseline GPU telemetry

- Baseline SRIOV support

3. Full feature set – Q4 2025

- GPU telemetry

- SRIOV

- Passthrough virtualization

- XPU manager with ECC toggle and firmware update support
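As an illustration of what the vLLM serving item on this roadmap could look like from a developer's side, here is a minimal client sketch. vLLM can expose an OpenAI-compatible HTTP API, so a plain JSON request to its chat completions route is enough. The URL, port and model name below are placeholder assumptions, not details of the Project Battlematrix container, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumptions: a vLLM OpenAI-compatible server is reachable at this address
# and serves a model under this name. Both values are placeholders.
BASE_URL = "http://localhost:8000/v1"
MODEL = "my-local-model"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST request for the OpenAI-style chat completions route."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]

# Example call, once a server is running locally:
#   ask("Summarize the likely root cause of this failing test log.")
```

Because the request never leaves the workstation, prompts and data stay on local hardware, which is the privacy argument made earlier in this article.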

Working with the ecosystem

To bring Project Battlematrix to life, we’re working with multiple partners, customers, developers and the community. Our add-in board vendors are launching Intel Arc Pro B60 cards in form factors ranging from compact passive solutions to actively cooled dual-GPU cards, enabling a broad set of systems. You can find more information about the Intel Arc Pro B60 products from our partners:

“Intel Arc GPUs show a greater price/performance advantage compared with other competitor products. We are very much looking forward to further testing more of Intel's GPU products, especially the large memory GPUs required by Chinese customers, which can cover more all-in-one product needs.” Meng Xiangde, General Manager Artificial Intelligence Product R&D Center (https://www.asiainfo.com/)

"Built on Intel's W-series platform, Beiliande redefines AI computing with Intel Arc Pro B60 — not just more powerful but reshaping what’s possible in the era of intelligent computing." Kitty Qin, CEO Beiliande (https://www.beiliande.com/)

“Intel's newest generation graphics card, Intel Arc Pro B60, with 24GB of video memory, can support algorithms with larger parameters, higher precision, and greater input volume, meeting the needs of high-precision, multi-concurrent task scenarios on campus. In this new historical opportunity for technological innovation, Seewo hopes to continue partnering with Intel to achieve mutual success in the market!” Huang Bolin, Vice President Future Education BG (https://global.cvte.com)

“The Intel Arc Pro B60, with its 24GB of video memory, can accommodate the parameter space of mainstream large language models in current privatized deployments, meeting the needs of enterprise-level RAG inference scenarios.” Ruan Zhimin, CEO Fit2Cloud (https://www.fit2cloud.com/)

“The Yuge 3U 4GPU AI All-in-One Machine is powered by Intel W-series processors and Intel Arc Pro B60, delivering robust performance and significantly reducing corporate costs. It supports the operation of the DeepSeek-R1 32B large model. It can quickly build a company's own intelligent Q&A system, ensuring data privacy and security.” Zhang Kang, CTO YGE (https://www.ygeit.com/)

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more at intel.com/performanceindex

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Product plans, capabilities, and features under development. Subject to change without notice.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.