By Rodolfo Alonso Hernández

Motivation

Until now, OpenStack's Neutron project has offered only one firewall implementation: an iptables-based firewall. This firewall is a natural fit for people using Linux Bridge for their networking needs. Unfortunately, Linux Bridge is not the only networking option in Neutron, nor is it the most popular. That "award" goes to Open vSwitch (OVS), which currently powers an astonishing 46% of all OpenStack public deployments. While iptables may be a perfect complement for deployments using Linux Bridge, it isn't necessarily the best fit for OVS. Meshing iptables with Open vSwitch requires a lot of coding and networking "voodoo", and OVS already provides its own mechanism for implementing rules internally: the OpenFlow protocol. Using OpenFlow rules is the approach we took.

The firewall I present here is based entirely on OVS rules, creating a pure OVS model that does not depend on functionality from the underlying platform. It uses the same public API to talk to the Neutron agent as the existing Linux Bridge firewall implementation, so it should be a drop-in replacement for people already using OVS.

Technical approach

At the OpenStack Summit in Vancouver in 2015, a different firewall design was presented. Although it is not yet implemented, it relies on the Linux connection-tracking ("conntrack") module, which makes it possible to build a stateful firewall by tracking the state of each connection. Using conntrack is expected to minimize the number of packets that must be brought up to user space for processing, and should therefore yield higher performance.

The firewall we implemented, on the other hand, is based on "learn" actions. A learn action tracks traffic in one direction and sets up a new flow that allows the same traffic flow in the reverse direction. The implementation relies only on OpenFlow flows plus the learn action, which is an Open vSwitch extension to OpenFlow.

When a Security Group rule is added, a corresponding "manual" OpenFlow rule is added to the OVS configuration. This rule allows, for example, ingress TCP traffic to a specific port. When a packet matches it, the "manual" rule lets the packet be delivered to its destination. Crucially, and this is a key aspect of the new firewall, the match also triggers a learn action that creates a new "automatic" rule, which allows the reply traffic to be sent back to the source.
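The manual/automatic rule pair can be illustrated with a toy Python model of a firewall protecting a single VM. This is a sketch under simplifying assumptions, not the networking-ovs-dpdk code: the class and method names are hypothetical, only one kind of manual rule (ingress TCP to a given port) is modelled, egress traffic is allowed only through learned rules, and learned rules never expire here, whereas OVS learn actions can set idle and hard timeouts.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple

# (src_ip, dst_ip, protocol, src_port, dst_port)
FlowKey = Tuple[str, str, str, int, int]


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    proto: str
    src_port: int
    dst_port: int

    def key(self) -> FlowKey:
        return (self.src_ip, self.dst_ip, self.proto, self.src_port, self.dst_port)

    def reverse_key(self) -> FlowKey:
        # The same flow seen from the other direction, i.e. a reply.
        return (self.dst_ip, self.src_ip, self.proto, self.dst_port, self.src_port)


@dataclass
class ToyLearnFirewall:
    """Hypothetical model of a learn-action firewall protecting one VM."""

    # "Manual" rules from the Security Group: allowed ingress TCP ports.
    manual_ingress_tcp_ports: Set[int] = field(default_factory=set)
    # "Automatic" rules installed by the learn action for reply traffic.
    learned_egress: Set[FlowKey] = field(default_factory=set)

    def ingress(self, pkt: Packet) -> bool:
        """Return True if a packet destined to the VM is allowed."""
        if pkt.proto == "tcp" and pkt.dst_port in self.manual_ingress_tcp_ports:
            # Learn action: install a rule that allows the reverse flow out.
            self.learned_egress.add(pkt.reverse_key())
            return True
        return False  # no matching rule: drop

    def egress(self, pkt: Packet) -> bool:
        """Return True if a packet leaving the VM matches a learned rule."""
        return pkt.key() in self.learned_egress


if __name__ == "__main__":
    fw = ToyLearnFirewall(manual_ingress_tcp_ports={22})
    request = Packet("192.0.2.10", "10.0.0.5", "tcp", 40000, 22)
    reply = Packet("10.0.0.5", "192.0.2.10", "tcp", 22, 40000)
    print(fw.egress(reply))     # False: nothing learned yet
    print(fw.ingress(request))  # True: matches the manual rule
    print(fw.egress(reply))     # True: allowed by the learned rule
```

Because the learned rule matches the exact reversed flow (addresses, protocol and ports), only replies to the accepted connection are let back out, not arbitrary traffic from port 22.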

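We initially worried that this design could hurt performance, because learn actions force the packets that trigger them to be processed in user space. However, the benchmark results later in this blog post show that, without DPDK, the learn-action firewall matched or outperformed iptables in every test with 10, 1,000 and 10,000 users, while the results with 1 and 100 users were mixed. More significantly, this firewall can use the DPDK features of OVS: with up to 1,000 users and packets of 128 bytes or more, its throughput was more than four times that of non-DPDK OVS with no firewall at all. At 10,000 users this advantage disappears, and for packets of 256 bytes or more the DPDK learn-action setup is slower than that non-DPDK baseline.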

How it works, in a nutshell

Traffic flows can be grouped into traffic between two internal virtual machines (east-west traffic) or traffic between an internal machine and an external host (north-south traffic). The Security Group rules only apply to machines controlled by Neutron and included in one or several security groups, which means only the virtual machines inside a compute host will be affected by these rules.

On a compute node, several OVS bridges are created to handle the host's traffic. The bridge that the virtual machines are attached to is the "integration bridge", and the firewall manages only the rules of this bridge.

The firewall rules applied in the integration bridge begin to process traffic as soon as a packet arrives on this bridge. The following section describes the OVS processing tables and rules applied.

Processing tables, in-depth

The following graphics show the different processing tables, the rules applied, and the priority (from top to bottom).

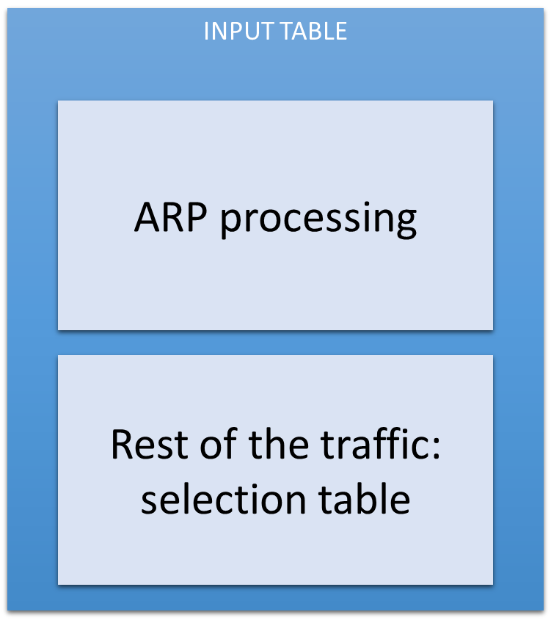

Table 0: The Input Table

The first table, the input table (table 0), is the default table: all traffic entering the bridge is processed first by this table. ARP packets are processed with the highest priority, because each machine in a VLAN must be able to advertise its address to the other machines in that VLAN.

The rest of the traffic (non-ARP packets) is sent to the selection table.
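Table lookup follows OpenFlow priority semantics: among the flows that match a packet, the one with the highest priority wins. The Python sketch below models that rule with the two kinds of flows described for table 0. The priority values, the handle_arp action name and the treatment of a table miss as a drop are assumptions for illustration, not the flows the firewall actually installs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Packet = Dict[str, int]  # simplified packet: header field name -> value

ETH_TYPE_ARP = 0x0806
ETH_TYPE_IPV4 = 0x0800


@dataclass(frozen=True)
class Flow:
    priority: int
    match: Callable[[Packet], bool]
    action: str


def lookup(table: List[Flow], pkt: Packet) -> str:
    """Return the action of the highest-priority flow that matches pkt."""
    for flow in sorted(table, key=lambda f: f.priority, reverse=True):
        if flow.match(pkt):
            return flow.action
    return "drop"  # table miss, modelled as a drop in this sketch


# Hypothetical priorities; only their relative order matters.
TABLE_0 = [
    Flow(10, lambda p: True, "goto_table:1"),  # all non-ARP traffic
    Flow(100, lambda p: p.get("eth_type") == ETH_TYPE_ARP, "handle_arp"),
]
```

The flows are deliberately listed out of priority order: the result depends only on the priority values, not on the order in which the flows were added.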

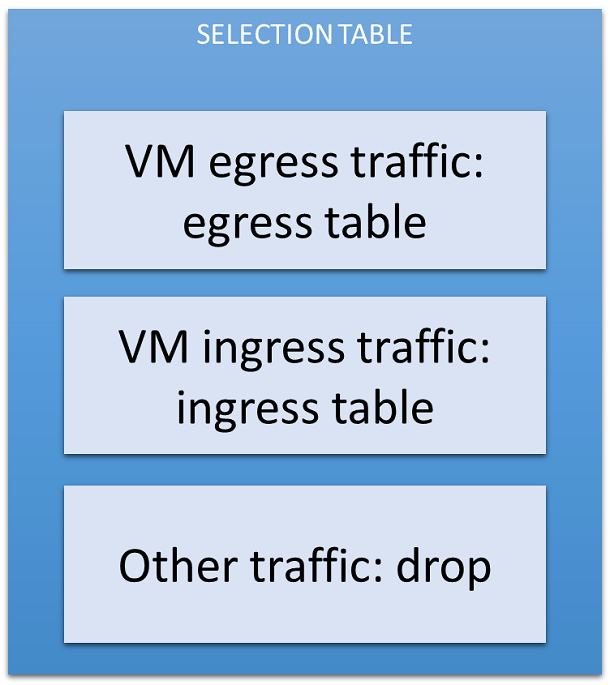

Table 1: The Selection Table

The selection table checks whether each packet comes from or goes to a virtual machine. Its rules ensure that only packets matching the stored port MAC address, VLAN tag and port number are allowed to pass. If a packet is a DHCP packet, its IP address must be 0.0.0.0.

Traffic that does not match these rules is dropped.
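A minimal Python sketch of the selection checks, for packets sent by a VM, might look like this. The port records, field names and return values are hypothetical; VLAN tagging is simplified to a field on the packet; and we assume the 0.0.0.0 requirement applies to the source IP address of a DHCP packet, as in a request from a client that has no address yet.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class PortInfo:
    mac: str   # MAC address stored for the VM's port
    vlan: int  # local VLAN tag of the port's network


@dataclass(frozen=True)
class Packet:
    in_port: int
    src_mac: str
    vlan: int
    is_dhcp: bool = False
    src_ip: Optional[str] = None


def selection_table(ports: Dict[int, PortInfo], pkt: Packet) -> str:
    """Toy check for packets sent by a VM: drop anything that does not match its port."""
    port = ports.get(pkt.in_port)
    if port is None:
        return "drop"  # unknown port number
    if pkt.src_mac != port.mac or pkt.vlan != port.vlan:
        return "drop"  # spoofed MAC address or wrong VLAN
    if pkt.is_dhcp and pkt.src_ip != "0.0.0.0":
        return "drop"  # assumption: the 0.0.0.0 check applies to the source IP
    return "accept"
```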

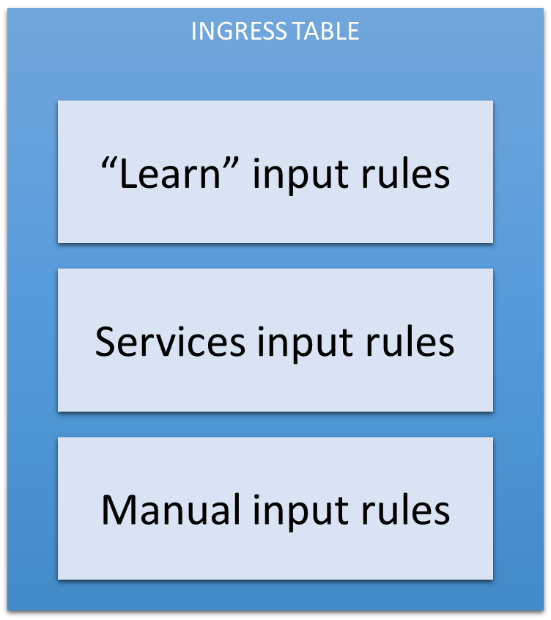

Table 2: The Ingress Table

The ingress table has three kinds of rules:

- "Learn" input rules are created automatically when an output rule is matched. As described above, a match on an output rule triggers a learn action that creates the reverse input rule.

- Services input rules are always added by the firewall to allow certain ICMP traffic and DHCP messages.

- Manual input rules are added by the user in the Security Group.

If the traffic doesn't match any of these rules, it is dropped.
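The ingress table's three kinds of rules, with a drop for everything else, can be sketched in Python as follows. The rules are checked in the order they are listed above, which is an assumption (the real order is set by flow priorities), and the set of ICMP types accepted by the services rules is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set, Tuple

# (src_ip, dst_ip, protocol, src_port, dst_port)
FlowKey = Tuple[str, str, str, int, int]

# Hypothetical: ICMP types let through by the "services" rules.
SERVICE_ICMP_TYPES = {0, 3, 11}  # echo reply, destination unreachable, time exceeded


@dataclass(frozen=True)
class Packet:
    key: FlowKey
    icmp_type: Optional[int] = None
    is_dhcp: bool = False


@dataclass
class IngressTable:
    learned: Set[FlowKey] = field(default_factory=set)       # created by learn actions
    manual_tcp_ports: Set[int] = field(default_factory=set)  # from Security Group rules

    def process(self, pkt: Packet) -> str:
        _, _, proto, _, dst_port = pkt.key
        if pkt.key in self.learned:
            return "allow:learned"  # reply to traffic the VM sent out
        if pkt.is_dhcp or (proto == "icmp" and pkt.icmp_type in SERVICE_ICMP_TYPES):
            return "allow:service"  # always-present services rules
        if proto == "tcp" and dst_port in self.manual_tcp_ports:
            return "allow:manual"   # user-defined Security Group rule
        return "drop"               # no rule matched
```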

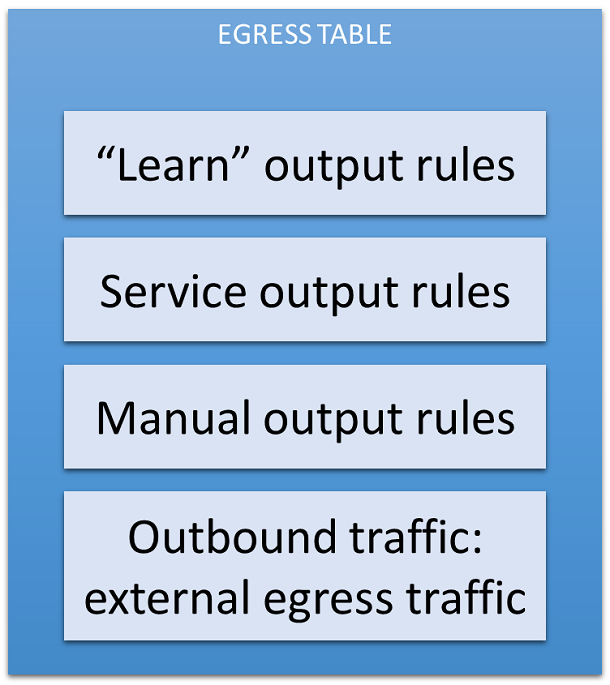

Table 3: The Egress Table

The egress table has three kinds of rules, plus a fourth, default one:

- "Learn" output rules are created automatically when an input rule is matched. As described above, a match on an input rule triggers a learn action that creates the reverse output rule.

- Services output rules are always added by the firewall to allow certain ICMP traffic and DHCP messages.

- Manual output rules are added by the user in the Security Group.

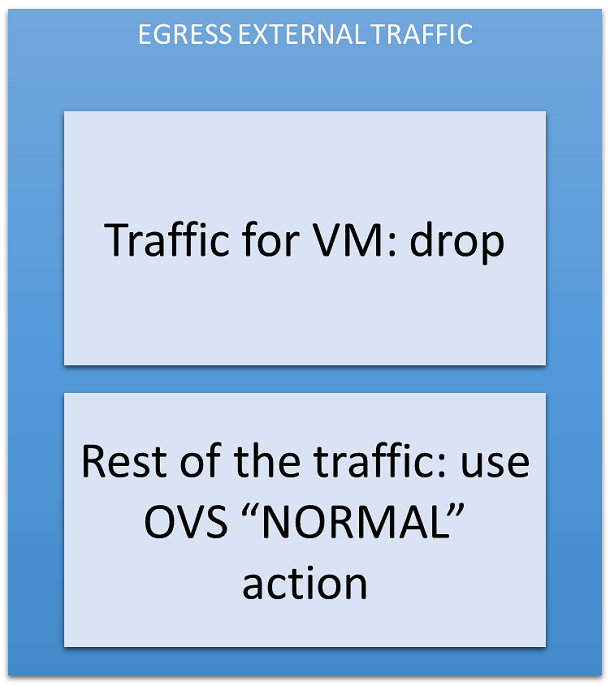

If the traffic doesn't match any of the previous rules, it is sent to the egress external traffic table.

The egress external traffic table processes north-south traffic: traffic reaches it when it needs to leave the integration bridge. A final check is made here: any traffic in this table that is addressed to a virtual machine is dropped, because this table must handle only external egress traffic. Traffic that passes this check is forwarded with the OVS "normal" action, which switches packets like a traditional L2 switch, using OVS's built-in MAC learning table.
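The egress path, including the hand-off to the egress external traffic table, can be sketched the same way. As before, this is a simplified Python model with hypothetical names: the services rules are reduced to a single flag, and "addressed to a virtual machine" is approximated by checking the destination MAC address against the MACs of local VMs.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple

FlowKey = Tuple[str, str, str, int, int]  # (src_ip, dst_ip, proto, src_port, dst_port)


@dataclass(frozen=True)
class Packet:
    key: FlowKey
    dst_mac: str
    is_service: bool = False  # stands in for the ICMP/DHCP "services" rules


@dataclass
class EgressPipeline:
    learned: Set[FlowKey] = field(default_factory=set)           # from learn actions
    manual_tcp_dst_ports: Set[int] = field(default_factory=set)  # Security Group rules
    local_vm_macs: Set[str] = field(default_factory=set)         # VMs on this bridge

    def egress_table(self, pkt: Packet) -> str:
        if pkt.key in self.learned or pkt.is_service:
            return "allow"
        if pkt.key[2] == "tcp" and pkt.key[4] in self.manual_tcp_dst_ports:
            return "allow"
        # Fourth, default rule: hand the packet to the egress external table.
        return self.egress_external_table(pkt)

    def egress_external_table(self, pkt: Packet) -> str:
        if pkt.dst_mac in self.local_vm_macs:
            return "drop"  # traffic to a VM must not be let through here
        return "normal"    # forward with the OVS "normal" action
```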

Benchmarks

We conducted the benchmark testing of this new firewall on a server with two Intel® Xeon® processors E5-2680 v2 @ 2.80GHz (40 threads) and 64 GB of RAM.

A single, combined controller/compute node was deployed for this test, with a virtual machine running on the same host. The virtual machine ran Ubuntu* Desktop Edition 14.04 LTS (3.16.0-30 kernel) with 4 GB of RAM and 8 cores.

To inject traffic inside the host and the virtual machine, an Ixia XG12 chassis was used with a 10 Gbps port.

In the benchmark results tables shown below, all values are throughput in Mbps; rows give the packet size in bytes and columns give the number of users.

No DPDK, no firewall

| Packet size (bytes) \ Users | 1 | 10 | 100 | 1,000 | 10,000 |
|---|---|---|---|---|---|
| 64 | 93.14 | 86.66 | 98.58 | 96.41 | 101.38 |
| 128 | 121.08 | 122.29 | 118.03 | 121.78 | 118.17 |
| 256 | 144.93 | 197.10 | 197.08 | 197.09 | 197.08 |
| 512 | 366.59 | 421.05 | 420.78 | 420.88 | 420.83 |
| 1024 | 870.21 | 870.43 | 814.53 | 815.06 | 759.62 |
| 1280 | 1,122.00 | 984.45 | 1,053.85 | 1,053.03 | 929.00 |
| 1518 | 1,125.55 | 1,264.42 | 1,264.04 | 1,195.11 | 1,056.33 |

No DPDK, iptables

| Packet size (bytes) \ Users | 1 | 10 | 100 | 1,000 | 10,000 |
|---|---|---|---|---|---|
| 64 | 93.18 | 91.38 | 84.75 | 92.83 | 84.20 |
| 128 | 101.06 | 88.33 | 94.29 | 87.51 | 90.16 |
| 256 | 197.10 | 144.75 | 197.10 | 196.90 | 144.86 |
| 512 | 421.05 | 312.54 | 312.78 | 366.90 | 312.78 |
| 1024 | 815.28 | 594.25 | 704.98 | 760.15 | 649.80 |
| 1280 | 984.56 | 707.33 | 984.57 | 983.91 | 818.45 |
| 1518 | 1,125.80 | 709.09 | 1,125.43 | 1,125.55 | 875.31 |

No DPDK, learn actions

| Packet size (bytes) \ Users | 1 | 10 | 100 | 1,000 | 10,000 |
|---|---|---|---|---|---|
| 64 | 92.45 | 101.95 | 76.36 | 93.24 | 100.92 |
| 128 | 131.15 | 122.00 | 99.48 | 108.40 | 91.91 |
| 256 | 196.94 | 197.09 | 197.10 | 197.06 | 197.13 |
| 512 | 366.59 | 421.05 | 421.04 | 421.02 | 366.95 |
| 1024 | 759.61 | 870.34 | 815.04 | 870.21 | 704.87 |
| 1280 | 983.69 | 986.76 | 929.21 | 984.55 | 929.15 |
| 1518 | 931.07 | 1,264.45 | 986.17 | 1,264.32 | 931.54 |

DPDK, no firewall

| Packet size (bytes) \ Users | 1 | 10 | 100 | 1,000 | 10,000 |
|---|---|---|---|---|---|
| 64 | 1,641.76 | 923.58 | 952.42 | 1,010.71 | 910.67 |
| 128 | 2,761.31 | 1,575.62 | 1,578.31 | 1,708.01 | 1,543.14 |
| 256 | 5,257.29 | 3,188.03 | 3,186.35 | 3,350.85 | 3,074.94 |
| 512 | 8,946.62 | 5,304.72 | 5,393.62 | 5,616.17 | 5,410.86 |
| 1024 | 9,807.88 | 9,699.39 | 9,634.91 | 9,806.72 | 8,810.03 |
| 1280 | 9,845.58 | 9,845.57 | 9,845.55 | 9,845.53 | 9,845.50 |
| 1518 | 9,869.43 | 9,869.37 | 9,869.38 | 9,869.39 | 9,869.34 |

DPDK, learn actions

| Packet size (bytes) \ Users | 1 | 10 | 100 | 1,000 | 10,000 |
|---|---|---|---|---|---|
| 64 | 559.01 | 359.72 | 311.09 | 252.73 | 134.53 |
| 128 | 1,022.93 | 713.27 | 540.54 | 540.28 | 151.89 |
| 256 | 2,028.90 | 1,353.60 | 1,066.66 | 996.52 | 163.30 |
| 512 | 3,742.67 | 2,537.26 | 2,043.94 | 1,898.97 | 169.93 |
| 1024 | 6,619.18 | 4,817.83 | 3,829.52 | 3,712.83 | 248.32 |
| 1280 | 8,012.43 | 5,858.60 | 4,709.67 | 4,534.04 | 475.08 |
| 1518 | 8,946.96 | 6,786.09 | 5,472.00 | 5,296.74 | 551.59 |

Note: All data presented in these tables is preliminary. Software and workloads used in performance tests may have been optimized for performance only on Intel® microprocessors. Performance tests, such as SYSmark* and MobileMark*, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more information go to http://www.intel.com/performance.

For more complete information about performance and benchmark results, visit www.intel.com/benchmarks.
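The speedup ratios quoted for DPDK can be recomputed directly from the tables. The short Python script below divides the "DPDK, learn actions" throughput by the "No DPDK, no firewall" throughput for the 1,000-user and 10,000-user columns; the numbers are copied from those two tables and nothing else is assumed.

```python
from typing import List

PACKET_SIZES = [64, 128, 256, 512, 1024, 1280, 1518]  # bytes

# Throughput in Mbps, copied from the "No DPDK, no firewall" and
# "DPDK, learn actions" tables (columns for 1,000 and 10,000 users).
NO_DPDK_NO_FW = {
    1000: [96.41, 121.78, 197.09, 420.88, 815.06, 1053.03, 1195.11],
    10000: [101.38, 118.17, 197.08, 420.83, 759.62, 929.00, 1056.33],
}
DPDK_LEARN = {
    1000: [252.73, 540.28, 996.52, 1898.97, 3712.83, 4534.04, 5296.74],
    10000: [134.53, 151.89, 163.30, 169.93, 248.32, 475.08, 551.59],
}


def speedups(users: int) -> List[float]:
    """Ratio of DPDK + learn-action throughput to non-DPDK, no-firewall throughput."""
    return [
        round(fast / base, 2)
        for fast, base in zip(DPDK_LEARN[users], NO_DPDK_NO_FW[users])
    ]


if __name__ == "__main__":
    for users in (1000, 10000):
        for size, ratio in zip(PACKET_SIZES, speedups(users)):
            print(f"{users:>6} users, {size:>5} bytes: {ratio:.2f}x")
```

At 1,000 users the ratio is above 4x for every packet size from 128 to 1518 bytes (2.62x at 64 bytes); at 10,000 users it falls below 1x for packets of 256 bytes or more.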

How to deploy it

To use this firewall in combination with OVS DPDK, you need to download the networking-ovs-dpdk project:

```shell
git clone https://github.com/stackforge/networking-ovs-dpdk.git
```

Alternatively, you can install the project as a pip package:

```shell
pip install networking-ovs-dpdk
```

To enable the firewall, add this section to your devstack/local.conf file:

```ini
[[post-config|/etc/neutron/plugins/ml2/ml2_conf.ini]]
[securitygroup]
# iptables-based driver, commented out:
#firewall_driver = neutron.agent.linux.iptables_firewall
# OVS firewall based on learn actions:
firewall_driver = networking_ovs_dpdk.agent.ovs_dpdk_firewall.OVSFirewallDriver
```
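When stack.sh runs, devstack merges the post-config section into ml2_conf.ini. One way to confirm which driver ended up configured is to read the file back with Python's standard configparser module, as in this sketch; the helper names are hypothetical, and the path and class name are the ones from the snippet above.

```python
import configparser

# Path and driver class taken from the local.conf snippet above.
ML2_CONF = "/etc/neutron/plugins/ml2/ml2_conf.ini"
OVS_FIREWALL = "networking_ovs_dpdk.agent.ovs_dpdk_firewall.OVSFirewallDriver"


def firewall_driver(ini_text: str) -> str:
    """Return the [securitygroup] firewall_driver value, or "" if it is not set."""
    # No %-interpolation, and tolerate duplicate keys (the last one wins).
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ini_text)  # lines starting with '#' are skipped as comments
    return parser.get("securitygroup", "firewall_driver", fallback="").strip()


def uses_ovs_firewall(ini_text: str) -> bool:
    """True if the learn-action OVS firewall driver is the configured one."""
    return firewall_driver(ini_text) == OVS_FIREWALL
```

On the node itself, calling uses_ovs_firewall() on the contents of ML2_CONF should return True once the post-config section has been applied.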