Overview

Mechanical and electrical parts inevitably wear out with use. This wear leads to device malfunctions, equipment downtime, and costly repairs. Predictive asset maintenance is a proactive approach that uses historical data to predict potential asset failures in advance. This allows organizations to detect and address issues early, prevent unexpected breakdowns, and reduce equipment downtime. In turn, this reduces costs, enhances safety, and optimizes asset performance to ensure continuous operation.

Addressing this problem requires a solution that captures data in real time and determines whether it contains anomalies, that is, deviations from the expected values. The Predictive Assets Maintenance reference use case is one such end-to-end AI solution, covering data loading, data processing, built-in models, training, and inference. It is set up to identify anomalous data points for an elevator, equipment that breaks down often and inconveniences customers and owners alike. The absolute value of vibration is used to determine anomalies. The goal is to reduce developers’ time to solution and provide an optimized pipeline that can be customized as needed.

Time Series Analysis Using BigDL-Chronos

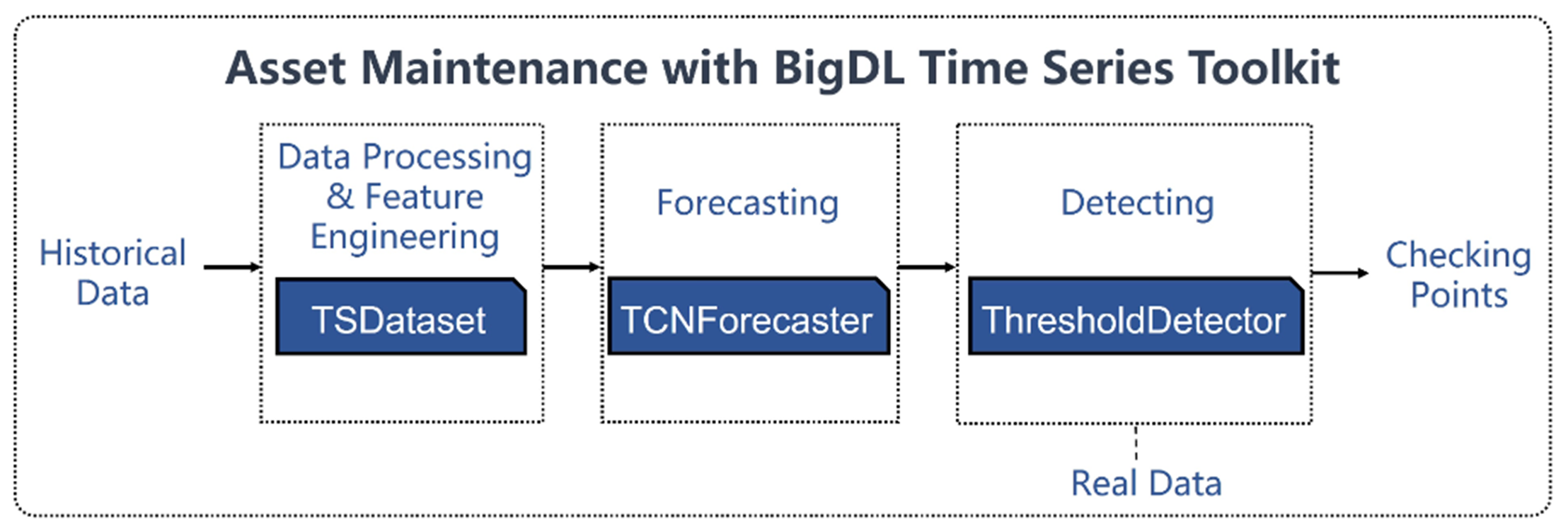

BigDL-Chronos (Chronos for short) supports building AI solutions on a single node or a cluster and provides more than 10 built-in models for forecasting, anomaly detection, and simulation. It also supports performance tuning for low latency and high throughput on Intel® hardware. By deploying one model that forecasts future values from the input data and another that detects anomalies from the predicted and real data, the pipeline can detect abnormal behavior or potential failures before significant asset damage occurs. Three objects from the BigDL API are used: TSDataset, TCNForecaster, and ThresholdDetector.

The high-level architecture of the reference use case is shown in this diagram:

Figure 1. Pipeline for predictive assets maintenance
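To make the forecast-then-detect flow concrete, here is a toy sketch in plain Python. It is not Chronos code: the hypothetical `moving_average_forecast` stands in for TCNForecaster, and the hypothetical `detect` compares real and predicted values against a fixed threshold in place of ThresholdDetector. The readings are made up.

```python
# Toy forecast-then-detect pipeline mirroring the architecture above.
# ASSUMPTION: moving_average_forecast is a hypothetical stand-in for
# TCNForecaster and detect is a hypothetical stand-in for ThresholdDetector;
# neither is part of the BigDL-Chronos API.


def moving_average_forecast(series, lookback):
    """Predict series[t] as the mean of the `lookback` values before it."""
    preds = []
    for t in range(lookback, len(series)):
        window = series[t - lookback:t]
        preds.append(sum(window) / lookback)
    return preds  # preds[i] is the forecast for series[lookback + i]


def detect(real, predicted, threshold):
    """Return positions where |real - predicted| exceeds the threshold."""
    return [i for i, (r, p) in enumerate(zip(real, predicted))
            if abs(r - p) > threshold]


# Hypothetical absolute vibration readings with a spike at position 6.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 5.0, 1.0, 1.0]
lookback = 3
predicted = moving_average_forecast(vibration, lookback)
real = vibration[lookback:]  # align each real value with its forecast
flagged = [i + lookback for i in detect(real, predicted, threshold=2.0)]
print(flagged)  # [6]
```

With `threshold=2.0` only the spike is flagged. A threshold of 1.0 would also flag positions 7 and 8, because the spike inflates the moving-average forecasts whose windows include it.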

TSDataset: Data Processing and Feature Engineering

TSDataset handles data processing, including imputation, deduplication, resampling, scaling and unscaling, and rolling (slicing the series into fixed-length input and target windows), as well as feature engineering based on datetime and aggregation features. It can be initialized from a pandas DataFrame and converted to a pandas DataFrame or a NumPy ndarray.
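The processing steps named above can be illustrated in plain Python. The helpers below (`deduplicate`, `impute_last`, `fit_scaler`, `scale`, `unscale`, `roll`) are hypothetical and only approximate the ideas; they are not TSDataset methods and do not reproduce its exact semantics. Here `lookback` and `horizon` play the roles of the historical and predicted lengths that TCNForecaster is configured with.

```python
# Plain-Python illustrations of several TSDataset-style steps.
# These helpers are hypothetical and only mimic the ideas; they are not
# TSDataset methods and do not reproduce its exact semantics.
import statistics


def deduplicate(rows):
    """Drop records that exactly repeat an earlier (timestamp, value) record."""
    seen, out = set(), []
    for row in rows:
        if row not in seen:
            seen.add(row)
            out.append(row)
    return out


def impute_last(values):
    """Forward-fill None with the last observed value (leading gaps stay None)."""
    out, last = [], None
    for v in values:
        if v is None:
            v = last
        out.append(v)
        last = v
    return out


def fit_scaler(values):
    """Return (mean, population standard deviation) for standard scaling."""
    return statistics.mean(values), statistics.pstdev(values)


def scale(values, mean, std):
    return [(v - mean) / std for v in values]


def unscale(values, mean, std):
    return [v * std + mean for v in values]


def roll(values, lookback, horizon):
    """Slide a window over the series to build (input, target) samples."""
    xs, ys = [], []
    for start in range(len(values) - lookback - horizon + 1):
        xs.append(values[start:start + lookback])
        ys.append(values[start + lookback:start + lookback + horizon])
    return xs, ys


rows = [(0, 1.0), (1, None), (1, None), (2, 3.0), (3, 4.0), (4, 5.0)]
rows = deduplicate(rows)                    # the repeated (1, None) is dropped
values = impute_last([v for _, v in rows])  # [1.0, 1.0, 3.0, 4.0, 5.0]
mean, std = fit_scaler(values)
scaled = scale(values, mean, std)
x, y = roll(scaled, lookback=3, horizon=1)  # 2 samples: 3 inputs -> 1 target
```

Fitting the scaler on the training split only, then reusing its mean and standard deviation for validation and test data, keeps test statistics from leaking into training; `unscale` maps forecasts back to the original units.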

The dataset used is the Elevator Predictive Maintenance Dataset, which consists of time-series data from Internet of Things (IoT) sensors sampled at 4 Hz during high-peak and evening elevator use. The data comes from sensors such as an electromechanical door ball-bearing sensor and sensors measuring humidity and vibration intensity.
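At 4 Hz, consecutive readings are 0.25 s apart. The sketch below uses hypothetical readings: it takes the absolute value of each vibration sample and averages the results into 1-second buckets, one simple form of resampling. The interval and the mean aggregation are assumptions for illustration, not settings taken from the use case.

```python
# What 4 Hz sampling means, plus a simple mean-resampling step.
# ASSUMPTION: the 1-second interval, the mean aggregation, and the readings
# below are illustrative; the article does not state this resampling rule.
from collections import defaultdict

SAMPLE_RATE_HZ = 4
PERIOD_S = 1 / SAMPLE_RATE_HZ  # 0.25 s between consecutive readings


def resample_mean(readings, interval_s=1.0):
    """Average (time_s, value) readings into buckets `interval_s` seconds wide.

    Returns a dict mapping each bucket's start time to the mean value.
    """
    buckets = defaultdict(list)
    for t, value in readings:
        buckets[int(t // interval_s)].append(value)
    return {k * interval_s: sum(v) / len(v) for k, v in sorted(buckets.items())}


# Eight hypothetical signed vibration readings: 2 seconds of data at 4 Hz.
raw = [0.5, -0.7, 0.6, -0.4, 1.0, -1.2, 0.8, -1.0]
readings = [(i * PERIOD_S, abs(v)) for i, v in enumerate(raw)]  # absolute vibration
per_second = resample_mean(readings)
print(per_second)  # approximately {0.0: 0.55, 1.0: 1.0}
```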

TCNForecaster: Forecasting

The processed data is fed into TCNForecaster for training and prediction. When initializing TCNForecaster, users can specify the length of the historical input window, the length of the predicted output, the number of input variables to observe, and the number of variables to predict. TCNForecaster is based on a temporal convolutional network (TCN), a neural network that processes sequences with convolutional layers rather than a recurrent architecture. These layers use causal convolutions, so the prediction for a given timestep depends only on information from that timestep or earlier. The output is the absolute value of the vibration intensity at the next timestep.
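The causal property can be shown with a single-channel, dilated 1-D convolution in plain Python. This sketches the general technique under stated assumptions (one channel, no bias, zero left-padding) and is not TCNForecaster's implementation. The `receptive_field` helper shows why TCNs stack layers with growing dilation: the number of past timesteps the output can see grows quickly.

```python
# Minimal causal 1-D convolution, the building block of a TCN.
# ASSUMPTION: single channel, no bias, zero left-padding; this illustrates
# the technique and is not the TCNForecaster implementation.


def causal_conv1d(x, kernel, dilation=1):
    """y[t] = sum_k kernel[k] * x[t - k*dilation], treating x[j] = 0 for j < 0.

    Only indices <= t are read, so y[t] never depends on future inputs.
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = t - k * dilation
            if j >= 0:  # implicit zero padding on the left
                acc += w * x[j]
        y.append(acc)
    return y


def receptive_field(kernel_size, dilations):
    """Timesteps (including t) visible to a stack of causal conv layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = causal_conv1d(x, kernel=[0.5, 0.5])  # average of x[t] and x[t-1]
print(y)  # [0.5, 1.5, 2.5, 3.5, 4.5]
print(receptive_field(kernel_size=3, dilations=[1, 2, 4, 8]))  # 31
```

Changing `x[4]` would leave `y[0]` through `y[3]` unchanged, which is the causal guarantee described above.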

ThresholdDetector: Detecting

The predicted data and, optionally, the real data are passed to ThresholdDetector to generate anomaly time points. These time points flag potential failures in advance so that they can be addressed before asset operation is lost. Two types of anomalies can be detected:

- Pattern anomaly, which compares the real data with the predicted data

- Trend anomaly, which forecasts an anomaly from the predicted data alone
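The two anomaly types can be illustrated with simple rules in plain Python. The rules are assumptions: a pattern anomaly is taken to be a real value that differs from its forecast by more than a distance threshold, and a trend anomaly a forecast that falls outside a (low, high) range. This is a sketch, not ThresholdDetector's implementation.

```python
# Illustrative rules for the two anomaly types. ASSUMPTIONS: a pattern
# anomaly is a point where |real - predicted| exceeds a distance threshold,
# and a trend anomaly is a predicted point outside a (low, high) range.
# This is not a reimplementation of Chronos's ThresholdDetector.


def pattern_anomalies(real, predicted, max_distance):
    """Indices where the real value strays too far from the forecast."""
    return [i for i, (r, p) in enumerate(zip(real, predicted))
            if abs(r - p) > max_distance]


def trend_anomalies(predicted, low, high):
    """Indices where the forecast itself leaves the allowed range."""
    return [i for i, p in enumerate(predicted) if p < low or p > high]


predicted = [1.0, 1.2, 4.5, 1.1, 0.9]  # hypothetical forecasts
real = [1.0, 3.0, 4.4, 1.0, 0.8]  # hypothetical measurements

pattern = pattern_anomalies(real, predicted, max_distance=1.0)  # [1]
trend = trend_anomalies(predicted, low=0.0, high=3.0)  # [2]
combined = sorted(set(pattern) | set(trend))  # union of both lists: [1, 2]
```

Only `trend_anomalies` works without real data, so with forecasts alone the pattern list would be empty.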

The threshold can be set by the user or estimated from the training data according to an expected anomaly ratio and the statistical distribution of the data.
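One simple way to estimate a threshold from an anomaly ratio is to take an empirical quantile of the training errors, so that roughly that fraction of points lies above it. The function below is a hypothetical sketch of that idea with made-up errors; Chronos may use a different estimation rule.

```python
# One simple way to derive a threshold from an expected anomaly ratio.
# ASSUMPTION: the threshold is chosen so that roughly `ratio` of the training
# errors lie above it (an empirical quantile); Chronos's rule may differ.


def threshold_from_ratio(train_errors, ratio):
    """Return a threshold exceeded by about `ratio` of the absolute errors."""
    if len(train_errors) < 2 or not 0 < ratio < 1:
        raise ValueError("need at least 2 errors and 0 < ratio < 1")
    ordered = sorted(abs(e) for e in train_errors)
    # Number of points to treat as anomalous; keep at least one normal point.
    n_anomalies = min(len(ordered) - 1, max(1, round(len(ordered) * ratio)))
    # With no ties, exactly n_anomalies errors are strictly above this value.
    return ordered[len(ordered) - n_anomalies - 1]


# Hypothetical absolute forecast errors on training data.
errors = [0.1, 0.2, 0.15, 0.05, 0.3, 0.12, 0.08, 0.25, 0.18, 2.0]
threshold = threshold_from_ratio(errors, ratio=0.1)  # 0.3
flagged = [i for i, e in enumerate(errors) if e > threshold]  # [9]
```

A larger ratio lowers the threshold and flags more points, which trades missed failures against false alarms.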

Performance and Results

The accompanying Jupyter* Notebook walks through the steps to perform predictive assets maintenance on the elevator dataset. Training time for TCNForecaster varies with your hardware. The results shown in Figure 2 were produced on a single socket of an Intel® Xeon® Platinum 8380 CPU system. For full details, see the Product and Performance Information at the end of this article.

Epoch 4: 100%|███████████████████████████████████████████████████████████████████████| 1814/1814 [01:39<00:00, 18.32it/s, loss=0.311]

Training completed

MSE is 25.46

The index of anomalies in test dataset only according to predict data is:

pattern anomaly index: []

trend anomaly index: [3199, 3159, 3079]

anomaly index: [3079, 3159, 3199]

The index of anomalies in test dataset according to true data and predicted data is:

pattern anomaly index: [279, 679, 1079, 1479, 1879, 2279, 2319, 2679, 2719, 2739, 2751, 2783, 3079, 3119, 3139, 3151, 3183, 3479, 3519, 3551, 3879, 3919, 4279, 4679, 5079, 5479, 5879, 6279]

trend anomaly index: [2959, 3599, 2839, 2719, 3359, 2599, 3239, 3119, 2999, 3639, 2879, 3519, 2759, 3399, 2639, 3279, 3159, 2519, 3039, 3199, 2919, 3559, 2799, 3439, 3319, 2559]

anomaly index: [3079, 6279, 5879, 2319, 2959, 3599, 3479, 279, 2839, 2719, 3359, 679, 3879, 2599, 3239, 3119, 2739, 1079, 4279, 2999, 3639, 3319, 3519, 2751, 2879, 3139, 1479, 4679, 2759, 3399, 2559, 3151, 3919, 2639, 3279, 1879, 5079, 3159, 2519, 2783, 3551, 3039, 2279, 5479, 2919, 3559, 3183, 2799, 3439, 2679, 3199]

Figure 2. Raw output of training and anomaly indices

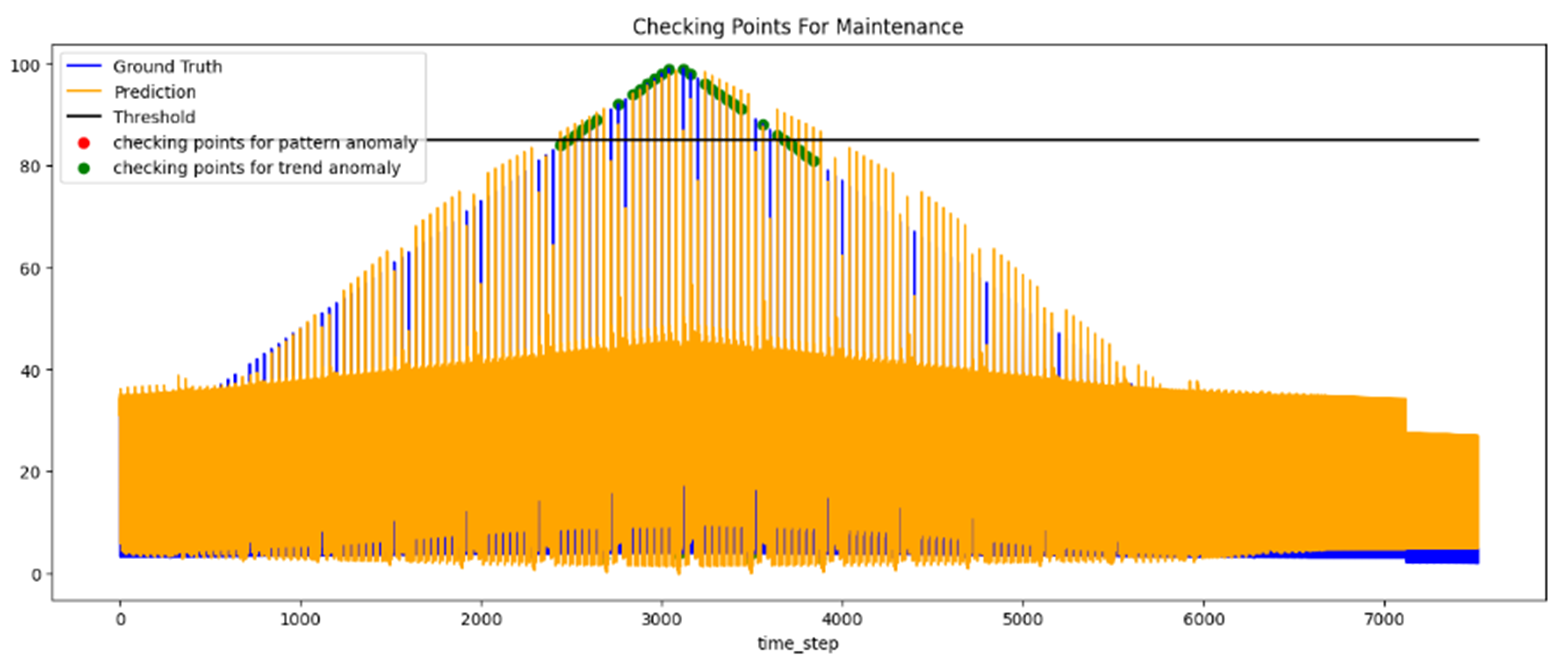

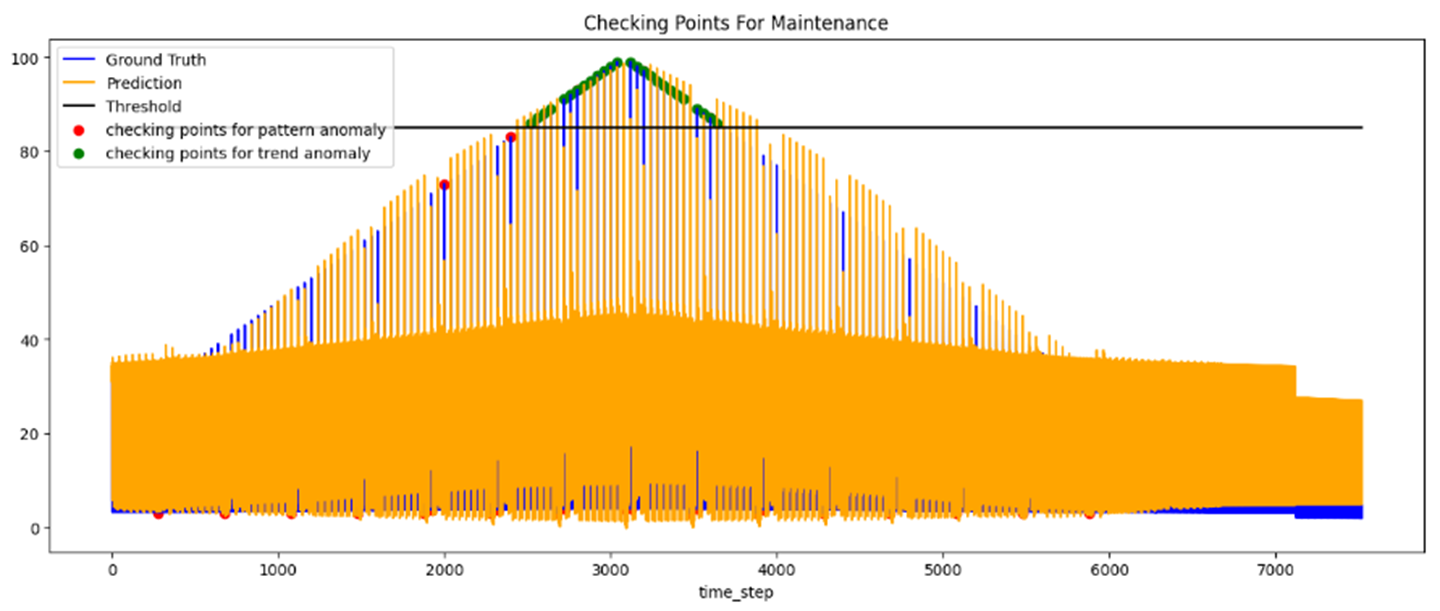

Training took less than two minutes and produced a mean squared error (MSE) of 25.46. There is no target value for MSE, but an MSE of 0 means the model fits the data points exactly, so the lower the number, the better. Because MSE is expressed in squared units of the forecast quantity, its magnitude depends on the scale of the data, so it is most meaningful when comparing models on the same dataset. The printed anomaly indices identify the data points flagged as anomalies, which warrant closer attention; each "anomaly index" list is the union of the corresponding pattern and trend anomaly indices. The Jupyter Notebook also visualizes the data, as shown in Figures 3 and 4. The green data points should be checked for trend anomalies, and the red data points should be checked for pattern anomalies.
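A short sketch of how MSE is computed, using made-up values. The last call rescales the same data by 10 to show the effect on the MSE.

```python
# Mean squared error: MSE = (1/n) * sum_i (y_true[i] - y_pred[i]) ** 2.
# The inputs below are made up for illustration.


def mean_squared_error(y_true, y_pred):
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


perfect = mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])  # 0.0
off = mean_squared_error([1.0, 2.0, 3.0], [2.0, 2.0, 5.0])  # (1 + 0 + 4) / 3
# Multiplying the data by 10 multiplies the MSE by 100 (squared units).
off_scaled = mean_squared_error([10.0, 20.0, 30.0], [20.0, 20.0, 50.0])
```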

Figure 3. Trend anomaly using only predicted data to forecast anomalies

Figure 4. Pattern anomaly using real data and predicted data to forecast anomalies

Conclusion

The Predictive Assets Maintenance reference use case can shorten the time it takes to create such a solution by providing the entire pipeline and the code to run it. The BigDL-Chronos toolkit supplies the functionality and is optimized for performance on Intel hardware. To customize this use case, change the path to the dataset and the threshold at which anomalies are flagged. To achieve satisfactory forecasting performance on a custom dataset, users may need to select TSDataset processing techniques suited to the characteristics of their data and tune the parameters of TCNForecaster.

If you have any questions, raise them through GitHub* issues, where the Predictive Assets Maintenance team tracks both bugs and enhancement requests. You can also provide your feedback in this survey.

Product and Performance Information

System Configuration

The results shown were obtained on a single socket of a machine equipped with an Intel Xeon Platinum 8380 CPU at 2.30 GHz and 512 GB of memory. More detailed configuration information is listed in the following tables.

Table 1. Hardware Configuration

| Name | Specification |
|---|---|
| CPU Model | Intel® Xeon® Platinum 8380 CPU @ 2.30GHz |
| CPU(s) | 160 |
| Core(s) per socket | 40 |
| Memory Size | 512 GB |

Table 2. Software Configuration

| Package | Version |
|---|---|
| OS | Ubuntu 20.04.3 LTS |
| python | 3.9 |
| bigdl-chronos | 2.4.0b20230522 |
| torch | 1.13.1 |
| pytorch-lightning | 1.6.4 |
| intel-extension-for-pytorch | 1.13.100 |
| pandas | 1.3.5 |
| numpy | 1.26.2 |
| matplotlib | 3.7.1 |
| notebook | 6.4.12 |