Note: This article represents work completed by Intel Software Innovator, Silviu-Tudor Serban

Introduction to Intel® Xe Graphics Architecture

Intel® Xe graphics is a novel GPU architecture consisting of four different microarchitectures: Intel® Processor Graphics Xᵉ-LP for integrated and entry-level discrete graphics, Intel® Processor Graphics Xᵉ-HP and Intel® Processor Graphics Xᵉ-HPG for enthusiast and datacenter parts, and Intel® Processor Graphics Xᵉ-HPC for high performance computing clusters.

In this article we focus on the Intel® Processor Graphics Xᵉ-LP microarchitecture and its compute performance along with potential for powering AI workloads. The Xᵉ architecture is uniquely positioned to leverage Intel® Deep Link technology, where users can simultaneously unleash the compute performance of all system silicon on complex AI workloads.

Our Xᵉ-LP system is a Dell XPS* 13 laptop, powered by an Intel® Core™ i7-1165G7 CPU and Intel® Iris® Xe integrated graphics.

The Intel Xᵉ-LP iGPU lives on the same chip with the processor and utilizes a portion of the system’s memory, rather than using a dedicated DDR chip.

In terms of performance, the Xᵉ-LP iGPU is very capable for compute scenarios and its 96 Execution Units and 1.30Ghz max running frequency translate to outstanding AI inference capabilities for its size and wattage.

Intel® Xe Powered AI Workloads in Windows* Environments

Microsoft DirectML* and Intel® Distribution of OpenVINO™ toolkit are powerful platforms which are turbocharging AI development by lowering the barrier of entry to Machine Learning. We will explore Intel Xᵉ architecture’s potential for multi-platform AI deployment and its performance running Microsoft DirectML and OpenVINO™ workloads.

To make things more interesting, we will run our assessment on three very different systems:

- The Dell XPS* 13 laptop powered by a 4-core Intel i7-1165G7 and Intel® Iris® Xe integrated graphics

- An HP workstation powered by a 10-core Intel® Xeon®-4114 and Quadro* P1000 discrete GPU

- A custom high-end PC powered by an 8-core Intel i7-10700K and an RTX* 3070 discrete GPU

Microsoft DirectML

DirectML is a high-performance, hardware-accelerated DirectX 12 library for machine learning. DirectML provides GPU acceleration for common machine learning tasks across a broad range of supported hardware and drivers, including all DirectX 12-capable GPUs from vendors such as AMD, Intel, NVIDIA, and Qualcomm.

When used standalone, the DirectML API is a low-level DirectX 12 library and is suitable for high-performance, low-latency applications such as frameworks, games, and other real-time applications. The seamless interoperability of DirectML with Direct3D 12 as well as its low overhead and conformance across hardware makes DirectML ideal for accelerating machine learning when both high performance is desired, and the reliability and predictability of results across hardware is critical.

DirectML efficiently executes the individual layers of your inference model on the GPU (or on AI-acceleration cores, if present). Each layer is an operator, and DirectML provides a library of low-level, hardware-accelerated machine learning primitive operators. While DirectML is in its early stages compared to the more mature CUDA, it provides several advantages that make it an attractive option for many AI workloads.

DirectML Environment Setup

A really nice feature of DirectML is that it provides support for TensorFlow*, one of the most popular end-to-end open source platforms for machine learning, via theTensorFlow-DirectML project.

TensorFlow requires Python, which leads us to installing Anaconda as a first step. Anaconda is one of the best tools for setting up and managing Python environments on Windows. It’s also free and open source.

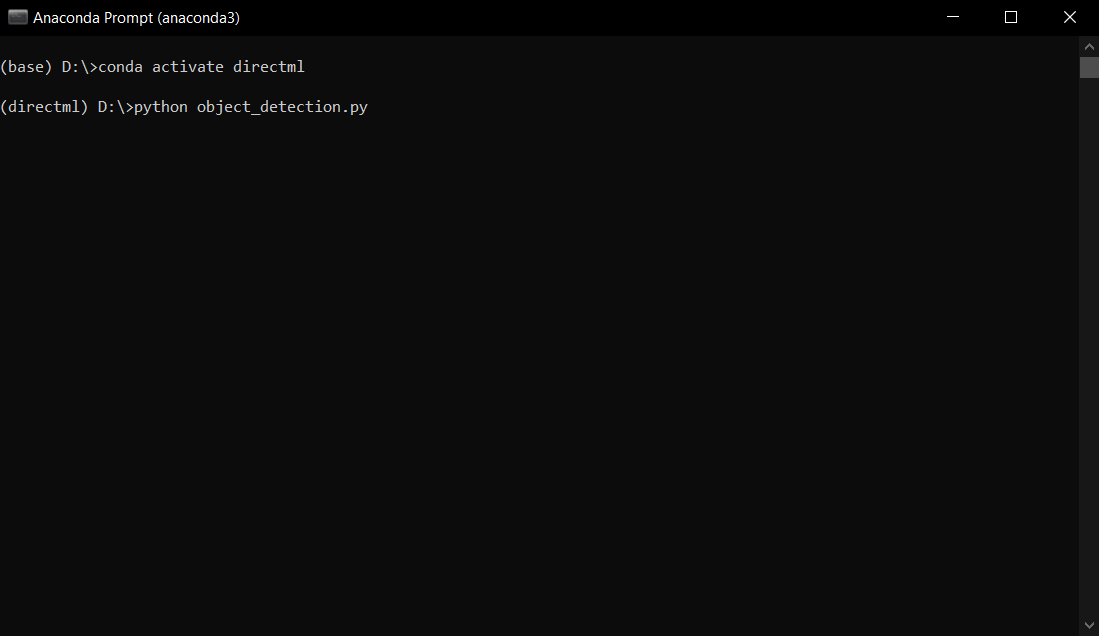

Assuming Anaconda is successfully installed, we can fire up the Anaconda Command Prompt and create a development environment with 3 commands:

conda create --name directml python=3.7

conda activate directml

pip install tensorflow-directml

In order to verify everything is working well, we can fire up a Python session and see if TensorFlow DirectML is running correctly:

import tensorflow.compat.v1 as tf

tf.enable_eager_execution(tf.ConfigProto(log_device_placement=True))

print(tf.add([1.0, 2.0], [3.0, 4.0]))

We should see a message similar to the following if there were no errors:

2021-03-25 11:27:18.235973: I tensorflow/core/common_runtime/dml/dml_device_factory.cc:45] DirectML device enumeration: found 1 compatible adapters.

This means DirectML and Tensorflow are up and running on our system and all we need to do to run our detection scripts in the future is to launch an Anaconda Prompt and activate the DirectML environment.

DirectML Xe Graphics Performance

We’ve selected a custom trained Resnet101 model from our production pipeline for comparing inference performance using DirectML. The model is trained on large images and running inference on it is sufficiently resource intensive to challenge the three test systems.

Our primary goal regarding the Xᵉ-LP graphics system is to see how well suited it is for running inference in a production setup and to see how well Xᵉ-LP stacks up against the discrete GPUs from our test systems.

As we can see in Table 1 below, the Xᵉ-LP system packs quite a punch. Remarkably the total TDP of the i7-1165G7, which includes the iGPU, is only 28W.

| Hardware configuration | Inference Platform | Inference Device | Average FPS (Higher is Better) |

Average CPU Load (Lower is better) |

System Type |

|---|---|---|---|---|---|

| i7-10700K | DirectML | CPU | 0.7 | max-content | Desktop |

| i7-1165G7 | DirectML | CPU | 0.4 | max-content | Ultrabook (AC power) |

| i7-1165G7 w/ Xe-LP iGPU | DirectML | GPU | 0.6 | 20% | Ultrabook (AC power) |

| Xeon-4114 w/ Quadro P1000 | DirectML | GPU | 0.6 | 10% | Desktop |

| i7-10700K w/ RTX 3070 | DirectML | GPU | 2.2 | 10% | Desktop |

By further analyzing the results table, we can extract a set of interesting conclusions:

- Running inference explicitly on CPU produces good results in terms of FPS, but it comes at a great cost regarding CPU load, which makes it a less than ideal option when critical software needs to run on the same machine.

- While the CPU load looks to be a bit higher on the Xe-LP system compared to the others, this is most likely a consequence of a less powerful mobile CPU compared to desktop CPUs (4 core i7-1165G7 vs 8 core i7-10700K vs 10 core Xeon-4114)

- The DirectML inference performance of Xᵉ-LP is on par with the dedicated Quadro P1000 workstation dedicated graphics card

- While DirectML inference FPS on the RTX 3070 is roughly 3.5x faster than Xᵉ-LP, the RTX 3070 can draw up to 300W in intensive loads and comes at a considerably higher price tag.

Intel® Distribution of OpenVINO™ toolkit

Intel OpenVINO is a platform for computer vision inference and deep neural network optimization which focuses on high-performance AI deployment from Edge to Cloud and it works on Linux, Windows and MacOS.

It provides optimized calls for OpenCV and OpenVX and a common API for heterogeneous inference execution across a wide range of computer vision accelerators- CPU, GPU, VPU and FPGA.

A complete set of guides for getting up and running with OpenVINO is available online.

Furthermore, an open model zoo containing pre-trained models, demos and a downloader tool for public models is provided with the distro as well as a separate repository.

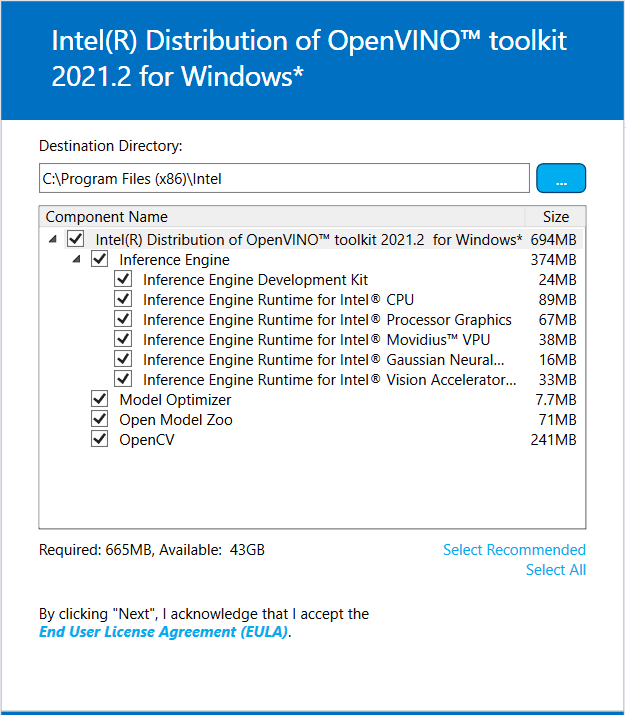

OpenVINO Environment Setup

Previously, we performed tests using our custom trained Resnet101 model on DirectML, so it makes sense to go through the OpenVINO Model Optimizer conversion process by using a very similar TensorFlow Resnet101 model, which is publicly available and pre-trained on the COCO dataset.

Model Optimizer is a python command-line tool built to facilitate the transition between the training and deployment environment, by taking an existing trained model and converting it to the OpenVINO intermediate representation (ir).

Here are the steps for completing the ir conversion process:

Step 1. Retrieve the TensorFlow model from the modelzoo repository. There are plenty of available models ready for out-of-the-box inference, however for the scope of this article we choose "faster_rcnn_resnet101_coco"

Step 2. Extract the "faster_rcnn_resnet101_coco" archive to a location of your choice. For simplicity we chose the D: partition.

The files of primary interest are "interested frozen_inference_graph.pb" and "pipeline.config", which will be used with the model python script

Step 3. We need to run 2 OpenVINO setup scripts to make sure all prerequisites are installed.

We open a Command Prompt and run the following commands:

> cd C:\Program Files (x86)\Intel\openvino_2021\bin

> setupvars.bat

> cd C:\Program Files (x86)\Intel\openvino_2021\deployment_tools\model_optimizer\install_prerequisites

> install_prerequisites.bat

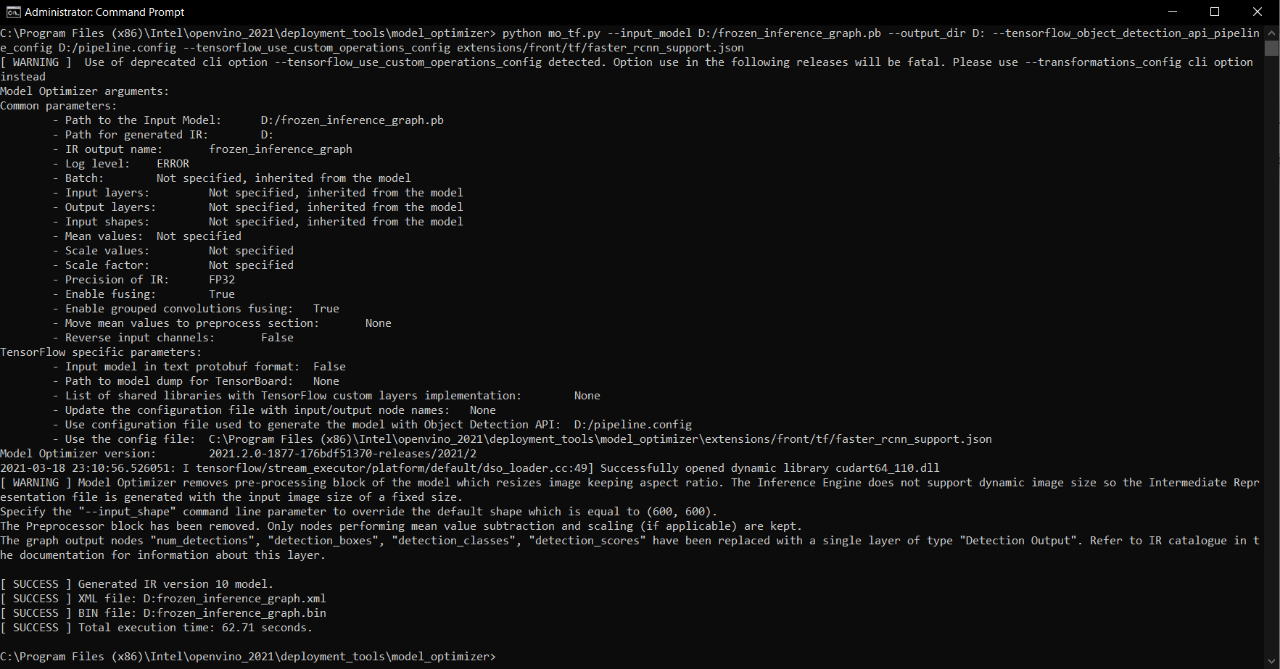

Step 4. Now we are ready to run the Model Optimizer script.

In the same Command Prompt we run:

> cd C:\Program Files (x86)\Intel\openvino_2021\deployment_tools\model_optimizer

> python mo_tf.py --input_model D:/frozen_inference_graph.pb --output_dir D: --tensorflow_object_detection_api_pipeline_config D:/pipeline.config --tensorflow_use_custom_operations_config extensions/front/tf/faster_rcnn_support.json

If the script runs successfully, the output will resemble this:

The result of this script conversion process is a set of 3 files that can be consumed by the OpenVINO inference engine: "frozen_inference_graph.xml", "frozen_inference_graph.bin", and "frozen_inference_graph.mapping".

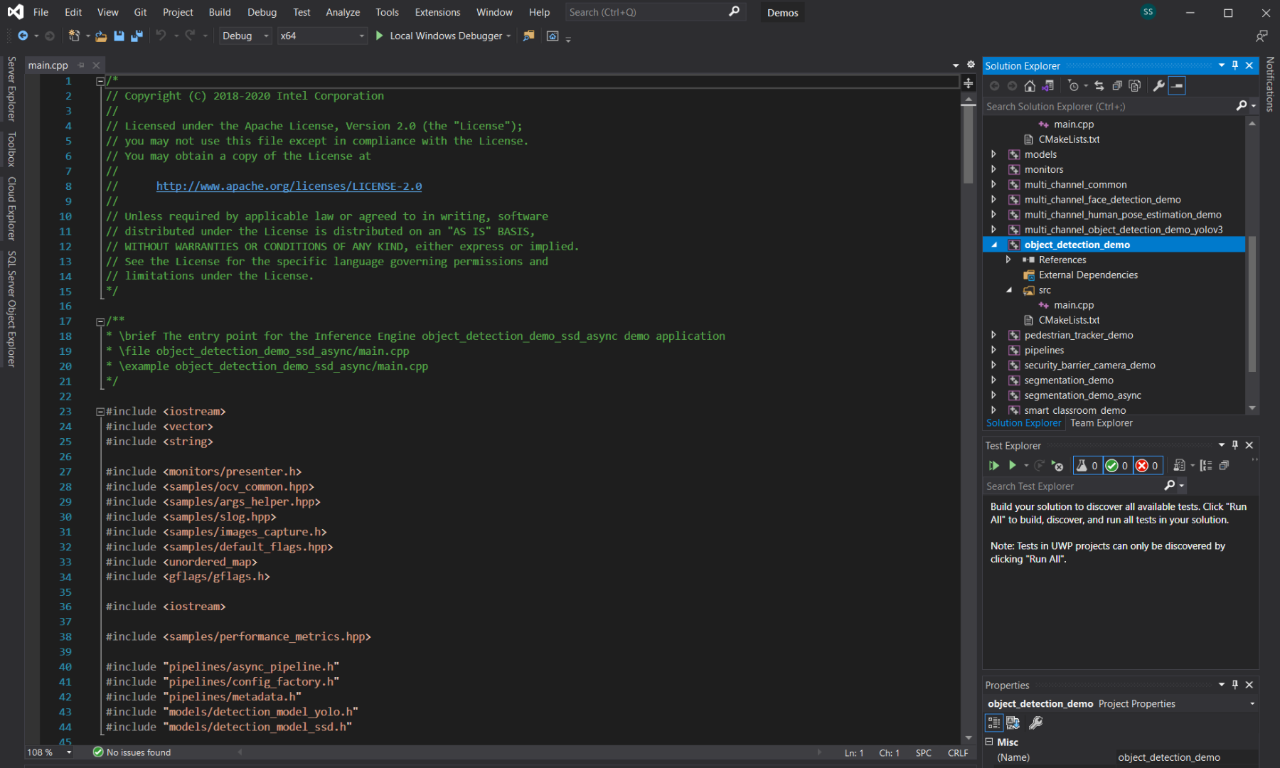

Step 5. Download and install Visual Studio 2019

Step 6. Next, open a command prompt and run the following commands:

> cd C:\Program Files (x86)\Intel\openvino_2021\inference_engine\samples\cpp

> build_samples_msvc.bat

A Visual Studio solution named Demos.sln should now be available in the user home folder under \Documents\Intel\OpenVINO\omz_demos_build

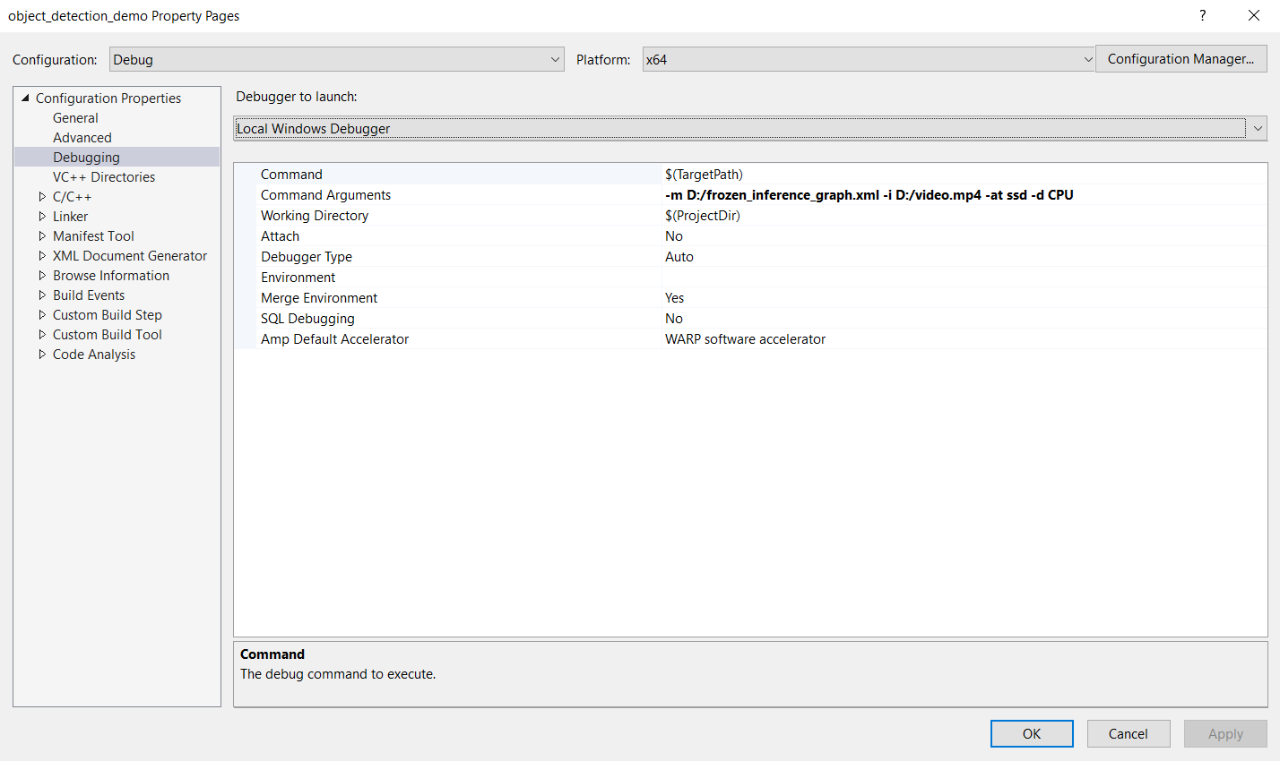

Step 7. Launch the generated Visual Studio solution, and then select the "object_detection_demo" project and set it as Startup Project:

Step 8. Prepare a video file to run inference on and save it in D: directory as video.mp4.

Step 9. Go back to Visual Studio and set the Command Arguments similar to this:

Note: A complete list of command line arguments for running the object detection demo is available online.

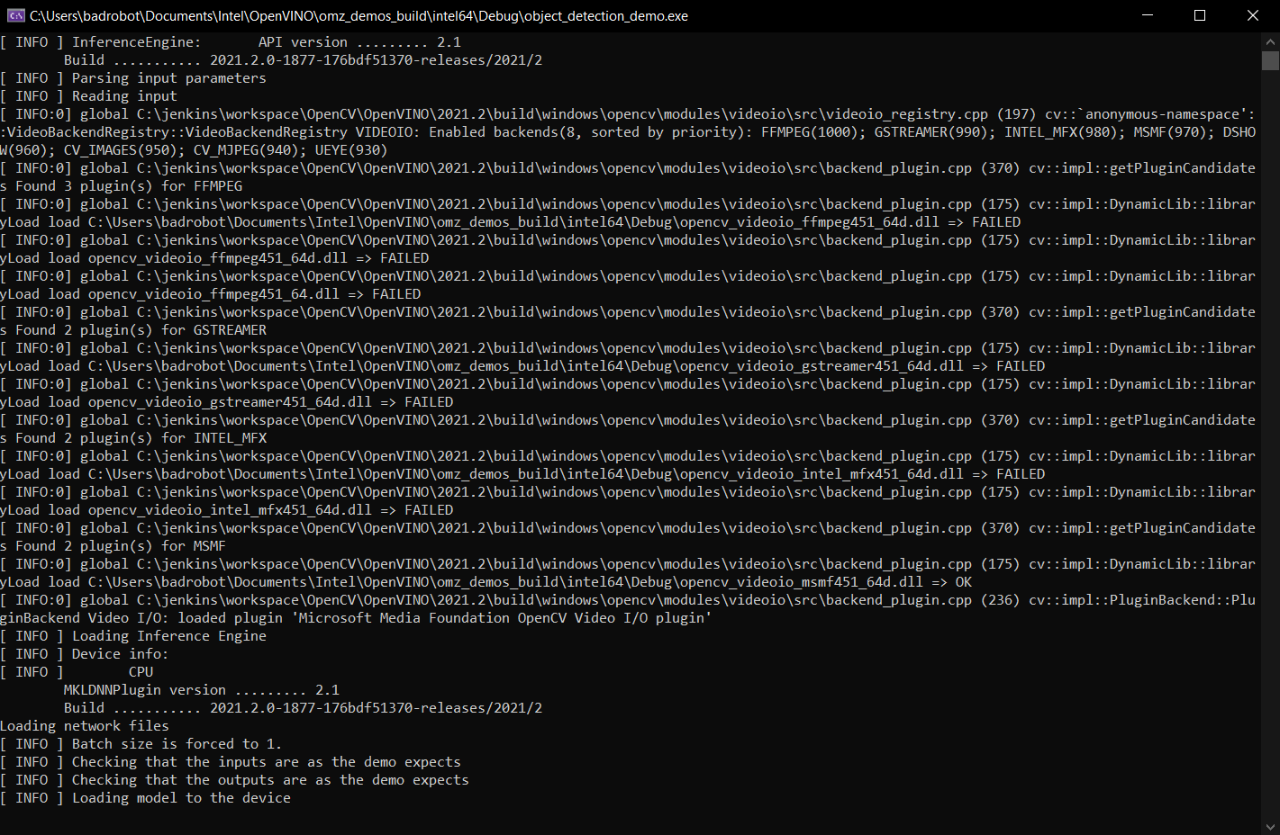

Step 10. Run the Local Windows Debugger in order to observe the models as they are running inference.

The OpenVINO environment is now ready for running inference.

OpenVINO Xe performance

For consistency, we evaluate the OpenVINO optimized version of the custom Resnet101 model which was used previously. This also facilitates a clear comparison between OpenVINO’s inference engine performance vs. that of DirectML.

The results in Table 2 reveal that running on OpenVINO provides significant detection speed benefits, with the single caveat of not being able to run OpenVINO on the Quadro P1000 and RTX 3070.

That being said, we gain a very interesting set of insights:

- Running the Intel OpenVINO converted model, on the same hardware, provides up to 3x FPS performance compared to the same hardware running DirectML, which is a significant boost.

- The inference FPS performance of Xe on OpenVINO is comparable to a RTX 3070 with DirectML and more than 3x faster than a P1000 Quadro dedicated GPU with DirectML.

| Hardware configuration | Inference Platform | Inference Device | Average FPS (Higher is Better) |

Average CPU Load (Lower is Better) |

System Type |

|---|---|---|---|---|---|

| i7-10700K | DirectML | CPU | 0.7 | max-content | Desktop |

| i7-10700K | OpenVINO | CPU | 1.9 | 60% | Desktop |

| i7-1165G7 | DirectML | CPU | 0.4 | max-content | Ultrabook (AC Power) |

| i7-1165G7 | OpenVINO | CPU | 1.0 | 65% | Ultrabook (AC Power) |

| i7-1165G7 + Xe-LP | DirectML | GPU | 0.6 | 20% | Ultrabook (AC Power) |

| i7-1165G7 + Xe-LP | OpenVINO | GPU | 2.0 | 30% | Ultrabook (AC Power) |

| Xeon-4114 + Quadro P1000 | DirectML | GPU | 0.6 | 10% | Desktop |

| i7-10700K + RTX 3070 | DirectML | GPU | 2.2 | 10% | Desktop |

Conclusion

In this article, we’ve taken a close look at the outstanding capabilities of the Xᵉ-LP microarchitecture for AI workloads.

Given that Xᵉ-LP has a significantly smaller form factor compared to its sibling microarchitectures, we can only expect proportionally higher performance scaling up with upcoming Xᵉ-HP, Xᵉ-HPG and Xᵉ-HPC GPUs.

Furthermore, when Intel® Xe graphics technology is combined with the power of the OpenVINO inferencing engine, we achieve performance levels that to date, have only been witnessed on significantly more costly AI computing platforms.

We believe Intel® Xe graphics is a game changing technology that has the power to remove the traditional barriers of entry for AI and empower software developers and ML professionals to create new solutions that improve the lives of millions of people.

Elevate Your Graphics Skills

Ignite inspiration, share achievements, network with peers and get access to experts by becoming an Intel® Software Innovator. Game devs, media developers, and other creators can apply to be Intel® Software Innovators under the new Xᵉ Community track – opening a world of opportunity to craft amazing applications, games and experiences running on Xᵉ architecture.

To start connecting and sharing your projects, join the global developer community on Intel® DevMesh.