We’ve all been stuck in traffic congestion at some point in our daily commute or travels. While waiting for the bottleneck to end, we sometimes wish for a transporter to appear that could whisk our vehicle away and drop it into a traffic-free lane.

There is obviously no such transporter on our roads and expressways. But in the digital world, hotplug technology lets devices quickly enter and leave hardware and software systems.

What is Hotplug?

Hotplug is a set of standard software and hardware technologies whose components support one another and share compatible electrical characteristics. It spans the mainboard hardware, CPU, firmware, NIC, operating system kernel, USB, and devices. It lets you plug a device into a powered-on system and, when you are finished with the device, simply pull it out without shutting the system down. Neither plugging in nor pulling out disrupts the work and load of the system.

Hotplug technology does more than simply make it convenient to use devices. For a cloud computing-based web data center or a server cluster in a communication enterprise, it plays a wider and more important role. For example, hotplug technology can enable 24/7 service, dynamic deployment, disaster preparedness, and more. As Figure 1 shows, it can be used to help balance loads, reduce power consumption, handle hardware errors, and realize live migration.

Figure 1. Common uses of hotplug technology

Hotplug and Data Plane Development Kit—Working Together

To enable the Data Plane Development Kit (DPDK) hotplug feature for one of the four usages in Figure 1, follow these steps:

- Check the server’s product manual to see if the mainboard supports hotplug.

- Ask an IT operations staff member to verify that the installed OS supports hotplug technology (Linux* kernel versions 2.4.6 and later support it).

- Put in place a mechanism for communicating and processing hotplug events between user space and kernel space. The open source DPDK lets developers implement hotplug by calling one or two APIs, without having to deal with hardware driver and operating system details.

Why DPDK?

The DPDK is qualified to provide these services because it:

- Supports various buses, including OFED, IGB_UIO/VFIO PCI, Hyper-V/VMbus, and more.

- Provides several device class interfaces, including Ethdev, Crypto, Compress, and Event.

- Supports an advanced device syntax, such as bus=pci,id=BDF/class=eth,.../driver=virtio,... (18.11).

- Provides a blocklist/passlist configuration. A dynamic policy API for device management is currently under development.

- Provides corresponding APIs for device plug-in and plug-out (17.08).

- Provides a failsafe driver (17.08), a uevent monitor (18.05), and a hotplug handler (18.11).

Note: This article covers device hotplug (17.08). Memory hotplug (18.05) and multiprocess hotplug (18.11) are beyond its scope.

The DPDK's working threads are its data expressway and focus on data plane performance. In the extreme case where a device is unplugged, a working thread should let the main control thread take over. Hotplug support in the DPDK is both necessary and sufficient for dynamic device management.

DPDK Hotplug Framework

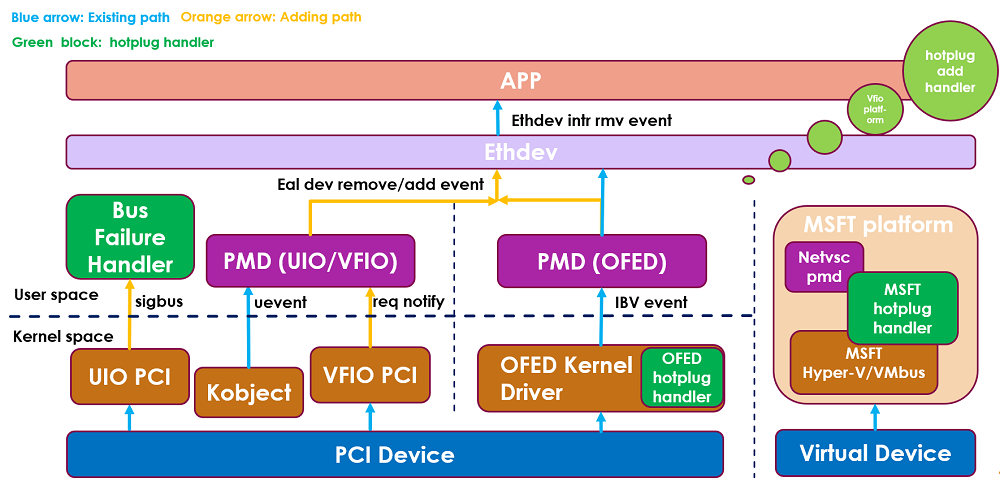

Figure 2 illustrates the current DPDK hotplug framework.

Figure 2. The current DPDK hotplug framework.

DPDK hotplug includes hot plug-in (add) and hot unplug (remove). The process of handling hotplug in DPDK is roughly as follows:

For hotplug add:

->Uevent(add)

->hotplug handle and RTE_DEV_EVENT_ADD notify

->application calls rte_eal_hotplug_add

->PMD probe ports

->PMD calls rte_eth_dev_probing_finish

->RTE_ETH_EVENT_NEW

->application gets new ports

For hotplug remove:

->Uevent(remove) or req notify(for vfio)

->hot-unplug handle and RTE_DEV_EVENT_REMOVE notify

->application calls rte_eal_hotplug_remove

->PMD calls rte_eth_dev_removing

->RTE_ETH_EVENT_DESTROY

->application calls rte_eth_dev_close

For more details on these processes, refer to the related open source code.

Hotplug Event Detection

Hotplug events cannot be delivered to user-space programs through the usual device driver interfaces, such as ioctl or read/write on a file descriptor. Instead, you monitor them through netlink communication and the uevent device model. Both are Linux-specific mechanisms, and uevent is not currently supported on FreeBSD.

The uevent actions are defined in the include/linux/kobject.h file (Linux kernel 4.17.0 is used as the example here). Of these actions, hotplug is specifically concerned with the ADD and REMOVE events.

In the lib/librte_eal/linuxapp/eal/eal_dev.c file of DPDK 18.11, socket fd is established to monitor events.
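As a rough illustration of this monitoring step, the following C sketch opens a netlink socket for kernel uevents and looks up fields such as ACTION in a received message. It is not the code from eal_dev.c. The helper names uevent_socket_open and uevent_get are hypothetical, and the sketch assumes that a uevent payload is a sequence of NUL-terminated KEY=value strings.

```c
#define _GNU_SOURCE
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>
#include <linux/netlink.h>

/* Open a netlink socket subscribed to kernel uevent broadcasts.
 * Returns the socket fd, or -1 on failure. (Hypothetical helper.) */
static int uevent_socket_open(void)
{
    struct sockaddr_nl addr;
    int fd = socket(PF_NETLINK, SOCK_RAW | SOCK_CLOEXEC, NETLINK_KOBJECT_UEVENT);

    if (fd < 0)
        return -1;
    memset(&addr, 0, sizeof(addr));
    addr.nl_family = AF_NETLINK;
    addr.nl_groups = 1; /* multicast group 1 carries kernel uevents */
    if (bind(fd, (struct sockaddr *)&addr, sizeof(addr)) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Look up "key=value" in a buffer of NUL-terminated strings.
 * Returns a pointer to the value, or NULL if the key is absent.
 * (Hypothetical helper; assumes the KEY=value payload layout.) */
static const char *uevent_get(const char *buf, size_t len, const char *key)
{
    size_t klen = strlen(key);
    size_t i = 0;

    while (i < len) {
        const char *field = buf + i;
        size_t flen = strnlen(field, len - i);

        if (flen == len - i)
            break; /* trailing field has no terminator; ignore it */
        if (flen > klen && field[klen] == '=' &&
            strncmp(field, key, klen) == 0)
            return field + klen + 1;
        i += flen + 1;
    }
    return NULL;
}
```

A caller would typically recv() a message from the socket into a buffer and then compare the result of uevent_get(buf, len, "ACTION") with "add" or "remove" to decide which path to take.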

In the drivers/vfio/pci/vfio_pci_private.h header file of the Linux kernel, the req_trigger member of the vfio_pci_device structure holds the eventfd context used for device requests.

The code path of eventfd for req notifier is in the drivers/bus/pci/linux/pci_vfio.c file of DPDK 18.11.

Hotplug Process Mechanism

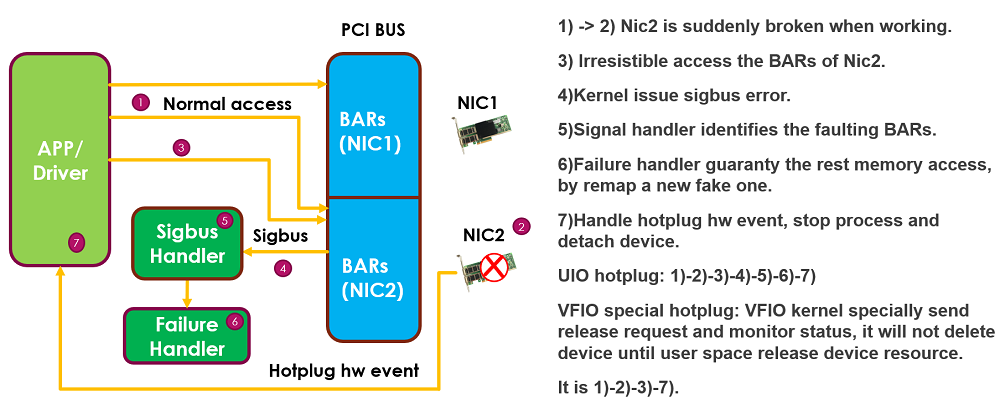

During a hot-unplug (remove) event, reads and writes to addresses of the device that were previously valid fail and raise a program exception. A hotplug add event does not cause such failures. The hot-unplug handler therefore involves two parts: the sigbus handler and the hot-unplug failure handler.

- Control path events are monitored and then processed in sequence. The data path, made up of per-core polling threads, communicates with devices through memory-mapped I/O (MMIO) and direct memory access (DMA), which is much faster than the control path. Because of this difference in speed, the data path can be unaware that a hotplug event is being processed in the control path, and it can continue to read and write illegally to devices that the kernel has already declared invalid. To solve this problem, use the sigbus handler to intercept and resolve these illegal accesses and prevent the program from exiting abnormally (see Figure 3).

- Since the Linux kernel handles hot-unplug differently in igb_uio pci and vfio pci, the DPDK hot-unplug handler must implement two different mechanisms. In vfio, when a hot-unplug occurs, the kernel first notifies user space through a special device request event so that user space can release its resources, and only then deletes the device. It is the opposite in igb_uio. That is, when a hot-unplug occurs, the kernel deletes the device directly and only afterward reports to user space through uevent.

Figure 3. Intercepting and handling illegal events

Hardware Event Handlers

Handlers must be registered for two kinds of hotplug hardware events (uevent and vfio req) and for one kind of software event (sigbus).

The uevent handler of igb_uio registers the RTE_INTR_HANDLE_DEV_EVENT event.

The req handler of vfio registers the RTE_INTR_HANDLE_VFIO_REQ event.

The sigbus handler is registered with the SA_SIGINFO flag. Here sigaction installs the user-defined sigbus_handler, which changes how the process behaves after it receives the signal.

In sigbus_handler, a generic sigbus error and the special sigbus error that results from a hot-unplug are handled differently. When sigbus_handler is triggered by a sigbus event, use the si_addr field of the siginfo_t structure to obtain the faulting memory address. Then check whether that address lies in the BAR space of a device. If it does, the hot-unplug handler of that device applies read-write protection to the memory. If the sigbus error is generic, the generic sigbus handler is restored and the process exits abnormally.
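The classification described above can be sketched in C as follows. This is a minimal illustration, not the DPDK implementation: the bar_region structure, the g_bars table, the g_unplug_fix hook, and the function names are all hypothetical, and the sketch assumes the application records each mapped BAR range when it maps the device.

```c
#define _GNU_SOURCE
#include <signal.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical record of one mapped BAR: start address and length. */
struct bar_region {
    void *addr;
    size_t len;
};

#define MAX_BARS 6
static struct bar_region g_bars[MAX_BARS]; /* filled in when BARs are mapped */
static size_t g_nb_bars;
static struct sigaction g_old_sigbus;      /* previous (generic) SIGBUS action */

/* Hypothetical hook that makes a BAR range accessible again; 0 on success. */
static int (*g_unplug_fix)(const struct bar_region *bar);

/* Return the index of the region that contains fault, or -1 if none does. */
static int addr_in_bars(const void *fault, const struct bar_region *bars,
                        size_t n)
{
    uintptr_t f = (uintptr_t)fault;

    for (size_t i = 0; i < n; i++) {
        uintptr_t start = (uintptr_t)bars[i].addr;

        if (f >= start && f - start < bars[i].len)
            return (int)i;
    }
    return -1;
}

static void sigbus_handler(int signo, siginfo_t *info, void *ctx)
{
    int idx = addr_in_bars(info->si_addr, g_bars, g_nb_bars);

    (void)ctx;
    /* Hot-unplug fault: repair the mapping, then retry the access. */
    if (idx >= 0 && g_unplug_fix != NULL && g_unplug_fix(&g_bars[idx]) == 0)
        return;
    /* Generic fault: restore the previous action and raise it again. */
    sigaction(signo, &g_old_sigbus, NULL);
    raise(signo);
}

/* Install sigbus_handler with SA_SIGINFO so that si_addr is available. */
static int sigbus_install(void)
{
    struct sigaction sa;

    memset(&sa, 0, sizeof(sa));
    sigemptyset(&sa.sa_mask);
    sa.sa_sigaction = sigbus_handler;
    sa.sa_flags = SA_SIGINFO;
    return sigaction(SIGBUS, &sa, &g_old_sigbus);
}
```

Returning from the handler makes the CPU retry the faulting access, so the hot-unplug branch only returns after the hook reports that the range is accessible again. Otherwise the same fault would recur indefinitely.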

Hot-unplug processing on the igb_uio PCI bus consists of the read-write protection of memory mentioned above. The BAR (MMIO or I/O port) space of the device is remapped to new, anonymous placeholder memory, so that subsequent reads and writes to the device no longer cause a sigbus error after the hot-unplug. This ensures that the control path completes smoothly and the device is released safely, and it avoids abnormal termination of the process.
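The remapping idea can be sketched with a single mmap call. This is a simplified illustration under stated assumptions, not the DPDK source: the function name remap_to_anonymous is hypothetical, and the sketch assumes that addr is page aligned and that [addr, addr + len) is exactly the range where the BAR was mapped.

```c
#define _GNU_SOURCE
#include <stddef.h>
#include <sys/mman.h>

/* Replace whatever is mapped at [addr, addr + len) with zero-filled
 * anonymous memory at the same virtual address. Later loads and stores
 * to that range hit ordinary memory instead of the vanished device.
 * Returns 0 on success, -1 on failure. (Hypothetical helper.) */
static int remap_to_anonymous(void *addr, size_t len)
{
    void *p = mmap(addr, len, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS | MAP_FIXED, -1, 0);

    return (p == addr) ? 0 : -1;
}
```

MAP_FIXED is what keeps the virtual address unchanged, so pointers already held by the data path stay valid. Data read from the placeholder memory is meaningless, which is acceptable only because the device is about to be released.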

The hot-unplug processing in vfio pci is quite different, and it requires monitoring of the request irq. When a hot-unplug occurs, the kernel sends a request asking user space to give up control of the device. After user space receives the notification, it first releases the device. The kernel then deletes the device once its polling detects that user space has released it. (Linux kernel versions greater than or equal to 4.0.0 support vfio req notify.)
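One way to picture the request notification is the sketch below, which creates an eventfd and asks the kernel to signal it for the device request irq through the VFIO_DEVICE_SET_IRQS ioctl. It is a hedged illustration rather than the code in pci_vfio.c: the function names are hypothetical, and vfio_dev_fd is assumed to be a VFIO device file descriptor that the application has already obtained.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>
#include <sys/eventfd.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/vfio.h>

/* Build the VFIO_DEVICE_SET_IRQS argument that attaches eventfd efd to
 * the device request irq. The caller frees the result. (Hypothetical.) */
static struct vfio_irq_set *req_irq_set_alloc(int efd)
{
    size_t len = sizeof(struct vfio_irq_set) + sizeof(int);
    struct vfio_irq_set *s = calloc(1, len);

    if (s == NULL)
        return NULL;
    s->argsz = (uint32_t)len;
    s->flags = VFIO_IRQ_SET_DATA_EVENTFD | VFIO_IRQ_SET_ACTION_TRIGGER;
    s->index = VFIO_PCI_REQ_IRQ_INDEX;
    s->start = 0;
    s->count = 1;
    memcpy(s->data, &efd, sizeof(efd)); /* payload: one eventfd */
    return s;
}

/* Register a request notifier on an already opened VFIO device fd.
 * Returns the eventfd to poll, or -1 on failure. (Hypothetical.) */
static int req_notifier_enable(int vfio_dev_fd)
{
    struct vfio_irq_set *s;
    int efd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);

    if (efd < 0)
        return -1;
    s = req_irq_set_alloc(efd);
    if (s == NULL || ioctl(vfio_dev_fd, VFIO_DEVICE_SET_IRQS, s) < 0) {
        free(s);
        close(efd);
        return -1;
    }
    free(s);
    return efd;
}
```

When the returned eventfd becomes readable, the kernel is asking user space to release the device. The application would then stop using the device and close it so that the kernel can complete the removal.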

For more details, see the open source code of the DPDK Hotplug Framework module at DPDK.org.

A Demo Using Testpmd

This simple demo shows how testpmd behaves in a QEMU/kernel-based virtual machine (KVM) environment when a NIC passed through from SR-IOV is hot plugged while testpmd is receiving and sending packets.

System Environment

Host kernel: Linux 4.17.0-041700rc1-generic

VM kernel: Linux ubuntu 4.10.0-28-generic

QEMU emulator: 2.5.0

DPDK: 18.11

NIC: Linux ixgbe* or i40e NIC or other (pci uio nic)

Host Steps

- Bind port 0 to vfio-pci:

modprobe vfio_pci

echo 1 > /sys/bus/pci/devices/0000:81:00.0/sriov_numvfs

./usertools/dpdk-devbind.py -b vfio-pci 81:10.0

- Start QEMU scripts:

taskset -c 12-21 qemu-system-x86_64 \

-enable-kvm -m 8192 -smp cores=10,sockets=1 -cpu host -name dpdk1-vm1 \

-monitor stdio \

-drive file=/home/vm/ubuntu-14.10.0.img \

-device vfio-pci,host=0000:81:10.0,id=dev1 \

-netdev tap,id=ipvm1,ifname=tap5,script=/etc/qemu-ifup -device rtl8139,netdev=ipvm1,id=net0,mac=00:00:00:00:00:01 \

-localtime -vnc :2

VM and QEMU Steps

Step 1. VM: Bind port 0 to vfio-pci:

./usertools/dpdk-devbind.py -b vfio-pci 00:03.0

Step 2. VM: Start testpmd and enable the hotplug feature:

./x86_64-native-linuxapp-gcc/app/testpmd -c f -n 4 -- -i --hot-plug

Step 3. VM: Set the forwarding mode to transmit only:

testpmd> set fwd txonly

Step 4. VM: Start packet forwarding:

testpmd> start

Step 5. QEMU: Remove device for unplug:

(qemu) device_del dev1

Step 6. QEMU: Add device for plug:

(qemu) device_add vfio-pci,host=0000:81:10.0,id=dev1

Step 7. VM: Bind port 0 to vfio-pci:

./usertools/dpdk-devbind.py -b vfio-pci 00:03.0

Step 8. VM: Stop packet forwarding:

testpmd> stop

Step 9. VM: Attach the re-added port:

testpmd> port attach 0000:00:03.0

Step 10. VM: Start all ports:

testpmd> port start all

Step 11. VM: Restart packet forwarding:

testpmd> start

As the demo shows, when the NIC is hot swapped while testpmd is receiving and sending packets, testpmd does not exit abnormally. Rather, it smoothly detaches or attaches the device, and then restarts traffic normally to receive and send packets.

Advanced Application Scenarios

Application scenarios of DPDK hotplug are not limited to those mentioned above. The various functional modules in the DPDK framework may be helpful in other advanced use cases and solutions.

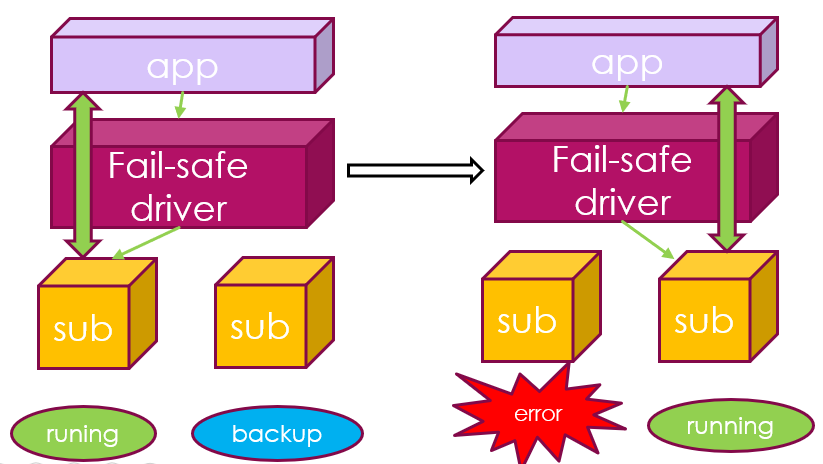

For example, you can recover network equipment through hotplug and the Fail-safe PMD to guarantee the uninterrupted operation of a data path in the process of hotplugging, as shown in Figure 4.

Figure 4. Recover network equipment through hotplug and the Fail-safe PMD

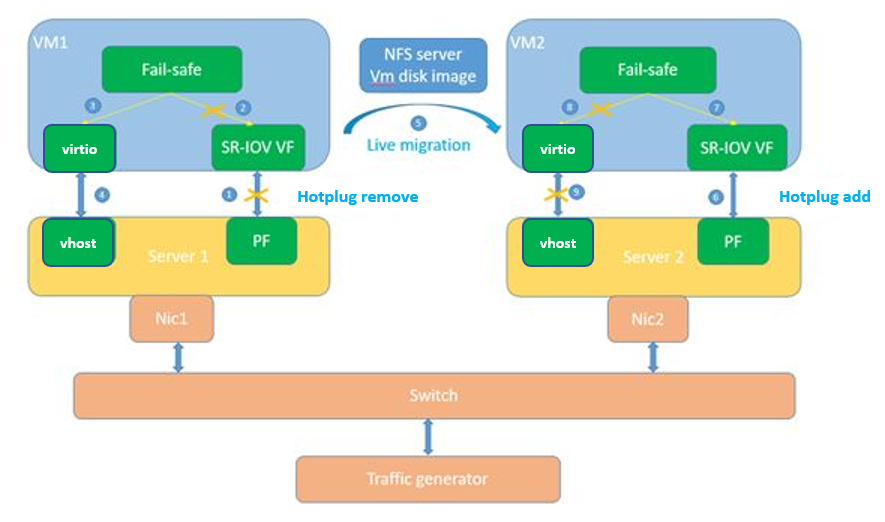

Furthermore, a workload running under software-defined networking and network function virtualization can realize SR-IOV-based VM live migration through hotplug + Fail-safe PMD and virtio + QEMU/KVM, as shown in Figure 5.

Figure 5. Realizing live migration through hotplug + Fail-safe PMD and virtio + QEMU/KVM

Looking Ahead

The hotplug dynamic device-management mechanism still needs some improvements. These include removal of a device that maps to several ports (the one-to-many situation), automatic binding of kernel drivers, and the performance contribution of the uio kernel driver and hotplug in live migration. Some of these problems will be resolved in future releases, and solutions to others will be explored in response to the needs of the workload or application, dynamic evolution of the kernel, and continuous evolution of the DPDK framework.

About the Author

Jeff Guo Jia is a network software engineer at Intel who is mainly engaged in the development of DPDK frameworks and PMD software.