Introduction

Deploying a containerized Intel® Distribution of OpenVINO™ toolkit solution as a Microsoft Azure* module can help manage your application, and Azure can even deploy multiple modules to the same device. This article will go over setting up an Intel® Distribution of OpenVINO™ toolkit module in Azure and explore the considerations for running multiple modules on CPU and GPU on the IEI Tank* AIoT Developer Kit.

The module will run the Benchmark Application Demo, which comes with the Intel® Distribution of OpenVINO™ toolkit. It will do inference on an image 100 times asynchronously using the AlexNet model.

As multiple modules will be deployed to the same machine, the Intel® Distribution of OpenVINO™ toolkit will be installed on the IEI Tank itself, and the containers will access it through bind mounts. This way each container does not need its own copy of the toolkit, which shortens build time and saves disk space.

Hardware

IEI Tank* AIoT Developer Kit with Ubuntu* 16.04 as the edge device

Set Up the IEI Tank* AIoT Developer Kit

Install the Intel® Distribution of OpenVINO™ toolkit on the IEI Tank following these instructions.

Build the samples following the instructions here.

Setting up Microsoft Azure*

If Azure is not already set up to deploy modules, follow the quick start guide to set up a standard tier IoT Hub, then register the IEI Tank as an IoT Edge device and connect it to the hub. In addition, create a registry to store the container images.

Docker*

The Intel® Distribution of OpenVINO™ toolkit containers will be built on the IEI Tank with Linux* OS (another Linux machine could be used) using Docker* and then pushed up to the Azure registry before being deployed back down to the IEI Tank.

Install Docker onto the IEI Tank.

The Dockerfile to build the container is below. It will install the Intel® Distribution of OpenVINO™ toolkit dependencies, including those for interfacing with the GPU.

Note that the GPU dependencies might need to be updated. Look in /opt/intel/openvino/install_dependencies for the latest deb files and update the Dockerfile if needed.

Code Block 1. Dockerfile to build the container

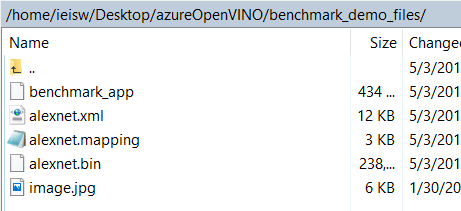

The ‘COPY . /’ instruction copies everything in the same directory as the Dockerfile into the Docker container. In this case, that directory should contain a folder called benchmark_demo_files. Inside it should be the compiled Benchmark Application sample, the image, and the converted AlexNet files from the Model Downloader.

To get the AlexNet model, use the model downloader that comes with the Intel® Distribution of OpenVINO™ toolkit.

Convert the model.
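The download and conversion steps might look roughly like the sketch below. It assumes a default install under /opt/intel/openvino with the Model Downloader and Model Optimizer in their usual deployment_tools locations. MODEL_DIR and IR_DIR are hypothetical working folders, and the helper function names are made up for illustration. The weights file is located with find because the downloader's sub-folder layout varies between releases.

```shell
#!/bin/bash
# Sketch only: paths assume a default toolkit install; adjust to your system.
OPENVINO_DIR="${OPENVINO_DIR:-/opt/intel/openvino}"
MODEL_DIR="${MODEL_DIR:-$HOME/openvino_models}"      # hypothetical download folder
IR_DIR="${IR_DIR:-$HOME/openvino_models/ir}"         # hypothetical output folder

# Download the public AlexNet Caffe model.
download_alexnet() {
    python3 "$OPENVINO_DIR/deployment_tools/tools/model_downloader/downloader.py" \
        --name alexnet -o "$MODEL_DIR"
}

# Convert the Caffe model to the toolkit's .xml/.bin format.
convert_alexnet() {
    # Search for the weights instead of assuming the downloader's folder layout.
    caffemodel=$(find "$MODEL_DIR" -name 'alexnet.caffemodel' | head -n 1)
    if [ -z "$caffemodel" ]; then
        echo "alexnet.caffemodel not found under $MODEL_DIR" >&2
        return 1
    fi
    python3 "$OPENVINO_DIR/deployment_tools/model_optimizer/mo.py" \
        --input_model "$caffemodel" --output_dir "$IR_DIR"
}
```

Running `download_alexnet && convert_alexnet` should leave alexnet.xml and alexnet.bin in IR_DIR, provided the Model Optimizer prerequisites are installed.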

Next build the benchmark_app.

Then copy the benchmark_app file and the model files to the project directory.
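As a rough sketch, the folder could be assembled as follows. The build output path is an assumption based on where the toolkit's sample build script usually places binaries. IR_DIR and IMAGE_FILE are hypothetical locations for the converted model and the test image, and assemble_demo_files is a helper name invented for this example.

```shell
#!/bin/bash
# Sketch only: every path below is an assumption; adjust to your system.
SAMPLES_BUILD="${SAMPLES_BUILD:-$HOME/inference_engine_samples_build/intel64/Release}"
IR_DIR="${IR_DIR:-$HOME/openvino_models/ir}"     # hypothetical: converted model
IMAGE_FILE="${IMAGE_FILE:-$HOME/input.jpg}"      # hypothetical: test image

# Collect the binary, the model files, and the image next to the Dockerfile.
assemble_demo_files() {
    dest="${1:-./benchmark_demo_files}"
    mkdir -p "$dest" &&
    cp "$SAMPLES_BUILD/benchmark_app" "$dest/" &&
    cp "$IR_DIR/alexnet.xml" "$IR_DIR/alexnet.bin" "$dest/" &&
    cp "$IMAGE_FILE" "$dest/"
}
```

Calling `assemble_demo_files` from the project directory creates benchmark_demo_files there. If the binary depends on shared libraries produced by the sample build, those may need to be copied as well.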

Figure 1: ‘benchmark_demo_files’ folder

Also in the same location is the script run_benchmark_demo.sh, which runs the benchmark application on the image with the optimized model file. To run it on the GPU, just change CPU to GPU.

Code Block 2: run_benchmark_demo.sh
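A minimal sketch of what such a script might contain is shown here. It assumes the toolkit is reachable inside the container at /opt/intel/openvino through the bind mount. The file names input.jpg and alexnet.xml are placeholders, and DEMO_DIR is a hypothetical variable. The -api async and -niter 100 options correspond to the 100 asynchronous iterations described in the introduction.

```shell
#!/bin/bash
# Sketch of run_benchmark_demo.sh; paths and file names are assumptions.
DEMO_DIR="${DEMO_DIR:-/benchmark_demo_files}"
SETUPVARS="${SETUPVARS:-/opt/intel/openvino/bin/setupvars.sh}"

# Load the toolkit environment from the bind-mounted host install, if present.
if [ -f "$SETUPVARS" ]; then
    . "$SETUPVARS"
fi

# Run inference on one image 100 times asynchronously on the CPU.
run_benchmark() {
    "$DEMO_DIR/benchmark_app" \
        -i "$DEMO_DIR/input.jpg" \
        -m "$DEMO_DIR/alexnet.xml" \
        -d CPU \
        -api async \
        -niter 100
}

if [ -x "$DEMO_DIR/benchmark_app" ]; then
    run_benchmark
else
    echo "benchmark_app not found in $DEMO_DIR" >&2
fi
```

Keeping the device as a literal `-d CPU` makes the GPU variant a one-word change.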

The Dockerfile, the benchmark_demo_files folder, and run_benchmark_demo.sh should all be in the same location. Now the container is ready to be built.

Build and push the Docker container to Azure:

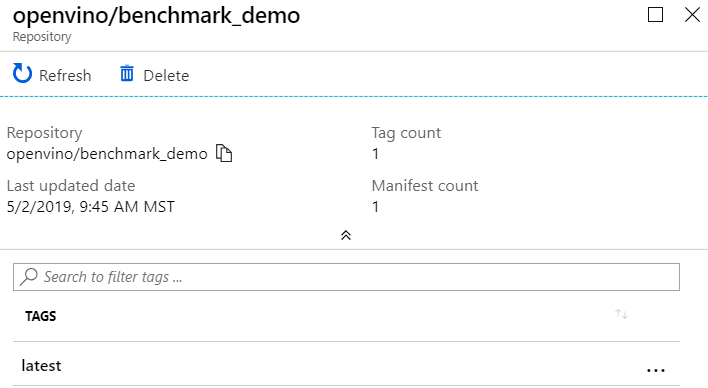
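In text form, the build and push steps could be sketched as below. The registry address is passed as an argument, the repository path openvino/benchmark_demo follows the naming used later in this article, and build_and_push is a helper name invented for this example. Docker prompts for the registry user name and password at the login step.

```shell
#!/bin/bash
# Sketch only: run from the directory that contains the Dockerfile.
# $1: registry address, e.g. <registryname>.azurecr.io
# $2: optional repository path (defaults to openvino/benchmark_demo)
build_and_push() {
    registry="$1"
    image="$registry/${2:-openvino/benchmark_demo}"
    sudo docker build -t "$image" . &&
    sudo docker login "$registry" &&
    sudo docker push "$image"
}
```

For example, `build_and_push <registryname>.azurecr.io` with the registry name substituted.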

The benchmark_demo module should now be in the registry in Azure.

Figure 2: benchmark_demo container in Azure Repository

Deploy

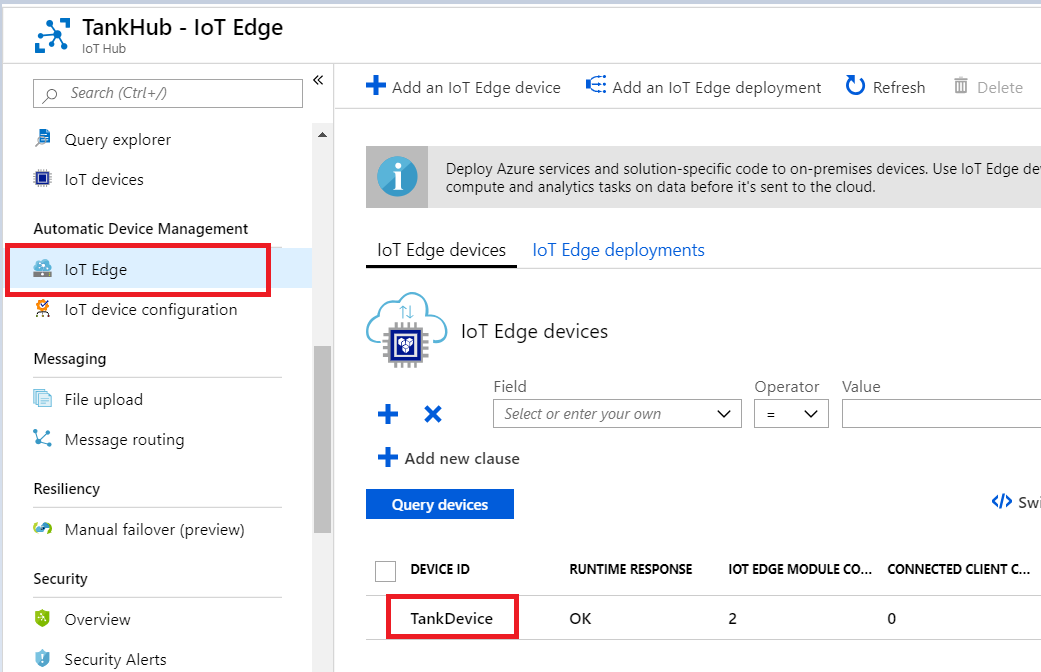

In Azure, open the IoT Hub, scroll down to Automatic Device Management, and click on IoT Edge. In the pane that opens on the right, click on the Device ID of the IEI Tank.

Figure 3: Going to the Device in Azure

At the top, click on Set modules.

Figure 4: Click on Set modules

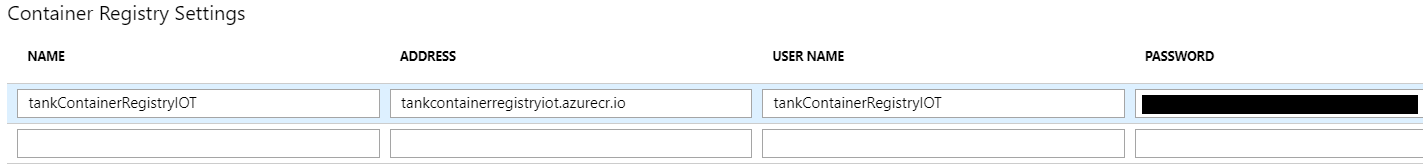

First, link the container registry to the device so it can access it. Add the name, address, user name, and password.

Figure 5: Configure the Registry

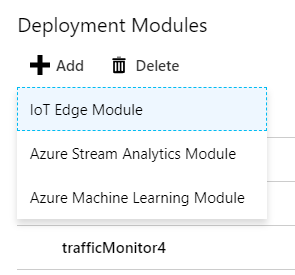

Now you can add a Deployment Module by clicking on +Add and selecting IoT Edge Module.

Figure 6. Add an IoT Edge Module

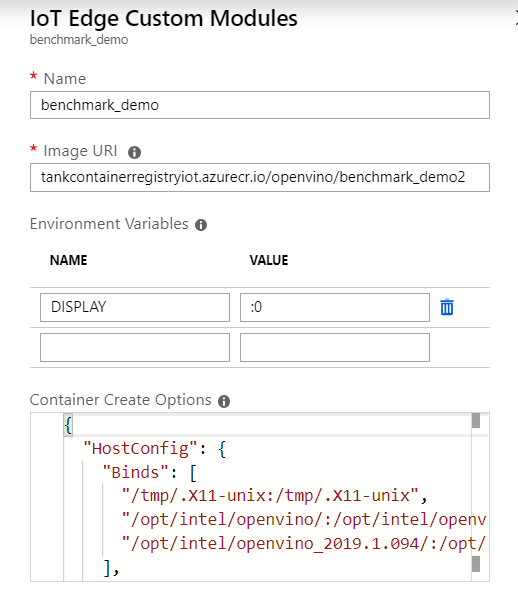

Now it is time to configure the module with a name and the image URI, which is where you originally pushed the image with Docker. Refer to Figure 7 for how the configuration will look.

As the container doesn’t have the Intel® Distribution of OpenVINO™ toolkit installed, it needs bind mounts to access the copy installed on the IEI Tank itself. The Privileged option allows the container to access the GPU. Add the below to the Container Create Options field.

Code Block 3. Container Create Options
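The field takes JSON in the Docker container-create format. The sketch below prints one possible value. The /opt/intel bind assumes the default toolkit install location, and the /tmp/.X11-unix bind is an assumption for X11 access that may not match your display setup. create_options is a helper name invented for this example.

```shell
#!/bin/bash
# Sketch only: prints a candidate value for the Container Create Options field.
create_options() {
    cat <<'EOF'
{
  "HostConfig": {
    "Binds": [
      "/opt/intel:/opt/intel",
      "/tmp/.X11-unix:/tmp/.X11-unix"
    ],
    "Privileged": true
  }
}
EOF
}
```

Running `create_options | python3 -m json.tool` is a quick way to confirm the JSON is well formed before pasting it into the portal.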

Choose a Restart Policy.

Note: Microsoft Azure may declare an invalid configuration when selecting On-Failed during testing. It is recommended to select another option.

Finally, define one environment variable for X11 forwarding to work: DISPLAY = :0

Note: X11 forwarding can differ from machine to machine. Make sure X11 forwarding is enabled on your device, and either run the command xhost + in a terminal or otherwise manage how the container connects to your machine. The value of DISPLAY may also be different.

Figure 7: Module configuration

Click Save, then click Next through the remaining steps and click Submit to deploy the module to the device.

Add more modules as desired. For GPU, remember to update run_benchmark_demo.sh with the -d GPU option and push the image to Azure under a new name like <registryname>.azurecr.io/openvino/benchmark_demo_gpu.
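One way to produce the GPU variant is sketched below. It assumes the script contains the literal text `-d CPU` and that the command is run from the directory holding the Dockerfile. make_gpu_variant is a helper name invented for this example. Note that it edits the script in place, so switch it back before rebuilding the CPU image.

```shell
#!/bin/bash
# Sketch only: switch the script to the GPU and push under a new image name.
# $1: registry address, e.g. <registryname>.azurecr.io
make_gpu_variant() {
    registry="$1"
    image="$registry/openvino/benchmark_demo_gpu"
    sed -i 's/-d CPU/-d GPU/' run_benchmark_demo.sh &&
    sudo docker build -t "$image" . &&
    sudo docker push "$image"
}
```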

Useful commands

List the installed modules:

Remove the container:

Look at the logs of the container:
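The three operations above can be sketched with the iotedge and docker command-line tools, wrapped here in small helper functions whose names are made up for illustration. The module name is whatever was entered when the module was configured in Azure.

```shell
#!/bin/bash
# Sketch only: helper names are illustrative; $1 is the module/container name.

# List the modules running on the device.
list_modules() { sudo iotedge list; }

# Force-remove a module's container.
remove_module() { sudo docker rm -f "$1"; }

# Follow the log output of a module.
follow_logs() { sudo iotedge logs "$1" -f; }
```

For example, `follow_logs benchmark_demo` streams the benchmark output, assuming the module was named benchmark_demo.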

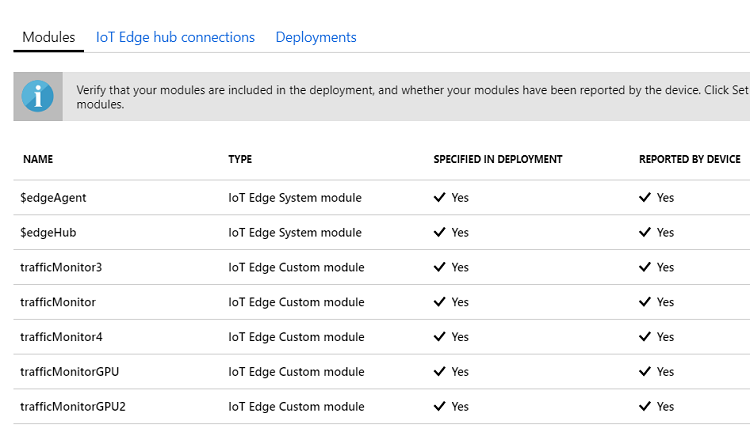

Figure 8. Modules deployed to IEI Tank

Conclusion

Now that multiple Azure modules are running on the IEI Tank, there are some considerations to take into account. First, containerizing a solution can make it run slower. The impact should be negligible for the average Intel® Distribution of OpenVINO™ toolkit application, but in one where milliseconds matter the cost could be considered very high. Also, the modules running inference on the GPU still use the CPU for other computation and impact the CPU modules quite heavily. The sample application from the GitHub repository used in this paper prints the times for preprocessing (ms), inference (ms/frame), and postprocessing (ms) in the logs. Using those as a reference to see how the processing time increases with each module can be helpful.

About the author

Whitney Foster is a software engineer at Intel in the core and visual computing group working on scale enabling projects for Internet of Things and computer vision.

References

Get Started with the Intel® Distribution of OpenVINO™ Toolkit and Microsoft Azure* IoT Edge