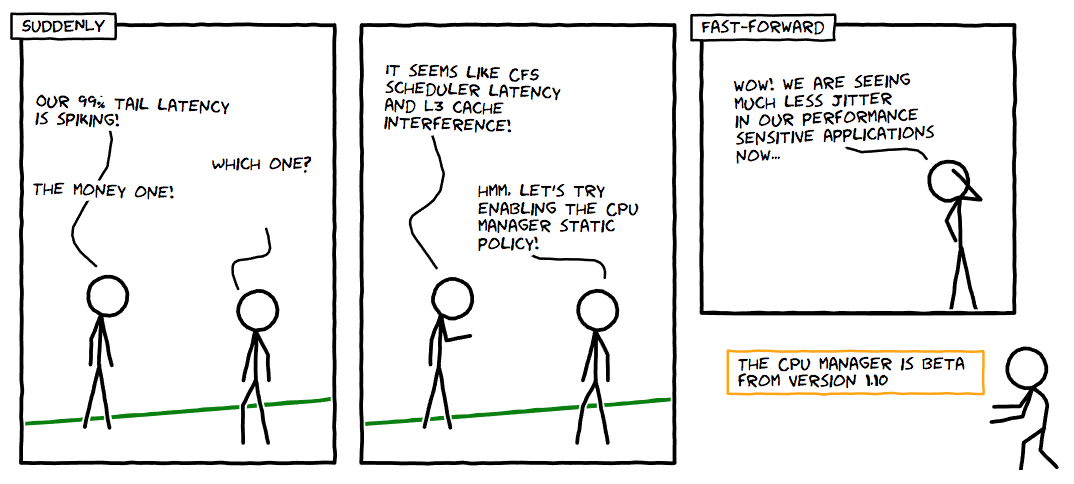

CPU Manager is a beta feature in Kubernetes that lets the Kubelet, the Kubernetes node agent, make better CPU placement decisions for workloads. If your workload is CPU-intensive, CPU Manager can provide better performance isolation by allocating CPUs for exclusive use.

CPU Manager might help workloads with the following characteristics:

- Sensitive to CPU throttling effects

- Sensitive to context switches

- Sensitive to processor cache misses

- Benefits from sharing processor resources (e.g., data and instruction caches)

- Sensitive to cross-socket memory traffic

- Sensitive to, or requires, hyperthreads from the same physical CPU core

How It Works

Most Linux* distributions include the following three CPU resource controls:

- Completely Fair Scheduler (CFS) shares: your weighted fair share of CPU time on the system

- CFS quota: your hard cap of CPU time over a specified period

- CPU affinity: which logical CPUs you are allowed to execute on

By default, all the pods and containers running on a compute node of your Kubernetes cluster can execute on any available core in the system. The total amount of allocatable shares and quota is limited to the CPU capacity that remains after CPU is explicitly reserved for Kubernetes and system daemons. Limits on CPU time can additionally be specified with CPU limits in the pod spec; Kubernetes uses CFS quota to enforce these limits on pod containers.
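To make the link between pod-spec CPU values and the CFS controls concrete, here is a small Python sketch of the conversion. The constants (1024 shares per CPU, a 100 ms CFS period, minimums of 2 shares and 1 ms of quota) are what we understand the Kubelet to use by default on cgroup v1; treat them as assumptions, and the function names as hypothetical.

```python
from typing import Optional

# Assumed Kubelet defaults for cgroup v1; illustrative, not authoritative.
CPU_PERIOD_US = 100_000  # default CFS period: 100 ms
SHARES_PER_CPU = 1024    # cpu.shares weight granted per whole CPU
MIN_SHARES = 2           # smallest cpu.shares value the kernel accepts
MIN_QUOTA_US = 1_000     # smallest quota written (1 ms), assumed


def milli_cpu_to_shares(milli_cpu: int) -> int:
    """Map a CPU request (millicores) to a CFS shares weight."""
    return max(MIN_SHARES, milli_cpu * SHARES_PER_CPU // 1000)


def milli_cpu_to_quota(milli_cpu: int, period_us: int = CPU_PERIOD_US) -> Optional[int]:
    """Map a CPU limit (millicores) to a CFS quota in microseconds per period.

    Returns None when no limit is set, i.e. the container is not capped.
    """
    if milli_cpu <= 0:
        return None
    return max(MIN_QUOTA_US, milli_cpu * period_us // 1000)


# A container requesting 0.5 CPU with a 1.5 CPU limit:
print(milli_cpu_to_shares(500))  # 512
print(milli_cpu_to_quota(1500))  # 150000 (150 ms of CPU time every 100 ms)
```

Note that a limit of exactly 2 CPUs yields a quota of 200,000 microseconds per 100,000 microsecond period, which is all the CPU time two CPUs can supply in that period.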

When CPU Manager is enabled with the "static" policy, it manages a shared pool of CPUs. Initially, this shared pool contains all the CPUs in the compute node. When the Kubelet creates a container that belongs to a Guaranteed pod and requests an integer number of CPUs, CPUs for that container are removed from the shared pool and assigned to it exclusively for the lifetime of the container. Other containers are migrated off these exclusively allocated CPUs.
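The eligibility rule for exclusive CPUs can be sketched as follows. This is a simplified model under stated assumptions: the `Container` class and both functions are hypothetical, CPU is expressed in millicores and memory in bytes, and the Guaranteed QoS check ignores details such as init containers. It checks that every container sets CPU and memory limits with matching requests, then that the container's CPU request is a whole number of CPUs.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Container:
    name: str
    # Hypothetical representation: CPU in millicores, memory in bytes.
    requests: Dict[str, int] = field(default_factory=dict)
    limits: Dict[str, int] = field(default_factory=dict)


def is_guaranteed(pod: List[Container]) -> bool:
    """Simplified Guaranteed QoS check: every container sets CPU and memory
    limits, and each request (defaulting to the limit) equals its limit."""
    for c in pod:
        for resource in ("cpu", "memory"):
            if resource not in c.limits:
                return False
            if c.requests.get(resource, c.limits[resource]) != c.limits[resource]:
                return False
    return True


def exclusive_cpu_count(pod: List[Container], container: Container) -> int:
    """Number of exclusive CPUs the static policy would assign, or 0."""
    if not is_guaranteed(pod):
        return 0
    milli = container.requests.get("cpu", container.limits.get("cpu", 0))
    if milli == 0 or milli % 1000 != 0:
        return 0  # non-integer CPU: stays in the shared pool
    return milli // 1000
```

A container with `requests` and `limits` of 2000 millicores CPU and equal memory gets 2 exclusive CPUs; one with a 1500-millicore limit is still Guaranteed but receives none.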

All containers without exclusive CPUs (Burstable, BestEffort, and Guaranteed containers with non-integer CPU requests) run on the CPUs remaining in the shared pool. When a container with exclusive CPUs terminates, its CPUs are returned to the shared pool.
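The shared-pool bookkeeping described above can be modeled with a toy class. `StaticCPUPool` is hypothetical; it picks the lowest-numbered free CPUs rather than following topology, and it ignores CPUs reserved for system daemons, so treat it as an illustration of the allocate/release cycle only.

```python
from typing import Dict, Set


class StaticCPUPool:
    """Toy model of the static policy's shared pool (hypothetical)."""

    def __init__(self, num_cpus: int) -> None:
        # Initially every logical CPU on the node belongs to the shared pool.
        self.shared: Set[int] = set(range(num_cpus))
        self.exclusive: Dict[str, Set[int]] = {}

    def allocate(self, container_id: str, n: int) -> Set[int]:
        """Move n CPUs from the shared pool to one container for its lifetime."""
        if n > len(self.shared):
            raise ValueError(f"need {n} CPUs, only {len(self.shared)} in shared pool")
        cpus = set(sorted(self.shared)[:n])  # naive choice; not topology-aware
        self.shared -= cpus
        self.exclusive[container_id] = cpus
        return cpus

    def release(self, container_id: str) -> None:
        """Return a terminated container's CPUs to the shared pool."""
        self.shared |= self.exclusive.pop(container_id, set())

    def shared_cpuset(self) -> Set[int]:
        """CPUs available to Burstable, BestEffort and non-integer Guaranteed containers."""
        return set(self.shared)
```

On an 8-CPU node, allocating 2 CPUs to one container leaves CPUs 2 through 7 for every non-exclusive container until that container terminates and `release` is called.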

How CPU Manager Improves Performance Isolation

With the CPU Manager static policy enabled, workloads might perform better for one or more of the following reasons:

- Exclusive CPUs can be allocated to the workload container and withheld from all other containers, so the workload does not share those CPUs. As a result, expect better performance from isolation when an aggressor or other co-located workload is present.

- Because CPUs can be partitioned among workloads, interference between the resources those workloads use is reduced. These resources include not only the CPUs themselves but also cache hierarchies and memory bandwidth. This generally improves workload performance.

- CPU Manager allocates CPUs in topological order on a best-effort basis. If a whole socket is free, CPU Manager can allocate that socket's CPUs exclusively to the workload. Keeping a workload's CPUs on one socket boosts its performance by avoiding cross-socket traffic.

- Containers in Guaranteed QoS pods are subject to CFS quota. Very bursty workloads may get scheduled, burn through their quota before the end of the period, and get throttled. During that throttled time, there may or may not be other meaningful work for those CPUs to do. Under the static policy, a container that receives N exclusive CPUs also has a CPU limit of N, so its CFS quota equals N CPUs' worth of time per period, the maximum CPU time it could consume on those N CPUs anyway. As a result, these containers are not subject to CFS throttling.
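The best-effort topological ordering can be illustrated with a simplified allocator. It follows the general order used by the static policy's allocator (whole sockets first, then whole cores, then single hyperthreads). The topology model, the function name `pick_cpus_by_topology`, and the "most free CPUs first" socket preference are our own simplifications, not the Kubelet's actual code.

```python
from typing import Dict, List, Set

# Hypothetical topology model: socket ID -> list of physical cores,
# each core being the list of its logical CPU IDs (hyperthread siblings).
Topology = Dict[int, List[List[int]]]


def pick_cpus_by_topology(topo: Topology, free: Set[int], n: int) -> List[int]:
    """Pick n CPUs from `free` in topological order (simplified sketch).

    1. Whole free sockets, while at least a socket's worth of CPUs is still needed.
    2. Whole free cores, while at least a core's worth is still needed.
    3. Single free hyperthreads for any remainder.
    """
    avail = set(free)
    picked: List[int] = []

    def take(cpus: Set[int]) -> None:
        picked.extend(sorted(cpus))
        avail.difference_update(cpus)

    # Step 1: whole sockets.
    for sock in sorted(topo):
        sock_cpus = {c for core in topo[sock] for c in core}
        if n - len(picked) >= len(sock_cpus) and sock_cpus <= avail:
            take(sock_cpus)

    # Socket preference for steps 2 and 3 (our heuristic): most free CPUs first,
    # computed once so cores and leftover threads tend to share a socket.
    order = sorted(topo, key=lambda s: sum(c in avail for core in topo[s] for c in core),
                   reverse=True)

    # Step 2: whole cores.
    for sock in order:
        for core in topo[sock]:
            if n - len(picked) >= len(core) and set(core) <= avail:
                take(set(core))

    # Step 3: individual hyperthreads.
    for sock in order:
        for core in topo[sock]:
            for cpu in core:
                if len(picked) < n and cpu in avail:
                    take({cpu})

    if len(picked) < n:
        raise ValueError(f"need {n} CPUs, only {len(picked)} free")
    return picked


# Two sockets x two cores x two hyperthreads.
topo = {0: [[0, 4], [1, 5]], 1: [[2, 6], [3, 7]]}
print(pick_cpus_by_topology(topo, set(range(8)), 4))        # [0, 1, 4, 5]: all of socket 0
print(pick_cpus_by_topology(topo, set(range(8)) - {0}, 3))  # [2, 6, 3]: all on socket 1
```

In the second call, socket 0 is partly used, so the sketch keeps the whole request on socket 1 instead of splitting it across sockets.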

To understand the performance improvement and isolation provided by enabling the CPU Manager feature in the Kubelet, we ran experiments on an Intel® Xeon® processor-based dual-socket compute node with 48 logical CPUs (24 physical cores, each with 2-way hyperthreading). The results of those tests, along with CPU Manager performance on real-world workloads in three different scenarios, are described in the Kubernetes blog post “Feature Highlight: CPU Manager.”