This article describes how to configure vHost-user with multiqueue for a virtual machine (VM) connected to Open vSwitch (OvS) with the Data Plane Development Kit (DPDK). It is written for network administrators who want to use vHost-user multiqueue to increase bandwidth to VM vHost-user ports in an Open vSwitch with DPDK server deployment.

At the time of this writing, vHost-user multiqueue for OvS with DPDK is available in Open vSwitch 2.5, which can be downloaded here. If using Open vSwitch 2.5, the installation steps for OvS with DPDK can be found here.

You can also use the OvS master branch, which can be downloaded here if you want to have access to the latest development features. If using the OvS master branch, installation steps for OvS with DPDK can be found here.

Note: As setup and component requirements differ for OvS with DPDK between Open vSwitch 2.5 and Open vSwitch master, be sure to follow the installation steps relevant to your Open vSwitch deployment.

vHost-user Multiqueue in OvS with DPDK

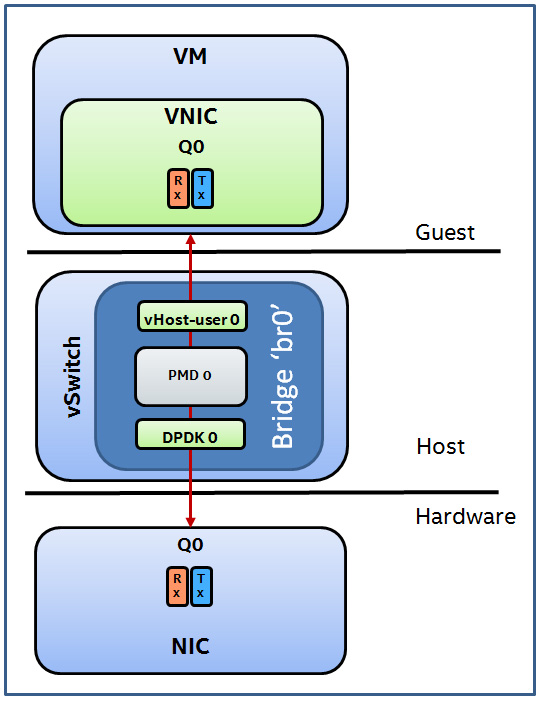

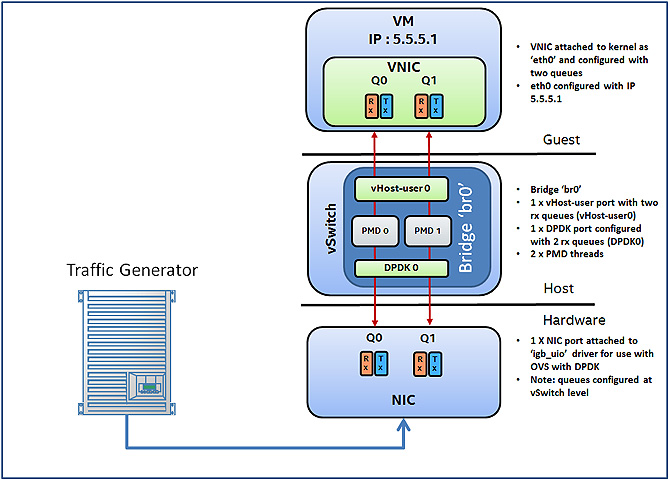

Before we configure vHost-user multiqueue, let’s first discuss how it is different from the standard vHost-user (that is, a single queue). Figure 1 shows a standard single queue configuration of vHost-user.

Figure 1. vHost-user default configuration.

The illustration is divided into three parts.

Hardware: a network interface card (NIC) configured with one queue (Q0) by default; a queue consists of a reception (rx) and transmission (tx) path.

Host: the host runs the vSwitch (in this case OvS with DPDK). The vSwitch has a DPDK port (‘DPDK0’) to transmit and receive traffic from the NIC. It has a vHost-user port (‘vHost-user0’) to transmit and receive traffic from a VM. It also has a single Poll Mode Driver thread (PMD 0) by default that is responsible for executing transmit and receive actions on both ports.

Guest: A VM. This is configured with a Virtual NIC (VNIC). A VNIC can be used as a kernel network device or as a DPDK interface in the VM. When a vHost-user port is configured by default, only one queue (Q0) can be used in the VNIC. This is a problem as a single queue can be a bottleneck; all traffic sent and received from the VM can only pass through this single queue.

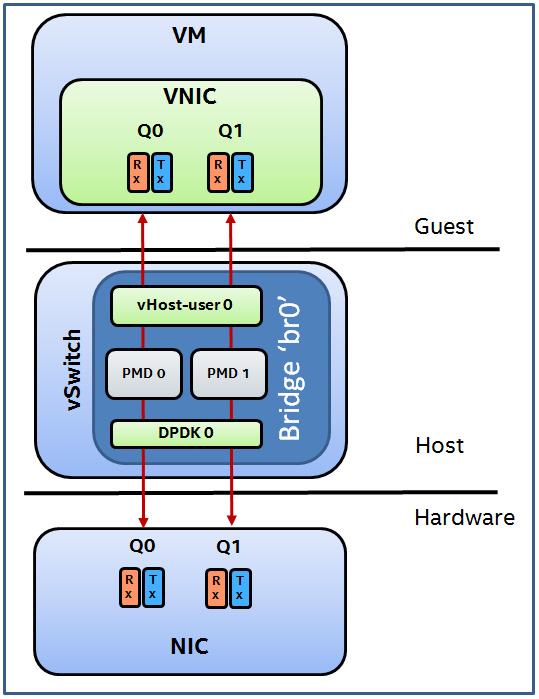

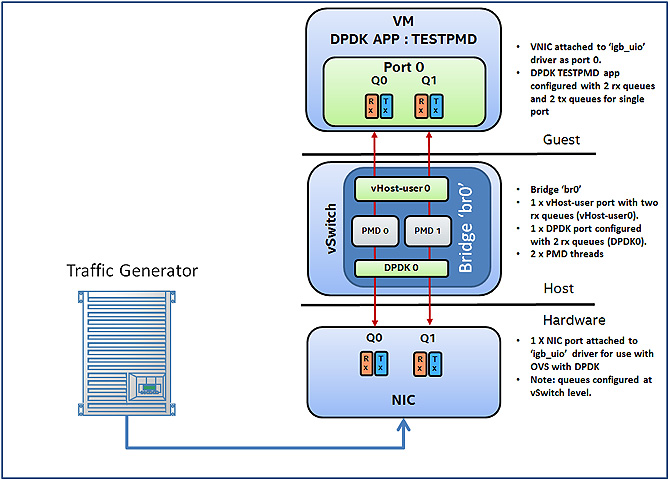

vHost-user multiqueue resolves this problem by allowing multiple queues to be used in the VNIC in the VM. This provides a method to scale out performance when using vHost-user ports. Figure 2 describes the setup required for two queues to be configured in the VNIC with vHost-user multiqueue.

Figure 2. vHost-user multiqueue configuration with two queues.

In this configuration, notice the change in each component.

Hardware: The NIC is now configured with two queues (Q0 and Q1). The number of queues used in the VNIC is matched with the number of queues configured in the hardware NIC. Receive Side Scaling (RSS) is used to distribute ingress traffic among the queues on the NIC.

Host: The vSwitch is now configured with two PMD threads. Each queue is handled by a separate PMD thread so that the load for a VNIC is spread across multiple PMDs.

Guest: The VNIC in the guest is now configured with two queues (Q0 and Q1). The number of queues configured in the VNIC dictates the minimum number of PMDs and rx queues required for optimal distribution.

Test Environment

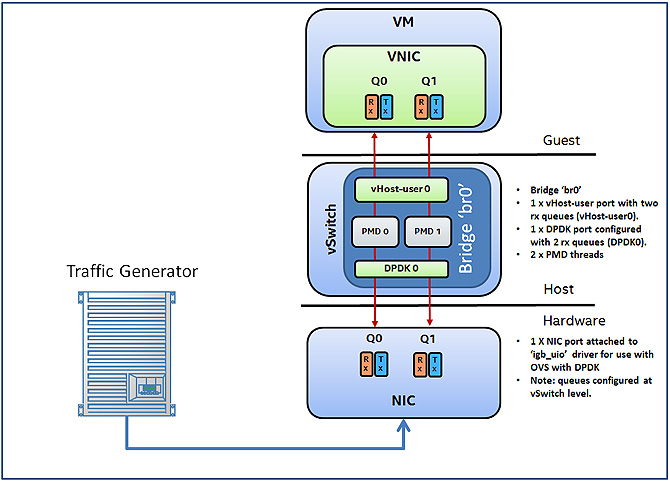

Figure 3. Test environment.

This article covers two use cases in which vHost-user multiqueue is configured and verified.

- vHost-user multiqueue using kernel driver (virtio-net) in guest.

- vHost-user multiqueue with DPDK driver (igb_uio) in guest.

Note: Both the host and the VM used in this setup run Fedora* 22 Server 64-bit with Linux* kernel 4.4.6. DPDK 16.04 is also used in both the host and VM. QEMU* 2.5 is used on the host for launching the VM with vHost-user multiqueue, which connects the VM to the vSwitch bridge.

The setup of the following components remains the same in both use cases:

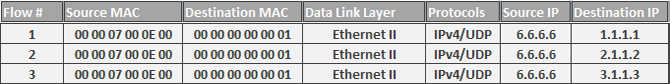

Test traffic configuration

The traffic generator is external and connected to the vSwitch via a NIC. There is no specific traffic generator recommended for these tests; traffic generated via software or hardware can be used. However, to confirm vHost-user multiqueue functionality, multiple flows are required (a minimum of two flows). Below are examples of three flows that can be configured and should cause traffic to be distributed between the multiple queues.

Notice that the destination IP varies. This is required in order for RSS to be used on the NIC. When using a NIC with vHost-user multiqueue, this is a required property for ingress traffic. All other fields can remain the same for testing purposes.

NIC configuration

The NIC used for this article is an Intel® Ethernet Converged Network Adapter XL710 but other NICs can also be used; a list of NICs that are supported by DPDK can be found here. The NIC has been bound to the ‘igb_uio’ driver on the host system. Steps for building the DPDK and binding a NIC to ‘igb_uio’ can be found in the INSTALL.DPDK.rst document that is packaged with OvS. The NIC is connected to the traffic generator. Rx queues for the NIC are configured at the vSwitch level.

vSwitch configuration

Follow the commands in INSTALL.DPDK.rst to launch the vSwitch with the DPDK. Once the vSwitch is launched with the DPDK, configure it with the following commands.

Add a bridge ‘br0’ as type netdev.

ovs-vsctl add-br br0 -- set Bridge br0 datapath_type=netdev

Add physical port as ‘dpdk0’, as port type=dpdk and request ofport=1.

ovs-vsctl add-port br0 dpdk0 -- set Interface dpdk0 type=dpdk ofport_request=1

Add vHost-user port as ‘vhost-user0’, as port type=dpdkvhostuser and request ofport=2.

ovs-vsctl add-port br0 vhost-user0 -- set Interface vhost-user0 type=dpdkvhostuser ofport_request=2

Delete any existing flows on the bridge.

ovs-ofctl del-flows br0

Configure flow to send traffic received on port 1 to port 2 (dpdk0 to vhost-user0).

ovs-ofctl add-flow br0 in_port=1,action=output:2

Set the PMD thread configuration to two threads to run on the host. In this example core 2 and core 3 on the host are used.

ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=C
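The mask value is a hexadecimal bitmap in which bit N selects core N, so cores 2 and 3 give binary 1100, which is hexadecimal C. The following is a minimal sketch of that arithmetic; `cores_to_mask` is a hypothetical helper written for illustration and is not part of OvS.

```shell
#!/bin/sh
# cores_to_mask is a hypothetical helper, not an OvS tool.
# It sets one bit per core ID given as an argument and prints
# the resulting bitmap in hexadecimal.
cores_to_mask() {
    mask=0
    for core in "$@"; do
        mask=$(( mask | (1 << core) ))
    done
    printf '%X\n' "$mask"
}

cores_to_mask 2 3   # prints C
```

The printed value can be passed as the pmd-cpu-mask setting. To use a different pair of cores, only the arguments change.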

Set the number of receive queues for the physical port ‘dpdk0’ to 2.

ovs-vsctl set Interface dpdk0 options:n_rxq=2

Set the number of receive queues for the vHost-user port ‘vhost-user0’ to 2.

ovs-vsctl set Interface vhost-user0 options:n_rxq=2

Guest VM Configuration

The QEMU parameters required to launch the VM and the methods by which multiple queues are configured within the guest will vary depending on whether the VNIC is used as a Kernel interface or as a DPDK port. The following sections deal with the specific commands required for each use case.

vHost-user Multiqueue with Kernel Interface in Guest

Figure 4. vHost-user multiqueue using kernel driver (virtio-net) in guest.

Once the vSwitch has been configured as described in the Test Environment section, launch the VM with QEMU (QEMU 2.5 was used for this test case) using the following sample command:

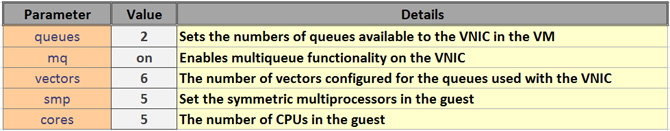

./qemu/x86_64-softmmu/qemu-system-x86_64 -cpu host -smp 2,cores=2 -hda /root/fedora-22.img -m 2048M --enable-kvm -object memory-backend-file,id=mem,size=2048M,mem-path=/dev/hugepages,share=on -numa node,memdev=mem -mem-prealloc -chardev socket,id=char1,path=/usr/local/var/run/openvswitch/vhost-user0 -netdev type=vhost-user,id=mynet1,chardev=char1,vhostforce,queues=2 -device virtio-net-pci,mac=00:00:00:00:00:01,netdev=mynet1,mq=on,vectors=6

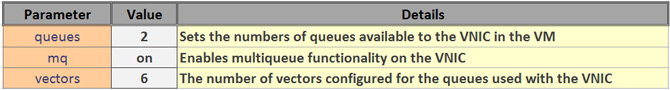

The parameters of particular relevance to vHost-user multiqueue here are the following:

The vector value is computed with ‘number of queues x 2 + 2'. The example configures two queues for the VNIC, which requires six vectors (2 x 2 + 2).
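The same formula can be applied to other queue counts. The following is a small sketch; `virtio_vectors` is a hypothetical helper name used only for illustration.

```shell
#!/bin/sh
# virtio_vectors is a hypothetical helper that applies the formula
# from the text: number of queues x 2 + 2.
virtio_vectors() {
    queues=$1
    echo $(( queues * 2 + 2 ))
}

virtio_vectors 2   # prints 6, the value used in the sample QEMU command
virtio_vectors 4   # prints 10
```

When changing the queues value on the -netdev option, the vectors value on the -device option should be recomputed to match.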

Once launched, use the following commands to configure eth0 with multiple queues.

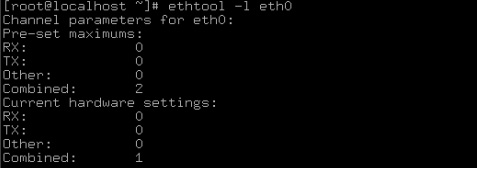

Check the channel configuration for the virtio devices with the following:

ethtool -l eth0

Note that the pre-set maximum for combined channels is 2, but the current hardware setting for combined channels is 1. The number of combined channels must be set to 2 in order to use multiqueue. This can be done with the following command:

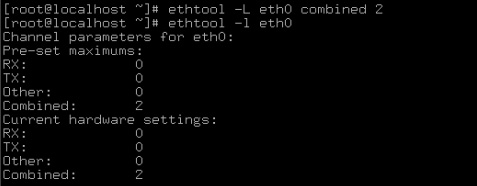

ethtool -L eth0 combined 2

Examining the channel configuration with ‘ethtool -l eth0’ now shows that two combined channels have been configured.
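The before and after check can also be scripted. The sketch below assumes the usual `ethtool -l` layout, in which a "Combined:" line appears once under the pre-set maximums and once under the current hardware settings. `combined_channels` is a hypothetical helper, and the sample text is illustrative rather than captured from the test system.

```shell
#!/bin/sh
# combined_channels is a hypothetical helper. It reads `ethtool -l` output
# on stdin and prints "<pre-set maximum> <current setting>" for the
# Combined channel count.
combined_channels() {
    awk '/^Combined:/ { printf "%s%s", sep, $2; sep = " " } END { print "" }'
}

# Illustrative sample matching the state described in the text
# (maximum of 2, current setting of 1).
sample='Channel parameters for eth0:
Pre-set maximums:
RX:             0
TX:             0
Other:          0
Combined:       2
Current hardware settings:
RX:             0
TX:             0
Other:          0
Combined:       1'

printf '%s\n' "$sample" | combined_channels   # prints "2 1"
```

In the guest, the same helper can be fed live output with `ethtool -l eth0 | combined_channels`. After the channel count has been raised, both numbers should be 2.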

Configure eth0, ARP entries, and IP route rules in the VM.

ifconfig eth0 5.5.5.1/24 up

sysctl -w net.ipv4.ip_forward=1

sysctl -w net.ipv4.conf.all.rp_filter=0

ip route add 6.6.6.0/24 dev eth0

route add default gw 6.6.6.6 eth0

arp -s 6.6.6.6 00:00:07:00:0E:00

ip route add 1.1.1.0/24 dev eth0

route add default gw 1.1.1.1 eth0

arp -s 1.1.1.1 DE:AD:BE:EF:CA:FA

ip route add 2.1.1.0/24 dev eth0

route add default gw 2.1.1.2 eth0

arp -s 2.1.1.2 DE:AD:BE:EF:CA:FB

ip route add 3.1.1.0/24 dev eth0

route add default gw 3.1.1.3 eth0

arp -s 3.1.1.3 DE:AD:BE:EF:CA:FC

Once these steps are complete, start the traffic flows. From the VM you can check that traffic is being received on both queues on eth0 with the following command:

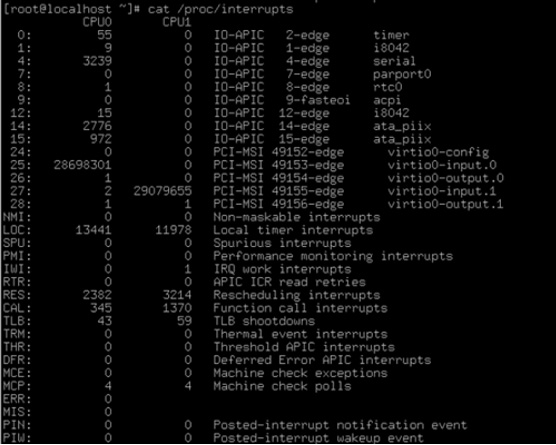

cat /proc/interrupts

You should examine the interrupts generated for ‘virtio0-input.0’ and ‘virtio0-input.1’ as interrupts here will signify packets arriving on input queue 0 and 1 for eth0. Note that if the test traffic is generated at a high rate you will not see interrupts, because QEMU switches to PMD mode when processing traffic on the eth0 interface. If this occurs, lower the transmission rate and interrupts will be generated again by QEMU.
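Because /proc/interrupts lists one count per CPU, it can help to total the counts for each input queue. The sketch below assumes the interrupt counts are the only purely numeric columns on each line. `input_queue_irqs` is a hypothetical helper, and the sample lines and counts are made up for illustration.

```shell
#!/bin/sh
# input_queue_irqs is a hypothetical helper. It reads /proc/interrupts-style
# text on stdin and, for each virtio0-input.N line, prints the queue name
# followed by the interrupt count summed across all CPU columns.
input_queue_irqs() {
    awk '$NF ~ /^virtio0-input\.[0-9]+$/ {
        total = 0
        for (i = 2; i < NF; i++)
            if ($i ~ /^[0-9]+$/) total += $i
        print $NF, total
    }'
}

# Illustrative sample for a two-CPU guest; the counts are invented.
sample=' 24:          0          0   PCI-MSI-edge      virtio0-config
 25:       1200        300   PCI-MSI-edge      virtio0-input.0
 26:          5          0   PCI-MSI-edge      virtio0-output.0
 27:        800        150   PCI-MSI-edge      virtio0-input.1'

printf '%s\n' "$sample" | input_queue_irqs
# prints:
# virtio0-input.0 1500
# virtio0-input.1 950
```

In the guest, run `input_queue_irqs < /proc/interrupts` twice while traffic is flowing. If both totals increase between runs, both input queues are receiving packets.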

vHost-user Multiqueue with DPDK Port in Guest

Figure 5. vHost-user multiqueue with DPDK driver (igb_uio) in guest.

Once the vSwitch has been configured as described in the Test Environment section, launch the VM with QEMU (QEMU 2.5 was used for this test case). The VM can be launched with the following sample command:

./qemu/x86_64-softmmu/qemu-system-x86_64 -cpu host -smp 5,cores=5 -hda /root/fedora-22.img -m 4096M --enable-kvm -object memory-backend-file,id=mem,size=4096M,mem-path=/dev/hugepages,share=on -numa node,memdev=mem -mem-prealloc -chardev socket,id=char1,path=/usr/local/var/run/openvswitch/vhost-user0 -netdev type=vhost-user,id=mynet1,chardev=char1,vhostforce,queues=2 -device virtio-net-pci,mac=00:00:00:00:00:01,netdev=mynet1,mq=on,vectors=6

The parameters of particular relevance to vHost-user multiqueue here are the following:

In this test case we are using DPDK 16.04 in the guest, specifically the test-pmd application. The DPDK can be downloaded here; a quick start guide on how to build and compile DPDK can be found here.

Once the DPDK has been built and the eth0 interface attached to the ‘igb_uio’ driver, navigate to the test-pmd directory and make the application.

cd dpdk/app/test-pmd/

make

Test-pmd is a sample application that can be used to test the DPDK in a packet forwarding mode. It also provides features, such as queue stat mapping, that are useful for validating vHost-user multiqueue.

Run the application with the following command:

./testpmd -c 0x1F -n 4 --socket-mem 1024 -- --burst=64 -i --txqflags=0xf00 --disable-hw-vlan --rxq=2 --txq=2 --nb-cores=4

The core mask of 0x1F allows test-pmd to use all five cores in the VM; however, one core is used for running the application command line while the other four run the forwarding engines. It is important to note here that we set the number of rxq and txq to 2 and that we set nb-cores to 4 (each rxq and txq requires one core at a minimum). A detailed explanation of each test-pmd parameter as well as expected usage can be found here.
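The relationship between the queue counts, nb-cores, and the core mask can be sketched as follows. This applies the rule of thumb stated above (one forwarding core per rxq and per txq, plus one core for the command line) and assumes the guest cores are numbered contiguously from 0. `testpmd_cores` is a hypothetical helper, not part of the DPDK.

```shell
#!/bin/sh
# testpmd_cores is a hypothetical helper. Given the rxq and txq counts, it
# prints the core mask and the nb-cores value, assuming one forwarding core
# per queue plus one extra core for the test-pmd command line.
testpmd_cores() {
    rxq=$1
    txq=$2
    nb_cores=$(( rxq + txq ))
    total_cores=$(( nb_cores + 1 ))
    printf '0x%X %d\n' $(( (1 << total_cores) - 1 )) "$nb_cores"
}

testpmd_cores 2 2   # prints 0x1F 4
```

The first value corresponds to the -c option and the second to --nb-cores. The VM must be launched with at least as many cores as the mask covers (five in this example, matching -smp 5).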

Once test-pmd has launched, enter the following commands at the command prompt:

set stat_qmap rx 0 0 0 # Map rx stats from port 0, queue 0 to queue map 0

set stat_qmap rx 0 1 1 # Map rx stats from port 0, queue 1 to queue map 1

set fwd rxonly # Configure test-pmd to process rx operations only

start # Start test-pmd packet processing operations

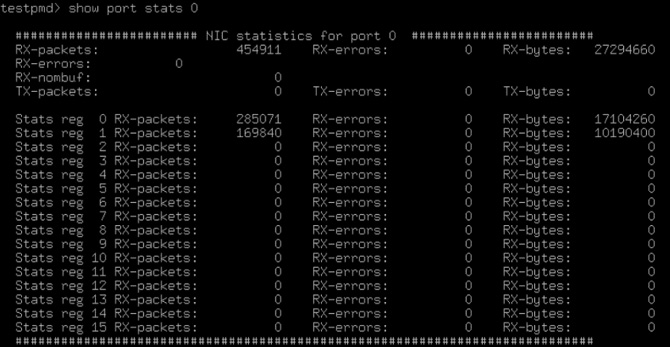

Now start the test traffic flows on the traffic generator. While traffic is flowing, use the following command to examine the queue stats for port 0.

show port stats 0 # Display the port stats for port 0

You should see traffic arriving on both queue 0 and queue 1.

Conclusion

In this article we showed two use cases for vHost-user multiqueue with a VM on OvS with the DPDK: a kernel interface in the guest and a DPDK interface in the guest. We demonstrated the utility commands to configure multiqueue at the vSwitch, QEMU, and VM levels. We described the type of test traffic required for multiqueue and demonstrated how to verify that multiqueue is working correctly with both a kernel interface and a DPDK port inside the guest.

About the Author

Ian Stokes is a network software engineer with Intel. His work is primarily focused on accelerated software switching solutions in the user space running on Intel® architecture. His contributions to Open vSwitch with DPDK include the OvS DPDK QoS API and egress/ingress policer solutions.

Additional Information

Have a question? Feel free to follow up on the Open vSwitch discussion mailing list.

To learn more about Open vSwitch with DPDK in general, we encourage you to check out the following videos and articles in the Intel® Developer Zone and Intel® Network Builders University.

Rate Limiting Configuration and Usage for Open vSwitch with DPDK