Hello, everyone! In the previous part of this blog post series, we presented the nature of the simulations performed by the BioDynaMo project. We also saw how our requirements and constraints for the distributed runtime shaped the design of the architecture and determined our choice of tools and frameworks.

Today, we will present some of the technical details of the distributed runtime prototype.

The Majordomo pattern

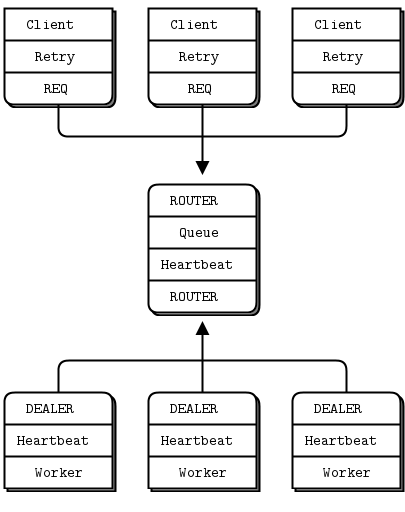

Majordomo is one of the reliable request-reply patterns described in the ZeroMQ guide. It provides a service-oriented queuing mechanism, in which a set of clients can send messages to a set of workers. Each worker can register for one or more services to indicate which requests it can serve. An intermediate node called the broker (the primary node, in our case) is responsible for handling the messages from both clients and workers.
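The service-oriented queuing that Majordomo provides can be illustrated without any networking. The following is a minimal C++17 sketch, not the actual BioDynaMo or ZeroMQ code. The class `ServiceBroker` and its methods `WorkerReady`, `Dispatch`, and `PendingRequests` are hypothetical names, and message transport is left out entirely. The sketch only shows the bookkeeping a broker keeps per service: the idle workers, and the requests still waiting for a worker.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <map>
#include <optional>
#include <string>

// Hypothetical in-memory model of Majordomo-style service queuing: no sockets,
// just the bookkeeping a broker keeps per service.
class ServiceBroker {
 public:
  // A worker tells the broker it is ready to serve `service`. If a request for
  // that service is already waiting, it is handed to this worker at once and
  // returned; otherwise the worker is parked as idle.
  std::optional<std::string> WorkerReady(const std::string& service,
                                         const std::string& worker_id) {
    Service& s = services_[service];
    if (!s.pending_requests.empty()) {
      std::string request = s.pending_requests.front();
      s.pending_requests.pop_front();
      return request;
    }
    s.idle_workers.push_back(worker_id);
    return std::nullopt;
  }

  // A client asks for `service`. Returns the id of the idle worker that will
  // receive the request, or std::nullopt if the request had to be queued.
  std::optional<std::string> Dispatch(const std::string& service,
                                      const std::string& request) {
    Service& s = services_[service];
    if (s.idle_workers.empty()) {
      s.pending_requests.push_back(request);
      return std::nullopt;
    }
    std::string worker_id = s.idle_workers.front();
    s.idle_workers.pop_front();
    return worker_id;
  }

  std::size_t PendingRequests(const std::string& service) const {
    auto it = services_.find(service);
    return it == services_.end() ? 0 : it->second.pending_requests.size();
  }

 private:
  struct Service {
    std::deque<std::string> idle_workers;      // ready workers, FIFO order
    std::deque<std::string> pending_requests;  // requests with no free worker
  };
  std::map<std::string, Service> services_;
};
```

For example, a request for a service with no idle worker is queued, and it is handed over as soon as a worker of that service reports that it is ready. A real broker would then forward the request to that worker over its ROUTER socket.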

Because the broker is the central component of the architecture, it carries some extra responsibilities. First, it manages the connections to both workers and clients. For that purpose, it uses a single ROUTER socket (ZeroMQ's asynchronous reply socket), which can send messages to and receive messages from multiple clients and workers. Thus, a client can send a message to a specific worker, using the worker's unique identifier (i.e., its service name) for routing. Using the same mechanism, the worker can send a reply back to the client.
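The identity-based routing can be sketched as frame manipulation. A ROUTER socket prepends the sending peer's identity as the first frame of every message it receives. It also uses the first frame of every message it sends to pick the destination peer. The sketch below assumes a simplified envelope without the Majordomo protocol header and empty delimiter frames, and shows how a broker could rewrite frames in both directions. `EnvelopeRouter`, `Frames`, and the `worker_for_service` map (service name to ROUTER identity) are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

using Frames = std::vector<std::string>;  // one multipart ZeroMQ message

// Hypothetical broker-side envelope handling with a simplified framing.
struct EnvelopeRouter {
  // Service name -> ROUTER identity of the worker offering it.
  std::map<std::string, std::string> worker_for_service;

  // A client request as read from the ROUTER socket: the socket has already
  // prepended the client's identity, giving [client_id, service, body].
  // Returns [worker_id, client_id, body]: the ROUTER consumes the first frame
  // to pick the worker, and client_id travels along so the reply can return.
  Frames ForwardRequest(const Frames& in) const {
    const std::string& client_id = in.at(0);
    const std::string& worker_id = worker_for_service.at(in.at(1));
    return {worker_id, client_id, in.at(2)};
  }

  // A worker reply as read from the ROUTER socket: [worker_id, client_id,
  // body]. Returns [client_id, body], which routes the body to the client.
  static Frames ForwardReply(const Frames& in) { return {in.at(1), in.at(2)}; }
};
```

The worker only has to echo the client identity in its reply. The broker's single ROUTER socket does the rest, which is why one socket is enough for any number of clients and workers.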

In addition to routing, the broker uses heartbeats to detect worker failures. Every few seconds, it sends a message with no payload to each worker. If a worker replies on time, it is still alive and able to serve future requests. If it does not, the broker retries a few times, so that a transient network issue is not mistaken for a failure. If there is still no answer, the broker assumes that the worker node is offline and cleans up the state associated with it.
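One common way to implement this tolerance is a liveness counter; the ZeroMQ guide's Paranoid Pirate examples use one. The sketch below is a tick-based model under that assumption. Each call to `Tick()` stands for one heartbeat interval, and any message from a worker resets its liveness. A worker that stays silent for `max_missed` consecutive intervals is reported as offline. The class name and the tick abstraction are hypothetical, and the sketch omits sending the empty heartbeat messages.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical tick-based model of broker-side failure detection. Each call to
// Tick() stands for one heartbeat interval ("every few seconds").
class HeartbeatMonitor {
 public:
  // max_missed: how many consecutive silent intervals are tolerated before a
  // worker is declared offline (the "retries a few times" in the text).
  explicit HeartbeatMonitor(int max_missed) : max_missed_(max_missed) {}

  void AddWorker(const std::string& id) { liveness_[id] = max_missed_; }

  // Any message from a worker, heartbeat reply or real reply, proves that it
  // is alive, so its liveness is reset to the maximum.
  void OnMessageFrom(const std::string& id) {
    auto it = liveness_.find(id);
    if (it != liveness_.end()) it->second = max_missed_;
  }

  // One heartbeat interval has elapsed: every worker loses one unit of
  // liveness. Workers that reach zero are removed and returned, so the caller
  // can clean up whatever it kept for them.
  std::vector<std::string> Tick() {
    std::vector<std::string> offline;
    for (auto it = liveness_.begin(); it != liveness_.end();) {
      if (--it->second <= 0) {
        offline.push_back(it->first);
        it = liveness_.erase(it);
      } else {
        ++it;
      }
    }
    return offline;
  }

  bool IsAlive(const std::string& id) const { return liveness_.count(id) > 0; }

 private:
  int max_missed_;
  std::map<std::string, int> liveness_;  // worker id -> remaining liveness
};
```

A larger `max_missed` makes the broker more tolerant of network hiccups, at the cost of noticing real failures later.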

Modifying and extending the pattern

Even though the Majordomo pattern is a solid foundation for what we are trying to build, we still have two issues to address: worker-to-worker communication, and a middleware layer that exposes a communication interface to the application.

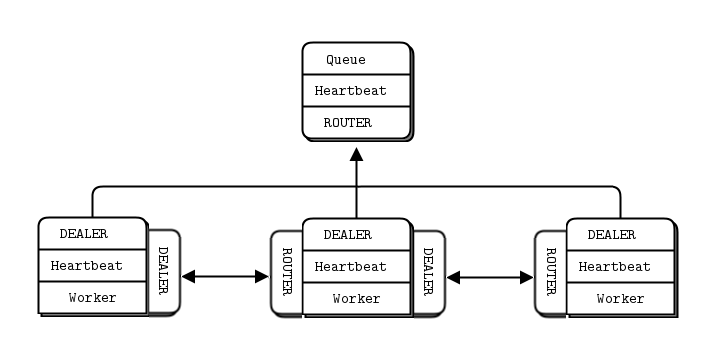

To implement the former, we use a DEALER-ROUTER socket pair between neighboring workers. By convention, the right worker creates a ROUTER socket and acts a server (i.e., binds to an IP address), and the left worker creates a DEALER socket and acts as a client (i.e., connects to this IP address). This way, neighboring workers can exchange their halo regions (described in the previous blog post) asynchronously. Also, we properly exploit the power of ZeroMQ by using a single socket for sending and receiving the data as well as a single network endpoint. Our new connection diagram is shown below:
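The socket convention can be made concrete for a simple case. The sketch below assumes the workers form a one-dimensional chain, where worker `i`'s right neighbor is worker `i + 1`. For a given worker, it computes which ROUTER endpoint the worker binds and which DEALER endpoint it connects to. The host list, the `base_port + rank` port scheme, and the names `NeighborSockets` and `PlanSockets` are illustrative assumptions. Creating the actual ZeroMQ sockets is left out.

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Sockets a worker needs for halo exchange in a hypothetical 1D chain of
// workers, following the convention from the text: of each neighboring pair,
// the right worker binds a ROUTER and the left worker connects a DEALER.
struct NeighborSockets {
  std::optional<std::string> router_bind;     // serves the left neighbor
  std::optional<std::string> dealer_connect;  // reaches the right neighbor
};

// `hosts[i]` is the address of worker i; worker i binds port base_port + i.
// Both the host list and the port scheme are illustrative assumptions.
NeighborSockets PlanSockets(int rank, const std::vector<std::string>& hosts,
                            int base_port) {
  NeighborSockets s;
  const int n = static_cast<int>(hosts.size());
  if (rank > 0) {
    // A left neighbor exists: this worker is the "right" side of that pair.
    s.router_bind = "tcp://*:" + std::to_string(base_port + rank);
  }
  if (rank + 1 < n) {
    // A right neighbor exists: connect to the ROUTER it binds.
    s.dealer_connect = "tcp://" + hosts[rank + 1] + ":" +
                       std::to_string(base_port + rank + 1);
  }
  return s;
}
```

Interior workers end up with both sockets: a ROUTER for their left neighbor and a DEALER for their right one. Both socket types are bidirectional, so each halo exchange between two neighbors travels over a single connection.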

The next step is implementing the middleware layer. Its goal is to hide the low-level network communication details from the application itself (i.e., the simulation engine), while exposing a simple interface to it.

Middleware network layer

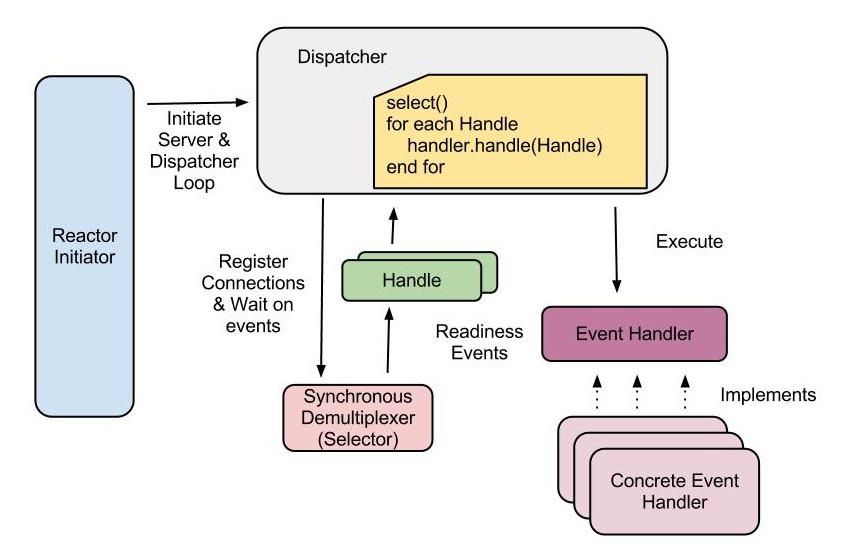

Because we want to exchange messages with other nodes (e.g., the broker and neighboring workers) while the simulation is running, we spawn a separate thread (the network thread) that takes care of all communication. Using the ZMQPP Reactor class, this thread waits until input is available on any of the registered sockets (or file descriptors). As a result, the network thread wakes up only when there is communication to handle, without wasting valuable CPU cycles on busy waiting. The reactor pattern is summarized in the following diagram. The diagram is taken from here, where you can also find more information about the pattern itself and some of its extensions.
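To illustrate what the reactor does for the network thread, here is a minimal reactor written from scratch. To keep it self-contained, it replaces the ZMQPP reactor (which polls ZeroMQ sockets) with POSIX `poll()` over plain file descriptors, so it only builds on Unix-like systems. `poll()` plays the role of the Synchronous Event Demultiplexer. The calling thread sleeps until a registered descriptor is readable or the timeout expires, and then the matching handler runs. The `Reactor` class and its methods are hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <utility>
#include <vector>

#include <poll.h>
#include <unistd.h>

// Minimal, hypothetical reactor over POSIX file descriptors. poll() acts as
// the Synchronous Event Demultiplexer; the registered callbacks are the event
// handlers.
class Reactor {
 public:
  using Handler = std::function<void(int fd)>;

  // Register a handler to run whenever `fd` has input to read.
  void Add(int fd, Handler handler) { handlers_[fd] = std::move(handler); }
  void Remove(int fd) { handlers_.erase(fd); }

  // Sleep until at least one registered descriptor is readable or timeout_ms
  // expires (a negative value waits forever), then run the handler of every
  // ready descriptor. Returns how many handlers were run.
  int PollOnce(int timeout_ms) {
    std::vector<pollfd> fds;
    for (const auto& entry : handlers_) {
      fds.push_back(pollfd{entry.first, POLLIN, 0});
    }
    if (::poll(fds.data(), fds.size(), timeout_ms) <= 0) {
      return 0;  // timeout or error: nothing to dispatch
    }
    int dispatched = 0;
    for (const pollfd& p : fds) {
      if ((p.revents & POLLIN) == 0) continue;
      auto it = handlers_.find(p.fd);
      if (it == handlers_.end()) continue;  // removed by an earlier handler
      Handler handler = it->second;  // copy, so the handler may Remove() itself
      handler(p.fd);
      ++dispatched;
    }
    return dispatched;
  }

 private:
  std::map<int, Handler> handlers_;  // fd -> event handler
};
```

A network thread would simply call `PollOnce(-1)` in a loop until it is asked to shut down. A negative timeout makes `poll()` block indefinitely, so the thread consumes no CPU while idle.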

Using the reactor class, we first register the ZeroMQ sockets responsible for communicating with the broker and with the neighboring workers. We also register a PAIR-PAIR ZeroMQ socket pair, which acts as a pipe between the application thread and the network thread. The application uses this pipe to signal the network thread whenever it wants to send a message over the network. Note that we do not write the message itself into the pipe, as this would be expensive for large messages (e.g., hundreds of megabytes). Instead, since both threads share the same address space, we pass only a unique_ptr to the message. The network thread then runs a loop (the Dispatcher) that handles incoming requests. Internally, the ZMQPP Reactor class takes care of selecting the ready sockets (the Synchronous Event Demultiplexer) and executing the correct Event Handler.
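The idea of passing a pointer instead of the message can be sketched as follows. The prototype uses a ZeroMQ PAIR-PAIR pipe; to avoid the library dependency, this sketch uses a POSIX `pipe()` instead. `Message`, `SendToNetworkThread`, and `ReceiveFromAppThread` are hypothetical names. Only the pointer value crosses the pipe, and ownership moves from the application thread's `unique_ptr` to the network thread's, so the payload is never copied.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

#include <unistd.h>

// Hypothetical message type; the payload may be hundreds of megabytes.
struct Message {
  std::string destination;
  std::vector<char> payload;
};

// Application thread: hand a message to the network thread. Only the pointer
// value is written to the pipe (writes of at most PIPE_BUF bytes are atomic,
// so it is never split); the payload itself is never copied.
bool SendToNetworkThread(int pipe_write_fd, std::unique_ptr<Message> msg) {
  Message* raw = msg.get();
  if (::write(pipe_write_fd, &raw, sizeof raw) !=
      static_cast<ssize_t>(sizeof raw)) {
    return false;  // msg still owns the message and frees it here
  }
  (void)msg.release();  // ownership now belongs to the network thread
  return true;
}

// Network thread: read the pointer back and take ownership of the message.
std::unique_ptr<Message> ReceiveFromAppThread(int pipe_read_fd) {
  Message* raw = nullptr;
  if (::read(pipe_read_fd, &raw, sizeof raw) !=
      static_cast<ssize_t>(sizeof raw)) {
    return nullptr;
  }
  return std::unique_ptr<Message>(raw);
}
```

In the real setup the two functions run on different threads. The network thread's end of the pipe is registered with the reactor, so the thread wakes up exactly when the application has something to send.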

To further simplify the middleware layer, we define an interface (the Event Handler) for the classes that handle the actual communication with the broker and the neighboring workers. This interface also encapsulates the ZeroMQ socket object (the Handle) itself. Concretely, we define a Communicator interface, with BrokerCommunicator and WorkerCommunicator as its concrete implementations (Concrete Event Handlers). The reactor can now simply call the interface's Handle method, ignoring all internal details. We now have a flexible, modular, and efficient prototype that is ready to be tested!
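The Communicator hierarchy maps directly onto the reactor's Event Handler roles. The sketch below keeps the names from the text (`Communicator`, `BrokerCommunicator`, `WorkerCommunicator`, `Handle`), but everything else is an assumption. This includes the string-based `Handle` signature, the message contents, and the plain endpoint string that stands in for the encapsulated ZeroMQ socket. The hypothetical `Dispatch` function plays the reactor's part and only ever sees the interface.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Event Handler interface. In the prototype each communicator wraps its ZeroMQ
// socket (the Handle); here a plain endpoint string stands in for it.
class Communicator {
 public:
  explicit Communicator(std::string endpoint) : endpoint_(std::move(endpoint)) {}
  virtual ~Communicator() = default;

  // Called by the reactor when input arrives; returns the reply to send back
  // (empty if none). The message format here is purely illustrative.
  virtual std::string Handle(const std::string& message) = 0;

  const std::string& Endpoint() const { return endpoint_; }

 private:
  std::string endpoint_;
};

// Concrete Event Handler for the link to the broker (hypothetical behavior).
class BrokerCommunicator : public Communicator {
 public:
  using Communicator::Communicator;
  std::string Handle(const std::string& message) override {
    // Answer heartbeats so the broker keeps considering this worker alive.
    if (message == "HEARTBEAT") return "HEARTBEAT";
    return "";  // other broker commands would be processed here
  }
};

// Concrete Event Handler for a neighbor link (hypothetical behavior).
class WorkerCommunicator : public Communicator {
 public:
  using Communicator::Communicator;
  std::string Handle(const std::string& message) override {
    last_halo_ = message;  // hand the neighbor's halo region to the simulation
    return "ACK";
  }
  const std::string& LastHalo() const { return last_halo_; }

 private:
  std::string last_halo_;
};

// Reactor side: look up the handler registered under `key` and call Handle,
// without knowing which concrete communicator it is.
std::string Dispatch(std::map<std::string, std::unique_ptr<Communicator>>& registry,
                     const std::string& key, const std::string& message) {
  return registry.at(key)->Handle(message);
}
```

With this design, supporting a new kind of peer only requires one more `Communicator` subclass; the dispatch loop stays unchanged.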

You can find the implementation of the distributed runtime prototype on GitHub.

Thanks for reading!