Introduction

Persistent memory enables persistence at cache-line granularity, whereas traditional block storage persists data at block granularity. In some cases, however, legacy software may need to access remote persistent memory using block semantics. This is not a primary use case for persistent memory, but it can be useful for presenting a small portion of a larger persistent memory pool over a network fabric. This article describes how the open source Storage Performance Development Kit (SPDK) integrates with the Persistent Memory Development Kit (PMDK) to enable low-latency remote access to persistent memory with traditional block semantics using NVM Express* (NVMe*) over Fabrics (NVMe-oF).

Persistent Memory Development Kit Support for Block Storage

A key aspect of block semantics is guaranteeing write atomicity. When a block is written and a power failure occurs, we want to ensure that either all of the data or none of the data is written. This is critical for writing correct storage software such as write-ahead logs. The PMDK libpmemblk library provides such a guarantee for implementing block storage on top of persistent memory. Libpmemblk utilizes a block translation table (BTT), which behaves similarly to a flash translation layer (FTL) found in modern solid-state drives (SSDs). The BTT acts as an indirection table, enabling a separation of copying user data to a block-sized region of persistent memory from the mapping of that region to a logical block address.
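The copy-then-remap idea can be sketched with ordinary files. This is a loose analogy under stated assumptions, not libpmemblk's actual on-media format: the scratch directory, the blocks/ and map/ layout, and the file names are all hypothetical, and an atomic rename stands in for the BTT's atomic map update.

```shell
#!/bin/sh
# Toy model of BTT-style indirection using plain files (an analogy only;
# the directory layout and names here are hypothetical).
set -eu
pool=$(mktemp -d)
mkdir "$pool/blocks" "$pool/map"

# Logical block 0 initially maps to physical block 0.
printf 'old data' > "$pool/blocks/0"
printf '0' > "$pool/map/lba0"

# Step 1: copy the new data into a free physical block (block 1).
# A failure here leaves the map untouched, so readers still see 'old data'.
printf 'new data' > "$pool/blocks/1"

# Step 2: switch the mapping in a single step. Within one file system,
# mv uses rename(2), which replaces the map entry atomically.
printf '1' > "$pool/map/lba0.tmp"
mv "$pool/map/lba0.tmp" "$pool/map/lba0"

# Read logical block 0 through the map.
phys=$(cat "$pool/map/lba0")
result=$(cat "$pool/blocks/$phys")
echo "$result"   # prints "new data"
rm -rf "$pool"
```

At every point in this sketch, a reader following the map sees either the complete old block or the complete new block, which is the property block-based software relies on.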

Storage Performance Development Kit

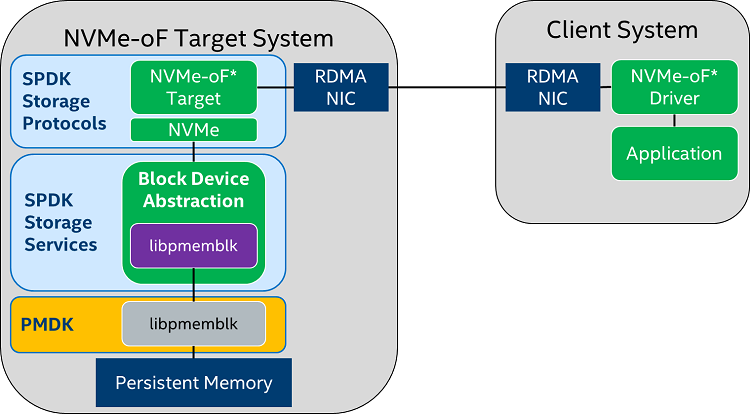

Next, how do we present this block storage over a network fabric? NVMe-oF is a popular answer. NVMe-oF is designed for modern multicore architectures, enabling multiple queues for parallel access, and using remote direct memory access (RDMA) protocols to reduce software overhead and minimize latency.

Enter the SPDK. It provides a set of tools, libraries, and applications for creating high-performance, scalable, user-mode storage applications. One of SPDK's applications is a poll-mode NVMe-oF target. SPDK provides a block device layer called bdev, which offers a generic interface to a heterogeneous set of block devices that are created by bdev modules. Examples of SPDK bdev modules include:

- NVMe—for accessing either local or remote attached storage using the NVMe protocol

- Malloc—for accessing DRAM as a RAM disk

- Ceph RBD—for accessing Ceph* RADOS block devices

- PMDK—for accessing PMDK libpmemblk pools

SPDK and PMDK Integration

The SPDK PMEM bdev driver uses the pmemblk pool as the target for block input/output (I/O) operations.

Here we see the block device abstraction, which presents the PMDK pool as a block device that can be served as an NVMe-oF namespace over the network fabric. The client system can then access this storage remotely using the NVMe-oF protocol with any NVMe-oF compliant driver.
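To make "block semantics" concrete, the following sketch reads and writes whole blocks addressed by logical block number. It assumes a regular temporary file as a stand-in for a block device, so it runs without any persistent memory or NVMe-oF setup; the variable names and the 8-block size are illustrative only.

```shell
#!/bin/sh
# Block-addressed I/O against a stand-in "device" (a regular file).
set -eu
bs=4096
dev=$(mktemp)   # stand-in for a block device; not a real namespace
blk=$(mktemp)   # staging file holding exactly one block

# Create an 8-block device filled with zeros.
dd if=/dev/zero of="$dev" bs="$bs" count=8 2>/dev/null

# Build one full block: the payload padded with NULs to the block size.
printf 'hello' | dd of="$blk" bs="$bs" conv=sync 2>/dev/null

# Write that block to logical block 3 without truncating the device.
dd if="$blk" of="$dev" bs="$bs" seek=3 conv=notrunc 2>/dev/null

# Read logical block 3 back and strip the NUL padding for display.
readback=$(dd if="$dev" bs="$bs" skip=3 count=1 2>/dev/null | tr -d '\0')
size=$(wc -c < "$dev")
echo "$readback"   # prints "hello"
rm -f "$dev" "$blk"
```

Every transfer here is a multiple of the block size and is addressed by block number rather than byte offset, which is the access pattern a bdev presents to its clients.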

Configuration

Let's walk through how to configure an SPDK NVMe-oF target with libpmemblk-based namespaces.

First, we need to configure the target system. These instructions assume that you are already familiar with PMDK and have installed PMDK on the target system. If this is not the case, instructions for installing PMDK can be found on the Intel® Developer Zone at Getting Started with Persistent Memory. We also assume that you are familiar with RDMA and have RDMA interfaces configured on both the target and client systems.

Start by building SPDK on the target system. See the instructions for downloading the source code, installing prerequisite packages, and building SPDK. To enable PMDK with SPDK, you must pass --with-pmdk to the SPDK configure script.

Now we can start the NVMe-oF target:

cd <spdk root directory>

app/nvmf_tgt/nvmf_tgt

The NVMe-oF target should now be running. In a separate terminal window, we will use the SPDK RPC interface to configure a libpmemblk SPDK block device (bdev). Later, we will attach this bdev to an NVMe-oF namespace.

This example creates the backing storage in /tmp, but this can be changed to any directory in a persistent memory-enabled file system. The bdev will be 8192 blocks, with a block size of 4096, for a total of 32 MiB (mebibytes). The name of the bdev will be pmdk0.
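As a quick check of the sizing, the arithmetic can be reproduced in the shell. The variable names below are illustrative only and are not part of SPDK.

```shell
#!/bin/sh
# Pool size = number of blocks x block size.
num_blocks=8192
block_size=4096
total_bytes=$((num_blocks * block_size))
total_mib=$((total_bytes / 1024 / 1024))
echo "${total_bytes} bytes = ${total_mib} MiB"   # prints "33554432 bytes = 32 MiB"
```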

cd <spdk root directory>

scripts/rpc.py create_pmem_pool /tmp/spdk_pool 8192 4096

scripts/rpc.py construct_pmem_bdev /tmp/spdk_pool -n pmdk0

Now we can create the NVMe-oF subsystem and attach the pmdk0 bdev as a namespace:

scripts/rpc.py construct_nvmf_subsystem \
nqn.2016-06.io.spdk:cnode1 '' '' -a -s SPDK0001

scripts/rpc.py nvmf_subsystem_add_listener \
nqn.2016-06.io.spdk:cnode1 -t RDMA \
-a 192.168.10.1 -s 4420

scripts/rpc.py nvmf_subsystem_add_ns \
nqn.2016-06.io.spdk:cnode1 pmdk0

The client system should now be able to connect:

nvme connect -t rdma -n nqn.2016-06.io.spdk:cnode1 \
-a 192.168.10.1 -s 4420

Summary

Using block protocols over a network fabric can quickly enable legacy applications to take advantage of persistent memory. NVMe-oF is well suited to this type of persistent memory access. The SPDK NVMe-oF target provides an easy-to-use PMDK plugin to enable persistent memory access over NVMe-oF, and its user-space, poll-mode architecture reduces access latency compared with traditional interrupt-driven target applications.

About the Author

Jim Harris is a principal software engineer in Intel's Data Center Group. His primary responsibility is architecture and design of the Storage Performance Development Kit (SPDK). Jim has been at Intel since 2001, serving in a wide variety of storage software related roles. Jim has a B.S. and M.S. in Computer Science from Case Western Reserve University.