Introduction

Image matting, the task of separating a foreground object from its background, is a computer vision problem that becomes harder when the foreground and background have similar colors. Researchers from the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign and engineers from Adobe* Research introduced a deep image matting algorithm at CVPR'17.

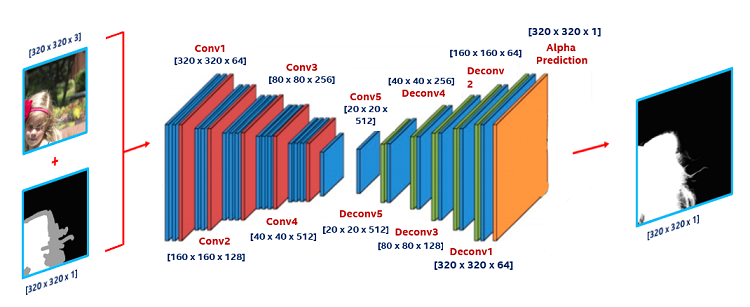

The algorithm (Figure 1) uses deep learning to separate the foreground from the background, but inference takes a long time on CPUs, so the algorithm is not yet ready for large-scale deployment on local machines.

Figure 1. Inference per tile algorithm

Bringing deep learning inference to client devices is a growing trend because it helps meet latency requirements, reduces cost, removes the dependence on network bandwidth (inference can run offline), and gives consumers greater trust and privacy.

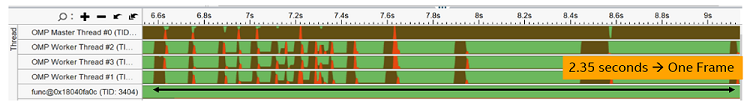

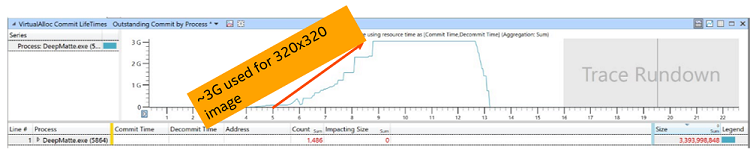

We ran deep image matting on a 320x320 image and measured approximately 2.35 seconds for one inference, which is not acceptable for client inference. Figure 2 shows the total time taken by the deep image matting algorithm, measured with Intel® VTune™ Amplifier on a 7th generation Intel® Core™ i7 processor with integrated graphics. Figure 3 shows the memory utilization of the algorithm, captured with Windows* Performance Analyzer.

Figure 2. Deep image matting on the CPU using Intel® Math Kernel Library (Intel® MKL) BLAS: 2.35 seconds

Figure 3. Deep image matting memory utilization
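The 2.35-second figure above was collected with Intel VTune Amplifier. For a quick sanity check outside a profiler, per-inference latency can also be approximated with a wall-clock harness. The following is a minimal sketch, not the measurement method used in this article: `measure_latency` and `fake_inference` are hypothetical names, and `fake_inference` is only a placeholder for the real model call.

```python
import statistics
import time
from typing import Callable, List


def measure_latency(run_inference: Callable[[], object],
                    warmup: int = 2,
                    repeats: int = 10) -> List[float]:
    """Return the wall-clock time in seconds of each timed call."""
    # Untimed warm-up calls absorb one-time costs such as model loading.
    for _ in range(warmup):
        run_inference()

    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_inference()
        samples.append(time.perf_counter() - start)
    return samples


def fake_inference() -> None:
    """Hypothetical stand-in for the real model call; sleeps about 10 ms."""
    time.sleep(0.01)


samples = measure_latency(fake_inference, warmup=1, repeats=5)
print(f"median latency: {statistics.median(samples):.4f} s")
```

Reporting the median rather than the mean keeps a single slow run, for example one interrupted by another process, from skewing the result.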

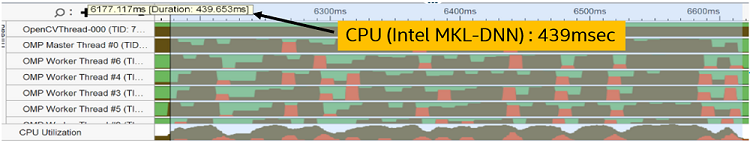

Using the Intel® Distribution of OpenVINO™ toolkit and Intel® Math Kernel Library for Deep Neural Networks (Intel® MKL-DNN), Intel reduced the compute time of the deep image matting algorithm by 5.3 times and reduced its memory consumption by 3 times. Figure 4 shows the total time to complete one iteration of the deep image matting algorithm, measured with Intel VTune Amplifier.

Figure 4. Deep image matting using Intel® Distribution of OpenVINO™ toolkit
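To put the reported factors in perspective, the arithmetic can be worked through directly. The sketch below uses only the numbers stated in this article (2.35 seconds baseline, 5.3 times speedup, 3 times memory reduction). The resulting latency of roughly 0.44 seconds is an estimate implied by those factors, not a separately measured value, and the function names are illustrative.

```python
# Figures reported in this article.
BASELINE_SECONDS = 2.35   # one inference on a 320x320 image, Intel MKL BLAS path
SPEEDUP = 5.3             # reported compute-time improvement factor
MEMORY_REDUCTION = 3.0    # reported memory-consumption improvement factor


def implied_latency(baseline_seconds: float, speedup: float) -> float:
    """Latency implied by dividing the baseline time by the speedup factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_seconds / speedup


def relative_footprint(reduction: float) -> float:
    """Fraction of the baseline memory left after an N-times reduction."""
    if reduction <= 0:
        raise ValueError("reduction must be positive")
    return 1.0 / reduction


optimized = implied_latency(BASELINE_SECONDS, SPEEDUP)
print(f"implied optimized latency: {optimized:.2f} s")
print(f"implied throughput: {1.0 / optimized:.2f} inferences/s")
print(f"memory relative to baseline: {relative_footprint(MEMORY_REDUCTION):.0%}")
```

Under these assumptions, one inference drops from 2.35 seconds to about 0.44 seconds, and memory use falls to about one third of the baseline.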

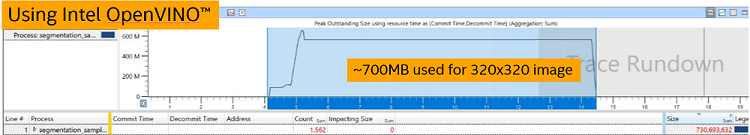

Figure 5 shows the memory utilization of the deep image matting algorithm, captured with Windows Performance Analyzer.

Figure 5. Deep image matting memory utilization using Intel® Distribution of OpenVINO™ toolkit

The Intel Distribution of OpenVINO toolkit helps algorithms use an optimized inference pipeline on Intel hardware to deliver the best performance.

Conclusion

Bringing intelligence to clients helps developers reduce cost and meet latency and security requirements. Used with the Intel MKL-DNN library, the Intel Distribution of OpenVINO toolkit reduced the compute time of the deep image matting algorithm by 5.3 times through optimized deep learning libraries. In addition, the toolkit reduced memory utilization for deep learning inference by 3 times.

References

- Deep Image Matting

- Intel Distribution of OpenVINO toolkit

- Intel® VTune™ Amplifier

- Intel® MKL-DNN

- Windows* Performance Analyzer

- Bringing Deep Learning-based Applications to Client Platform

System configuration

- 7th Generation Intel® Core™ i7 Processor (4 Cores, 8 Threads) with Integrated Graphics

- Power Limit (TDP): 15 W

- Memory: 8 GB RAM

- Operating System: Windows® 10