Overview

ModelFarm* is a low-code AI development platform for edge devices that works with Intel® Smart Edge Open. Its features include a low entry threshold (fully UI-driven operation), low data requirements (a rich set of pre-trained models), high efficiency (semi-automatic labeling), high performance (model pruning and compression), and high precision (Quantization Aware Training and Knowledge Distillation).

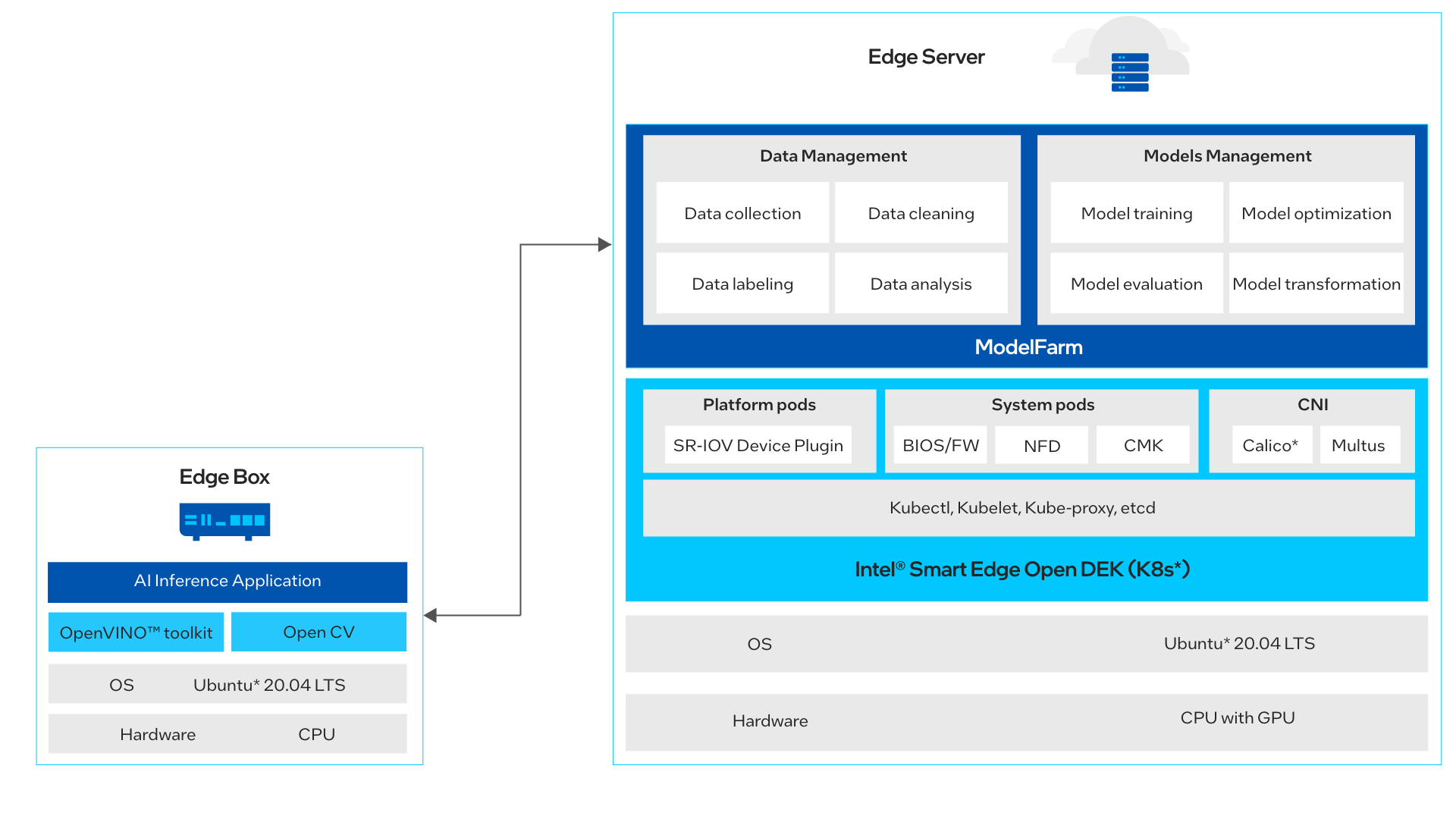

ModelFarm* includes two functional modules: data management and model management.

- Data management includes data cleaning, data labeling, and data analysis.

- Model management includes model training, model optimization, model evaluation, model conversion (for the target runtime platform), and algorithm SDK export.

Deploy the exported AI algorithm SDK on your edge device to run AI inference for your use case. Use cases include:

- Object detection, such as helmet detection, face mask detection, area intrusion detection, and defect inspection.

- Behavior analysis, such as a Driver Monitoring System (DMS).

- Environment monitoring, such as "bright kitchen and stove" programs, in which restaurants use transparent kitchen video and other methods to show the public how food is prepared.

To run the reference implementation, you will need to first download and install the Intel® Smart Edge Open Developer Experience Kit.

- Time to Complete: 20 minutes

- Programming Language: C, C++

Target System Requirements

Edge Server:

- One of the following processors:

  - Intel® Xeon® Scalable processor.

  - Intel® Xeon® D processor.

- At least 128 GB RAM.

- At least 4 TB hard drive.

- GPU: NVIDIA* RTX 3090, V100, or A100.

- Ubuntu* 20.04 LTS Server.

- An Internet connection.

AI Box:

- 6th generation or higher Intel® Core™ processors.

- At least 4 GB RAM.

- At least 10 GB hard drive.

- Ubuntu* 18.04 / Ubuntu* 20.04.

- An Internet connection.

- OpenVINO™ toolkit

- OpenCV

How It Works

Upload a dataset, captured from a camera or taken from a local MP4 video file, to the ModelFarm platform and train the model.

The following algorithms are supported for training:

- Object detection

- Image classification

- Image segmentation

- Face key point detection

- Human body key point detection

Deploy the SDK of the trained model to the edge device. The camera streams real-time video to the edge device, where the edge AI application runs inference on it. The inference results are sent to your supervision platform or visualization monitor in real time.
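The SDK's internal threading design is not described in this guide. As a hypothetical sketch of one common pattern for a real-time loop like this, a small bounded queue can sit between a camera-capture thread and an inference thread, dropping the oldest frame when full so inference keeps pace with live video instead of building a backlog. The `LatestFrameQueue` class and its methods are illustrative names, not part of the ModelFarm SDK.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <optional>
#include <utility>

// Hypothetical bounded queue between a camera-capture thread (producer) and an
// inference thread (consumer). When full, push() discards the oldest frame so
// inference always works on recent video instead of a growing backlog.
template <typename Frame>
class LatestFrameQueue {
public:
    explicit LatestFrameQueue(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    void push(Frame frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (queue_.size() == capacity_) {
                queue_.pop_front();  // drop the stalest frame
            }
            queue_.push_back(std::move(frame));
        }
        ready_.notify_one();
    }

    // Blocks until a frame is available. Returns std::nullopt once the queue
    // has been closed and drained, e.g. after the video stream ends.
    std::optional<Frame> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !queue_.empty() || closed_; });
        if (queue_.empty()) {
            return std::nullopt;
        }
        Frame frame = std::move(queue_.front());
        queue_.pop_front();
        return frame;
    }

    // Wakes any waiting consumer; frames already queued can still be popped.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

private:
    std::size_t capacity_;
    std::deque<Frame> queue_;
    bool closed_ = false;
    std::mutex mutex_;
    std::condition_variable ready_;
};
```

A capture thread would call `push()` for each decoded frame and `close()` when the stream ends, while the inference thread loops on `pop()` until it returns `std::nullopt`. Dropping frames trades completeness for latency, which suits live monitoring but not offline analysis of every frame.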

Get Started

Edge Server Side Installation

- Download and install the Intel® Smart Edge Open Developer Experience Kit.

- After you install the Intel® Smart Edge Open Developer Experience Kit, contact ThunderSoft to install ModelFarm, which is licensed software.

- This reference implementation provides a server with ModelFarm already installed.

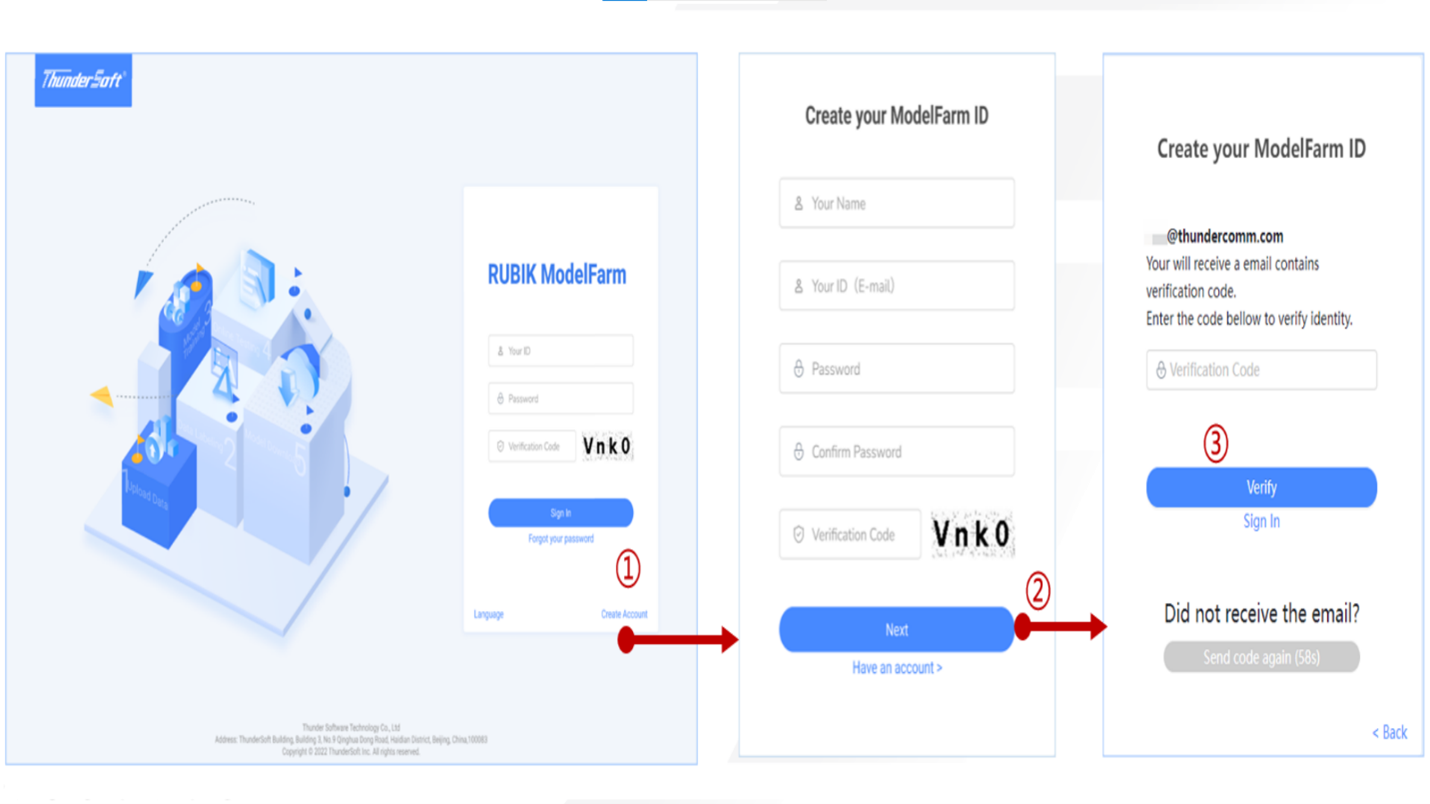

Register Account

Start on the ModelFarm login page.

- Select the Create Account button to enter the registration page.

- Enter your email address (used as your ID) and basic information, then select Next. A verification code is sent to the email address you registered.

- Enter the verification code from the email to complete verification. If you do not receive a code, you can request a new one after 60 seconds.

Accept Invitation to Register

- Select the Join button in the invitation email to open the invitation registration page.

- Enter the basic information and select the Create button.

- Accept the agreement and select the Join button to open the Welcome page.

- If you already have an account, select the Sign in button to log in directly.

- If you have already joined the organization through this email and select Join again, a notification is displayed.

Create Dataset

- Select the Create Dataset button to create a new dataset.

- Select the dataset card to view its version details.

- Select the Create New Version button to create a new data version, either by inheriting an existing version or by starting from scratch.

- If the option to inherit a historical version is turned off, the result is a new, independent dataset version (no earlier version is inherited).

Upload Data and Label

- Select the Local Data Upload button to upload local data.

- Select the Label button to open the labeling page.

Create a New Model

- Select the Create a New Model button to create a new model card.

- Enter the basic information, then select Next to choose a pre-trained model.

Train the Model

- Select the Create button to create the model training task.

- Select a version to inherit.

- Select the dataset version to use for model training.

Create a Quantization Aware Training (QAT) Task

- Select the QAT button to create a quantization aware training task.

- After configuring the parameters, select the dataset.
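Quantization aware training simulates low-precision inference during training by passing weights and activations through a quantize-then-dequantize ("fake quantization") step, so the model learns to tolerate rounding error before it is converted. ModelFarm's exact scheme (bit width, per-tensor or per-channel, symmetric or asymmetric) is not documented in this guide; the sketch below assumes per-tensor asymmetric INT8, and the function names are hypothetical. It requires C++17 for `std::clamp`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical per-tensor, asymmetric INT8 range.
constexpr int kQMin = -128;
constexpr int kQMax = 127;

// Quantize x to an INT8 code and immediately dequantize it. During QAT the
// forward pass uses this rounded value, so the loss reflects INT8 error.
float fake_quant_int8(float x, float scale, int zero_point) {
    assert(scale > 0.0f);
    int q = static_cast<int>(std::lround(x / scale)) + zero_point;
    q = std::clamp(q, kQMin, kQMax);  // saturate values outside the INT8 range
    return static_cast<float>(q - zero_point) * scale;
}

// Derive scale and zero point from an observed [min_val, max_val] range,
// widened to include 0 so that 0.0f is exactly representable.
void choose_qparams(float min_val, float max_val, float& scale, int& zero_point) {
    min_val = std::min(min_val, 0.0f);
    max_val = std::max(max_val, 0.0f);
    scale = (max_val - min_val) / static_cast<float>(kQMax - kQMin);
    if (scale == 0.0f) {
        scale = 1.0f;  // degenerate all-zero range
    }
    zero_point = static_cast<int>(std::lround(kQMin - min_val / scale));
    zero_point = std::clamp(zero_point, kQMin, kQMax);
}
```

In training frameworks, the rounding step is usually given a straight-through gradient so backpropagation can pass through it; that part is omitted here.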

Create Knowledge Distillation Task

- Select the KD button to create a knowledge distillation task.

- After configuring the parameters, select the dataset.

- Create and train a teacher model.

- Once training has produced the teacher model, you can start KD training based on it.
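Knowledge distillation trains a smaller student model to match the softened output distribution of the teacher. ModelFarm's loss function is not specified in this guide. As a minimal sketch, the widely used soft-target formulation divides both models' logits by a temperature T, applies softmax, and measures the KL divergence from the teacher distribution to the student distribution, scaled by T² so its gradients stay comparable in magnitude as T changes. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Softmax of logits / T. Subtracting the max logit first avoids overflow in exp().
std::vector<double> softmax_with_temperature(const std::vector<double>& logits, double T) {
    assert(!logits.empty() && T > 0.0);
    const double max_logit = *std::max_element(logits.begin(), logits.end());
    std::vector<double> probs(logits.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp((logits[i] - max_logit) / T);
        sum += probs[i];
    }
    for (double& p : probs) {
        p /= sum;
    }
    return probs;
}

// Soft-target distillation loss: T^2 * KL(p_teacher || p_student), where both
// distributions are computed from temperature-softened logits.
double distillation_loss(const std::vector<double>& student_logits,
                         const std::vector<double>& teacher_logits, double T) {
    assert(student_logits.size() == teacher_logits.size());
    const std::vector<double> p_teacher = softmax_with_temperature(teacher_logits, T);
    const std::vector<double> p_student = softmax_with_temperature(student_logits, T);
    double kl = 0.0;
    for (std::size_t i = 0; i < p_teacher.size(); ++i) {
        if (p_teacher[i] > 0.0) {  // 0 * log(0 / q) contributes nothing
            kl += p_teacher[i] * std::log(p_teacher[i] / p_student[i]);
        }
    }
    return T * T * kl;
}
```

In practice this term is usually combined with the ordinary cross-entropy loss on the ground-truth labels, for example as a weighted sum.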

Create Validation Task

- Select the Validation button to create a validation task.

- Enter the basic information for the validation task and select the dataset.

- Compare the ground truth with the inference results.
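For object detection, comparing a predicted box with its ground-truth box is commonly done with intersection over union (IoU): the overlap area divided by the area covered by either box. Which metrics ModelFarm reports in its validation view is not stated in this guide; this is a minimal sketch assuming boxes given as pixel corner coordinates, and the `Box` type and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical axis-aligned box in pixels: (x1, y1) top-left, (x2, y2) bottom-right.
struct Box {
    float x1, y1, x2, y2;
};

float box_area(const Box& b) {
    assert(b.x2 >= b.x1 && b.y2 >= b.y1);
    return (b.x2 - b.x1) * (b.y2 - b.y1);
}

// Intersection over union: overlap area divided by the union of both areas.
float iou(const Box& a, const Box& b) {
    const float ix1 = std::max(a.x1, b.x1);
    const float iy1 = std::max(a.y1, b.y1);
    const float ix2 = std::min(a.x2, b.x2);
    const float iy2 = std::min(a.y2, b.y2);
    const float inter = std::max(0.0f, ix2 - ix1) * std::max(0.0f, iy2 - iy1);
    const float uni = box_area(a) + box_area(b) - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Treat a prediction as matching the ground truth when IoU reaches the threshold.
bool is_match(const Box& pred, const Box& truth, float threshold = 0.5f) {
    return iou(pred, truth) >= threshold;
}
```

Detection benchmarks often count a prediction as correct when its IoU with a ground-truth box of the same class is at least 0.5; stricter evaluations average results over several thresholds.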

Complete a Test

- Select images to test and view the test results.

- Select a video file to test and view the test results.

Download

- Select the Download button.

- Select Intel® OpenVINO™ and download the model.

Edge AI Box Demo

You can run an edge application demo on the Edge AI Box. For a real use case, you can train an object detection model in ModelFarm and download it to the Edge AI Box.

- The demo is delivered as the ts_ai_demo.deb package, which already includes a trained model and dataset. Download it from Configure & Download.

Install the Reference Implementation

Select Configure & Download to download the reference implementation, then follow the steps below to install it.

- Open a new terminal and move the downloaded ts_ai_demo.deb package to the /home/<non-root-user> directory:

mv <path-of-downloaded-directory>/ts_ai_demo.deb /home/<non-root-user>

- Go to the home directory of the non-root user and install the package:

cd /home/<non-root-user> && sudo dpkg -i ts_ai_demo.deb

- Go to the /opt/ts/demo directory using the following command:

cd /opt/ts/demo

- Execute the command below to run the demo:

./run.sh

- You will see the following images during the demo.

How to Use the New Training Model (Optional)

First complete the Edge AI Box Demo steps to make sure the demo runs successfully. Then download the model you trained in ModelFarm to the Edge AI Box and run inference with it using the following steps.

- Download the trained model from ModelFarm and unzip the package, for example name3_OPENVINO_FP32_V2.0_L.zip. Its contents are extracted to the folder name3_OPENVINO_FP32_V2.0_L.

- Move the xxx.onnx and Readme.txt files from the unzipped folder to the /opt/ts/demo folder:

mv xxx.onnx Readme.txt /opt/ts/demo

- Go to /opt/ts/demo, copy Readme.txt to label.txt, and open label.txt for editing:

cd /opt/ts/demo && cp Readme.txt label.txt && vi label.txt

- Remove the header lines and the numeric IDs so that label.txt lists only the label names, one per line, as in the following example:

===================================================

Before:

// ******* Label Info ******* //

//***** ID ***** Label Name *****//

1 banana

2 orange

3 apple

After:

banana

orange

apple

===================================================
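The Before-to-After edit of label.txt can also be scripted instead of done in vi. This is a minimal sketch, assuming Readme.txt contains only the label section in the layout shown (comment lines starting with //, then one ID and label name per line, in ID order); `extract_labels` and `write_labels` are hypothetical helper names.

```cpp
#include <cctype>
#include <fstream>
#include <sstream>
#include <string>
#include <vector>

// Turn the "Label Info" section of Readme.txt into label.txt entries:
// skip blank and comment lines (starting with "//"), and strip the leading
// numeric ID so that only the label name remains on each line.
std::vector<std::string> extract_labels(std::istream& in) {
    std::vector<std::string> labels;
    std::string line;
    while (std::getline(in, line)) {
        // Trim surrounding whitespace, including a Windows '\r'.
        const auto first = line.find_first_not_of(" \t\r");
        if (first == std::string::npos) continue;
        const auto last = line.find_last_not_of(" \t\r");
        line = line.substr(first, last - first + 1);
        if (line.rfind("//", 0) == 0) continue;  // header/comment line

        std::istringstream fields(line);
        std::string id, name;
        fields >> id;
        std::getline(fields >> std::ws, name);  // rest of the line is the name
        bool numeric_id = !id.empty();
        for (char c : id) {
            numeric_id = numeric_id && std::isdigit(static_cast<unsigned char>(c));
        }
        labels.push_back(numeric_id && !name.empty() ? name : line);
    }
    return labels;
}

// Write one label per line, in the same order as the IDs in Readme.txt.
bool write_labels(const std::string& path, const std::vector<std::string>& labels) {
    std::ofstream out(path);
    for (const auto& label : labels) {
        out << label << '\n';
    }
    return static_cast<bool>(out);
}
```

For example, pass an `std::ifstream` opened on /opt/ts/demo/Readme.txt to `extract_labels`, then call `write_labels("/opt/ts/demo/label.txt", labels)`. Label names that contain spaces are kept whole.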

- Edit /opt/ts/demo/config.json with the command vi /opt/ts/demo/config.json and make the following changes:

- In "ie-file":"1587724663042211840.onnx", replace 1587724663042211840.onnx with your xxx.onnx file name.

- In "label-path":"./fruit_list.txt", replace fruit_list.txt with your label file (label.txt).

- In "video-url":"fruits.mp4", replace fruits.mp4 with your local MP4 file name. The video resolution should be 1920 x 1080.

Note: The demo also supports RTSP video streams. To use one, replace fruits.mp4 with the RTSP stream address.
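The three config.json edits can also be scripted. This is a minimal sketch, assuming config.json stores these settings as flat string entries such as "ie-file":"1587724663042211840.onnx" with no escaped quotes inside the values; a JSON library would be more robust for anything beyond that. `set_string_value` and `update_demo_config` are hypothetical helpers.

```cpp
#include <cstddef>
#include <fstream>
#include <sstream>
#include <string>

// Replace the value of a "key":"value" string entry in JSON text.
// Returns false if the key, or a quoted value after it, is not found.
bool set_string_value(std::string& json, const std::string& key, const std::string& value) {
    const std::string quoted_key = "\"" + key + "\"";
    std::size_t pos = json.find(quoted_key);
    if (pos == std::string::npos) return false;
    pos = json.find(':', pos + quoted_key.size());
    if (pos == std::string::npos) return false;
    const std::size_t open = json.find('"', pos + 1);    // opening quote of the value
    if (open == std::string::npos) return false;
    const std::size_t close = json.find('"', open + 1);  // closing quote (no escapes assumed)
    if (close == std::string::npos) return false;
    json.replace(open + 1, close - open - 1, value);
    return true;
}

// Load config.json, update the model, label, and video settings, and write it back.
// Nothing is written if any of the three keys is missing.
bool update_demo_config(const std::string& path, const std::string& model_file,
                        const std::string& label_file, const std::string& video_url) {
    std::ifstream in(path);
    if (!in) return false;
    std::stringstream buffer;
    buffer << in.rdbuf();
    std::string json = buffer.str();
    in.close();
    if (!set_string_value(json, "ie-file", model_file) ||
        !set_string_value(json, "label-path", label_file) ||
        !set_string_value(json, "video-url", video_url)) {
        return false;
    }
    std::ofstream out(path);
    out << json;
    return static_cast<bool>(out);
}
```

For example, `update_demo_config("/opt/ts/demo/config.json", "xxx.onnx", "./label.txt", "my_video.mp4")` would point the demo at your model, your labels, and a local video (my_video.mp4 is a placeholder); an RTSP stream address can be passed as the last argument instead.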

After making these changes, run ./run.sh in the /opt/ts/demo folder to start the demo with your model.

Summary and Next Steps

You have learned how to use ModelFarm to generate an AI algorithm SDK and how to run AI inference on the Edge AI Box.

As a next step, train your own AI model with ModelFarm, download its SDK to the Edge AI Box, and run inference on your local video or RTSP video stream.


Troubleshooting

If the demo fails, check whether Ubuntu 20.04 is installed on your system, and install it if it is not.

Check that all the library files are present under the folder, and reinstall xxx.deb if needed.

Support Forum

If you're unable to resolve your issues, contact the Support Forum.