Overview

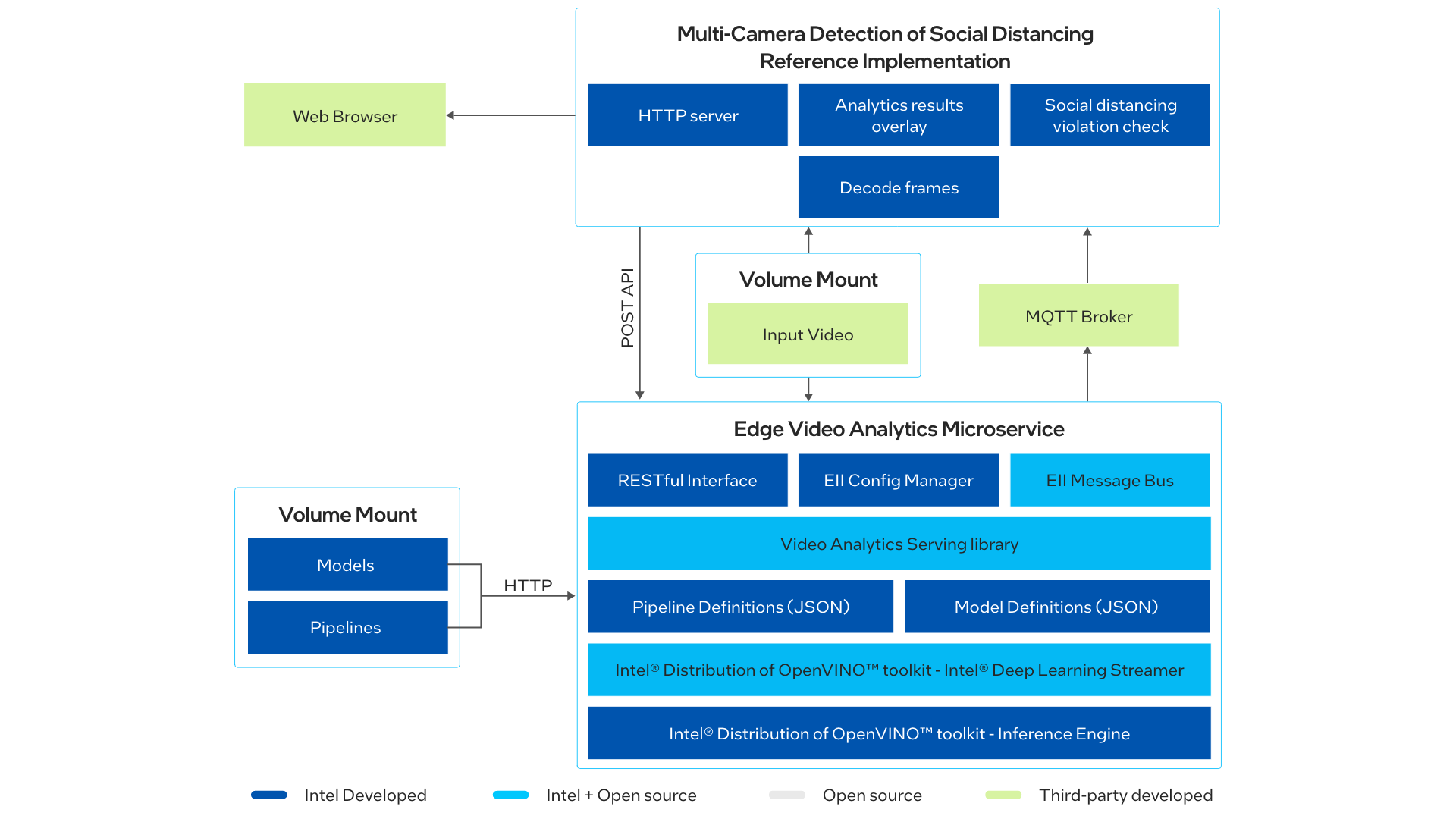

Create an end-to-end video analytics pipeline to detect people and calculate the social distance between people from multiple input video feeds. Multi-Camera Detection of Social Distancing demonstrates how to use the Video Analytics Microservice in an application and store the data to InfluxDB*. This data can be visualized on a Grafana* dashboard.

Select Configure & Download to download the reference implementation and the software listed below.

- Time to Complete: 45 minutes

- Programming Language: Python* 3.6

- Available Software: Video Analytics Microservice, Video Analytics Serving, Intel® Distribution of OpenVINO™ toolkit 2021 Release

Target System Requirements

- One of the following processors:

- 6th to 11th Generation Intel® Core™ processors

- 1st to 3rd generation of Intel® Xeon® Scalable processors

- Intel Atom® processor with Intel® Streaming SIMD Extensions 4.2 (Intel® SSE4.2)

- At least 8 GB RAM.

- At least 64 GB hard drive.

- An Internet connection.

- Ubuntu* 18.04.3 LTS Kernel 5.0†

- Ubuntu* 20.04 LTS Kernel 5.4†

Refer to OpenVINO™ Toolkit System Requirements for supported GPU and VPU processors.

† Use Kernel 5.8 for 11th generation Intel® Core™ processors.

How It Works

This reference implementation demonstrates how to use the Video Analytics Microservice in an application to build a social distancing detection use case. It consists of the pipeline and model config files, which are volume mounted into the Video Analytics Microservice Docker image, and the docker-compose.yml file for starting the containers. The results of the pipeline execution are routed to an MQTT broker and can be viewed from there. The inference results are also used by the vision algorithms in the mcss-eva Docker image for tasks such as population density detection and social distance calculation.

Container Engines and Orchestration

The package uses Docker* and Docker Compose for automated container management.

- Docker is a container framework widely used in enterprise environments. It allows applications and their dependencies to be packaged together and run as a self-contained unit.

- Docker Compose is a tool for defining and running multi-container Docker applications.

End-to-End Video Analytics

This multi-camera solution demonstrates an end-to-end video analytics application that detects people and calculates the social distance between them from multiple input video feeds. It uses the Video Analytics Microservice to ingest input videos and perform deep learning inference. Based on the inference output published on an MQTT topic, it then calculates the social distance violations and serves the inference results to a webserver.

The application performs the steps below:

- The video files for inference must be present in the resources folder.

- When the Video Analytics Microservice starts, the models, pipelines, and resources folders are mounted in the microservice. The REST APIs are available to interact with the microservice.

- The MCSS microservice creates and sends a POST request to the Video Analytics Microservice for starting the video analytics pipeline.

- On receiving the POST request, the Video Analytics Microservice starts an instance of the requested pipeline and publishes the inference results to the MQTT broker. The pipeline uses the person-detection-retail-0013 model from Open Model Zoo to detect people in the video streams.

- The MCSS microservice subscribes to the MQTT messages, receives the inference output metadata, and calculates the Euclidean distance between all the people.

- Based on these measurements, it checks whether any people are in violation, that is, less than N pixels apart.

- The input video frames, inference output results and social distancing violations are served to a webserver for viewing.
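The distance check in the steps above can be sketched as follows. This is a minimal illustration, not the code shipped in the mcss-eva image. It assumes each MQTT message is a JSON document with an "objects" list whose entries carry a pixel bounding box as "x", "y", "w", and "h" plus a "detection" confidence; that layout, the function names, the confidence cutoff, and the use of box centroids as the measuring points are all assumptions made for this example.

```python
import json
import math
from itertools import combinations


def centroids_from_message(payload, min_confidence=0.5):
    """Return pixel centroids (x, y) for the detections in one metadata message.

    Assumed message layout (hypothetical): {"objects": [{"x", "y", "w", "h",
    "detection": {"confidence": ...}}, ...]} with x, y as the top-left corner.
    """
    message = json.loads(payload)
    points = []
    for obj in message.get("objects", []):
        confidence = obj.get("detection", {}).get("confidence", 1.0)
        if confidence < min_confidence:
            continue  # skip weak detections
        points.append((obj["x"] + obj["w"] / 2.0, obj["y"] + obj["h"] / 2.0))
    return points


def find_violations(points, min_distance_px):
    """Return index pairs (i, j) whose Euclidean distance is below N pixels."""
    violations = []
    for (i, p), (j, q) in combinations(enumerate(points), 2):
        if math.hypot(p[0] - q[0], p[1] - q[1]) < min_distance_px:
            violations.append((i, j))
    return violations


# Hand-made sample message with three detections.
sample = json.dumps({"objects": [
    {"detection": {"confidence": 0.9}, "x": 100, "y": 200, "w": 40, "h": 100},
    {"detection": {"confidence": 0.8}, "x": 160, "y": 210, "w": 40, "h": 100},
    {"detection": {"confidence": 0.95}, "x": 900, "y": 220, "w": 40, "h": 100},
]})
points = centroids_from_message(sample)
print(find_violations(points, min_distance_px=100))  # prints [(0, 1)]
```

Because the threshold is expressed in pixels, the same value of N corresponds to different real-world distances depending on camera placement and resolution.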

Get Started

Step 1: Install the Reference Implementation

Select Configure & Download to download the reference implementation and then follow the steps below to install it.

- Open a new terminal, go to the downloaded folder and unzip the downloaded RI package:

unzip multi_camera_detection_of_social_distancing.zip

- Go to the multi_camera_detection_of_social_distancing directory:

cd multi_camera_detection_of_social_distancing

- Change permission of the executable edgesoftware file:

chmod 755 edgesoftware

- Run the commands below to install the Reference Implementation:

sudo groupadd docker

sudo usermod -aG docker $USER

newgrp docker

./edgesoftware install

NOTE: If you have issues with the image pull, try this command: sudo ./edgesoftware install

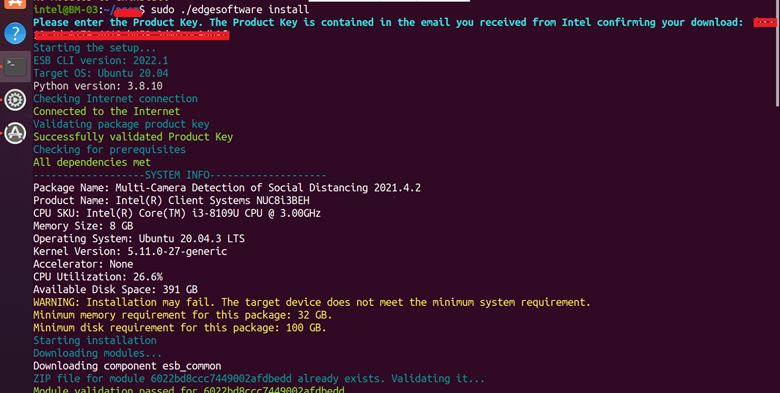

See Troubleshooting at the end of this document for details.

- During the installation, you will be prompted for the Product Key. The Product Key is contained in the email you received from Intel confirming your download.

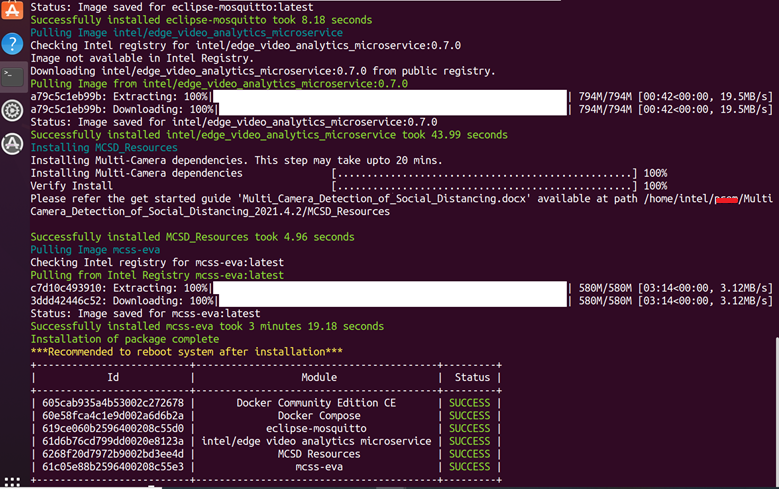

Figure 2: Product Key

- When the installation is complete, you see the message “Installation of package complete” and the installation status for each module.

Figure 3: Install Success

- Go to the working directory:

cd multi_camera_detection_of_social_distancing/MultiCamera_Detection_of_Social_Distancing_<version>/MCSD_Resources

NOTE: In the command above, <version> is the Package version downloaded.

Step 2: Download the Input Video

The application works best with input feeds from cameras placed at eye level.

Download sample video at 1280x720 resolution, rename the file by replacing the spaces with the _ character (for example, Pexels_Videos_2670.mp4), and place it in the following directory:

multi_camera_detection_of_social_distancing/MultiCamera_Detection_of_Social_Distancing_<version>/MCSD_Resources/resources

Where <version> is the package version selected while downloading.

(Data set subject to this license. The terms and conditions of the dataset license apply. Intel® does not grant any rights to the data files.)

To use multiple videos or any other video, download the video files and place them under the resources directory.
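If you add several videos, the renaming step can be scripted. The sketch below is one possible helper, not part of the package: the function names are hypothetical, and it assumes the videos are .mp4 files sitting directly in the resources directory.

```python
from pathlib import Path


def underscore_name(filename):
    """Replace every space in a file name with the _ character."""
    return filename.replace(" ", "_")


def rename_videos(resources_dir):
    """Rename .mp4 files in resources_dir so their names contain no spaces.

    Returns the list of new file names. Files already free of spaces are skipped.
    """
    renamed = []
    for path in sorted(Path(resources_dir).glob("*.mp4")):
        target = path.with_name(underscore_name(path.name))
        if target != path:
            path.rename(target)
            renamed.append(target.name)
    return renamed


print(underscore_name("Pexels Videos 2670.mp4"))  # prints Pexels_Videos_2670.mp4
```

To apply it, call rename_videos with the path to your resources directory.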

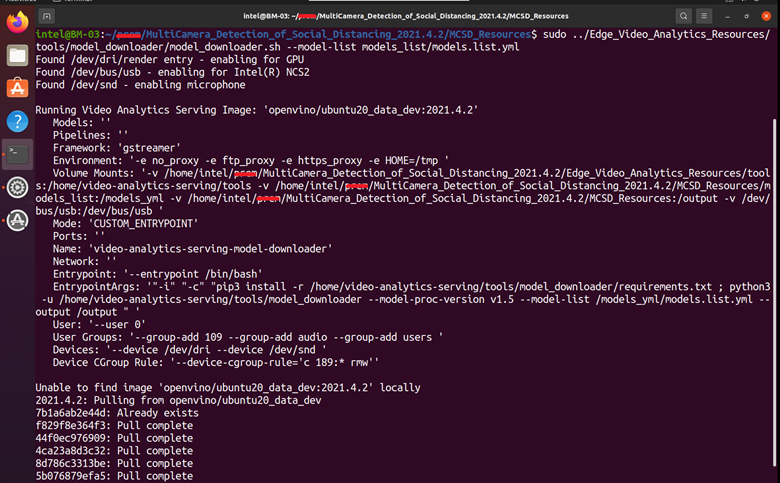

Step 3: Download the object_detection Model

The model to download is defined in the models.list.yml file in the models_list folder.

Run the commands below to download the required object_detection model (person-detection-retail-0013) from Open Model Zoo:

sudo chmod +x ../Edge_Video_Analytics_Resources/tools/model_downloader/model_downloader.sh

sudo ../Edge_Video_Analytics_Resources/tools/model_downloader/model_downloader.sh --model-list models_list/models.list.yml

You will see output similar to:

Step 4: Review the Pipeline for the Reference Implementation

The pipeline for this RI is in the /MCSD_Resources/pipelines/ folder. It uses the person-detection-retail-0013 model downloaded in the previous step. The pipeline template is defined below:

"template": [

"{auto_source} ! decodebin",

" ! gvadetect model={models[object_detection][person_detection][network]} name=detection",

" ! gvametaconvert name=metaconvert ! gvametapublish name=destination",

" ! appsink name=appsink"

]

The pipeline uses standard GStreamer elements for the input source and for decoding the media files, gvadetect to detect objects, gvametaconvert to produce JSON from the detections, and gvametapublish to publish the results to the MQTT destination. The model identifier of the gvadetect element points to the newly downloaded model, which is stored in the models/object_detection/person_detection directory.
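To see how the template fragments combine into a single GStreamer launch string, the sketch below joins them and fills the placeholders with Python's str.format. The microservice resolves these placeholders itself at run time; whether it does so in exactly this way is an assumption, and the source element and model path used here are hypothetical stand-ins.

```python
# Template fragments as listed in the pipeline definition.
template = [
    "{auto_source} ! decodebin",
    " ! gvadetect model={models[object_detection][person_detection][network]} name=detection",
    " ! gvametaconvert name=metaconvert ! gvametapublish name=destination",
    " ! appsink name=appsink",
]

# Hypothetical values; the real ones are supplied by the microservice.
models = {
    "object_detection": {
        "person_detection": {
            "network": "/path/to/models/object_detection/person_detection/model.xml"
        }
    }
}
auto_source = "urisourcebin uri=file:///path/to/resources/Pexels_Videos_2670.mp4"

# str.format resolves {models[a][b][c]} by indexing into the nested dictionary.
launch_string = "".join(template).format(auto_source=auto_source, models=models)
print(launch_string)
```

The nested lookup mirrors the directory layout: models/object_detection/person_detection corresponds to models[object_detection][person_detection].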

Refer to Defining Media Analytics Pipelines for understanding the pipeline template and defining your own pipeline.
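The POST request that the MCSS microservice sends to start a pipeline might look roughly like the sketch below. The endpoint shape (/pipelines/<name>/<version>), port 8080, the request body fields, the pipeline name and version, and the MQTT topic are all assumptions modeled on the Video Analytics Serving REST interface; check Customizing Video Analytics Pipeline Requests for the fields your version accepts. The example only builds the request and does not send it.

```python
import json
import urllib.request


def build_pipeline_request(host, port, pipeline, version, video_uri, mqtt_host, topic):
    """Build (but do not send) a POST request that would start one pipeline instance.

    All field names in the body are assumptions, not taken from the package.
    """
    body = {
        "source": {"uri": video_uri, "type": "uri"},
        "destination": {
            "metadata": {"type": "mqtt", "host": mqtt_host, "topic": topic}
        },
    }
    url = "http://{}:{}/pipelines/{}/{}".format(host, port, pipeline, version)
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical host, pipeline name, video path, and topic.
request = build_pipeline_request(
    host="localhost",
    port=8080,
    pipeline="object_detection",
    version="person_detection",
    video_uri="file:///path/to/resources/Pexels_Videos_2670.mp4",
    mqtt_host="localhost:1883",
    topic="mcss/inference",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# To send it against a running microservice: urllib.request.urlopen(request)
```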

Step 5: Run the Application

- Go to the working directory:

cd multi_camera_detection_of_social_distancing/MultiCamera_Detection_of_Social_Distancing_<version>/MCSD_Resources

NOTE: In the command above, <version> is the Package version downloaded.

- Set the HOST_IP environment variable with the command:

export HOST_IP=$(hostname -I | cut -d' ' -f1)

- Run the application by executing the command:

sudo -E docker-compose up -d

This will volume mount the pipelines and models folders to the edge video analytics microservice.

- To check if all the containers are up and running, execute the command:

sudo docker-compose ps

You will see output similar to:

Figure 5: Check Status of Running Containers

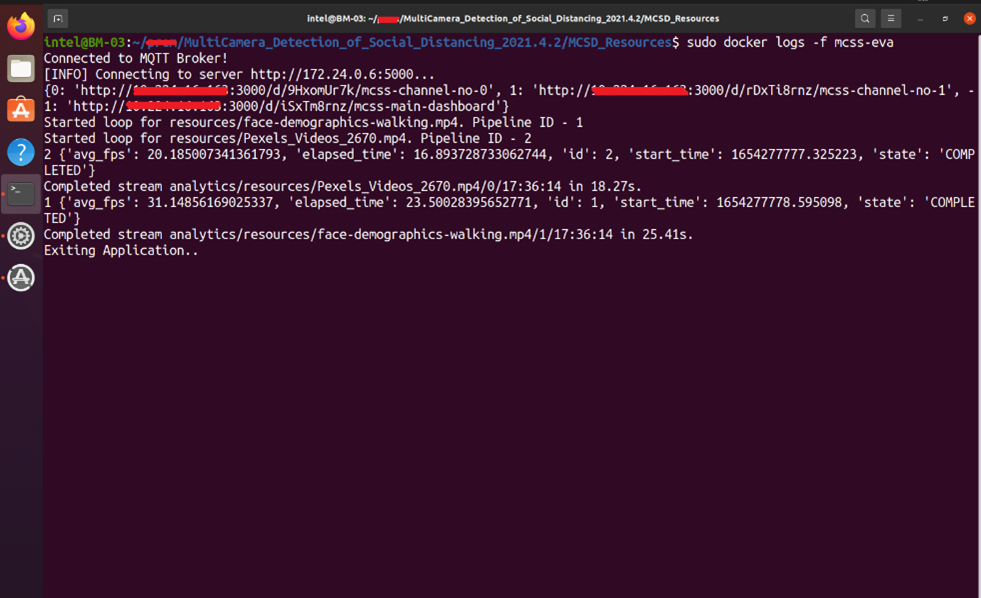

- To check the application logs, run the command:

sudo docker logs -f mcss-eva

You will see output similar to:

Figure 6: Logs of Running Containers

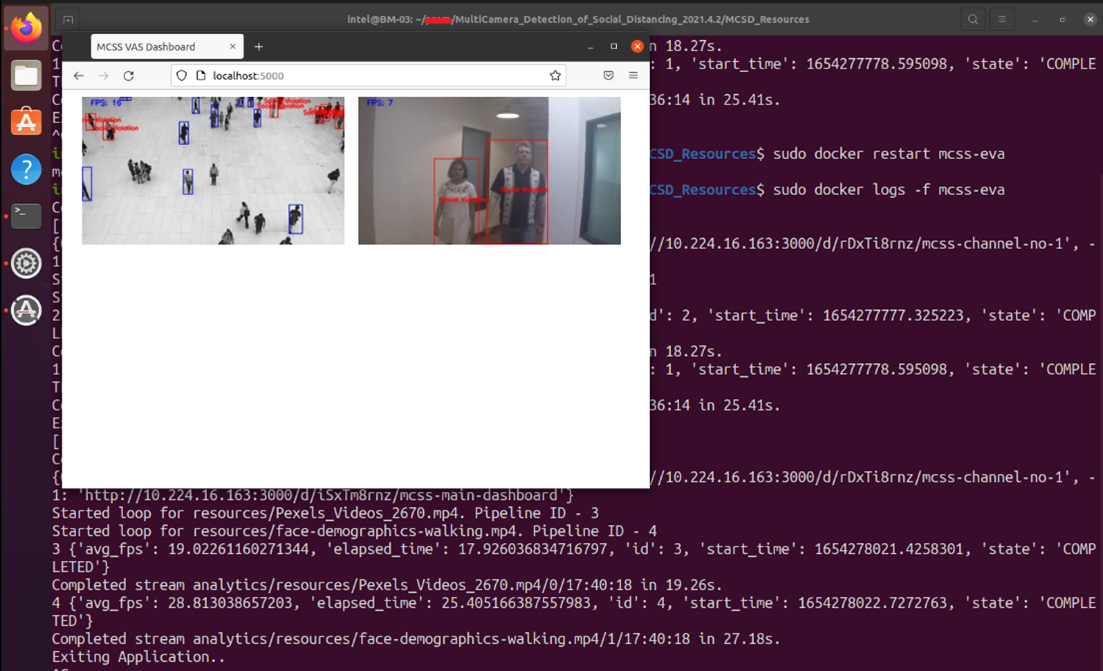

Step 6: View the Output

To view the output streams, open <System IP>:5000 in a browser.

In some cases, the video finishes playing before you open this dashboard. To see the output streams, you may need to restart the mcss-eva container by executing this command:

sudo docker restart mcss-eva

NOTE: If the application fails in a proxy-enabled network or the sudo docker-compose up command fails, refer to the Troubleshooting section of this document.

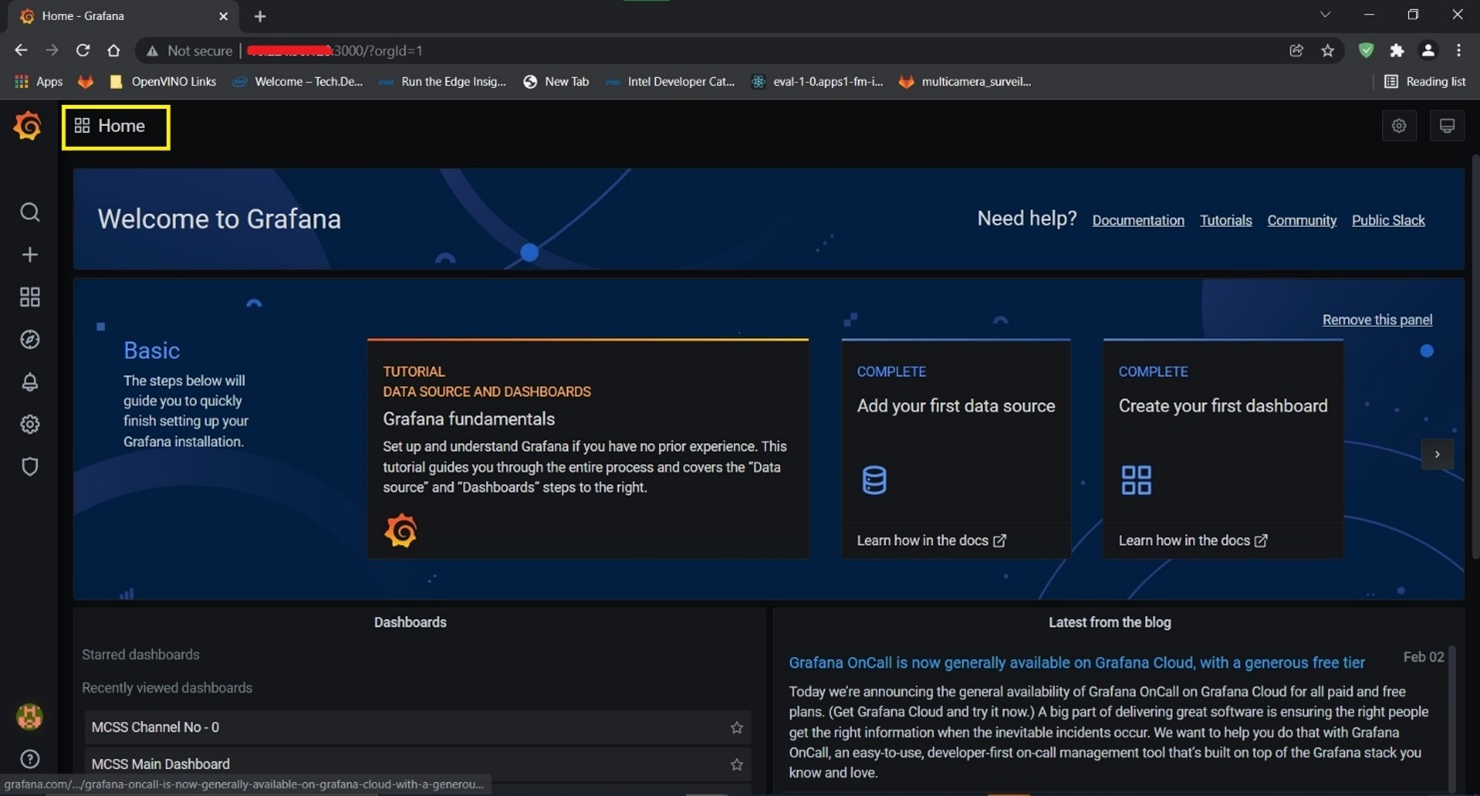

Step 7: Visualize the Output on Grafana

- Navigate to localhost:3000 on your browser.

NOTE: If accessing remotely, go to http://<hostIP>:3000. Get host system IP using the command:hostname -I | cut -d' ' -f1

- Login with user as admin and password as admin.

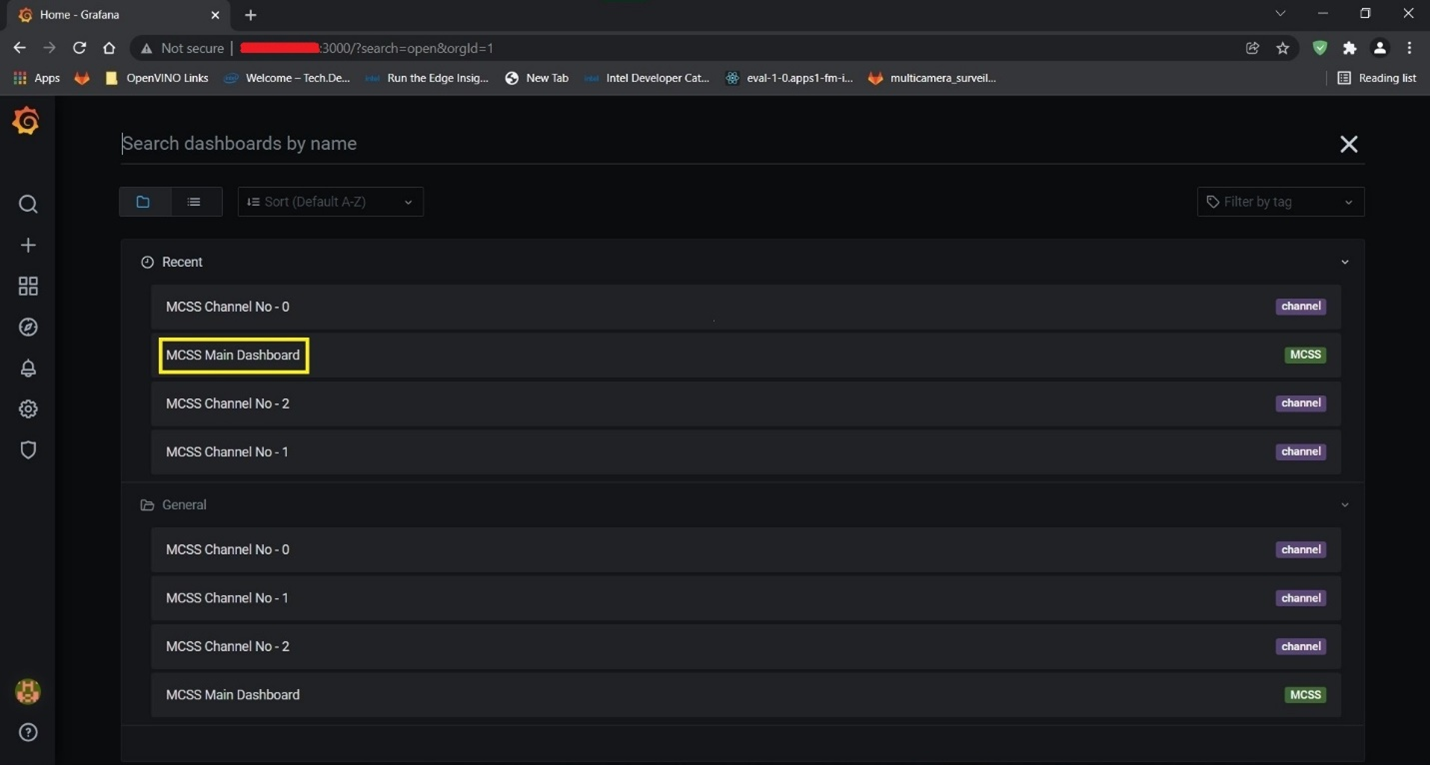

- Click Home and Select the MCSS Main Dashboard to open the main dashboard.

Figure 8: Grafana Home Screen

Figure 9: Select MCSS Main Dashboard

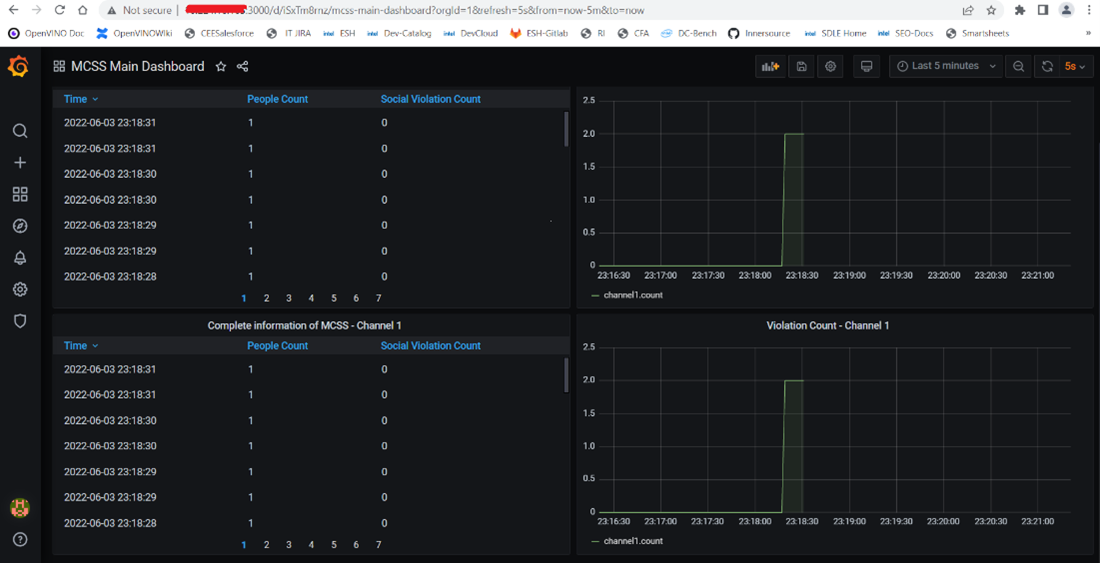

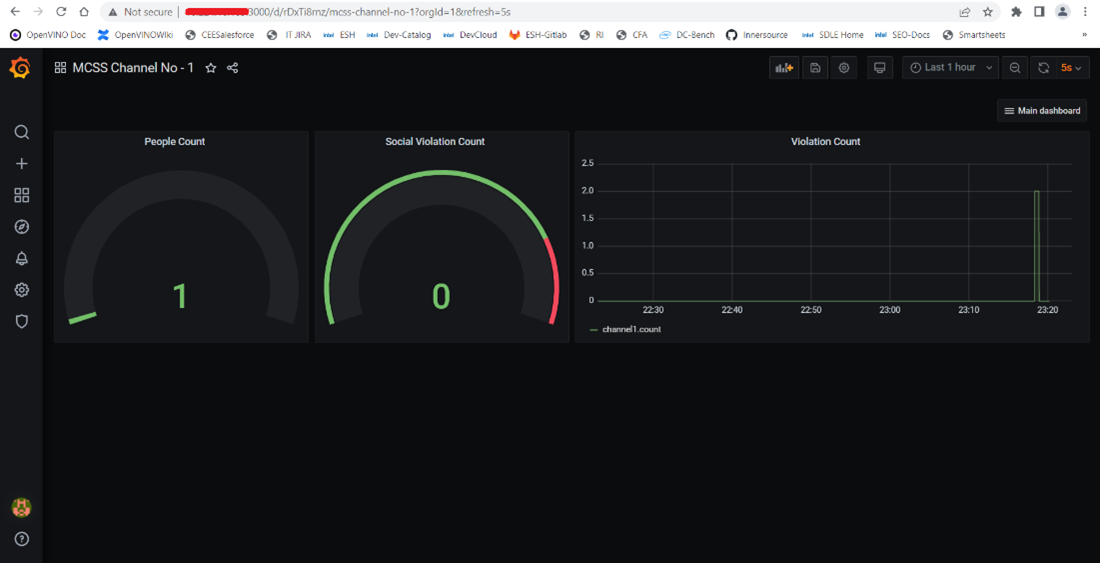

Figure 10: MCSS Main Grafana Dashboard

Figure 11: MCSS Channel Grafana Dashboard

Summary and Next Steps

This application uses the Intel® Distribution of OpenVINO™ toolkit plugins through the Video Analytics Microservice to detect people, measure the distance between them, and store the data to InfluxDB. It can be extended to support feeds from network streams (RTSP cameras), and the algorithm can be optimized for better performance.

Learn More

To continue learning, see the following guides and software resources:

- Intel® Distribution of OpenVINO™ Toolkit

- RESTful Microservice interface

- Customizing Video Analytics Pipeline Requests

- Defining Media Analytics Pipelines

Troubleshooting

- Make sure you have an active Internet connection during the full installation. If you lose Internet connectivity at any time, the installation might fail.

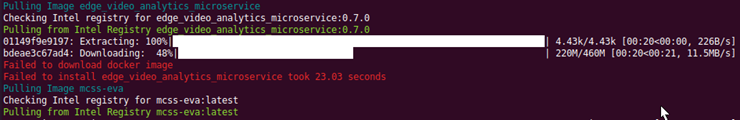

- If the installation fails while downloading and extracting the images from the Intel registry, re-run the installation command sudo ./edgesoftware install and provide the product key.

- Make sure you are using a fresh Ubuntu* installation. Earlier software, especially Docker and Docker Compose, can cause issues.

- In a proxy environment, if a single-user proxy is set (for example, in the .bashrc file), some component installations may fail or the installation may hang. Make sure you have set the proxy in /etc/environment.

- If your system is in a proxy network, add the proxy details in the environment section of the docker-compose.yml file:

HTTP_PROXY=http://<IP:port>/

HTTPS_PROXY=http://<IP:port>/

NO_PROXY=localhost,127.0.0.1

- If the proxy details are missing, the application fails to get the required source video file for running the pipelines and to install the required packages inside the container.

Run the command:

sudo -E docker-compose up

- Additionally, if your system is in a proxy network, add the proxy details for Docker to the proxy.conf file under /etc/systemd/system/docker.service.d:

HTTP_PROXY=http://<IP:port>/

HTTPS_PROXY=http://<IP:port>/

NO_PROXY=localhost,127.0.0.1

Once the proxy has been updated, execute the following commands:

sudo systemctl daemon-reload

sudo systemctl restart docker

- Stop or remove the containers if conflict errors are reported.

To remove the edge_video_analytics_microservice and eiv_mqtt containers, follow the steps below:

- First, run one of the following commands:

sudo docker stop edge_video_analytics_microservice eiv_mqtt

Or

sudo docker rm edge_video_analytics_microservice eiv_mqtt

- Next, run sudo docker-compose down from the path specified below:

cd multi_camera_detection_of_social_distancing/MultiCamera_Detection_of_Social_Distancing_<version>/Edge_Video_Analytics_Resources

sudo docker-compose down

NOTE: In the command above, <version> is the Package version downloaded.
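For the Docker proxy settings mentioned earlier in this section, note that systemd drop-in files under docker.service.d conventionally wrap each variable in an Environment= entry inside a [Service] section. The sketch below renders such a file as text so you can review it before writing it to disk. The function name and the proxy.example.com address are placeholders for illustration, and the exact file contents expected by this package are an assumption.

```python
def render_docker_proxy_conf(http_proxy, https_proxy, no_proxy="localhost,127.0.0.1"):
    """Return the text of a systemd drop-in that passes proxy settings to Docker."""
    lines = [
        "[Service]",
        'Environment="HTTP_PROXY={}"'.format(http_proxy),
        'Environment="HTTPS_PROXY={}"'.format(https_proxy),
        'Environment="NO_PROXY={}"'.format(no_proxy),
    ]
    return "\n".join(lines) + "\n"


# Placeholder proxy address; substitute your own <IP:port>.
conf_text = render_docker_proxy_conf(
    "http://proxy.example.com:3128/",
    "http://proxy.example.com:3128/",
)
print(conf_text)
```

After saving the rendered text as the proxy.conf file, reload systemd and restart Docker for the change to take effect.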


Support Forum

If you're unable to resolve your issues, contact the Support Forum.