Introduction

Many developers and customers are under the impression that Data Plane Development Kit (DPDK) documentation and sample applications include only data plane applications. In a real-life scenario, it is necessary to integrate the data plane with the control and management plane. The purpose of this article is to describe some simple multiplane scenarios.

Simple Scenarios—Data Plane and Control Plane Interactions

Every product or appliance has its own share of data plane and control plane functionality. Here, we illustrate two simple run-time scenarios:

- A network interface card (NIC) port configuration change

- Changing the port itself

Scenario 1: Hardware change

In a multicore server with as many as 72 logical cores (lcores) and multiple NIC ports performing packet processing in parallel, how do you synchronize control plane operations with the data plane? What do you need to understand in order to play by the DPDK rules of the game?

Actions that require synchronization

- Pull out a transceiver and insert it again during run time

- Pull out a transceiver, say 10 Gbps, and insert a completely different one, say 1 Gbps

If a hardware change requires releasing the instance of the device that was removed and creating an instance for the new device, you must coordinate with any threads that are still accessing the data structures of the original instance before that instance is released.

Releasing resources requires coordination with the user space applications using those resources. Applications can be using a single or multiple cores. If the resource being released is used by multiple cores, we need to request an acknowledgement handshake from each core in use, indicating that they are all finished with the resource and it can be safely released.
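The acknowledgement handshake described above can be sketched with C11 atomics. This is a minimal model, not DPDK code: `struct quiesce_ctl`, `MAX_WORKERS`, and every function name below are hypothetical, and the sketch assumes each worker calls `quiesce_poll()` between packet bursts.

```c
#include <stdatomic.h>
#include <stdbool.h>

#define MAX_WORKERS 8 /* hypothetical upper bound on worker lcores */

/* Hypothetical shared state; zero-initialise before use. */
struct quiesce_ctl {
    atomic_bool stop_requested;     /* set by the control plane */
    atomic_bool acked[MAX_WORKERS]; /* set by each worker once it lets go */
    unsigned int nb_workers;        /* workers that use the resource */
};

/* Control plane: ask every worker to stop touching the resource. */
static void quiesce_request(struct quiesce_ctl *c, unsigned int nb_workers)
{
    c->nb_workers = nb_workers;
    for (unsigned int i = 0; i < nb_workers; i++)
        atomic_store(&c->acked[i], false);
    atomic_store(&c->stop_requested, true);
}

/* Worker: call between bursts. Returns true if the worker must stay
 * away from the resource; the acknowledgement is recorded as a side effect. */
static bool quiesce_poll(struct quiesce_ctl *c, unsigned int worker_id)
{
    if (!atomic_load(&c->stop_requested))
        return false;
    atomic_store(&c->acked[worker_id], true);
    return true;
}

/* Control plane: true only when every worker has acknowledged,
 * which is the point at which the resource can be released. */
static bool quiesce_complete(struct quiesce_ctl *c)
{
    for (unsigned int i = 0; i < c->nb_workers; i++)
        if (!atomic_load(&c->acked[i]))
            return false;
    return true;
}

/* Control plane: the new device instance is ready, let workers resume. */
static void quiesce_finish(struct quiesce_ctl *c)
{
    atomic_store(&c->stop_requested, false);
}
```

In this model the control plane would call `quiesce_request()`, wait until `quiesce_complete()` returns true, release and re-create the device instance, and then call `quiesce_finish()`.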

Scenario 2: Parameter change

Assume you are not changing any hardware during run time, but you do want to change a global parameter, say the maximum transmission unit (MTU). This may need only a lightweight initialization, compared with the heavyweight initialization of the previous case. So, when you use an API that does a lightweight initialization, which parameters can you expect to persist across the operation, and which can you not assume will remain the same? You need to know this in order to change parameters correctly during run time.

Please note that this is only part of the story. The other part is synchronizing with data plane applications that are running, that is, waiting for a resource to be available, so that APIs to reconfigure can be called. We will look at that and refer to pointers available in DPDK documentation and source code.

Before we get into these details, let’s step back and look at the big picture:

- What are the core assumptions DPDK makes in terms of concurrency?

- What are the boundaries that DPDK controls and that the application must manage to ensure synchronization?

Rules for Polling Queues

Can multiple cores poll one RX queue simultaneously?

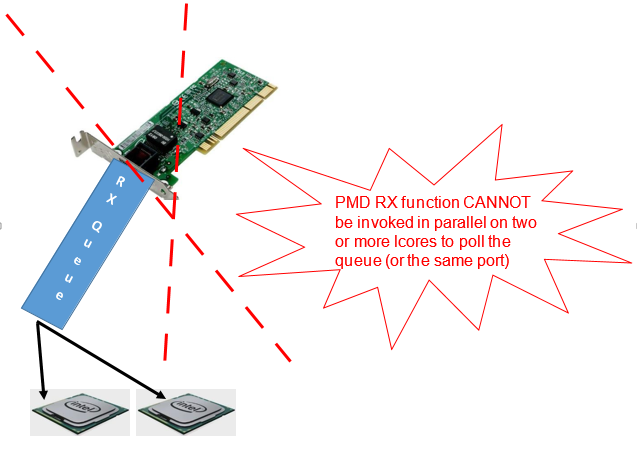

By design, the receive function of a poll mode driver (PMD) cannot be invoked in parallel on multiple logical cores to poll the same RX queue of the same port. The benefit of this design is that all the functions of the Ethernet Device API exported by a PMD are lock-free functions. This is possible because the receive function is never invoked in parallel on different logical cores to work on the same target object.

Figure 1. PMD RX parallelization rule: only one core at a time can do RX processing.

In the case of a single RX queue per port, only one core at a time can do RX processing.

When you have multiple RX queues per port, each queue can be polled by only one lcore at a time. Thus, if you have four RX queues per port, you can have four cores simultaneously polling the port if you have configured one core per queue.

Can you have eight cores and four RX queues per port?

No, not if all eight cores poll that port's RX queues. With only four queues, at least one queue would be assigned more than one core.

Can you have four cores with eight RX queues per port?

This question can only be answered with the full configuration details. Having more RX queues than cores is not enough: if any single RX queue is assigned to two cores, the configuration is not allowed. The rule is at most one core per RX queue, regardless of how the total number of queues compares with the number of cores.
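The one-core-per-queue rule can be checked mechanically before any lcore is launched. The sketch below is a hypothetical helper, not part of DPDK: `struct rx_assignment` and `rx_assignments_valid()` are names invented for illustration, and the check is a simple pairwise comparison that is adequate for small configuration tables.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One entry per (lcore, port, RX queue) polling assignment. Hypothetical type. */
struct rx_assignment {
    unsigned int lcore_id;
    uint16_t port_id;
    uint16_t queue_id;
};

/* Returns true if no (port, queue) pair is assigned to two different lcores. */
static bool rx_assignments_valid(const struct rx_assignment *a, size_t n)
{
    for (size_t i = 0; i < n; i++)
        for (size_t j = i + 1; j < n; j++)
            if (a[i].port_id == a[j].port_id &&
                a[i].queue_id == a[j].queue_id &&
                a[i].lcore_id != a[j].lcore_id)
                return false;
    return true;
}
```

With four cores and eight queues, a table that gives each core two distinct queues passes the check, while a table that lists the same queue under two cores fails it even though spare queues exist.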

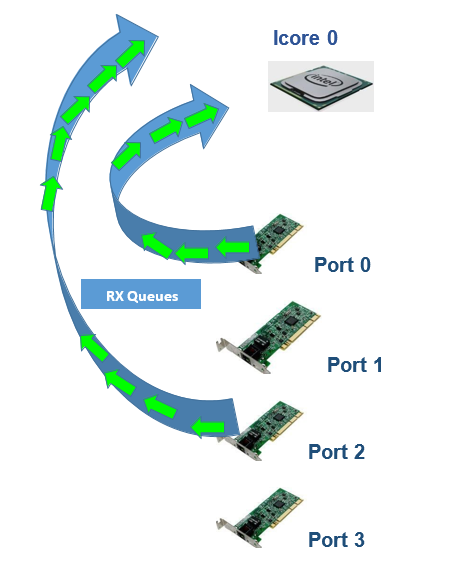

Can one lcore poll multiple RX queues?

Yes. One lcore can poll multiple RX queues. The maximum number of RX queues that one lcore can poll depends on performance requirements and how much headroom should be available for applications after servicing some number of queues. Packet size and packet arrival rates also constrain the cycle budget available on the core.

Note: The RX queues that one lcore polls need not be consecutively numbered. In Figure 2, lcore 0 polls RX queue 0 and RX queue 2. It does not poll RX queue 1 or RX queue 3.

Figure 2. One lcore polling multiple RX queues.
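One way to express this in code is a per-lcore list of (port, queue) pairs that the lcore walks on every iteration of its polling loop. The sketch below is self-contained and does not call DPDK: `rx_burst_fn` is a stand-in with the same argument order as `rte_eth_rx_burst()` but with untyped packet pointers, and `fake_rx_burst()`, `struct lcore_rx_conf`, and the two limits are assumptions made for illustration.

```c
#include <stdint.h>

#define MAX_RX_QUEUES_PER_LCORE 16 /* hypothetical limit */
#define BURST_SIZE 32              /* hypothetical burst size */

struct rx_queue_ref {
    uint16_t port_id;
    uint16_t queue_id;
};

/* The queues owned by one lcore; they need not be consecutive. */
struct lcore_rx_conf {
    uint16_t n_rx_queue;
    struct rx_queue_ref rx_queue_list[MAX_RX_QUEUES_PER_LCORE];
};

/* Stand-in for the PMD receive call. */
typedef uint16_t (*rx_burst_fn)(uint16_t port_id, uint16_t queue_id,
                                void **pkts, uint16_t nb_pkts);

/* Stub so the sketch runs without a NIC: pretends that queue N
 * always has N + 1 packets waiting (capped at the burst size). */
static uint16_t fake_rx_burst(uint16_t port_id, uint16_t queue_id,
                              void **pkts, uint16_t nb_pkts)
{
    (void)port_id;
    (void)pkts;
    uint16_t avail = (uint16_t)(queue_id + 1);
    return avail < nb_pkts ? avail : nb_pkts;
}

/* One pass over every queue owned by this lcore; returns packets received. */
static unsigned int lcore_poll_once(const struct lcore_rx_conf *conf,
                                    rx_burst_fn rx_burst)
{
    void *pkts[BURST_SIZE];
    unsigned int total = 0;

    for (uint16_t i = 0; i < conf->n_rx_queue; i++) {
        const struct rx_queue_ref *q = &conf->rx_queue_list[i];
        total += rx_burst(q->port_id, q->queue_id, pkts, BURST_SIZE);
        /* A real application would process or forward pkts here. */
    }
    return total;
}
```

To mirror Figure 2, the configuration for lcore 0 would list queue 0 and queue 2 of the port and skip queues 1 and 3. The longer the list, the fewer cycles remain per queue, which is the headroom trade-off described above.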

Who is responsible for mutual exclusion so that multiple cores don’t work on the same receive queue?

The one-line answer is you, the application developer. All the functions of the Ethernet Device API exported by a PMD are lock-free functions, which are not to be invoked in parallel on different logical cores to work on the same target object.

For instance, the receive function of a PMD cannot be invoked in parallel on two logical cores to poll the same RX queue (on the same port). Of course, this function can be invoked in parallel by different logical cores on different RX queues. Note that it is the responsibility of the upper-level application to enforce this rule.

If you don’t design your application to enforce this exclusion, and you allow multiple cores to step on each other while accessing the device, you should expect segmentation faults and crashes. DPDK uses lockless accesses for high performance and assumes that you, as a higher-level application developer, will ensure that multiple cores do not work on the same receive queue.

What if your design requires multiple cores to share queues?

If needed, parallel accesses to shared queues by multiple logical cores must be explicitly protected by dedicated inline lock-aware functions, built on top of their corresponding lock-free functions of the PMD API.
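A lock-aware wrapper for a queue shared by several lcores could look like the following sketch. It is an illustration under stated assumptions rather than a DPDK API: the spinlock is built from a C11 `atomic_flag`, `lockfree_rx_fn` stands in for the lock-free PMD receive function, and `stub_rx_burst()` exists only so the example runs without hardware. A DPDK application would more likely use the library's own `rte_spinlock_t` for the lock.

```c
#include <stdatomic.h>
#include <stdint.h>

/* A queue that more than one lcore is allowed to poll. Hypothetical type. */
struct shared_rx_queue {
    atomic_flag lock; /* initialise with ATOMIC_FLAG_INIT */
    uint16_t port_id;
    uint16_t queue_id;
};

/* Stand-in for the lock-free receive function of the PMD. */
typedef uint16_t (*lockfree_rx_fn)(uint16_t port_id, uint16_t queue_id,
                                   void **pkts, uint16_t nb_pkts);

/* Stub: pretends the queue always has a full burst available. */
static uint16_t stub_rx_burst(uint16_t port_id, uint16_t queue_id,
                              void **pkts, uint16_t nb_pkts)
{
    (void)port_id;
    (void)queue_id;
    (void)pkts;
    return nb_pkts;
}

/* Lock-aware wrapper: serialises callers around the lock-free function. */
static inline uint16_t locked_rx_burst(struct shared_rx_queue *q,
                                       lockfree_rx_fn rx,
                                       void **pkts, uint16_t nb_pkts)
{
    uint16_t n;

    while (atomic_flag_test_and_set_explicit(&q->lock, memory_order_acquire))
        ; /* spin until the current holder releases the queue */
    n = rx(q->port_id, q->queue_id, pkts, nb_pkts);
    atomic_flag_clear_explicit(&q->lock, memory_order_release);
    return n;
}
```

The lock puts a contended atomic operation on the fast path, so sharing a queue this way trades away part of the performance that the lock-free design is meant to provide.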

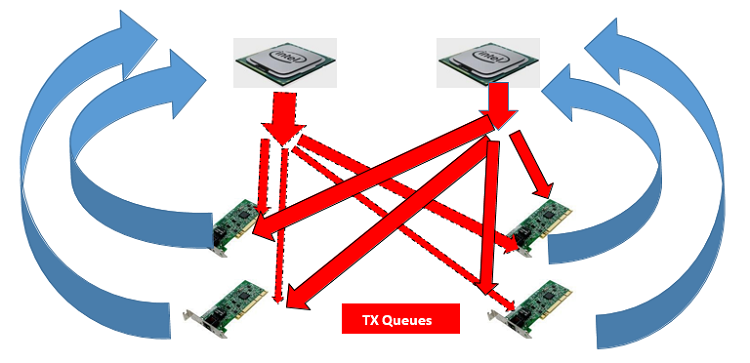

TX port: Why should each core be able to transmit on each and every transmit port?

We saw that an lcore polls only a subset of the RX queues in the system, but what about TX ports? Can an lcore connect to only a subset of the TX ports in the system, or should every lcore connect to all TX ports?

The answer is that a forwarding operation running on an lcore may result in a packet destined for any TX port in the system. Because of this, each lcore should be able to transmit to each and every TX port.

Figure 3. An lcore can poll only a subset of RX ports, but can transmit to any TX port in the system.
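Because the PMD functions are lock-free, letting every lcore transmit on every port raises the same exclusion question as RX. One common arrangement, shown here as a sketch and not as something this article prescribes, is to give each lcore its own TX queue on every port. The type `struct tx_queue_map`, both functions, and the two limits are hypothetical, and the sketch assumes each port supports at least as many TX queues as there are lcores.

```c
#include <stdbool.h>
#include <stdint.h>

#define MAX_LCORES 8 /* hypothetical limit */
#define MAX_PORTS  4 /* hypothetical limit */

/* queue_id[l][p] is the TX queue that lcore index l uses on port p. */
struct tx_queue_map {
    unsigned int n_lcores;
    unsigned int n_ports;
    uint16_t queue_id[MAX_LCORES][MAX_PORTS];
};

/* Give lcore index l TX queue l on every port, so each port
 * needs n_lcores TX queues. Returns false if the limits are exceeded. */
static bool tx_queue_map_init(struct tx_queue_map *m,
                              unsigned int n_lcores, unsigned int n_ports)
{
    if (n_lcores > MAX_LCORES || n_ports > MAX_PORTS)
        return false;
    m->n_lcores = n_lcores;
    m->n_ports = n_ports;
    for (unsigned int l = 0; l < n_lcores; l++)
        for (unsigned int p = 0; p < n_ports; p++)
            m->queue_id[l][p] = (uint16_t)l;
    return true;
}

/* True if no two lcores share a TX queue on any port. */
static bool tx_queue_map_exclusive(const struct tx_queue_map *m)
{
    for (unsigned int p = 0; p < m->n_ports; p++)
        for (unsigned int a = 0; a < m->n_lcores; a++)
            for (unsigned int b = a + 1; b < m->n_lcores; b++)
                if (m->queue_id[a][p] == m->queue_id[b][p])
                    return false;
    return true;
}
```

With this layout, any lcore can send to any port without locking, at the cost of configuring one TX queue per lcore on each port.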

While the Data Plane can be Parallel, the Control Plane is Sequential

Control plane operations such as device configuration, RX and TX queue setup, and device start must be performed in a fixed order. Hence, they are sequential.

Device setup sequence

To set up a device, follow this sequence:

rte_eth_dev_configure()

rte_eth_tx_queue_setup()

rte_eth_rx_queue_setup()

rte_eth_dev_start()

After that, the network application can invoke, in any order, the functions exported by the Ethernet API to get the MAC address of a given device, the speed and the status of a device physical link, receive/transmit packet bursts, and so on.
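The ordering constraint can be pictured as a small state machine. The sketch below is a toy model, not DPDK's implementation: `struct model_dev` and the `model_*` functions are hypothetical stand-ins for `rte_eth_dev_configure()`, `rte_eth_tx_queue_setup()`, `rte_eth_rx_queue_setup()`, and `rte_eth_dev_start()`. It captures only the order in which the calls are accepted, with one TX queue and one RX queue assumed.

```c
#include <stdbool.h>

/* Toy device state; zero-initialise for an unconfigured device. */
struct model_dev {
    bool configured;
    bool tx_ready;
    bool rx_ready;
    bool started;
};

/* Stands in for rte_eth_dev_configure(): rejected once the device runs. */
static int model_configure(struct model_dev *d)
{
    if (d->started)
        return -1;
    d->configured = true;
    return 0;
}

/* Stands in for rte_eth_tx_queue_setup(): needs a configured, stopped device. */
static int model_tx_queue_setup(struct model_dev *d)
{
    if (!d->configured || d->started)
        return -1;
    d->tx_ready = true;
    return 0;
}

/* Stands in for rte_eth_rx_queue_setup(): same precondition as TX. */
static int model_rx_queue_setup(struct model_dev *d)
{
    if (!d->configured || d->started)
        return -1;
    d->rx_ready = true;
    return 0;
}

/* Stands in for rte_eth_dev_start(): only after configure and both setups. */
static int model_start(struct model_dev *d)
{
    if (!d->configured || !d->tx_ready || !d->rx_ready || d->started)
        return -1;
    d->started = true;
    return 0;
}
```

In the model, calling the functions in the listed order returns 0 at every step, while starting an unconfigured device or reconfiguring a started one returns -1.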

Summary

Application developers will benefit from understanding DPDK assumptions regarding application roles and responsibilities. To start, it’s important to comprehend the scope of DPDK’s roles and responsibilities. This will help you to correctly architect from the start in terms of thread safety, lockless API call usage, multiprocessor synchronization, and control plane and data plane synchronization.

Next Steps

Architect a couple of your own usage models of the data plane co-existing with the control and management plane. Look for similar approaches used by testpmd and other applications, and described by the DPDK HowTo Guides. Test them out.

Exercises

- Can you have eight cores per port with four RX queues per port?

- Can you have four cores per port with eight RX queues per port?

- What are the implications of multiple cores transmitting on one transmit port—in terms of control plane and data plane synchronization?

- Should control plane operations be done in interrupt context itself, or as a deferred procedure?

References

- Additional DPDK tutorials on Intel® Developer Zone (Intel® DZ)

- DPDK.org

- Port Hotplug Framework - In the Programmer's Guide at DPDK.org.