Intel® Neural Compressor is an open source Python* library designed to help quickly optimize inference solutions on popular deep learning frameworks (TensorFlow*, PyTorch*, ONNX* [Open Neural Network Exchange] runtime, and Apache MXNet*). Intel Neural Compressor is a ready-to-run optimized solution that uses the features of 3rd generation Intel® Xeon® Scalable processors (formerly code named Ice Lake) for performance improvement.

This quick start guide provides instructions for deploying Intel Neural Compressor to Docker* containers. The containers are packaged by Bitnami* on Amazon Web Services (AWS)* for Intel® processors.

What's Included

The Intel Neural Compressor includes the following precompiled binaries:

| Intel®-Optimized Library | Minimum Version |
|---|---|
| | 2.7.3, 2.5.0, 1.9.0 |
| | 1.10.0 |
| | 1.8.0, 1.6.0 |

Prerequisites

- An AWS account with Amazon EC2*. For more information, see Get Started.

- 3rd generation Intel Xeon Scalable processors

- Sign in to the AWS console.

- Go to your EC2 Dashboard, and then select Launch Instances.

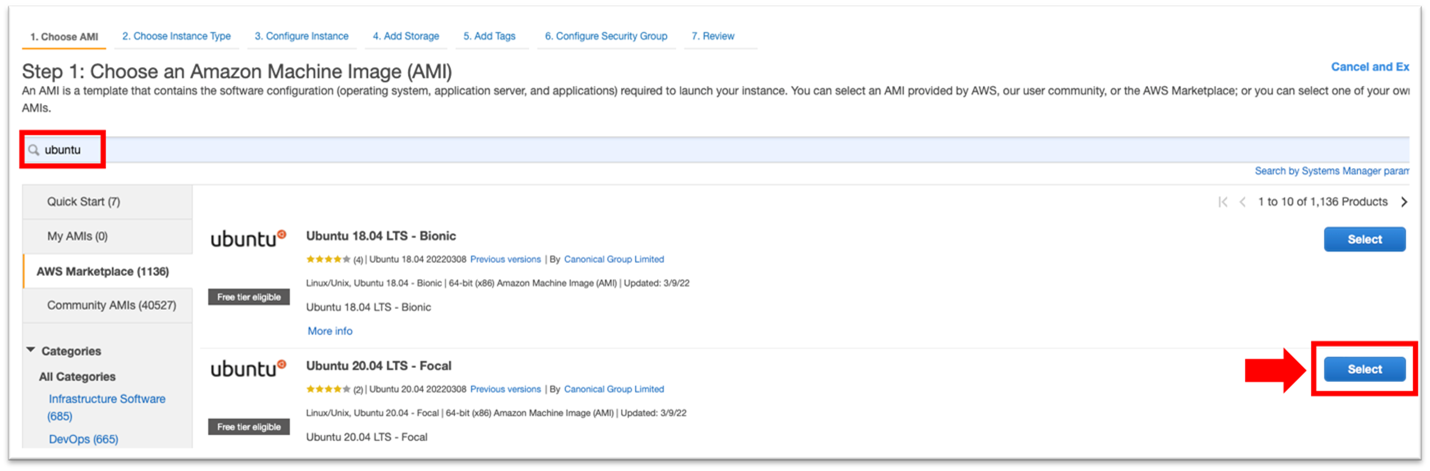

- In the search box, enter Ubuntu.

- Select the appropriate Ubuntu* instance, and then select Next. The Choose an Amazon Machine Image (AMI) screen appears.

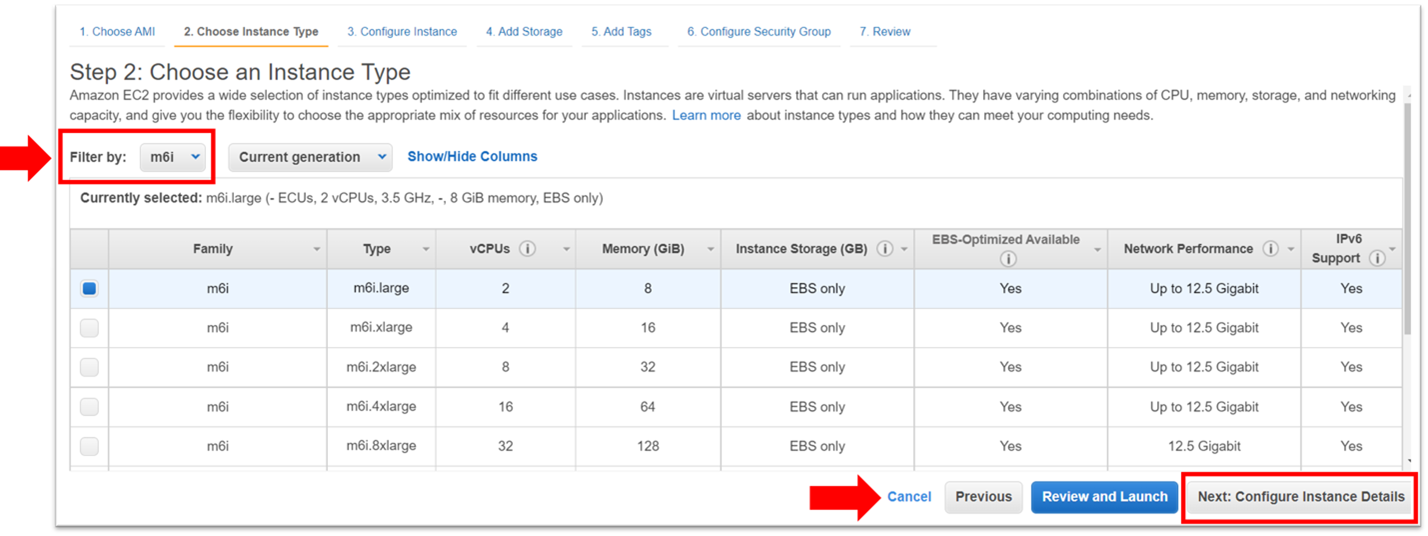

- To choose an instance:

a. Locate the right region and any of the Intel Xeon Scalable processors, and then select the Select button.

b. Select Configure Instance Details. The available regions are:

• US East (Ohio)

• US East (N. Virginia)

• US West (N. California)

• US West (Oregon)

• Asia Pacific (Mumbai)

• Asia Pacific (Seoul)

• Asia Pacific (Singapore)

• Asia Pacific (Sydney)

• Asia Pacific (Tokyo)

• Europe (Frankfurt)

• Europe (Ireland)

• Europe (Paris)

• South America (São Paulo)

For up-to-date information, see Amazon EC2 M6i Instances.

- Select Next: Configure Instance Details. The next page appears.

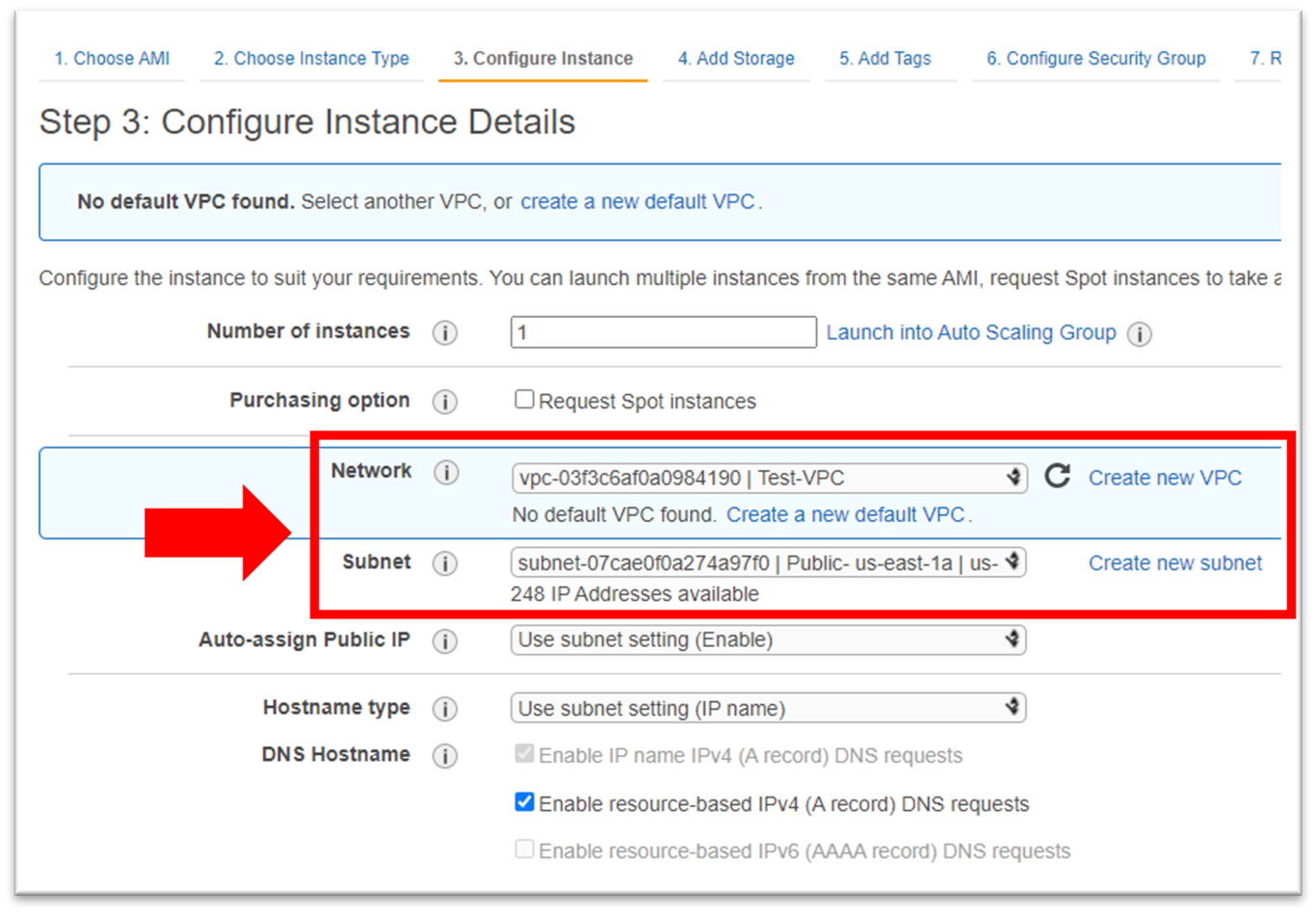

- To configure the instance details, select the appropriate Network for the VPC and the Subnet for your instance to launch into.

- Select Add Storage. The storage page appears.

- Increase the storage size to fit your needs, and then select Add a Tag. The tag page appears.

- (Optional) On the Add Tags tab, add the tags (notes for your own reference), and then select Configure Security Group. The Configure Security Group page appears.

- To create a security group:

- Enter a Security group name and Description.

- Configure the protocol and port range to allow for communication on port 22.

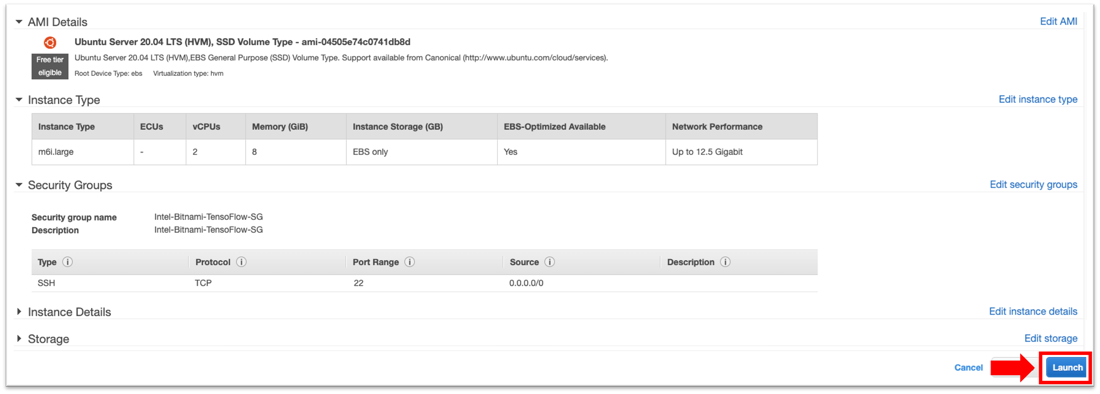
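The port 22 rule from the security group step can also be written down as data. The following is a minimal sketch, not part of the original guide: it builds one inbound SSH rule in the dictionary shape that the AWS SDK for Python (boto3) accepts as an `IpPermissions` entry. The helper name `ssh_ingress_rule` and the CIDR block `203.0.113.0/24` (an address range reserved for documentation) are assumptions; substitute the range you actually connect from.

```python
import ipaddress


def ssh_ingress_rule(cidr: str) -> dict:
    """Return one inbound rule that allows SSH (TCP port 22) from `cidr`."""
    # Validate the IPv4 CIDR block early; a malformed value raises ValueError.
    network = ipaddress.IPv4Network(cidr)
    return {
        "IpProtocol": "tcp",
        "FromPort": 22,  # start of the port range
        "ToPort": 22,    # end of the port range (same port: SSH only)
        "IpRanges": [{"CidrIp": str(network), "Description": "SSH access"}],
    }


# Placeholder source range (assumption), not a value from this guide.
rule = ssh_ingress_rule("203.0.113.0/24")
print(rule)
```

If you script the setup instead of using the console, a rule like this would typically be passed as `IpPermissions=[rule]` to the boto3 EC2 client method `authorize_security_group_ingress`, together with the ID of your security group.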

- Select Review and Launch. The Review Launch Instance page appears.

- To ensure that the details match your selection, scroll through the information.

- Select Launch. A keypair page appears.

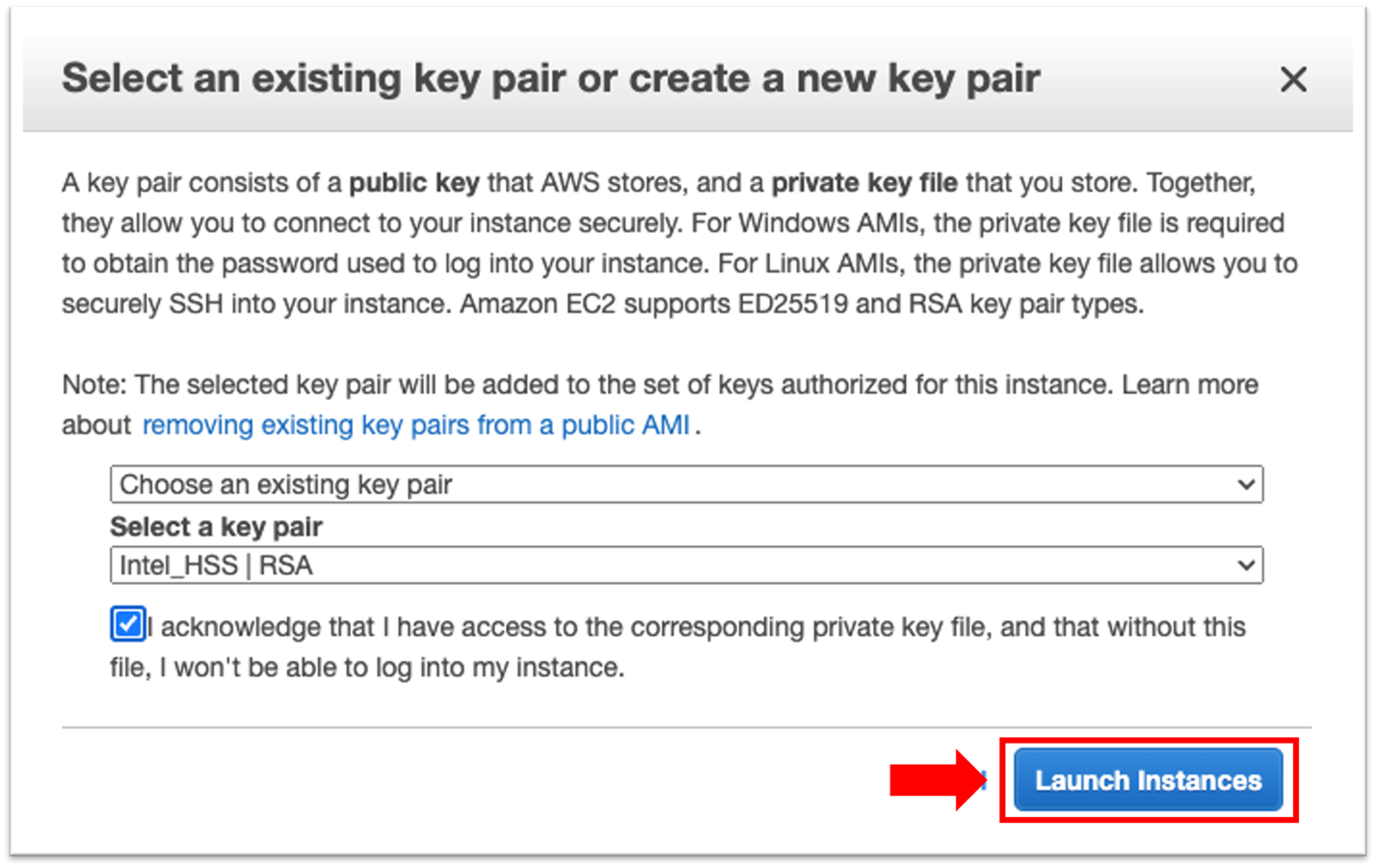

- To choose a keypair:

- Select either Choose an existing keypair or Create a new keypair.

- In Select a keypair, choose the keypair you created if you have an existing keypair. Otherwise, choose Create New to generate one.

- Select the I acknowledge check box.

- Select Launch Instances. The Instances page appears. It shows the launch status.

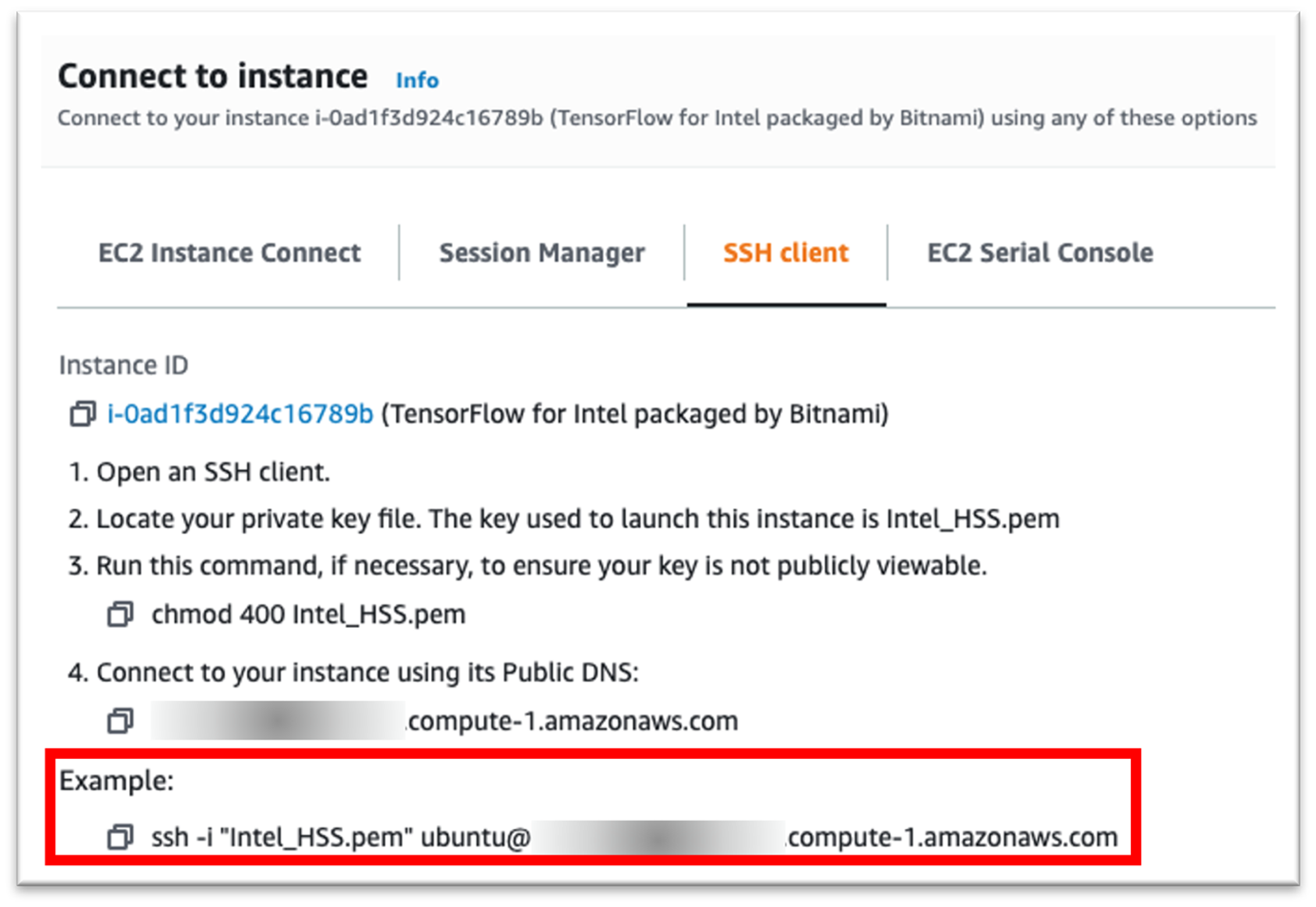

- To connect to the instance:

- On the left side of the page, select the check box next to the instance.

- In the upper-right of the page, select Connect. The Connect to instance page appears. This page has information on how to connect to the instance.

- Select the SSH client tab, and then copy the command under Connect to your instance using its Public DNS.

- In a terminal window, enter the SSH command to connect to the instance. The command specifies the path and file name of the private key (.pem), the username for your instance, and the public DNS name.

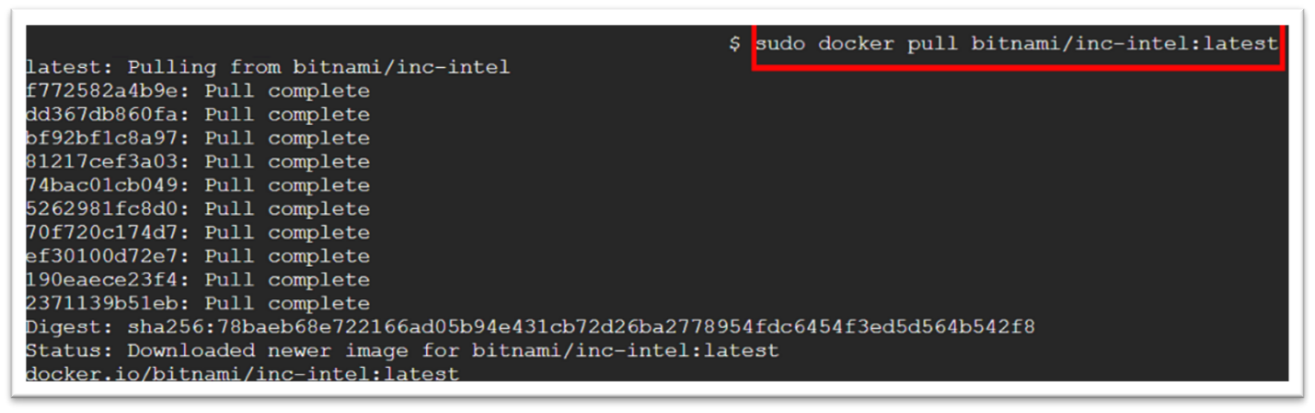
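The SSH command from the connection step has three parts: the private key file, the username, and the public DNS name. As a rough sketch, it could be assembled like this. The helper name `build_ssh_command` and all three example values are placeholders (assumptions), not values from this guide; use the command shown on your own Connect to instance page.

```python
import shlex


def build_ssh_command(key_path: str, username: str, public_dns: str) -> list:
    """Assemble the argument list for connecting to the instance over SSH."""
    # -i selects the private key (.pem) file used for authentication.
    return ["ssh", "-i", key_path, f"{username}@{public_dns}"]


# Placeholder values (assumptions); replace them with your own.
command = build_ssh_command(
    key_path="my-key.pem",
    username="ubuntu",
    public_dns="ec2-203-0-113-25.compute-1.amazonaws.com",
)
print(shlex.join(command))
```

Keeping the command as an argument list avoids quoting problems if the key path contains spaces; `shlex.join` is used only to print a copy that can be pasted into a terminal.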

- To deploy Intel Neural Compressor on a Docker container:

- If needed, install Docker on Ubuntu.

- To pull the latest Intel Neural Compressor image, open a terminal window, and then enter the following command: docker pull bitnami/inc-intel:latest

Note Intel recommends using the latest image. If needed, you can find older versions in the Docker Hub Registry.

- To test Intel Neural Compressor, start the container with this command: sudo docker run -it --name inc bitnami/inc-intel

Note inc is the container name (set with --name) for the bitnami/inc-intel image.

For more information on the docker run command (which starts a Python* session), see Docker Run. The container is now running.
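The pull and run steps can be scripted. The sketch below is an illustration under stated assumptions, not part of the original guide: the helper name `docker_commands` is hypothetical, and the explicit `:latest` tag on the run command is added here for clarity (Docker uses it by default when no tag is given). The helper also rejects uppercase repository names, because Docker requires them to be lowercase.

```python
import shlex


def docker_commands(repository: str, tag: str = "latest",
                    container_name: str = "inc") -> list:
    """Return the pull and run argument lists for the given image."""
    # Docker repository names must be lowercase, so reject anything else early.
    if repository != repository.lower():
        raise ValueError(f"repository must be lowercase: {repository!r}")
    image = f"{repository}:{tag}"
    pull = ["docker", "pull", image]
    # -it attaches an interactive terminal; --name labels the container.
    run = ["sudo", "docker", "run", "-it", "--name", container_name, image]
    return [pull, run]


for argv in docker_commands("bitnami/inc-intel"):
    print(shlex.join(argv))
```

The printed lines can be pasted into the terminal on the instance, or each argument list can be passed to `subprocess.run` if you prefer to drive Docker from a script.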

- To import Intel Neural Compressor into your program, at the Python prompt in the container, enter the following statement: from neural_compressor.experimental import Quantization, common

For more information about using Intel Neural Compressor and its API, see Documentation.
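To show where the imported names fit, here is a minimal sketch of a post-training quantization run with the experimental API. It is an assumption that this call pattern matches the release in your container: some older releases invoke the quantizer object directly instead of calling `fit()`, and a calibration dataloader may also be needed depending on the configuration. The helper names `inc_is_installed` and `quantize`, and the three path parameters, are hypothetical.

```python
import importlib.util


def inc_is_installed() -> bool:
    """Report whether the neural_compressor package can be imported."""
    return importlib.util.find_spec("neural_compressor") is not None


def quantize(config_path: str, model_path: str, output_dir: str) -> None:
    """Quantize a model with the experimental API (sketch, see assumptions)."""
    # Imported here so this file still loads where the library is absent.
    from neural_compressor.experimental import Quantization, common

    quantizer = Quantization(config_path)       # YAML file describing the run
    quantizer.model = common.Model(model_path)  # wrap the original FP32 model
    q_model = quantizer.fit()                   # assumed entry point; check your release
    q_model.save(output_dir)                    # write the quantized model


if inc_is_installed():
    print("neural_compressor is available")
else:
    print("neural_compressor is not installed in this environment")
```

Inside the container the availability check should report that the library is available; `quantize` is only called once you have a configuration file and a model of your own.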

Connect

To ask product experts at Intel a question, go to the Intel Collective at Stack Overflow, and then post your question with the intel-cloud tag.

For questions about the Intel Neural Compressor image on Docker Hub, see the Bitnami Community.

To file a Docker issue, see Issues.