Suppose you want to build a chatbot that answers questions about your company’s documents. You will need:

- A document ingestion pipeline

- Vector embeddings and a vector database

- A retrieval system

- A large language model (LLM) integration

- Context management

- Response generation

- And perhaps some guardrails and monitoring

Each of these components comes with its own implementation choices. How do you chunk documents? Which embedding model do you use? Which vector database?

A monolithic solution that tightly couples these components can make it easier to get started. But when you need to make a small change, for instance swapping out an embedding model or vector database to stay consistent with your other applications, it can trigger a cascade of painful rewrites. There’s a better way.

OPEA’s Solution: Microservices for AI Workflows

The Open Platform for Enterprise AI (OPEA) is an open source framework to facilitate the production and deployment of enterprise-ready GenAI workloads.

OPEA’s approach is straightforward: take those AI workflow components and turn them into standalone microservices, then use a service composer to orchestrate them into a megaservice that coordinates your end-to-end use case. So instead of building a monolithic retrieval-augmented generation (RAG) application, you’re composing discrete services that can be developed, deployed, and scaled independently.
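To make the composer idea concrete, here is a minimal Python sketch (3.9+, standard library only) of a service composer that wires services into a directed graph and runs them in dependency order. The `ServiceComposer` class and its `add`/`flow_to` methods are hypothetical names chosen for illustration, not OPEA’s API, and real OPEA services run as separate containers reached over the network rather than as in-process functions.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List

# A "service" here is any callable that takes a request dict and returns a result dict.
Service = Callable[[dict], dict]


class ServiceComposer:
    """Hypothetical composer: register services, wire edges, run in dependency order."""

    def __init__(self) -> None:
        self.services: Dict[str, Service] = {}
        self.upstream: Dict[str, List[str]] = {}  # service name -> names it depends on

    def add(self, name: str, service: Service) -> "ServiceComposer":
        self.services[name] = service
        self.upstream.setdefault(name, [])
        return self  # return self so calls can be chained

    def flow_to(self, src: str, dst: str) -> "ServiceComposer":
        if src not in self.services or dst not in self.services:
            raise KeyError(f"unknown service in edge {src!r} -> {dst!r}")
        self.upstream[dst].append(src)
        return self

    def run(self, request: dict) -> dict:
        outputs: Dict[str, dict] = {}
        for name in TopologicalSorter(self.upstream).static_order():
            # Each service sees the original request plus its upstream services' outputs.
            inputs = dict(request)
            for parent in self.upstream[name]:
                inputs.update(outputs[parent])
            outputs[name] = self.services[name](inputs)
        # The final answer is the output of the one service nothing else depends on.
        sinks = [n for n in self.services if not any(n in ups for ups in self.upstream.values())]
        if len(sinks) != 1:
            raise ValueError(f"expected exactly one final service, found {sinks}")
        return outputs[sinks[0]]


composer = (
    ServiceComposer()
    .add("embed", lambda r: {"vector": [len(w) for w in r["query"].split()]})
    .add("retrieve", lambda r: {"docs": [f"doc near {r['vector']}"]})
    .add("llm", lambda r: {"answer": f"Answer to {r['query']!r} using {len(r['docs'])} doc(s)"})
    .flow_to("embed", "retrieve")
    .flow_to("retrieve", "llm")
)
result = composer.run({"query": "What is OPEA?"})
print(result)
```

Because each service only sees a dict in and a dict out, replacing the `embed` lambda with a different implementation would not require touching `retrieve` or `llm`.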

Let’s examine the core components of the OPEA architecture (microservices, megaservices, and gateways) and explore how they interrelate using a theater production analogy.

Microservices: The Fundamental Building Blocks

In OPEA, microservices are specialized, containerized components, each built to handle a specific task within a GenAI application. These tasks might include text embedding, document retrieval, reranking, or communicating with LLMs. By breaking functionality into discrete units, microservices bring modularity, scalability, and the flexibility to integrate with multiple providers.

Think of microservices like members of a theater production: actors, lighting technicians, set designers—each playing a distinct, well-defined role that contributes to the overall performance.

Check out the GenAIComps repository for OPEA’s collection of microservices.
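The sketch below illustrates the "one task, many providers" idea: a single embedding microservice with a fixed request/response contract, backed by interchangeable providers selected by configuration. `EmbeddingMicroservice`, `HashEmbedding`, and `LengthEmbedding` are hypothetical toy classes, not GenAIComps code; a real embedding service would call an actual model and run in its own container.

```python
import hashlib
import math
from typing import Dict, List, Protocol


class EmbeddingProvider(Protocol):
    """Contract every embedding backend must satisfy (hypothetical)."""

    def embed(self, text: str) -> List[float]: ...


class HashEmbedding:
    """Toy provider: deterministic pseudo-embedding built from a SHA-256 digest."""

    def __init__(self, dim: int = 8) -> None:
        self.dim = dim

    def embed(self, text: str) -> List[float]:
        digest = hashlib.sha256(text.encode("utf-8")).digest()
        return [b / 255 for b in digest[: self.dim]]


class LengthEmbedding:
    """Toy provider standing in for a second vendor: character and word counts."""

    def embed(self, text: str) -> List[float]:
        return [float(len(text)), float(len(text.split()))]


class EmbeddingMicroservice:
    """Handles one task only (text -> vector); the backend is chosen by configuration."""

    def __init__(self, providers: Dict[str, EmbeddingProvider], provider: str) -> None:
        self.backend = providers[provider]

    def handle(self, request: dict) -> dict:
        vector = self.backend.embed(request["text"])
        # L2-normalize so downstream services get a consistent format whatever the backend.
        norm = math.sqrt(sum(v * v for v in vector)) or 1.0
        return {"embedding": [v / norm for v in vector]}


providers = {"hash": HashEmbedding(dim=8), "length": LengthEmbedding()}
svc_a = EmbeddingMicroservice(providers, provider="hash")
svc_b = EmbeddingMicroservice(providers, provider="length")
req = {"text": "swap me"}
print(len(svc_a.handle(req)["embedding"]), len(svc_b.handle(req)["embedding"]))
```

Callers of `handle` see the same `{"embedding": [...]}` shape regardless of which provider is configured, which is what makes swapping providers a configuration change rather than a rewrite.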

Megaservices: Orchestrators of GenAI Microservice Workflows

A megaservice in OPEA is a dedicated microservice that orchestrates the execution of a GenAI workflow based on a blueprint. Rather than functioning as a single monolithic application, a megaservice coordinates multiple microservices to handle complex tasks in a modular, distributed way.

Continuing our theater production analogy, a megaservice is the complete performance. It brings together all the cast and crew—each microservice—under the direction of a script and director. Instead of functioning as one performer doing everything, the full show (e.g., a ChatQnA RAG application) is a coordinated effort to deliver a compelling result to the audience (end user).

OPEA includes ready-made megaservices, each tailored to a different GenAI workflow. Typically, users can leverage existing megaservices without modification. Creating or customizing a megaservice is only necessary for specialized scenarios, such as use cases that require significantly different workflows, custom orchestration logic, or integration with domain-specific components.

For instance, ChatQnA is an example of a megaservice that orchestrates vector embedding, retrieval, reranking, and LLM microservices to process a user’s query and return a coherent answer grounded in a specific knowledge base. Another megaservice, GraphRAG, takes a different approach by using knowledge graphs instead of traditional vector-based retrieval, enabling more structured reasoning that can trace relationships between concepts and entities.
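As a rough illustration of what a ChatQnA-style megaservice does, the sketch below chains four stand-in microservices (embedding, retrieval, reranking, and LLM) over a tiny in-memory knowledge base. Every function here is a hypothetical toy: the "embedding" is a bag-of-words vector, the "reranker" counts shared words, and the "LLM" just formats a grounded prompt. In an actual OPEA deployment each step would be a separate service called over the network.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical toy knowledge base.
DOCS = [
    "OPEA microservices handle tasks such as embedding, retrieval, and reranking.",
    "A megaservice orchestrates microservices into an end-to-end GenAI workflow.",
    "The gateway is the single entry point that routes client requests.",
]


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z]+", text.lower())


def embed(text: str) -> Counter:
    """Embedding stand-in: a bag-of-words term-frequency vector."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query_vec: Counter, k: int = 2) -> List[str]:
    """Retriever stand-in: top-k documents by cosine similarity."""
    return sorted(DOCS, key=lambda d: cosine(query_vec, embed(d)), reverse=True)[:k]


def rerank(query: str, docs: List[str]) -> List[str]:
    """Reranker stand-in: reorder candidates by how many distinct query words they contain."""
    q = set(tokenize(query))
    return sorted(docs, key=lambda d: len(q & set(tokenize(d))), reverse=True)


def llm(query: str, context: List[str]) -> str:
    """LLM stand-in: a real deployment would send this grounded prompt to a model server."""
    return f"Q: {query}\nContext: {context[0]}"


def chatqna(query: str) -> Dict[str, object]:
    """Megaservice: call each microservice in order and pass results along."""
    candidates = retrieve(embed(query))
    reranked = rerank(query, candidates)
    return {"context": reranked, "answer": llm(query, reranked)}


result = chatqna("What does a megaservice orchestrate?")
print(result["answer"])
```

A GraphRAG-style megaservice would keep the same outer shape but swap `retrieve` for a step that queries a knowledge graph.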

Gateway: The Unified Entry Point

The gateway serves as the single entry point for clients interacting with megaservices. It handles request routing, authentication, and load balancing, simplifying client interactions with the system. A widely used open source option in this role is Nginx, a reverse proxy that can provide some of this gateway functionality.

Conceptually, the gateway is like the front-of-house team: handling ticketing (authentication), directing patrons to their seats (routing), and managing capacity to prevent overcrowding (load balancing).
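To show how the three gateway duties fit together, here is a toy in-process gateway in Python that checks a token, routes by path, and spreads requests across replicas round-robin. The `Gateway` class, the `/chatqna` path, and the token value are hypothetical; in practice you would more likely configure a reverse proxy such as Nginx than write your own.

```python
import itertools
from typing import Callable, Dict, Iterator, List, Set

Handler = Callable[[dict], dict]


class Gateway:
    """Hypothetical gateway: token check, path routing, round-robin load balancing."""

    def __init__(self, valid_tokens: Set[str]) -> None:
        self.valid_tokens = valid_tokens
        self.routes: Dict[str, Iterator[Handler]] = {}

    def register(self, path: str, replicas: List[Handler]) -> None:
        # itertools.cycle hands out replicas in turn: simple round-robin balancing.
        self.routes[path] = itertools.cycle(replicas)

    def handle(self, path: str, token: str, payload: dict) -> dict:
        if token not in self.valid_tokens:  # authentication ("ticketing")
            return {"status": 401, "error": "unauthorized"}
        if path not in self.routes:  # routing ("directing patrons to seats")
            return {"status": 404, "error": f"no route for {path}"}
        backend = next(self.routes[path])  # load balancing ("managing capacity")
        return {"status": 200, "body": backend(payload)}


def make_replica(replica_id: int) -> Handler:
    # Each replica stands in for one running instance of a megaservice.
    return lambda payload: {"replica": replica_id, "echo": payload["messages"]}


gw = Gateway(valid_tokens={"secret-token"})
gw.register("/chatqna", [make_replica(0), make_replica(1)])

responses = [gw.handle("/chatqna", "secret-token", {"messages": "hi"}) for _ in range(3)]
print([r["body"]["replica"] for r in responses])  # [0, 1, 0]
print(gw.handle("/chatqna", "wrong-token", {})["status"])  # 401
print(gw.handle("/docsum", "secret-token", {})["status"])  # 404
```

Rejected and unroutable requests never reach a backend, so they do not advance the round-robin cycle.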

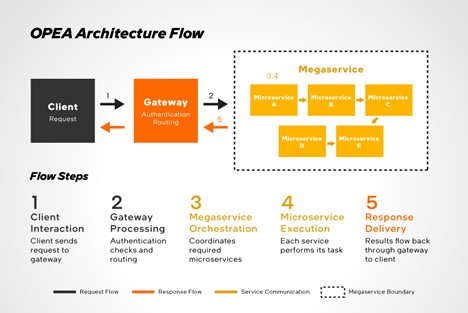

How OPEA Components Interact

The interaction between these components can be summarized as follows:

- Client Interaction: A client sends a request to the system via the gateway.

- Gateway Processing: The gateway receives the request, performs necessary checks (like authentication), and routes it to the appropriate megaservice.

- Megaservice Orchestration: The megaservice orchestrates the required microservices to process the request.

- Microservice Execution: Each microservice performs its designated task and passes the result along the workflow.

- Response Delivery: The final output is sent back through the megaservice to the gateway, which then returns the response to the client.
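The five steps above can be traced with a small Python sketch in which each hop appends to a shared log. All function names are hypothetical stand-ins and the "authentication" is a single hard-coded token check; the point is only the order in which the request and response travel.

```python
from typing import List

trace: List[str] = []


# Hypothetical microservices: each performs one task and passes its result along.
def embed(data: dict) -> dict:
    trace.append("microservice: embed")
    return {**data, "vector": [float(len(data["query"]))]}


def retrieve(data: dict) -> dict:
    trace.append("microservice: retrieve")
    return {**data, "docs": ["doc-1"]}


def generate(data: dict) -> dict:
    trace.append("microservice: llm")
    return {**data, "answer": f"answer grounded in {data['docs'][0]}"}


def megaservice(request: dict) -> dict:
    trace.append("megaservice: orchestrate")
    data = dict(request)
    for step in (embed, retrieve, generate):
        data = step(data)
    trace.append("megaservice: return result")
    return {"answer": data["answer"]}


def gateway(request: dict, token: str) -> dict:
    trace.append("gateway: check + route")
    if token != "valid":
        return {"error": "unauthorized"}
    response = megaservice(request)
    trace.append("gateway: return response")
    return response


def client() -> dict:
    trace.append("client: send request")
    return gateway({"query": "What is OPEA?"}, token="valid")


response = client()
print(response)  # {'answer': 'answer grounded in doc-1'}
print(trace)
```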

Start Building Your GenAI Apps with Megaservices

You can find the following core repositories on OPEA’s GitHub page:

- GenAIComps: The library of reusable microservices

- GenAIExamples: Full megaservice implementations for common GenAI use cases

For example, if you’re building a document summarization system, the DocSum example is a great starting point. You can deploy it locally with Docker, explore how the microservices interact, and customize it to fit your needs. Since the services communicate through standard APIs, you can mix OPEA components with your own custom services or third-party tools.
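Because the contract between services is HTTP and JSON, a custom service does not need to know anything about OPEA to take part in a workflow. The sketch below, assuming only the Python standard library, starts a hypothetical word-count service on a free local port and calls it the way any other service might. The `/v1/wordcount` path and the `{"text": ...}` request shape are illustrative assumptions (the handler accepts any POST path), not an OPEA interface.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class WordCountService(BaseHTTPRequestHandler):
    """Hypothetical custom microservice: POST {"text": ...} -> {"words": n}."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"words": len(payload.get("text", "").split())}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging for the demo


def call_service(url: str, payload: dict) -> dict:
    """Any caller (a megaservice, a script, a third-party tool) only needs HTTP + JSON."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())


server = ThreadingHTTPServer(("127.0.0.1", 0), WordCountService)  # port 0: OS picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/v1/wordcount"
result = call_service(url, {"text": "mix OPEA components with your own services"})
print(result)  # {'words': 7}
server.shutdown()
server.server_close()
```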

Understanding the roles of microservices, megaservices, and gateways in OPEA’s architecture is crucial for building scalable, maintainable, and efficient GenAI applications. By modularizing functionality, orchestrating complex workflows, and managing client interactions, OPEA provides a robust framework for enterprise AI applications.

For more detailed examples and deployment guides, refer to the GenAIExamples repository.

Finally, if you found this article valuable, please share it with your network.

Acknowledgments

The author thanks Jack Erickson, Eze Lanza, and Nikki McDonald for providing feedback on an earlier draft of this work.

About the Author

Ehssan Khan, Senior AI Software Solutions Engineer, Intel

Dr. Ehssan Khan is a Senior AI Software Solutions Engineer, currently specializing in enabling developers through creating and demonstrating Intel’s AI tools. His recent work includes building language models powered by synthetic data and delivering training sessions to both industry professionals and academic researchers.