I am pleased to announce the latest Intel® oneAPI tools release (2022.2). This updates oneAPI 2022, which I described previously.

The oneAPI initiative, and its open specification, are focused on enabling an open, multiarchitecture world with strong support for software developers—a world of open choice without sacrificing performance or functionality.

These oneAPI tools are based on decades of Intel software products that have been used globally to build myriad applications, and they’re built from multiple open-source projects to which Intel contributes heavily.

Our oneAPI tools emphasize support for standards including C, C++, Fortran, MPI, OpenMP, and SYCL. We also provide support for accelerating Python (an open-source de facto standard).

C++, Fortran, OpenMP, MPI, SYCL, and more

The compiler updates bring numerous performance enhancements, bug fixes, and added support for the latest standards, including:

- Intel® oneAPI DPC++/C++ (LLVM-based) Compiler enhances OpenMP* 5.1 compliance and improves the performance of OpenMP reductions for compute offload. A complete feature-by-feature rundown is available online for both our C/C++ compiler and our Fortran compiler (note: the compiler versions to look for are '2022.1', since they originally started at '2022.0'; this is where I crack a joke about Fortran vs. C/C++ array numbering, or American vs. European building floor numbering).

- Intel® oneAPI DPC++/C++ (LLVM-based) Compiler enhances SYCL 2020 support with numerous features from the very active open-source project, included prebuilt in this oneAPI update. A full rundown of feature status is available online; expect the newest features to be labeled 2022.1, as mentioned previously.

- Our LLVM-based Fortran compiler is closing in on finishing its five-year implementation journey to fully reinvent Intel Fortran goodness within the LLVM framework. This update adds Fortran support for parameterized derived types, F2018 IEEE Compare, VAX structures, and OpenMP 5.0 Declare Mapper for scalars.

- Intel® MPI Library enables better resource planning and control at an application level with GPU pinning, plus adds multi-rail support to improve application internode communication bandwidth.

- Intel® oneAPI Collective Communications Library (oneCCL) now supports Intel® Instrumentation and Tracing Technology profiling, opening new insights with tools such as the Intel® VTune™ Profiler.

- Intel® oneAPI Math Kernel Library (oneMKL) extends MKL_VERBOSE support to the transpose domain on CPUs and to BLAS GPU functionality with DPC++ and OpenMP offload.
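For readers new to OpenMP offload, the "reductions for compute offload" mentioned in the compiler notes above are loops like the one below. This is a minimal, generic sketch (the function `sum_of_squares` is our own illustrative name, not something from the release): each device thread accumulates a partial sum and the runtime combines them into `sum`. It says nothing about how the compiler's improved reduction performance is achieved.

```cpp
#include <vector>

// Sum of squares computed with an OpenMP offload reduction.
// If no device is available, the target region runs on the host; if the code
// is built without OpenMP, the pragma is ignored and the loop runs serially,
// giving the same result.
double sum_of_squares(const std::vector<double>& v) {
    const double* data = v.data();
    const long n = static_cast<long>(v.size());
    double sum = 0.0;
    // map(to: ...) copies the input array to the device.
    // map(tofrom: sum) brings the reduced value back to the host (needed for
    // OpenMP 4.5 compilers; OpenMP 5.x also maps it implicitly here).
    // reduction(+: sum) combines the per-thread partial sums.
    #pragma omp target teams distribute parallel for \
        map(to : data[0:n]) map(tofrom : sum) reduction(+ : sum)
    for (long i = 0; i < n; ++i) {
        sum += data[i] * data[i];
    }
    return sum;
}
```

With the oneAPI compiler, offload to an Intel GPU is typically enabled with flags such as `icpx -fiopenmp -fopenmp-targets=spir64`; check the compiler documentation for your version.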

SYCLomatic

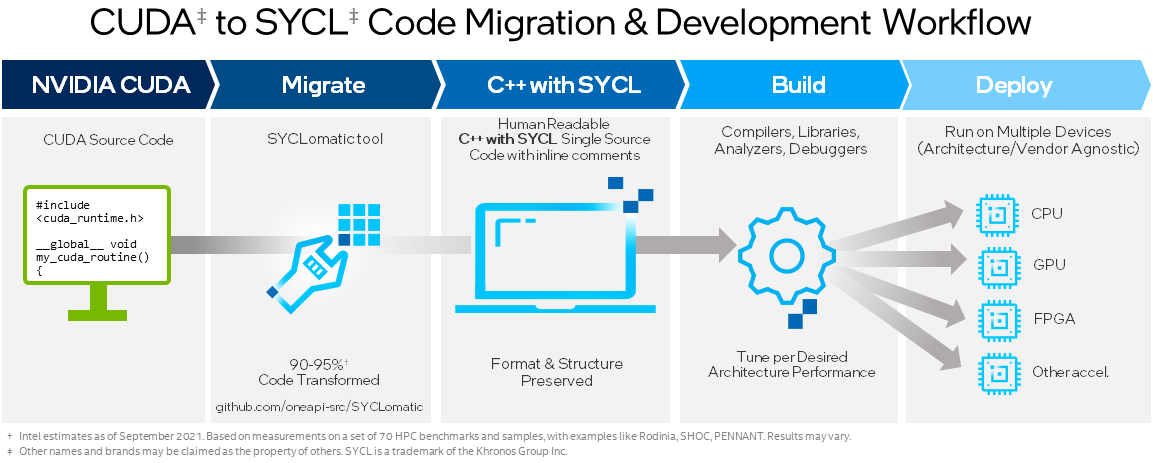

To support migration of NVIDIA CUDA code to the open world of C++ and SYCL, Intel has open sourced the migration tool (Intel® DPC++ Compatibility Tool) as the new SYCLomatic project. The tool helps developers more easily port their code to C++ with SYCL to accelerate cross-architecture programming for heterogeneous architectures. The SYCLomatic project enables community collaboration to advance adoption of the SYCL standard, a key step in freeing developers from a single-vendor proprietary ecosystem.

The project maintainers encourage contributions to the SYCLomatic project, and the oneAPI distribution from Intel will continue to offer a supported prebuilt version of the tool under the Intel® DPC++ Compatibility Tool name.

AI Accelerated

AI workloads continue to run faster thanks to performance improvements, including:

- Intel® Extension for TensorFlow with faster model loading and support for pluggable hardware acceleration devices.

- Additional functionality and productivity for Intel® Extension for Scikit-learn and Intel® Distribution of Modin through new features, algorithms, and performance improvements such as Minkowski and Chebyshev distances in kNN and acceleration of the t-SNE algorithm.

- Acceleration for AI deployments with quantization and accuracy controls in the Intel® Neural Compressor, making great use of low-precision inferencing across supported deep learning frameworks.

- Support for new PyTorch model inference and training workloads via Model Zoo for Intel® Architecture.
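For readers less familiar with the kNN distance options mentioned above: the Minkowski distance of order p is (Σ|xᵢ − yᵢ|^p)^(1/p), and the Chebyshev distance max|xᵢ − yᵢ| is its limit as p grows. The brute-force sketch below only illustrates these definitions and how the metric choice can change which neighbor counts as "nearest"; the function names are our own, and this is not how Intel® Extension for Scikit-learn implements or accelerates kNN. In scikit-learn itself, these metrics are selected through the `metric` and `p` parameters of estimators such as `KNeighborsClassifier`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Minkowski distance of order p: (sum_i |x_i - y_i|^p)^(1/p).
// p = 1 is Manhattan distance, p = 2 is Euclidean distance.
double minkowski(const std::vector<double>& x, const std::vector<double>& y, double p) {
    assert(x.size() == y.size());
    assert(p >= 1.0);  // below 1 the formula is no longer a metric
    double acc = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) acc += std::pow(std::fabs(x[i] - y[i]), p);
    return std::pow(acc, 1.0 / p);
}

// Chebyshev distance: max_i |x_i - y_i|, the limit of Minkowski as p -> infinity.
double chebyshev(const std::vector<double>& x, const std::vector<double>& y) {
    assert(x.size() == y.size());
    double best = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) best = std::max(best, std::fabs(x[i] - y[i]));
    return best;
}

// Brute-force 1-nearest-neighbor: index of the training point closest to
// `query` under the distance function `dist` (first one wins on ties).
template <typename Dist>
std::size_t nearest(const std::vector<std::vector<double>>& train,
                    const std::vector<double>& query, Dist dist) {
    assert(!train.empty());
    std::size_t best_i = 0;
    double best_d = dist(train[0], query);
    for (std::size_t i = 1; i < train.size(); ++i) {
        const double d = dist(train[i], query);
        if (d < best_d) { best_d = d; best_i = i; }
    }
    return best_i;
}
```

For example, with training points (3, 3) and (4, 0) and query (0, 0), Euclidean distance (Minkowski with p = 2) picks (4, 0), while Chebyshev distance picks (3, 3).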

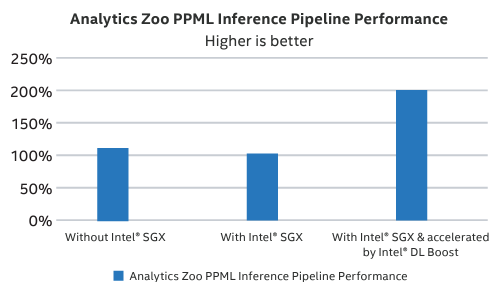

Security matters! The Intel® oneAPI Deep Neural Network Library (oneDNN) expands its use of Intel® SGX. An included demonstration sample shows how to use it. Privacy-Preserving Machine Learning is not only accelerated, it can also be protected.

See (Visualize) Better

Intel® OSPRay and Intel® OSPRay Studio add support for multi-segment deformation motion blur for mesh geometry, plus new light features and optimizations.

Intel® Open Volume Kernel Library adds support for an indexToObject affine transform and constant cell data for structured volumes. The Intel® Implicit SPMD Program Compiler Run Time (ISPCRT) library is now included by default, so a separate download is no longer needed for this indispensable capability.
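In general terms, an index-to-object transform like the one named above is an affine map from a structured volume's voxel index space to object space: x = L·idx + t, with a 3×3 linear part L and a translation t. The sketch below shows only that math; the `Affine3` type and `index_to_object` function are hypothetical names for illustration and are not the Open VKL API, whose exact parameter layout is not reproduced here.

```cpp
#include <array>

// Hypothetical affine transform (not the Open VKL API): x = L * idx + t.
struct Affine3 {
    std::array<std::array<double, 3>, 3> linear;  // row-major 3x3 linear part L
    std::array<double, 3> translation;            // translation t
};

// Map a voxel index coordinate (i, j, k) to an object-space position.
std::array<double, 3> index_to_object(const Affine3& xf, const std::array<double, 3>& idx) {
    std::array<double, 3> out{};
    for (int r = 0; r < 3; ++r) {
        out[r] = xf.translation[r];
        for (int c = 0; c < 3; ++c) out[r] += xf.linear[r][c] * idx[c];
    }
    return out;
}
```

A regular grid described by an origin o and a per-axis spacing s is the special case L = diag(s), t = o; a general 3×3 linear part additionally allows rotated or sheared grids.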

Support for the New Player in the Game

Intel® Arc™ hardware offers an exciting new product line of discrete GPUs for consumer high-performance graphics. The first laptops equipped with these stunningly powerful GPUs are available now.

Intel® oneAPI Video Processing Library (oneVPL) uses dedicated AV1 hardware to deliver a 50X performance boost compared with software encode.

Other Intel oneAPI tools also provide valuable software development support including deep learning oneDNN and performance tuning insights with the VTune Profiler. Some HPC users will find this a useful development testing ground for single-precision codes (there is no hardware acceleration for double precision in the first Intel® Arc™ hardware).

Intel® Extension for TensorFlow uses oneDNN to harness the power of this exciting new GPU, as does the separately available, oneAPI-powered Intel® Distribution of OpenVINO Toolkit.

Get oneAPI with Updates Now

You can get the updates in the same places you got your original downloads: the Intel oneAPI tools on Intel Developer Zone, via containers, apt-get, Anaconda, and other distributions.

The tools are also accessible on the Intel DevCloud, which includes very useful oneAPI training resources. This in-the-cloud environment is a fantastic place to develop, test, and optimize code, for free, across an array of Intel CPUs and accelerators without having to set up your own systems.

About oneAPI

oneAPI is an open, unified, and cross-architecture programming model for heterogeneous computing. oneAPI embraces an open, multivendor, multiarchitecture approach to programming tools (libraries, compilers, debuggers, analyzers, frameworks, etc.). Based on standards, the programming model simplifies software development and delivers uncompromised performance for accelerated compute without proprietary lock-in, while enabling the integration of legacy code. A recent post by Sanjiv Shah discussed specification 1.1 and the ‘coming soon’ 1.2 specification. Learn more at oneapi.io.