The US Defense Advanced Research Projects Agency* (DARPA*) ran a Cyber Grand Challenge in 2016, where automated cyber-attack and cyber-defense systems were pitted against each other to drive progress in autonomous cyber-security. Simics* virtual platforms automated the vetting of competitors’ submissions, as described in the paper Cyber Grand Challenge (CGC) Monitor - A Vetting System for the DARPA Cyber Grand Challenge, from the 2018 Digital Forensic Research Workshop (DFRWS).

The researchers built an impressive automated vetting system that checked whether submissions to the CGC were competing honestly or were instead trying to circumvent or subvert the competition infrastructure (as hackers might be expected to do). It is an excellent example of what can be done with the Simics virtual platform framework beyond the basics of running code and modeling hardware platforms.

The Cyber Grand Challenge

The CGC setup had automated hacking systems compete against each other in a game of finding weaknesses in programs, exploiting them, and patching the programs to stop other teams from exploiting the same weaknesses. A good write-up of what the Cyber Grand Challenge looked like from a competitor's perspective can be found in the Phrack article Cyber Grand Shellphish by the Shellphish team from the University of California at Santa Barbara* (UCSB*).

To reduce the problem space enough to make the challenge feasible, the CGC used a custom simplified operating system, the DARPA Experimental Cyber Research Evaluation Environment (DECREE). The programs to be examined and possibly exploited all ran on top of DECREE, which had only seven system calls (syscalls). Still, those calls included support for networking and allowed for software that was much more sophisticated than just toy examples.
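With only seven calls, a monitor can decode every syscall it sees into a readable record. The following is a minimal Python sketch of such a decoder. The syscall numbers, argument names, and calling convention (number in `eax`, arguments in `ebx` through `edi`, entered via `int 0x80`) follow the public libcgc headers as best we recall them and should be treated as an assumption; `decode_syscall` and `SyscallRecord` are hypothetical helpers, not part of the CGC monitor.

```python
from typing import Dict, NamedTuple, Tuple

# ASSUMPTION: numbering and argument names as recalled from the public libcgc
# headers; syscall number in eax, arguments in ebx, ecx, edx, esi, edi.
DECREE_SYSCALLS: Dict[int, Tuple[str, Tuple[str, ...]]] = {
    1: ("_terminate", ("status",)),
    2: ("transmit", ("fd", "buf", "count", "tx_bytes")),
    3: ("receive", ("fd", "buf", "count", "rx_bytes")),
    4: ("fdwait", ("nfds", "readfds", "writefds", "timeout", "readyfds")),
    5: ("allocate", ("length", "is_X", "addr")),
    6: ("deallocate", ("addr", "length")),
    7: ("random", ("buf", "count", "rnd_bytes")),
}

ARG_REGS = ("ebx", "ecx", "edx", "esi", "edi")


class SyscallRecord(NamedTuple):
    name: str
    args: Dict[str, int]


def decode_syscall(regs: Dict[str, int]) -> SyscallRecord:
    """Turn a register snapshot taken at kernel entry into a named record."""
    number = regs["eax"]
    if number not in DECREE_SYSCALLS:
        # Anything outside the seven valid calls is itself worth flagging.
        return SyscallRecord(f"invalid({number})", {})
    name, arg_names = DECREE_SYSCALLS[number]
    # Pair each argument name with the register that carries it.
    args = {arg: regs[reg] for arg, reg in zip(arg_names, ARG_REGS)}
    return SyscallRecord(name, args)
```

For example, a snapshot with `eax = 2` decodes to a `transmit` record whose `count` field is taken from `edx`.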

Indeed, the programs used as targets for the automated hacking systems, known as Challenge Binaries (CB), were far from trivial. The CBs included programs like instruction-set simulators using just-in-time compilation techniques. Thus, while the environment was simplified, the problem set was realistic enough to provide a true grand challenge.

The competitors’ automated tools analyzed the CBs using techniques like fuzzing, abstract interpretation, binary instrumentation, symbolic execution, decompilation, and anything else they could think of. When weaknesses were found, competing tools constructed exploits to crash the CB or to leak a secret contained in the program at runtime (also known as capturing the flag). The tools also created patched binaries that were supposedly safe against the exploits they found, to show how cyber defense can be automated. Each patched CB was then given to the other teams to attack again, round by round.

Automated Vetting with Simics*

The Simics-based vetting tool described in the DFRWS paper checked whether any competitor-provided binaries tried to attack the competition infrastructure rather than follow the rules of the game. While the scored competition rounds were run on physical hardware, Simics was used as a separate check on the competitor-provided binaries. The Simics-based tool ran the competition binaries and tracked the execution of the software on the target system to find suspicious behaviors and signs of compromise, such as writes to memory that should not be written.

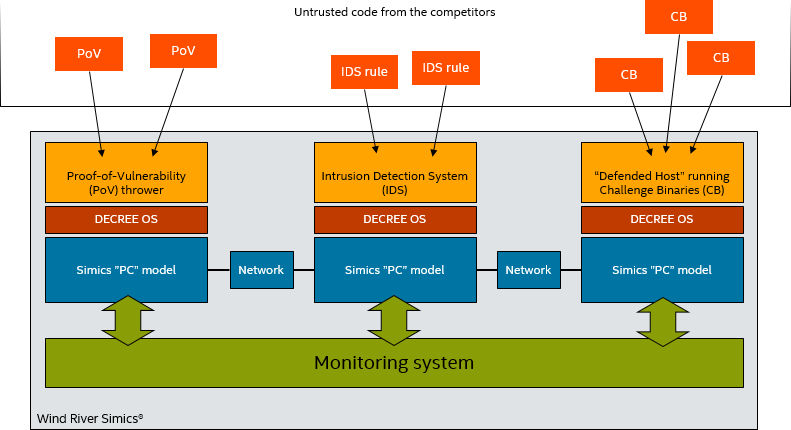

The setup used for the vetting modeled the hardware setup used in the CGC, with some simplifications. The Simics model used three machines in two networks, inside a single Simics simulation: a Proof of Vulnerability (PoV) Thrower machine that generated network packets to exploit a CB; an Intrusion Detection System (IDS) that ran network rules to stop attacks; and a Defended Host that ran the CBs.

Figure 1: The vetting monitor setup

The monitoring system watched what went on inside of each target machine. It implemented its own operating-system awareness (OSA) for the DECREE OS to determine the currently running process and to notify the monitor when software made a syscall. The OSA used several types of breakpoints in order to track the execution of the operating system without imposing significant overhead on the Simics simulation: execution of code; access to data; processor exceptions; and change in the processors’ execution modes. This is a good example of what can be built on top of the fundamental abilities and primitives exposed by Simics and its API.
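To make the idea of breakpoint-driven OS awareness concrete, here is a hypothetical toy in Python. It is not the CGC monitor's code and does not use the Simics API. It assumes a simulator layer that reports breakpoint hits as `Event` objects, and shows how three of the listed breakpoint types (data access, processor exception, mode change) can answer "which process is running?" and "did it just make a syscall?". `CURRENT_TASK_ADDR`, the task-to-pid table, and the ring-number convention are all illustrative assumptions. The `int 0x80` vector reflects DECREE's i386 Linux heritage.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

CURRENT_TASK_ADDR = 0xC1000000  # HYPOTHETICAL address of the kernel's "current task" pointer
SYSCALL_VECTOR = 0x80           # interrupt vector used for system calls


@dataclass
class Event:
    """One breakpoint hit reported by a (hypothetical) simulator layer."""
    kind: str                     # "data_write", "exception" or "mode_change"
    addr: int = 0                 # accessed address, for data breakpoints
    value: int = 0                # written value, exception vector, or new ring
    regs: Dict[str, int] = field(default_factory=dict)


SyscallListener = Callable[[Optional[int], Dict[str, int]], None]


class DecreeOSA:
    """Tracks the running process and reports syscalls to listeners."""

    def __init__(self, task_to_pid: Dict[int, int]) -> None:
        self.task_to_pid = task_to_pid    # task-struct address -> pid
        self.current_pid: Optional[int] = None
        self.user_mode = False
        self.listeners: List[SyscallListener] = []

    def on_event(self, ev: Event) -> None:
        if ev.kind == "data_write" and ev.addr == CURRENT_TASK_ADDR:
            # Context switch: the kernel stored a new "current task" pointer.
            self.current_pid = self.task_to_pid.get(ev.value)
        elif ev.kind == "exception" and ev.value == SYSCALL_VECTOR and self.user_mode:
            # A software interrupt raised from user mode is a syscall.
            for listener in self.listeners:
                listener(self.current_pid, ev.regs)
        elif ev.kind == "mode_change":
            # Convention in this sketch: value 3 = user mode (ring 3), 0 = kernel.
            self.user_mode = ev.value == 3
```

Because the OSA only reacts to breakpoint callbacks instead of inspecting every instruction, the simulation runs at full speed between events, which is the point the paragraph above makes about overhead.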

The system monitored target system behavior whenever untrusted applications (PoVs, IDS rules, and CBs) were running. Monitoring was limited in this way to optimize overall simulation performance. To detect whether untrusted software had managed to compromise the DECREE OS, four kinds of activity were monitored:

Kernel return-oriented programming (ROP): checked by putting breakpoints on all return instructions in the kernel, and then checking that each return instruction was jumping back to a matching call instruction. This kind of breakpoint can only be implemented in a virtual platform.

Kernel page table modifications: detected by data access breakpoints on the memory area storing the page tables. A breakpoint covering such a large area is not feasible with hardware breakpoints, so it too required a virtual platform.

Credential changes: monitored by data access breakpoints on the credential part of the thread data structures in the DECREE OS.

Unexpected execution locations: kernel code that was not expected to run during the execution of untrusted code was identified by hand, and execution breakpoints were put on that code.
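The four checks reduce to simple logic once the simulator delivers the right breakpoint callbacks. The Python toy below is a hypothetical sketch, not the monitor's implementation: the callback names, address ranges, and alert strings are made up. For the ROP check it swaps in a shadow call stack, which is one common way to match returns to calls. A single shadow stack ignores interrupts and context switches, and the page-table check flags every write, so a real monitor would need to handle the kernel's own legitimate updates.

```python
from typing import List, Optional, Set, Tuple

Range = Tuple[int, int]  # half-open address range [start, end)


class KernelIntegrityChecks:
    """Collects alerts from four hypothetical breakpoint callbacks."""

    def __init__(self, page_tables: Range, cred_ranges: List[Range],
                 unexpected_code: Set[int]) -> None:
        self.page_tables = page_tables
        self.cred_ranges = cred_ranges
        self.unexpected_code = unexpected_code
        self.shadow_stack: List[int] = []  # return addresses pushed by kernel calls
        self.alerts: List[str] = []

    @staticmethod
    def _inside(addr: int, rng: Range) -> bool:
        return rng[0] <= addr < rng[1]

    # 1. Kernel ROP: every kernel ret must return just after a matching call.
    def on_kernel_call(self, call_addr: int, call_len: int) -> None:
        self.shadow_stack.append(call_addr + call_len)

    def on_kernel_ret(self, target: int) -> None:
        expected: Optional[int] = self.shadow_stack.pop() if self.shadow_stack else None
        if target != expected:
            self.alerts.append(f"ROP: ret to {target:#x} has no matching call")

    # 2 and 3. Page tables and credentials: watch whole address ranges for writes.
    def on_kernel_write(self, addr: int) -> None:
        if self._inside(addr, self.page_tables):
            self.alerts.append(f"page table write at {addr:#x}")
        if any(self._inside(addr, r) for r in self.cred_ranges):
            self.alerts.append(f"credential write at {addr:#x}")

    # 4. Unexpected execution: hand-picked kernel code that must not run.
    def on_kernel_exec(self, pc: int) -> None:
        if pc in self.unexpected_code:
            self.alerts.append(f"unexpected kernel code executed at {pc:#x}")
```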

This use of Simics required the researchers to fully understand the environment they created and to know what to monitor. It would be pointless to try to build a generic monitor for something like this: it is all about doing the right thing in a given operating-system context (and turning off monitoring when untrusted code is not running, to reduce the overall performance impact). The monitor also recorded all syscalls made, as well as any suspicious activity it detected, for later analysis.
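Switching monitoring on and off around untrusted code, and logging what happens while it is on, can be sketched as follows. This is a hypothetical Python illustration: the process names, the `enable`/`disable` hooks (standing in for installing and removing breakpoints), and the JSON-lines log format are assumptions, not details from the paper.

```python
import json
from typing import Callable, Dict, List, Optional, Set


class GatedMonitor:
    """Turns expensive breakpoints on only while an untrusted process runs."""

    def __init__(self, untrusted: Set[str],
                 enable: Callable[[], None], disable: Callable[[], None]) -> None:
        self.untrusted = untrusted          # HYPOTHETICAL process names, e.g. {"cb", "pov"}
        self._enable = enable
        self._disable = disable
        self.active = False
        self.current: Optional[str] = None
        self.records: List[Dict[str, object]] = []

    def on_process_switch(self, name: str) -> None:
        self.current = name
        wanted = name in self.untrusted
        if wanted and not self.active:
            self._enable()    # e.g. install the integrity breakpoints
        elif not wanted and self.active:
            self._disable()   # remove them so trusted code runs at full speed
        self.active = wanted

    def record(self, kind: str, **details: object) -> None:
        """Log a syscall or suspicious event, but only while monitoring is active."""
        if self.active:
            self.records.append({"kind": kind, "process": self.current, **details})

    def dump(self) -> str:
        """One JSON object per line, convenient for offline analysis."""
        return "\n".join(json.dumps(r, sort_keys=True) for r in self.records)
```

Note that switching between two untrusted processes leaves the breakpoints installed, so the enable/disable cost is paid only at trust boundaries.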

Attaching the Interactive Disassembler (IDA)

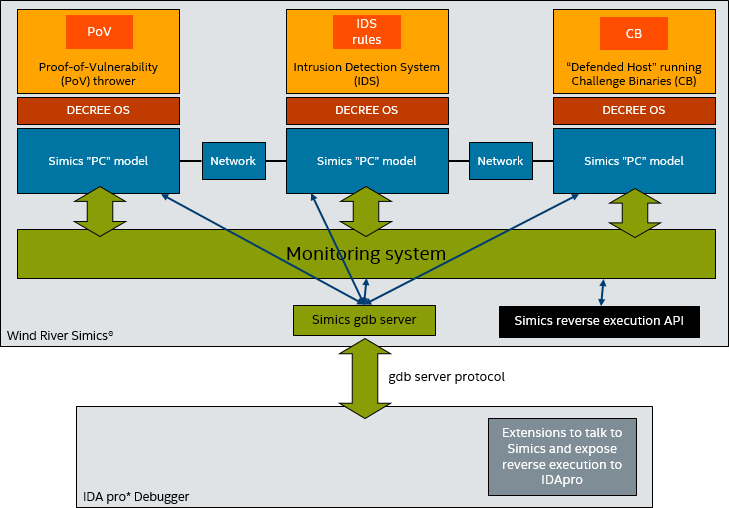

In addition to monitoring system execution, the researchers also connected the HexRays* IDA Pro* Debugger to the Simics virtual platform. IDA is the industry-standard tool for malware analysis, used by many security researchers to reverse-engineer the behavior of unknown binaries. It was connected to Simics using the gdb server protocol, with a twist, as illustrated in Figure 2, below.

Figure 2: Connecting IDA Pro to Simics

In addition to the standard gdb debugger commands that control the execution of the target system, set breakpoints, and read memory, the researchers tunneled commands from IDA Pro to their monitoring system using gdb MONITOR commands. Together with scripts running in IDA Pro, this made it possible to add features specific to the CGC vetting system.
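On the wire, a gdb `monitor` command travels through the documented gdb remote serial protocol as a `qRcmd` packet whose argument is the command text, hex-encoded, framed as `$data#checksum`. The Python sketch below builds such packets and decodes `O` console-output replies. The helper names are hypothetical, and the example command `help` is only an illustration; the CGC monitor's actual command vocabulary is not shown here.

```python
def rsp_frame(data: str) -> str:
    """Wrap packet data as $data#cs, where cs is the byte sum modulo 256 in hex."""
    checksum = sum(data.encode("ascii")) % 256
    return f"${data}#{checksum:02x}"


def monitor_packet(command: str) -> str:
    """Build the packet gdb sends for 'monitor <command>'."""
    return rsp_frame("qRcmd," + command.encode("ascii").hex())


def decode_console_output(packet_data: str) -> str:
    """Decode an 'O<hex>' console-output packet sent back by the stub."""
    if not packet_data.startswith("O"):
        raise ValueError("not a console output packet")
    return bytes.fromhex(packet_data[1:]).decode("ascii")
```

For instance, `monitor_packet("help")` yields `$qRcmd,68656c70#fc`. The stub may answer with zero or more `O` console-output packets, followed by a final reply such as `OK` (framed as `$OK#9a`). This is the channel that lets IDA-side scripts reach a custom monitor without changing the debugger itself.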

For example, the researchers enabled IDA Pro to support reverse debugging, even though IDA Pro has no native notion of reverse execution. They used IDA Pro scripts to add commands that mirrored standard reverse execution commands (reverse step, reverse until time, etc.). These scripts sent MONITOR commands to Simics, where the CGC monitor received them and controlled the execution of Simics. Importantly, this implementation was connected to the OS awareness for DECREE, so it supported debugging within the context of a single DECREE process (just like the built-in Simics debugger does with its OS awareness).
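The article does not describe how reverse execution is implemented internally. A common technique for deterministic simulators is snapshot-and-replay: save the state periodically, and to go backwards, restore the latest snapshot before the target point and re-execute forward to it. The Python toy below illustrates that technique behind a command like "reverse step". `ToyMachine` and `ReversibleRun` are hypothetical, and this is not a description of Simics internals.

```python
import copy
from typing import Dict


class ToyMachine:
    """Deterministic toy target: state is a program counter and an accumulator."""

    def __init__(self) -> None:
        self.state: Dict[str, int] = {"pc": 0, "acc": 0}
        self.steps = 0

    def step(self) -> None:
        self.state["acc"] = (self.state["acc"] * 3 + self.state["pc"]) % 1000
        self.state["pc"] += 1
        self.steps += 1


class ReversibleRun:
    """Adds run-to-step and reverse-step; assumes the machine starts at step 0."""

    def __init__(self, machine: ToyMachine, interval: int = 10) -> None:
        self.machine = machine
        self.interval = interval
        # Snapshots keyed by step count; step 0 is always available.
        self.snapshots: Dict[int, Dict[str, int]] = {0: copy.deepcopy(machine.state)}

    def step(self) -> None:
        self.machine.step()
        if self.machine.steps % self.interval == 0:
            self.snapshots[self.machine.steps] = copy.deepcopy(self.machine.state)

    def run_to(self, target: int) -> None:
        """Move to an absolute step count, forward or backward."""
        if target < 0:
            raise ValueError("cannot run to before the first step")
        if target < self.machine.steps:
            # Going backwards: restore the latest snapshot at or before target...
            base = max(s for s in self.snapshots if s <= target)
            self.machine.state = copy.deepcopy(self.snapshots[base])
            self.machine.steps = base
        # ...then replay forward; determinism reproduces the same states.
        while self.machine.steps < target:
            self.step()

    def reverse_step(self, count: int = 1) -> None:
        self.run_to(self.machine.steps - count)
```

The snapshot interval trades memory for replay time: denser snapshots make reverse commands faster at the cost of storing more state.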

Scaling Up

The Cyber Grand Challenge evaluation covered many thousands of Simics runs. To handle that efficiently, DARPA created an automated Simics execution system that allowed hundreds of simultaneous simulations to run. Thanks to Simics' hypersimulation capabilities and the mostly idle targets, it was possible to run six Simics processes simultaneously on a quad-core (eight-thread) server. The scheduling and execution of jobs were automated using Apache ZooKeeper*.
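The per-server concurrency cap can be illustrated with a local stand-in. The sketch below does not use ZooKeeper. It swaps in a Python `ThreadPoolExecutor` to keep at most six simulations running at once on one server. `Server` and `_run_simulation` are hypothetical; a real job would launch a simulator process and collect the monitor's verdict.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

MAX_SIMS_PER_SERVER = 6  # figure reported for a quad-core, eight-thread server


class Server:
    """Runs vetting jobs on one host, never exceeding its simulation slots."""

    def __init__(self, slots: int = MAX_SIMS_PER_SERVER) -> None:
        self.slots = slots
        self._lock = threading.Lock()
        self._running = 0
        self.peak = 0  # highest number of simultaneous runs observed

    def _run_simulation(self, job_id: str) -> str:
        """HYPOTHETICAL placeholder for launching one simulator process."""
        with self._lock:
            self._running += 1
            self.peak = max(self.peak, self._running)
        try:
            time.sleep(0.01)  # stand-in for a (mostly idle) simulated run
            return "clean"    # a real run would report the monitor's verdict
        finally:
            with self._lock:
                self._running -= 1

    def vet_all(self, job_ids: List[str]) -> Dict[str, str]:
        """Run every job, with at most `slots` in flight at once."""
        with ThreadPoolExecutor(max_workers=self.slots) as pool:
            return dict(zip(job_ids, pool.map(self._run_simulation, job_ids)))
```

A cluster-wide coordinator such as ZooKeeper would then only have to hand each server work; the local cap keeps each host at the ratio that hypersimulation makes affordable.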

Beyond Hardware Replacement

The DARPA CGC vetting system represents an impressive application that leveraged Simics features, capabilities, and programmability to create a customized solution to a full-system problem. The monitoring system and debug connection serve as good examples of what can be achieved with a virtual platform when it is used for more than hardware replacement. Virtual platforms offer unique abilities for instrumenting and analyzing the execution of a system.

Related Content

Cyber Grand Challenge (CGC) Monitor - A Vetting System for the DARPA Cyber Grand Challenge by Michael F. Thompson and Timothy Vidas, presented at the 2018 Digital Forensic Research Workshop (DFRWS), describing DARPA’s use of Simics software for the CGC.

Presentation slides by Michael F. Thompson and Timothy Vidas summarizing their DFRWS paper.

Analysis of a DARPA Cyber Grand Challenge Proof of Vulnerability is a short video about the IDA Pro integration.

cgc-monitor is the code on GitHub for the Cyber Grand Challenge forensics platform and automated analysis tools based on Simics software.

Author

Dr. Jakob Engblom is a product management engineer for the Simics virtual platform tool, and an Intel® Software Evangelist. He got his first computer in 1983, and has been programming ever since. Professionally, his main focus has been simulation and programming tools for the past two decades. He looks at how simulation in all forms can be used to improve software and system development, from the smallest IoT nodes to the biggest servers, across the hardware-software stack from firmware up to application programs, and across the product life cycle from architecture and pre-silicon to the maintenance of shipping legacy systems. His professional interests include simulation technology, debugging, multicore and parallel systems, cybersecurity, domain-specific modeling, programming tools, computer architecture, and software testing. Jakob has more than 100 published articles and papers, and is a regular speaker at industry and academic conferences. He holds a PhD in Computer Systems from Uppsala University, Sweden.