This is the final article in the series about how memory management works in the Data Plane Development Kit (DPDK). While previous articles have concentrated on outlining general concepts behind memory management in DPDK, giving an in-depth overview of various input-output virtual address (IOVA) related options, and describing memory related features available in DPDK 17.11, this article covers the latest and greatest in all things about DPDK memory management.

DPDK version 18.11 is a long-term support release version, and its memory management features are a culmination of work that was done during the 18.05 and 18.08 release cycles on reworking the DPDK memory subsystem. Compared to DPDK 17.11, the user facing API is (almost) unchanged, but there are some pretty fundamental changes under the hood, as well as many new and useful features and APIs that were simply not possible to achieve before.

Dynamic Memory Management

The biggest change in DPDK 18.11 is that memory usage can now grow and shrink at run time; the DPDK memory map is no longer static. This brings a number of usability improvements.

In DPDK 17.11, running DPDK without any Environment Abstraction Layer (EAL) arguments would have reserved all available huge page memory for DPDK’s use, leaving no memory for other applications or other DPDK instances. With DPDK 18.11 that is no longer the case; instead, DPDK starts with zero memory usage, and reserves memory as needed. DPDK is now much better behaved in that sense, and there is less effort involved in making DPDK play nice with other applications.

Similarly, there is no longer any need to know the application’s memory requirements in advance. Because the memory map can grow and shrink, the DPDK memory subsystem increases its memory usage as needed and returns memory to the system when it is no longer required. This means there is less effort involved in deploying DPDK applications, as DPDK now manages its memory requirements by itself.

DPDK 18.11 and IOVA Contiguousness

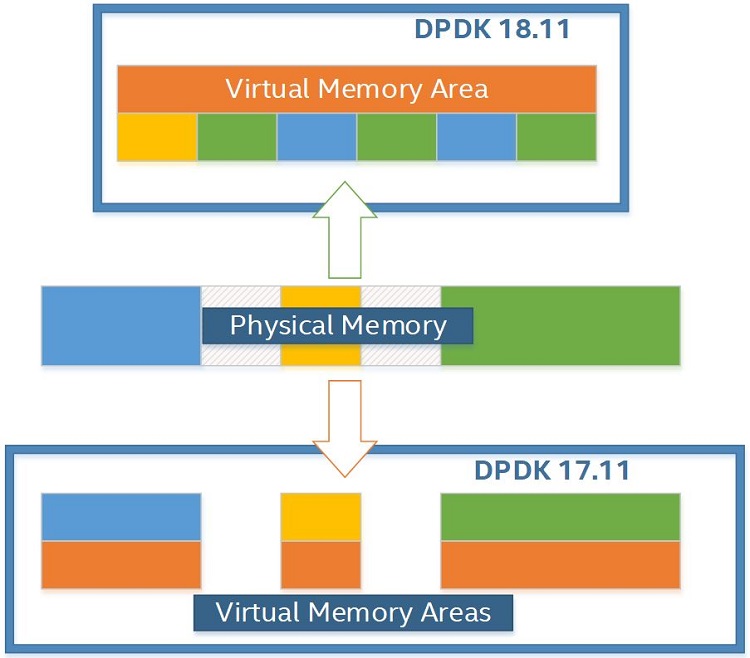

A fundamental under-the-hood change in DPDK 18.11 is that virtual address (VA) contiguous memory is not guaranteed to be IOVA-contiguous; there is no longer any correlation between the two. In IOVA as VA mode, the IOVA layout still follows the VA layout as before, but in IOVA as physical addresses (PA) mode, the PA layout is arbitrary (it is not uncommon for physical pages to be mapped in reverse order).

Figure 1. IOVA layout comparison between DPDK versions.

This is not as disruptive a change as it may seem, because not many data structures actually require IOVA-contiguous memory; all of the software data structures (rings, memory pools, hash tables, and so on) only require VA-contiguous memory and couldn’t care less about the underlying physical memory layout. As a result, most user applications that worked on earlier versions of DPDK can seamlessly transition to the new version with no changes in code.

Still, some data structures do in fact require IOVA-contiguous memory (for example, hardware queue structures), and for those cases, a new allocator flag, RTE_MEMZONE_IOVA_CONTIG, was introduced. Using this flag, it is possible to make the memzone allocator attempt to allocate memory that is IOVA-contiguous.
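To make the distinction concrete, here is a minimal sketch of what "IOVA-contiguous" means for a region that is already VA-contiguous. It is a toy model, not DPDK code: the function name and the idea of passing in an array of per-page IOVAs are assumptions made for illustration. In DPDK itself, the request is expressed by passing the RTE_MEMZONE_IOVA_CONTIG flag to rte_memzone_reserve().

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Toy model, not DPDK code: iova[i] is the IOVA of the i-th page of a
 * VA-contiguous region. Returns 1 if the pages also sit back to back in
 * IOVA space, 0 otherwise.
 */
int is_iova_contiguous(const uint64_t *iova, size_t n_pages, uint64_t page_sz)
{
    assert(page_sz > 0);
    for (size_t i = 1; i < n_pages; i++) {
        /* each page must start exactly where the previous one ended */
        if (iova[i] != iova[i - 1] + page_sz)
            return 0;
    }
    return 1;
}
```

With 2 MB pages, the IOVAs {0x200000, 0x400000, 0x600000} pass the check, while the same pages mapped in reverse order do not, even though the virtual addresses are contiguous in both cases.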

Legacy Mode

Previous versions of DPDK (17.11 or earlier) reserved large amounts of IOVA-contiguous memory by sorting all huge pages by their physical memory addresses at initialization. In 18.11, this is no longer the case because the memory is reserved and released at run time on a page-by-page granularity. This means that, unless DPDK runs in IOVA as VA mode, there will likely be no large chunks of IOVA-contiguous memory available. This is usually not an issue, as even the biggest IOVA-contiguous zones required (by drivers, for example) will not exceed the size of a huge page.

Still, even though a great majority of applications based on earlier versions of DPDK will work fine with the new DPDK version, some use cases will require large amounts of IOVA-contiguous memory and using IOVA as VA mode may not be an option for one reason or another. Since DPDK no longer sorts its memory by physical addresses, it is impossible for DPDK 18.11 running in IOVA as PA mode to reserve large amounts of IOVA-contiguous memory.

To address those rare use cases, DPDK 18.11 provides a legacy mode, enabled with the --legacy-mem EAL command line parameter. The legacy mode closely emulates how earlier DPDK versions worked: it reserves and sorts memory at initialization and keeps the memory map static. The trade-off is that this also brings back all the old limitations, such as not being able to reserve or release memory at run time, and a dependency on the underlying physical memory layout.
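The sort-at-initialization idea can be sketched in a few lines. This is a toy model under stated assumptions, not the actual EAL code: the function names are hypothetical, and the pages are represented simply as an array of physical addresses.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* qsort comparator: ascending physical address. */
static int cmp_pa(const void *a, const void *b)
{
    uint64_t x = *(const uint64_t *)a;
    uint64_t y = *(const uint64_t *)b;
    return (x > y) - (x < y);
}

/*
 * Toy model of init-time sorting (not DPDK code): sorts pa[] in place and
 * returns the number of pages in the longest physically contiguous run.
 */
size_t longest_contig_run(uint64_t *pa, size_t n_pages, uint64_t page_sz)
{
    size_t best = 0, cur = 0;

    assert(page_sz > 0);
    qsort(pa, n_pages, sizeof(pa[0]), cmp_pa);
    for (size_t i = 0; i < n_pages; i++) {
        if (i > 0 && pa[i] == pa[i - 1] + page_sz)
            cur++;      /* extends the current run */
        else
            cur = 1;    /* a gap starts a new run */
        if (cur > best)
            best = cur;
    }
    return best;
}
```

The sketch also shows where the dependency on physical layout comes from: the size of the largest contiguous chunk is determined entirely by which physical pages the kernel happened to hand out.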

New EAL Features and Command Line Flags

In DPDK 18.11, some EAL parameters have changed their meaning, and some new ones were added as well, either to add new functionality or to replace old parameters. They are of course not mandatory to use, and DPDK 18.11 in its default configuration is a much better citizen than previous DPDK versions were. However, knowing these new features can be useful in certain scenarios.

Note: none of the new features work in legacy mode.

Controlling DPDK Memory Use

The memory reservation related parameters -m and --socket-mem have been changed to indicate the minimum amount of memory DPDK will use. For example, initializing DPDK with --socket-mem 1024,1024 reserves 1 gigabyte (GB) on each of non-uniform memory access (NUMA) nodes 0 and 1, and this memory will never be released for the duration of the application’s lifetime. However, more memory can and will be added if it is needed.

So, with DPDK 17.11 and earlier, it was necessary to run DPDK with either -m or --socket-mem flags, but now they are optional and are used only in cases where the application needs a guaranteed minimum of reserved memory to be available at all times.

It is also possible to limit DPDK’s memory use per NUMA node by using the --socket-limit EAL command line parameter. It works similarly to the --socket-mem parameter, in that it accepts a per NUMA node list of values, and it sets an upper limit on how much memory DPDK is allowed to reserve from the system.

Figure 2. Explaining --socket-mem and --socket-limit.

For example, if a use case was to constrain DPDK’s memory usage between 256 megabytes (MB) and 1 GB, the following EAL parameters could be used:

--socket-mem 256,256 --socket-limit 1024,1024

On a two socket system, this reserves 256 MB per NUMA node, and also limits memory use to 1 GB per NUMA node. This way, DPDK’s memory use starts at 256 MB per NUMA node, and grows and shrinks as needed, but it will never go below 256 MB or above 1 GB.
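The floor-and-ceiling behavior can be modeled with a small accounting structure. The sketch below is a toy, not DPDK code; the struct and function names are hypothetical, values are in MB, and a limit of 0 is treated as "no limit" in this model.

```c
#include <assert.h>
#include <stddef.h>

/* Toy per-NUMA-node accounting, in MB. Not a DPDK structure. */
struct node_mem {
    size_t min_mb;   /* floor, as set by --socket-mem */
    size_t limit_mb; /* ceiling, as set by --socket-limit (0 = no limit) */
    size_t used_mb;  /* currently reserved */
};

void node_init(struct node_mem *n, size_t min_mb, size_t limit_mb)
{
    assert(limit_mb == 0 || min_mb <= limit_mb);
    n->min_mb = min_mb;
    n->limit_mb = limit_mb;
    n->used_mb = min_mb; /* the minimum is reserved up front */
}

/* Returns 0 on success, -1 if growing would exceed the limit. */
int node_grow(struct node_mem *n, size_t mb)
{
    if (n->limit_mb != 0 && n->used_mb + mb > n->limit_mb)
        return -1;
    n->used_mb += mb;
    return 0;
}

/* Releases up to mb, but never drops below the minimum. Returns MB freed. */
size_t node_shrink(struct node_mem *n, size_t mb)
{
    size_t releasable = n->used_mb - n->min_mb;
    size_t freed = mb < releasable ? mb : releasable;

    n->used_mb -= freed;
    return freed;
}
```

Initializing a node with a minimum of 256 and a limit of 1024 starts it at 256 MB; growth requests succeed until the total would pass 1024 MB, and shrink requests stop at 256 MB no matter how much is asked for.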

Single File Segments

Older versions of DPDK stored one file per huge page in the hugetlbfs filesystem. This works for the majority of use cases, but it sometimes presents a problem. In particular, Virtio with a vhost-user backend will share files with the backend, and there is a hard limit on the number of file descriptors that can be shared. When using large page sizes (for example, 1 GB pages), this can work reasonably well, but with smaller page sizes, the number of files quickly goes over the file descriptor limit.

To address this problem, a new mode was introduced in version 18.11: the single file segments mode, enabled via the --single-file-segments EAL command-line flag. In this mode, EAL creates fewer files in hugetlbfs, which enables Virtio with a vhost-user backend to work even with the smallest page sizes.
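A quick calculation shows how fast the one-file-per-page scheme runs into a descriptor limit. The helpers below are a hypothetical sketch, not DPDK code, and the limit is deliberately a parameter because the article does not state its value.

```c
#include <assert.h>
#include <stdint.h>

/* Files needed when every huge page is backed by its own hugetlbfs file. */
uint64_t files_per_page_mode(uint64_t mem_bytes, uint64_t page_sz)
{
    assert(page_sz > 0);
    return (mem_bytes + page_sz - 1) / page_sz; /* round up to whole pages */
}

/* Returns 1 if the file count fits under the given descriptor limit. */
int fits_fd_limit(uint64_t n_files, uint64_t fd_limit)
{
    return n_files <= fd_limit;
}
```

For 1 GB of memory, 1 GB pages need a single file, while 2 MB pages need 512. Against an illustrative limit of 8 shared descriptors (a value chosen for the example only), the first case fits and the second does not.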

In-Memory Mode

In DPDK 17.11, there was a --huge-unlink option that removed huge page files from the hugetlbfs filesystem right after creating and mapping them. This option still works in DPDK 18.11, but there is a new EAL command line parameter, --in-memory, that activates the so-called in-memory mode, which is recommended for use instead of --huge-unlink.

In this mode, DPDK will not create files on any filesystem (hugetlbfs or otherwise). Instead of creating and then deleting the files (and thus still needing the hugetlbfs filesystem), DPDK avoids creating any files in the first place. In fact, hugetlbfs mount points are not even required in this mode, so using this mode makes DPDK even easier to set up to work in environments where hugetlbfs mount points are not commonplace (such as cloud-native scenarios).

Moreover, unlike --huge-unlink, which only addresses the huge page files and will not prevent any other files from being created by EAL, --in-memory mode also covers other files created by EAL. This in effect allows DPDK to run off a read-only filesystem, so that it will not require write access to the system at all.

Since this option is a superset of the --huge-unlink option, the same limitation regarding inability to use secondary processes applies here as well.

New APIs in DPDK 18.11

As well as providing new features as EAL command-line parameters, DPDK 18.11 also adds a host of new APIs to better make use of the new features that were added in this release.

Memory-Related Callbacks

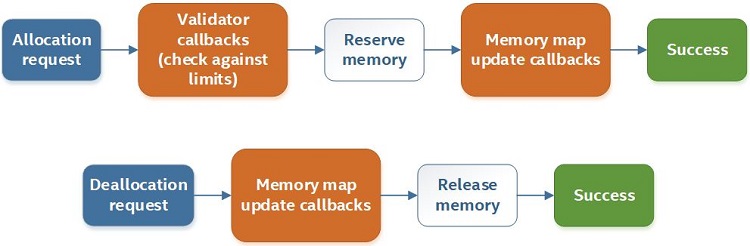

Previously, the DPDK memory map was static, so any facility that relied on its knowledge of how DPDK’s memory map is structured could scan the memory map only once and be confident that its knowledge was always up to date. Starting with DPDK 18.05, this is no longer the case: Memory layout can change at any time, so such facilities have to follow the memory map updates.

To make it easy to follow memory map updates, DPDK 18.11 adds a new API to register and listen for memory map updates via callbacks. Those are triggered each time a new segment is reserved, as well as each time a segment is about to be removed. This allows things like the VFIO subsystem to seamlessly follow memory map updates without any user interaction.
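The general shape of such a listener can be sketched without DPDK. Everything below is a toy model with hypothetical names; in DPDK the corresponding registration call is rte_mem_event_callback_register(), and the events are RTE_MEM_EVENT_ALLOC and RTE_MEM_EVENT_FREE. The example listener simply tracks how many bytes are currently mapped, which is roughly the bookkeeping a DMA-mapping layer would need.

```c
#include <assert.h>
#include <stddef.h>

enum mem_event { MEM_EVENT_ALLOC, MEM_EVENT_FREE };

typedef void (*mem_event_cb)(enum mem_event ev, const void *addr,
                             size_t len, void *arg);

#define MAX_CBS 4
static struct { mem_event_cb fn; void *arg; } cbs[MAX_CBS];
static int n_cbs;

/* Registers a listener. Returns 0 on success, -1 on error. */
int mem_event_register(mem_event_cb fn, void *arg)
{
    if (fn == NULL || n_cbs == MAX_CBS)
        return -1;
    cbs[n_cbs].fn = fn;
    cbs[n_cbs].arg = arg;
    n_cbs++;
    return 0;
}

/* Called by the toy "allocator" whenever the memory map changes. */
void mem_event_notify(enum mem_event ev, const void *addr, size_t len)
{
    for (int i = 0; i < n_cbs; i++)
        cbs[i].fn(ev, addr, len, cbs[i].arg);
}

/* Example listener: keeps a running total of bytes currently mapped. */
void track_mapped(enum mem_event ev, const void *addr, size_t len, void *arg)
{
    size_t *mapped = arg;

    (void)addr; /* a real listener would map or unmap this range */
    if (ev == MEM_EVENT_ALLOC) {
        *mapped += len;
    } else {
        assert(len <= *mapped);
        *mapped -= len;
    }
}
```

A component registers track_mapped once with a pointer to its counter, after which every notification from the allocator keeps the counter in step with the memory map.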

In addition to that, another set of callbacks is provided to offer some control over allocations. Allocation limits set up by the --socket-limit EAL parameter are static and unconditional, but there is also an API to register for callbacks to be triggered once allocation crosses some (per NUMA node) threshold and give the user a chance to deny allocation. This would, for example, allow setting a soft limit on allocations, where a couple of hundred megabytes above the limit are accepted, but a whole gigabyte is denied.

Figure 3. Memory-related callbacks flow.
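The soft-limit policy described above could look roughly like the following. This is a self-contained sketch, not DPDK code: the function name and the 256 MB slack are hypothetical, and the parameter meanings (the registered threshold and the total the node would reach) are assumptions of this model. In DPDK, a validator of a similar shape is registered with rte_mem_alloc_validator_register().

```c
#include <assert.h>
#include <stddef.h>

#define MB ((size_t)1 << 20)

/* Hypothetical slack allowed above the registered threshold. */
#define SOFT_SLACK (256 * MB)

/*
 * Toy validator: cur_limit is the threshold that was registered, new_len is
 * the total the node would reach if the allocation went ahead.
 * Returns 0 to allow the allocation, -1 to deny it.
 */
int soft_limit_validator(int socket_id, size_t cur_limit, size_t new_len)
{
    (void)socket_id; /* same policy on every node in this sketch */
    assert(new_len >= cur_limit); /* only consulted at or past the threshold */
    if (new_len - cur_limit <= SOFT_SLACK)
        return 0;
    return -1;
}
```

With a 1 GB threshold, an allocation that would bring the node to 1 GB plus 200 MB is allowed, while one that would bring it to 2 GB is denied.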

Convenience Functions

Previously, iterating over the internal memory map and finding segment or page addresses was a manual job, and the same iteration code was copied over and over wherever it was needed. Now that the internals of the DPDK memory map are much more complex, such an approach is no longer viable (if it ever was). To address this, the DPDK 18.11 API also brings many convenience functions that provide a unified way of doing those little but useful things.

For example, functions are now available to iterate over internal DPDK page tables in various ways. There are also functions to map a virtual address to an individual page or a page table, as well as reverse-mapping an IOVA address to a virtual address. Applications are encouraged to use those functions whenever gaining information about DPDK’s internal memory map is desired, as these functions also perform all the necessary internal locking correctly.
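As an illustration of what reverse-mapping involves, here is a toy lookup over a flat segment table. The struct and function names are hypothetical and the table is far simpler than DPDK's internal page tables; the real counterparts are functions such as rte_mem_iova2virt(), rte_mem_virt2memseg(), and rte_memseg_walk(), which also take care of locking.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Toy segment descriptor; DPDK's real structures are richer. */
struct seg {
    uintptr_t va;  /* start virtual address */
    uint64_t iova; /* start IOVA */
    size_t len;    /* length in bytes */
};

/*
 * Reverse-maps an IOVA to a virtual address by walking the segment table.
 * Returns 0 if no segment contains the IOVA.
 */
uintptr_t toy_iova2virt(const struct seg *segs, size_t n_segs, uint64_t iova)
{
    assert(segs != NULL || n_segs == 0);
    for (size_t i = 0; i < n_segs; i++) {
        const struct seg *s = &segs[i];

        /* the offset within the segment is the same in both address spaces */
        if (iova >= s->iova && iova - s->iova < s->len)
            return s->va + (uintptr_t)(iova - s->iova);
    }
    return 0;
}
```

Note that the two segments in a table can be adjacent in VA space while sitting at unrelated IOVAs, which is exactly why a lookup is needed rather than a fixed offset.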

Multiprocessing Considerations

DPDK 18.11’s memory map is dynamically changing, and that has implications on how primary and secondary processes work. The primary process handles all of the allocation/deallocation and synchronization, and secondary processes only follow memory map updates that happen in the primary process. Hence, if the primary process stops executing, the memory map becomes static.

There are also limitations on when certain functions (memory related callbacks, allocation functions, and so on) can be used. These are well documented in the DPDK API documentation, as well as in the DPDK Programmer’s Guide, so we recommend getting familiar with these limitations before attempting to use the new features.

Further Improvements to Virtio with Vhost-User Backend

Some use cases (for example, Virtio with vhost-user backend) require access to file descriptors corresponding to each huge page memory segment. In DPDK 18.11, these file descriptors are now stored internally, and are exposed via an API. This improves reliability for Virtio with vhost-user backend, and makes it possible to use Virtio with vhost-user backend in in-memory mode (that is, without any hugetlbfs mount points). Additionally, in DPDK 19.02 and later versions, these file descriptors are also available in no-huge mode, which allows Virtio with vhost-user backend to be used without huge pages at all, as long as IOVA as VA mode is used and the kernel is recent enough.

External Memory

Another new feature in DPDK 18.11 is support for externally allocated memory. This is a new set of APIs designed to allow using memory that is not meant to be reserved by DPDK, but still use DPDK’s memory management facilities (such as allocating a memory pool over that memory). These APIs allow use of any kind of memory (such as memory mapped storage) in DPDK natively.

In addition, in version 19.02, another set of external memory related APIs was added. These APIs allow registering a chunk of external memory with DPDK but avoid using built-in allocator facilities with it. These APIs allow for use cases where said external memory is fully managed outside of DPDK, but access to information about this memory is needed by DPDK’s internal structures (for example, drivers).

Conclusion

Over the course of the four articles in this series, we looked at why DPDK’s memory subsystem works the way it does, provided an in-depth explanation of how DPDK handles physical addressing, and traced its evolution from being high performance but somewhat static and rigid in version 17.11, toward being much more dynamic and feature rich in version 18.11, while retaining the high performance that made DPDK popular.

More in-depth information can be found in DPDK documentation and code. Everyone is also invited to join our vibrant and welcoming community, and participate in discussions, code reviews, conferences, and industry events. More information on community and development processes is available at the DPDK community website.

Helpful Links

DPDK documentation for current release

DPDK API documentation for current release

EAL section of the Programmer’s Guide for current DPDK release

About the Author

Anatoly Burakov is a software engineer at Intel. He is the current maintainer of VFIO and memory subsystems in DPDK.