By Christian Mills, with Introduction by Peter Cross

Introduction

When CEO Pat Gelsinger returned to Intel in 2021, he promised to ‘bring back the geek’. For the developer community, we took that to heart and doubled down on our commitment to provide game developers with the tools and the training to help demystify complex graphics technology.

As part of that commitment, we are creating practical, world-class training and tutorials, in some cases in partnership with influential developers, so that areas like AI and machine learning are no longer a scary place where only AAA studios dare to tread.

For this series, we feel privileged that Graphics Innovator Christian Mills allowed us to repurpose much of his training material on machine learning and style transfer and share it with the game developer community. The plan is for the first few parts in the series to focus on foundational areas of machine learning training and general style transfer techniques. After that, we hope to work with Christian on series updates that dive deeper into areas like GameObject-specific style transfer targeting, GPU targeting (expansion of Intel® Deep Link technology), and usage of the Intel® Distribution of OpenVINO™ toolkit for realizing bigger gains in in-game inferencing performance.

Overview

This tutorial series covers how to train your own style transfer model and implement it in Unity using the Barracuda* library. We will use the PyTorch* library to build and train the model. You will not need to set up PyTorch on your local machine to follow along. Instead, we will use the free Google Colab* service to train the model in a web browser. This does require you to have a Google account. You will also need some free space on Google Drive, as we will save our model’s progress there.

In this first part, we will download our Unity project and install the Barracuda library. We will also create a folder for our project in Google Drive. This is where we will store our style images, test images and model checkpoints.

Note: Most of the code from this tutorial is included in the GitHub repository linked at the bottom of this article, in case you want to skip certain sections.

Select a Unity Project & Sample Image

We will use the Kinematica_Demo project provided by Unity for this tutorial.

For the sample image serving as our style transfer base, just for fun, let’s use imagery tweeted earlier this year by Raja Koduri, the general manager of Architecture, Graphics, and Software at Intel. This will give us an interesting style transfer in-game effect.

The video linked below shows examples of various style formats applied to the Unity demo.

Our tutorial sample style (from Raja's tweet) is the last one depicted in the video.

Download Kinematica Demo

You can download the Kinematica project by clicking on the link below. The zipped folder is approximately 1.2 GB.

- Kinematica_Demo_0.8.0-preview: (download)

Add Project to Unity Hub

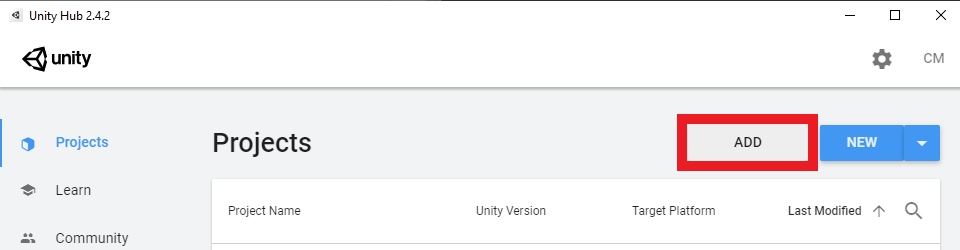

Once downloaded, unzip the folder and add the project to Unity Hub by clicking the Add button.

Set the Unity Version

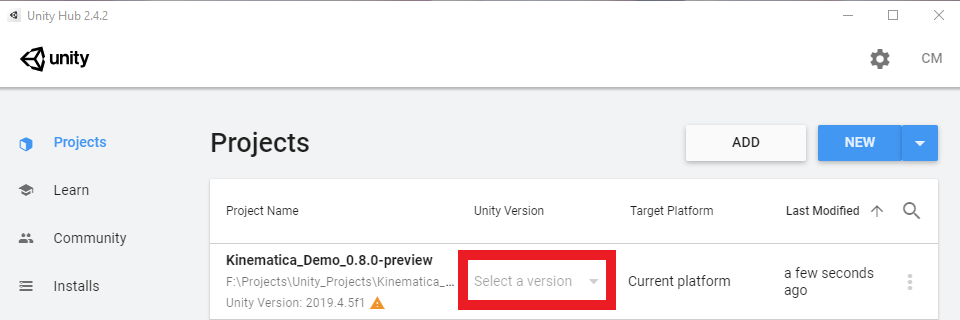

Select a Unity version from the drop-down menu. The demo project was made using Unity 2019.4.5f1. You can use a later 2019.4 release if you don’t have that version installed. The project also works in the latest LTS version of Unity 2020.X.

- Unity 2019.4.26 (or later): (download from this LTS page)

Open the Project

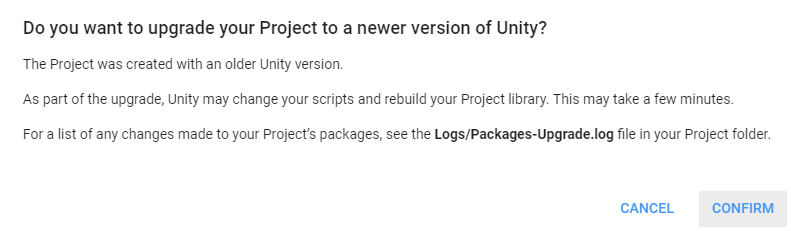

Now open the project. You will be prompted to upgrade the project to the selected Unity version. Click Confirm in the popup to upgrade the project. This project takes a while to load the first time.

Install Barracuda Package

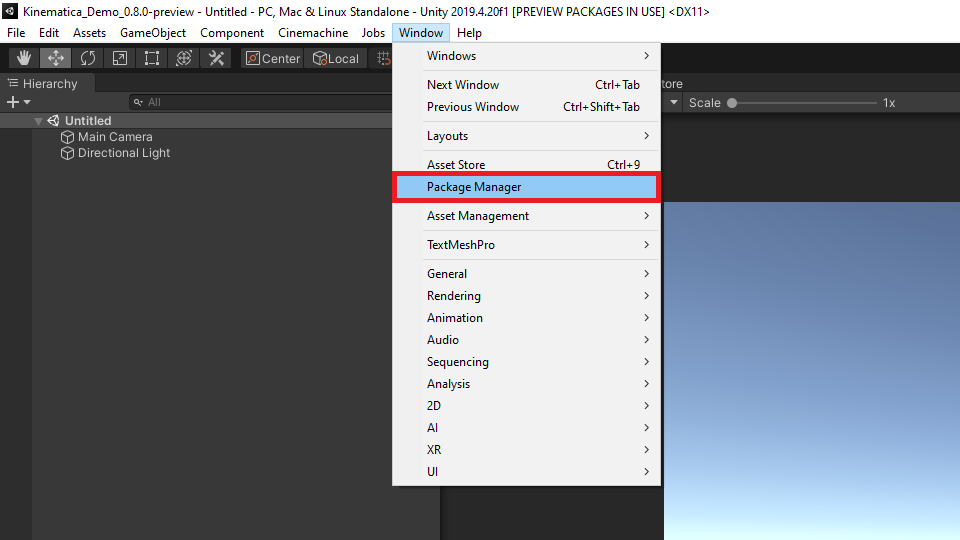

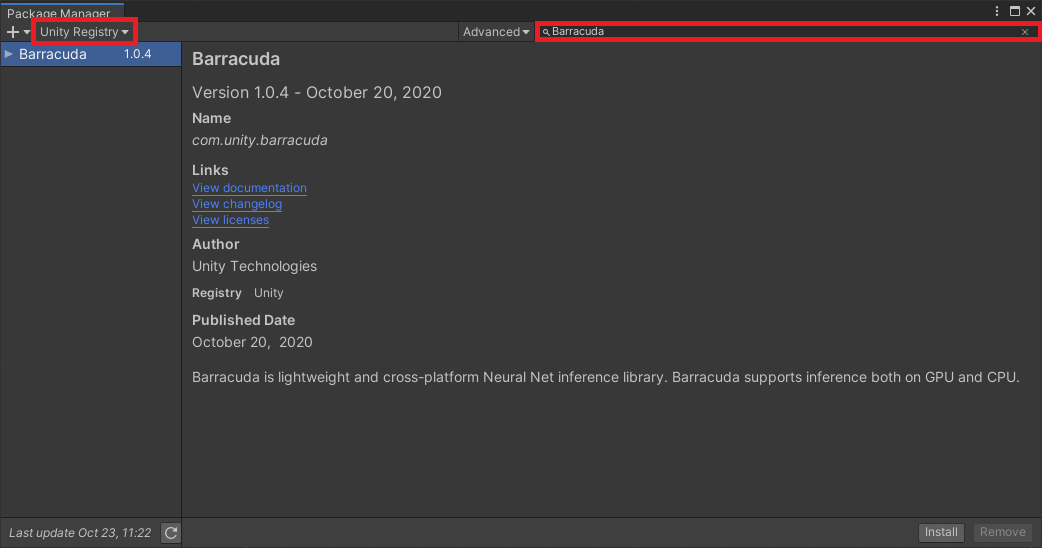

- Install the Barracuda package once the project has finished loading. Open the Package Manager window in the Unity editor.

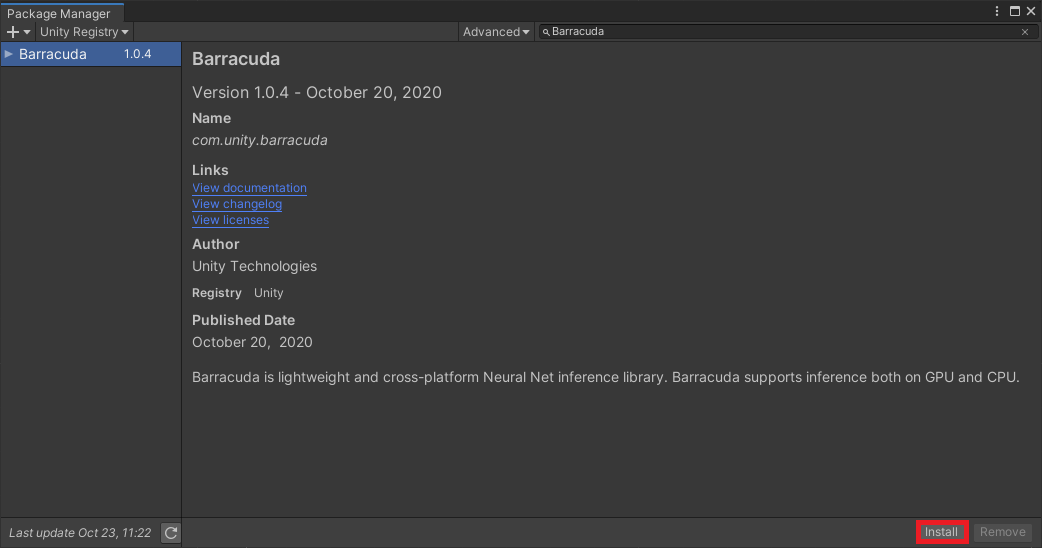

- In the Unity Registry section, type Barracuda into the search box. Use version 1.0.4.

Note: If you are using a newer version of Unity (e.g. Unity 2020.x), the Barracuda package is no longer displayed in the Package Manager due to a policy change. In this case, you can instead search for ML Agents and install that, as it automatically installs Barracuda as a dependency.

- Click the Install button to install the package.
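If the package does not show up in the Package Manager, another route is to list it in the project's `Packages/manifest.json` file. The snippet below is a minimal sketch of that idea rather than an official installation method. The helper name `add_barracuda` is hypothetical, and it assumes the package identifier `com.unity.barracuda` and the version 1.0.4 used in this tutorial.

```python
import json
from pathlib import Path


def add_barracuda(project_root, version="1.0.4"):
    """Add Barracuda to Packages/manifest.json and return the dependencies.

    Assumes the package identifier "com.unity.barracuda" (not stated in
    this article) and the version used in this tutorial.
    """
    manifest_path = Path(project_root) / "Packages" / "manifest.json"
    manifest = json.loads(manifest_path.read_text(encoding="utf-8"))

    # Create the "dependencies" table if the manifest does not have one yet.
    dependencies = manifest.setdefault("dependencies", {})
    dependencies["com.unity.barracuda"] = version

    manifest_path.write_text(json.dumps(manifest, indent=2) + "\n", encoding="utf-8")
    return dependencies
```

Calling `add_barracuda("path/to/Kinematica_Demo")` with the editor closed should leave the rest of the manifest untouched. The path shown is a placeholder for wherever you unzipped the project.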

Create Google Drive Project Folder

Google Colab environments provide the option to mount our Google Drive as a directory. We will use this feature to automatically save the training progress.
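As a rough preview of what mounting looks like in a notebook, here is a minimal sketch. The helper name `drive_root` and the local fallback are our own additions for illustration, and the `/content/drive/MyDrive` path is an assumption about where Colab exposes your Drive contents after mounting.

```python
from pathlib import Path


def drive_root(local_fallback="."):
    """Return the Google Drive root inside Colab, or a local folder elsewhere."""
    try:
        # This module is only importable inside a Colab runtime.
        from google.colab import drive
    except ImportError:
        # Outside Colab: fall back to a local directory (illustrative only).
        return Path(local_fallback)

    # Inside Colab: this asks you to authorize access to your Drive.
    drive.mount("/content/drive")
    # Assumed location of your Drive contents after mounting.
    return Path("/content/drive/MyDrive")
```

Outside Colab the function simply returns the fallback path, which makes it easy to try the rest of your file-handling code on a local machine first.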

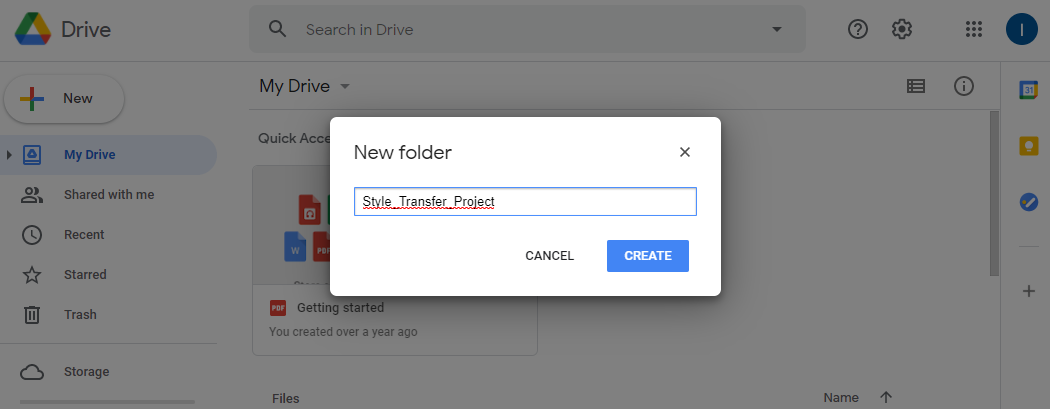

Create a Project Folder

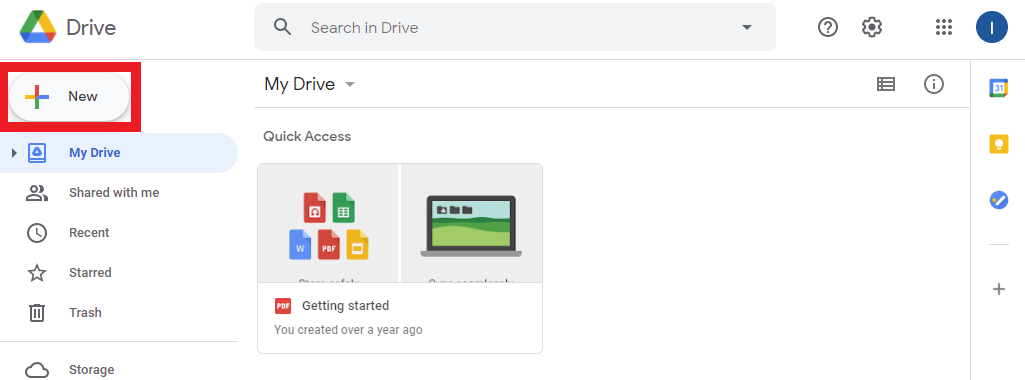

- Make a dedicated project folder to keep things organized. Open up your Google Drive and click the New button.

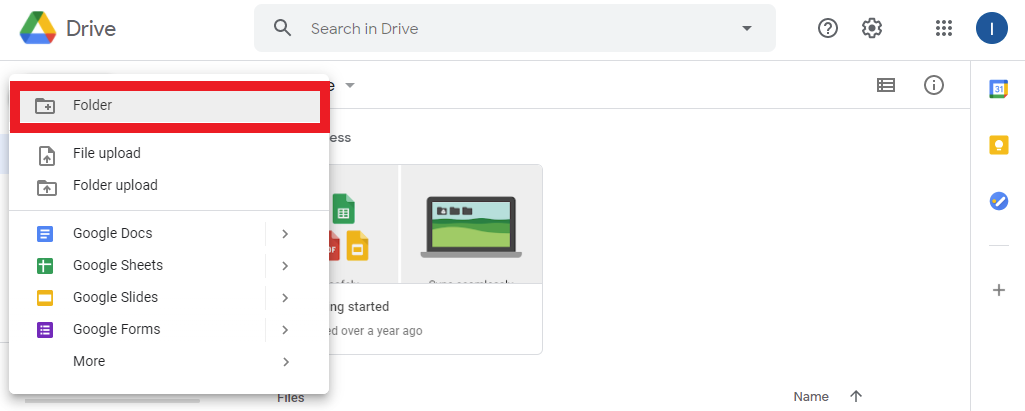

- Select the Folder option.

- Name the folder Style_Transfer_Project.

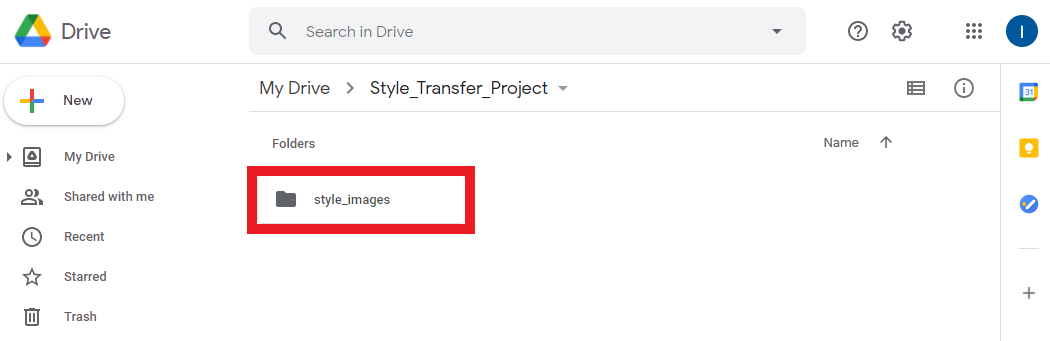

Create Folder for Style Images

- Open the project folder and create a new folder for storing the style images you want to use.

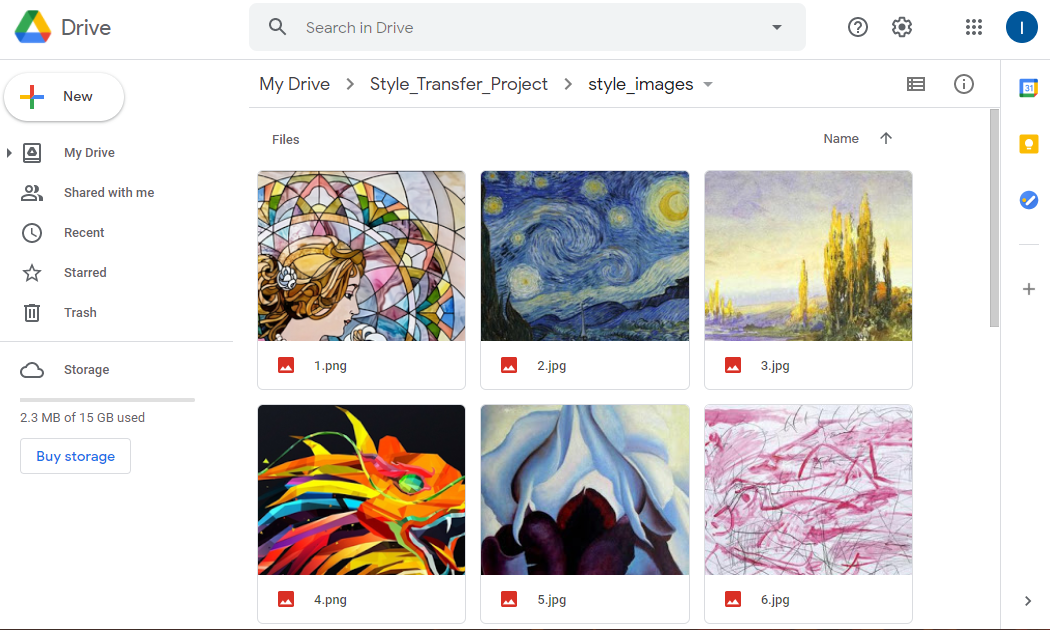

Upload Style Images

You can pick whatever images you want, but some will work better than others.

It is recommended to upload the images in their source resolution. You will have the option to resize them through code when training the model.

For this tutorial, we will use the image from Raja’s mesh shader tweet mentioned earlier.

Note: In general, it is recommended to crop the style images into squares. Not doing so can occasionally result in a border around the edges of the stylized output image. The sample image used for this tutorial has already been cropped.
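If you want to crop your own style images, the arithmetic for a centered square crop is short. The function below is a sketch with a hypothetical name, `center_square_box`. It only computes the crop rectangle and does not load or save any image.

```python
def center_square_box(width, height):
    """Return (left, top, right, bottom) for the largest centered square."""
    side = min(width, height)
    # Split the leftover pixels evenly between the two cropped edges.
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


# A 1920x1080 image keeps its full height and loses 420 px from each side.
print(center_square_box(1920, 1080))  # (420, 0, 1500, 1080)
```

If you use the Pillow library, the returned tuple has the same order that `Image.crop` expects, so `image.crop(center_square_box(*image.size))` would produce the square version.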

Tutorial Style Image: (link)

More General Sample Style Images: (link)

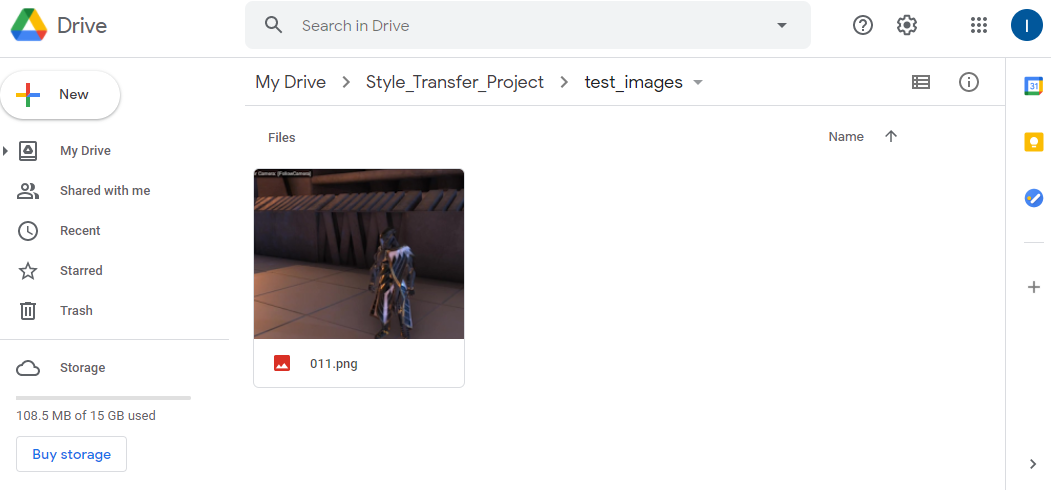

Create Folder for Test Images

We also need a test image to see how well the model is styling images during training. This is especially important as it can take some experimentation to get the model to generate desirable results. It is often clear early in the training session whether the model is learning as intended.

You can use this screenshot from the Kinematica demo for your test image.

- Kinematica Demo Screenshot: (link)

Place your test images in a new folder called test_images.
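Once the folders exist, a quick check like the one below can confirm the layout before training. This is only a sketch: `ensure_project_layout` is a hypothetical helper, `root` stands for whichever directory holds your Drive contents, and `style_images` is an assumed name for the style image folder, since this article does not name it.

```python
from pathlib import Path


def ensure_project_layout(root, style_dir="style_images", test_dir="test_images"):
    """Create any missing project folders and list what each one contains.

    "style_images" is an assumed folder name; change it to match the
    folder you created for your style images.
    """
    project = Path(root) / "Style_Transfer_Project"
    folders = [project / style_dir, project / test_dir]

    for folder in folders:
        # exist_ok=True leaves folders made through the Drive UI untouched.
        folder.mkdir(parents=True, exist_ok=True)

    # Map each folder name to the sorted file names found inside it.
    return {folder.name: sorted(p.name for p in folder.iterdir()) for folder in folders}
```

An empty list for either folder is a hint that the style or test images have not been uploaded yet.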

Conclusion-Part 1

That takes care of the required setup. In the next part, we will cover the optional step of recording in-game footage to add to your training dataset. This can help the model better adapt to the game’s specific environment. You can also skip ahead to Part 2, where we will train our style transfer model in Google Colab*.

Next Tutorial Sections:

Part 1.5 (Optional)

Part 2

Part 3

Related Links:

Targeted GameObject Style Transfer Tutorial in Unity

Developing OpenVINO Style Transfer Inferencing Plug-in Tutorial for Unity

Project Resources:

GitHub Repository